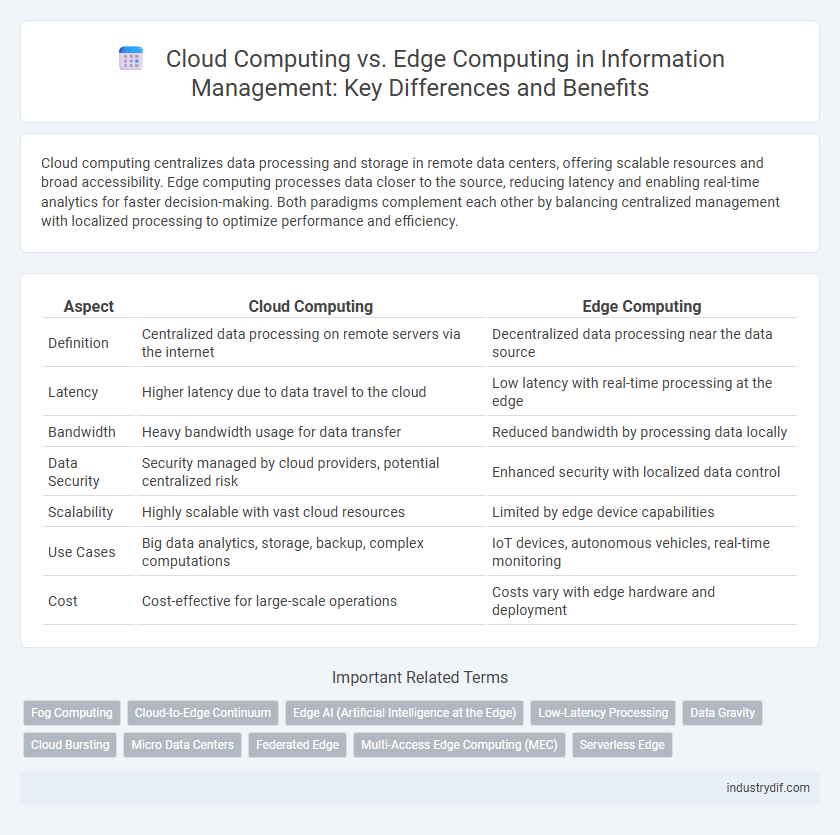

Cloud computing centralizes data processing and storage in remote data centers, offering scalable resources and broad accessibility. Edge computing processes data closer to the source, reducing latency and enabling real-time analytics for faster decision-making. Both paradigms complement each other by balancing centralized management with localized processing to optimize performance and efficiency.

Table of Comparison

| Aspect | Cloud Computing | Edge Computing |
|---|---|---|
| Definition | Centralized data processing on remote servers via the internet | Decentralized data processing near the data source |
| Latency | Higher latency due to data travel to the cloud | Low latency with real-time processing at the edge |
| Bandwidth | Heavy bandwidth usage for data transfer | Reduced bandwidth by processing data locally |
| Data Security | Security managed by cloud providers, potential centralized risk | Enhanced security with localized data control |
| Scalability | Highly scalable with vast cloud resources | Limited by edge device capabilities |
| Use Cases | Big data analytics, storage, backup, complex computations | IoT devices, autonomous vehicles, real-time monitoring |
| Cost | Cost-effective for large-scale operations | Costs vary with edge hardware and deployment |

Introduction to Cloud Computing and Edge Computing

Cloud computing delivers scalable computing resources and storage over the internet from centralized data centers, enabling on-demand access and efficient data management for businesses worldwide. Edge computing processes data closer to the source, such as IoT devices or local servers, reducing latency and bandwidth use by handling real-time analytics at the network's edge. Both technologies address different needs in IT infrastructure, with cloud providing vast computational power and edge offering immediate data processing capabilities.

Key Differences Between Cloud and Edge Computing

Cloud computing centralizes data processing and storage in remote data centers, offering scalable resources and extensive computational power accessible via the internet. Edge computing processes data closer to the data source or end-user, reducing latency and improving real-time responsiveness by handling critical tasks locally. Key differences include latency reduction, bandwidth optimization, enhanced security through localized data handling, and specific use cases such as IoT devices relying heavily on edge computing, while large-scale data analytics favor cloud environments.

Core Components of Cloud Computing

Core components of cloud computing include infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS), which provide scalable computing resources, application development platforms, and ready-to-use software applications respectively. Virtualization technology enables efficient resource pooling and dynamic allocation across distributed data centers, forming the backbone of cloud infrastructure. Storage systems such as object storage and block storage, along with robust networking and security frameworks, ensure seamless data management, accessibility, and protection in cloud environments.

Essential Features of Edge Computing

Edge computing processes data near the source of generation, reducing latency and bandwidth usage compared to traditional cloud computing. Essential features include real-time data processing, enhanced security through localized data handling, and improved reliability by minimizing dependency on centralized data centers. This decentralized architecture enables faster decision-making and supports IoT applications requiring immediate data analysis.

Data Processing: Cloud vs Edge

Cloud computing processes data in centralized data centers, offering extensive computational resources and scalability for large-scale analytics. Edge computing handles data processing closer to the source, reducing latency and bandwidth usage by performing real-time analysis on local devices or edge servers. This decentralized approach enhances responsiveness and efficiency in applications requiring immediate data insights, such as IoT and autonomous systems.
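
The bandwidth saving from local processing can be illustrated with a minimal Python sketch. It assumes a hypothetical edge device that collects a window of temperature samples and uploads only a summary instead of every reading; the function name `summarize_window` and the sample data are illustrative, not taken from any particular platform.

```python
import json
import statistics


def summarize_window(readings):
    """Reduce a window of raw sensor readings to a compact summary."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": statistics.fmean(readings),
    }


# Hypothetical window of 1000 temperature samples collected on an edge device.
raw_window = [20.0 + 0.01 * i for i in range(1000)]

# Payload if every sample is sent to the cloud versus only the summary.
raw_payload = json.dumps(raw_window).encode("utf-8")
summary_payload = json.dumps(summarize_window(raw_window)).encode("utf-8")

print(len(raw_payload), "bytes if every sample is uploaded")
print(len(summary_payload), "bytes if only the summary is uploaded")
```

The trade-off is that the cloud no longer sees individual samples, so this pattern fits cases where aggregate values are enough for downstream analytics.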

Latency and Real-Time Performance

Cloud computing offers scalable processing power but often experiences higher latency due to data traveling to centralized data centers, impacting real-time performance. Edge computing processes data closer to the source, significantly reducing latency and enabling faster response times critical for applications like autonomous vehicles or smart manufacturing. Lower latency in edge computing ensures immediate data analysis and decision-making, enhancing real-time operational efficiency.
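
A rough sense of why distance matters comes from propagation delay alone. The sketch below assumes signals travel through optical fiber at about 200,000 km/s (roughly two thirds of the speed of light) and uses hypothetical distances of 2,000 km to a cloud region and 10 km to an edge site; it ignores routing, queuing, and processing time.

```python
# Approximate signal speed in optical fiber (about two thirds of c).
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km):
    """Propagation-only round-trip time in milliseconds.

    Ignores routing hops, queuing, and server processing time.
    """
    return 2 * distance_km * 1000 / FIBER_SPEED_KM_PER_S


# Hypothetical distances, chosen only for illustration.
cloud_km = 2000
edge_km = 10

print("cloud round trip (ms):", round_trip_ms(cloud_km))
print("edge round trip (ms):", round_trip_ms(edge_km))
```

With these assumed distances, the propagation-only round trip is about 20 ms for the cloud path and about 0.1 ms for the edge path. Measured latency will be higher in both cases, but the gap between the two paths remains.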

Security and Compliance Considerations

Cloud computing centralizes data storage and processing, and major providers offer scalable security controls, hold certifications such as ISO 27001, and supply tooling that supports compliance with regulations such as GDPR. Edge computing processes data locally, which reduces latency and limits how much data is exposed in transit, but it requires stringent endpoint security and ongoing compliance monitoring because each distributed device widens the attack surface. Organizations must evaluate data sensitivity, regulatory requirements, and risk tolerance when choosing between cloud and edge computing for secure and compliant operations.

Scalability and Flexibility Comparison

Cloud computing offers extensive scalability by providing on-demand resource allocation across global data centers, allowing businesses to scale operations effortlessly and handle varying workloads. Edge computing enhances flexibility by processing data closer to the source, reducing latency and optimizing bandwidth usage for real-time applications, but it typically has limited scalability compared to centralized cloud resources. Integrating cloud and edge solutions can balance scalability and flexibility, enabling efficient data processing and dynamic resource management tailored to specific use cases.

Industry Use Cases for Cloud and Edge Computing

Cloud computing powers large-scale data analytics and storage for industries like retail and finance, enabling real-time insights and scalable resource management. Edge computing supports latency-sensitive applications in manufacturing and healthcare by processing data near the source, enhancing response times and minimizing bandwidth use. Both technologies drive digital transformation by optimizing operations through tailored deployment based on specific industry requirements.

Future Trends in Cloud and Edge Computing

Future trends in cloud and edge computing emphasize increased integration, with edge computing enhancing real-time data processing and reducing latency by handling workloads closer to data sources. Cloud platforms will continue expanding AI and machine learning capabilities, enabling scalable analytics and cross-device synchronization. The convergence of 5G technology and edge infrastructure is expected to accelerate innovation in IoT, autonomous vehicles, and smart cities.

Related Important Terms

Fog Computing

Fog computing extends cloud capabilities by placing compute, storage, and networking on intermediate nodes between end devices and the cloud, such as gateways and local servers, reducing the latency and bandwidth usage that constrain real-time IoT and smart-device applications. Unlike traditional cloud computing, fog computing operates on a decentralized network architecture, enabling faster decision-making by distributing data analysis across multiple nodes near the edge; keeping data local can also limit its exposure in transit.

Cloud-to-Edge Continuum

The Cloud-to-Edge Continuum integrates cloud computing's vast scalability with edge computing's low-latency processing, enabling seamless data flow and real-time analytics across distributed environments. This continuum supports applications in IoT, AI, and 5G by optimizing resource allocation, reducing bandwidth usage, and enhancing security through localized data handling.
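
One way to picture placement along the continuum is a simple rule that sends a workload to the edge when its latency budget or data-locality requirement rules out the cloud. The sketch below is illustrative only: the `Workload` fields, the `choose_tier` function, and the round-trip figures are hypothetical, and real schedulers weigh many more factors such as cost and available capacity.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    latency_budget_ms: float  # maximum tolerable response time
    data_stays_local: bool    # True if raw data should not leave the site


# Hypothetical round-trip estimates per tier; real values must be measured.
TIER_RTT_MS = {"edge": 5.0, "cloud": 80.0}


def choose_tier(workload: Workload) -> str:
    """Pick 'edge' when the cloud cannot meet the latency or locality need."""
    if workload.data_stays_local:
        return "edge"
    if workload.latency_budget_ms < TIER_RTT_MS["cloud"]:
        return "edge"
    return "cloud"


for w in [
    Workload("brake-control", latency_budget_ms=10, data_stays_local=False),
    Workload("patient-monitor", latency_budget_ms=500, data_stays_local=True),
    Workload("monthly-report", latency_budget_ms=60_000, data_stays_local=False),
]:
    print(w.name, "->", choose_tier(w))
```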

Edge AI (Artificial Intelligence at the Edge)

Edge AI processes data locally on edge devices, reducing latency and enhancing real-time decision-making compared to traditional cloud computing, which relies on centralized data centers. This decentralized approach enables faster AI inference, improved data privacy, and reduced bandwidth usage, making it ideal for applications like autonomous vehicles and smart IoT devices.

Low-Latency Processing

Cloud computing offers scalable resources but often encounters latency issues due to data transmission over long distances. Edge computing reduces latency by processing data locally near the source, enabling real-time analytics and faster decision-making for applications like autonomous vehicles and IoT devices.

Data Gravity

Data gravity is the tendency of large datasets to attract applications and services toward wherever the data is stored, because moving the data is slow and costly. In cloud computing this concentrates workloads in cloud data centers, which improves processing efficiency but increases latency for remote users. Edge computing counters this by processing data closer to its source, so less of it has to move at all, reducing latency and bandwidth use in distributed environments.

Cloud Bursting

Cloud bursting enables businesses to seamlessly extend their on-premises infrastructure to cloud resources during peak demand, optimizing scalability and cost-efficiency. This hybrid approach contrasts with edge computing by leveraging centralized cloud capacity for overflow workloads rather than processing data locally at the edge.
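
The overflow behavior can be sketched in a few lines of Python. This is a simplified illustration, assuming on-premises capacity is expressed as a number of concurrent jobs; the function name `route_requests` and the job list are hypothetical.

```python
def route_requests(pending, on_prem_capacity):
    """Fill on-premises capacity first, then burst the remainder to the cloud."""
    local = pending[:on_prem_capacity]
    burst = pending[on_prem_capacity:]
    return local, burst


# Hypothetical peak: 12 jobs arrive while on-premises can run only 8.
jobs = [f"job-{i}" for i in range(12)]
local, burst = route_requests(jobs, on_prem_capacity=8)

print(len(local), "handled on-premises;", len(burst), "sent to the cloud")
```

When demand stays within local capacity the burst list is empty, so cloud resources are consumed only during peaks.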

Micro Data Centers

Micro data centers enable edge computing by processing data closer to the source, reducing latency and improving real-time analytics compared to centralized cloud computing infrastructures. These compact, self-contained units support scalability and enhanced security for IoT deployments and critical applications requiring immediate data processing at the network edge.

Federated Edge

Federated edge computing enhances cloud computing by distributing data processing across multiple localized edge nodes, reducing latency and improving data privacy through decentralized management. This approach leverages the federated model to enable collaborative AI training on edge devices without transferring sensitive data to central cloud servers, optimizing performance in real-time applications.
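
The text does not name a specific aggregation rule, so the sketch below assumes one common choice, weighted averaging of model parameters (often called federated averaging). Each edge node trains locally and shares only its weights and sample count, never the raw data. The function name and the example numbers are illustrative.

```python
def federated_average(updates):
    """Combine per-node model weights, weighted by local sample count.

    updates: list of (weights, sample_count) tuples, one per edge node.
    """
    total_samples = sum(count for _, count in updates)
    dim = len(updates[0][0])
    return [
        sum(weights[i] * count for weights, count in updates) / total_samples
        for i in range(dim)
    ]


# Hypothetical updates from two edge nodes; raw training data stays on each node.
node_updates = [
    ([0.0, 2.0], 100),
    ([1.0, 4.0], 300),
]

global_weights = federated_average(node_updates)
print(global_weights)
```

Nodes with more local samples pull the global model further toward their weights, which is why the sample count travels alongside each update.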

Multi-Access Edge Computing (MEC)

Multi-Access Edge Computing (MEC), an architecture standardized by ETSI, enhances cloud computing by bringing processing power to the edge of the access network, close to end-users, reducing latency and enabling real-time data processing. It supports latency-sensitive and bandwidth-intensive applications such as IoT, autonomous vehicles, and augmented reality by offloading tasks from centralized cloud servers to distributed edge nodes, improving performance and reliability.

Serverless Edge

Serverless edge computing combines the scalability of cloud computing with the low latency of edge locations by running event-driven functions closer to users without managing servers. This approach optimizes real-time data processing and reduces bandwidth costs by executing code at the network edge, enhancing performance for applications like IoT, AR/VR, and content delivery.

Cloud Computing vs Edge Computing Infographic

industrydif.com