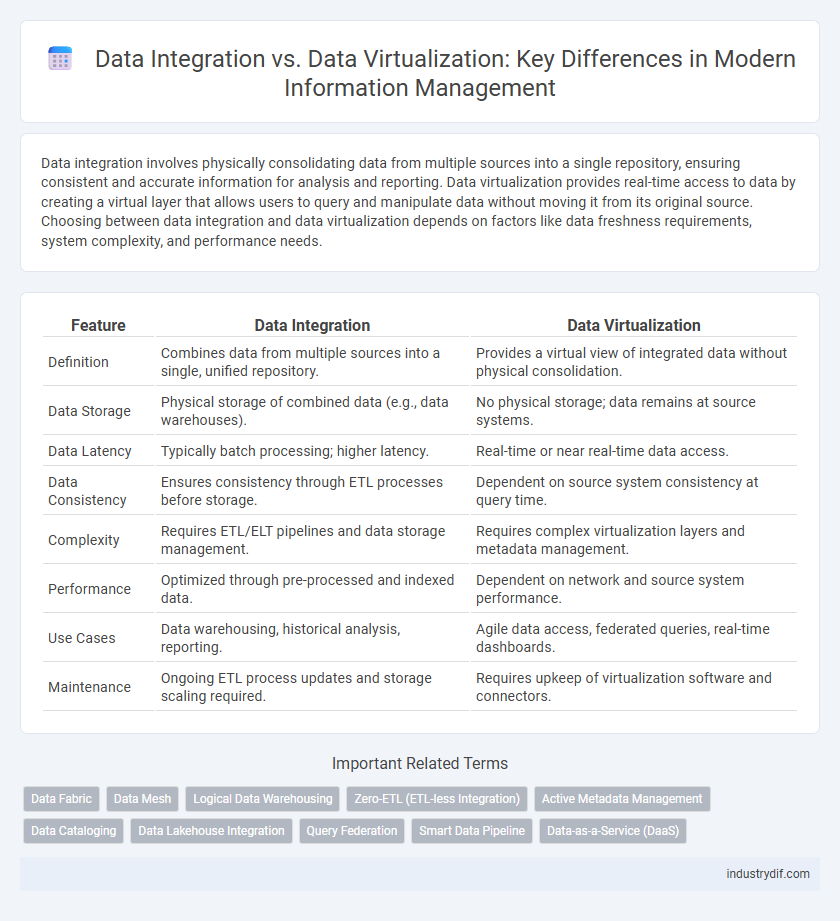

Data integration involves physically consolidating data from multiple sources into a single repository, ensuring consistent and accurate information for analysis and reporting. Data virtualization provides real-time access to data by creating a virtual layer that allows users to query and manipulate data without moving it from its original source. Choosing between data integration and data virtualization depends on factors like data freshness requirements, system complexity, and performance needs.

Table of Comparison

| Feature | Data Integration | Data Virtualization |

|---|---|---|

| Definition | Combines data from multiple sources into a single, unified repository. | Provides a virtual view of integrated data without physical consolidation. |

| Data Storage | Physical storage of combined data (e.g., data warehouses). | No physical storage; data remains at source systems. |

| Data Latency | Typically batch processing; higher latency. | Real-time or near real-time data access. |

| Data Consistency | Ensures consistency through ETL processes before storage. | Dependent on source system consistency at query time. |

| Complexity | Requires ETL/ELT pipelines and data storage management. | Requires complex virtualization layers and metadata management. |

| Performance | Optimized through pre-processed and indexed data. | Dependent on network and source system performance. |

| Use Cases | Data warehousing, historical analysis, reporting. | Agile data access, federated queries, real-time dashboards. |

| Maintenance | Ongoing ETL process updates and storage scaling required. | Requires upkeep of virtualization software and connectors. |

Understanding Data Integration: Definition and Core Concepts

Data integration involves combining data from multiple sources into a unified view, enabling comprehensive analysis and reporting. It relies on processes such as ETL (Extract, Transform, Load) to extract data, transform it into a consistent format, and load it into a centralized data warehouse. Core concepts include data consolidation, data quality management, and ensuring data consistency across diverse systems.

What is Data Virtualization? Key Principles Explained

Data virtualization is a data management approach that allows users to access and manipulate data without requiring physical movement or replication. It integrates data from multiple sources in real-time, providing a unified, virtual data layer for analytics and reporting. Key principles include real-time data access, abstraction of underlying data complexities, and seamless integration across diverse platforms.

Data Integration Types: ETL, ELT, and Beyond

Data integration encompasses various methods including ETL (Extract, Transform, Load), ELT (Extract, Load, Transform), and advanced approaches such as real-time and streaming integration, facilitating comprehensive data consolidation for analytics. ETL processes data by extracting it from sources, transforming it in a staging area, and then loading it into a target system, whereas ELT loads raw data first and performs transformations within the target database, optimizing for big data environments. Beyond these traditional types, modern data integration leverages API-based integration and event-driven architectures to enable agile, scalable, and near real-time data flow across diverse platforms.

How Data Virtualization Works: Architecture Overview

Data virtualization operates through a middleware layer that connects multiple data sources in real-time without physical data movement, enabling unified access and query execution. Its architecture typically consists of a virtual data layer that abstracts and integrates heterogeneous data repositories, a semantic layer that translates user queries into optimized source-specific commands, and a connection layer that handles communication protocols and security. This design allows seamless data retrieval and transformation on-demand, supporting agile analytics and reducing data replication overhead.

Key Differences Between Data Integration and Data Virtualization

Data integration involves physically consolidating data from multiple sources into a centralized repository, enhancing data consistency and enabling comprehensive analytics. Data virtualization creates a virtual data layer that allows real-time access to disparate data sources without data movement, optimizing flexibility and reducing data replication costs. Key differences include data storage requirements, latency in data access, and complexity of implementation, with integration favoring batch processing and virtualization excelling in dynamic querying environments.

Benefits of Data Integration for Enterprise Environments

Data integration enhances enterprise environments by providing a unified view of data across multiple sources, improving data consistency and accuracy for better decision-making. It enables centralized data management, which supports comprehensive analytics and regulatory compliance while reducing data silos. Enterprises benefit from streamlined workflows and increased operational efficiency through automated data consolidation and transformation processes.

Advantages of Data Virtualization in Modern Data Management

Data virtualization offers significant advantages in modern data management by enabling real-time access to disparate data sources without physical data movement, which reduces latency and storage costs. It provides a unified, consistent view of data across multiple platforms, enhancing decision-making and operational efficiency. The approach also supports agile data governance and simplifies data access for analytics, accelerating time-to-insight and business responsiveness.

Use Cases: When to Choose Data Integration vs Data Virtualization

Data integration excels in scenarios requiring consolidated, high-quality data for reporting, analytics, and storage in data warehouses or lakes, supporting complex transformations and historical data management. Data virtualization suits real-time access needs across multiple, heterogeneous sources without physical data movement, ideal for agile BI, operational dashboards, and rapid prototyping. Organizations prioritize data integration for comprehensive, batch-processed datasets, while data virtualization is chosen for flexible, on-demand data access and seamless system interoperability.

Challenges and Limitations of Data Integration and Virtualization

Data integration faces challenges such as high latency due to data movement, complex ETL processes, and difficulties in maintaining data consistency across diverse sources. Data virtualization struggles with performance issues when querying large datasets in real-time and limited support for complex transformations or historical data analysis. Both approaches encounter limitations in handling heterogeneous data formats and ensuring data governance and security across multiple platforms.

Best Practices for Implementing Data Integration and Data Virtualization

Effective implementation of data integration requires establishing robust ETL processes, ensuring data quality, and maintaining consistent metadata management to unify disparate data sources into a single repository. Data virtualization best practices include creating a unified data access layer, minimizing data movement by querying data in real-time, and enforcing security policies through role-based access controls. Both approaches benefit from thorough documentation, scalable architecture, and continuous monitoring to optimize data accessibility and performance.

Related Important Terms

Data Fabric

Data Fabric unifies data integration and data virtualization by providing a seamless, intelligent layer that connects disparate data sources, enabling real-time access and advanced analytics without moving data. This approach enhances agility and reduces complexity by combining the strengths of both data integration's physical consolidation and data virtualization's logical abstraction.

Data Mesh

Data Mesh architecture emphasizes decentralized data ownership and domain-oriented data integration, contrasting with traditional data virtualization that centralizes data access without physically moving data. By leveraging Data Mesh principles, organizations facilitate scalable, real-time data integration across distributed domains while maintaining governance and data quality.

Logical Data Warehousing

Logical data warehousing combines data integration and data virtualization to create a unified, real-time view of disparate data sources without physical data movement, enhancing agility and reducing storage costs. Data integration consolidates data physically through ETL processes, while data virtualization enables on-demand access to live data, making logical data warehousing a hybrid solution that optimizes data accessibility and analytics performance.

Zero-ETL (ETL-less Integration)

Zero-ETL integration eliminates the need for traditional Extract, Transform, Load processes by enabling real-time data access through data virtualization, which creates a unified data model without physical data movement. Data virtualization accelerates analytics and decision-making by providing seamless, on-demand data integration from diverse sources while maintaining data governance and security.

Active Metadata Management

Active metadata management enhances data integration by continuously collecting and analyzing metadata to improve data quality, governance, and lineage across diverse sources. In contrast, data virtualization leverages active metadata to provide real-time, unified access to distributed data without physical consolidation, enabling faster decision-making and agility.

Data Cataloging

Data cataloging enhances both data integration and data virtualization by providing a centralized metadata repository that improves data discovery, lineage, and governance across diverse sources. Effective data cataloging accelerates decision-making and ensures data accuracy by enabling seamless access to unified, well-documented datasets in real-time or batch environments.

Data Lakehouse Integration

Data lakehouse integration combines the scalability and schema flexibility of data lakes with the data management and performance features of data warehouses, enabling unified data access and analytics. Unlike traditional data integration that physically moves data, data virtualization in lakehouse architectures provides real-time, abstracted views without data duplication, optimizing agility and reducing latency in complex analytic environments.

Query Federation

Query federation in data virtualization allows real-time access to multiple heterogeneous data sources without the need for physical data movement, enhancing agility and reducing latency. In contrast, data integration typically requires consolidating data into a unified repository, which can increase query response time due to ETL processing and data synchronization efforts.

Smart Data Pipeline

Smart data pipelines streamline data integration by consolidating disparate sources into a unified, accessible repository, enhancing data quality and reducing latency. Data virtualization enables real-time data access without replication, supporting agile analytics and decision-making through a virtualized layer over integrated data environments.

Data-as-a-Service (DaaS)

Data Integration consolidates data from disparate sources into a single repository, enabling comprehensive analysis and reporting, while Data Virtualization provides real-time access to data across multiple systems without physical consolidation. Data-as-a-Service (DaaS) leverages both approaches to deliver scalable, on-demand data access and integration capabilities, optimizing data availability and agility for enterprises.

Data Integration vs Data Virtualization Infographic

industrydif.com

industrydif.com