A database is a structured system optimized for transactional processing and quick query responses using predefined schemas, making it ideal for operational applications. A data lakehouse combines the scalable storage of data lakes with the management features of data warehouses, enabling both structured and unstructured data to be analyzed efficiently. Companies use data lakehouses to unify analytics and machine learning workloads, overcoming the limitations of traditional databases and data lakes.

Table of Comparison

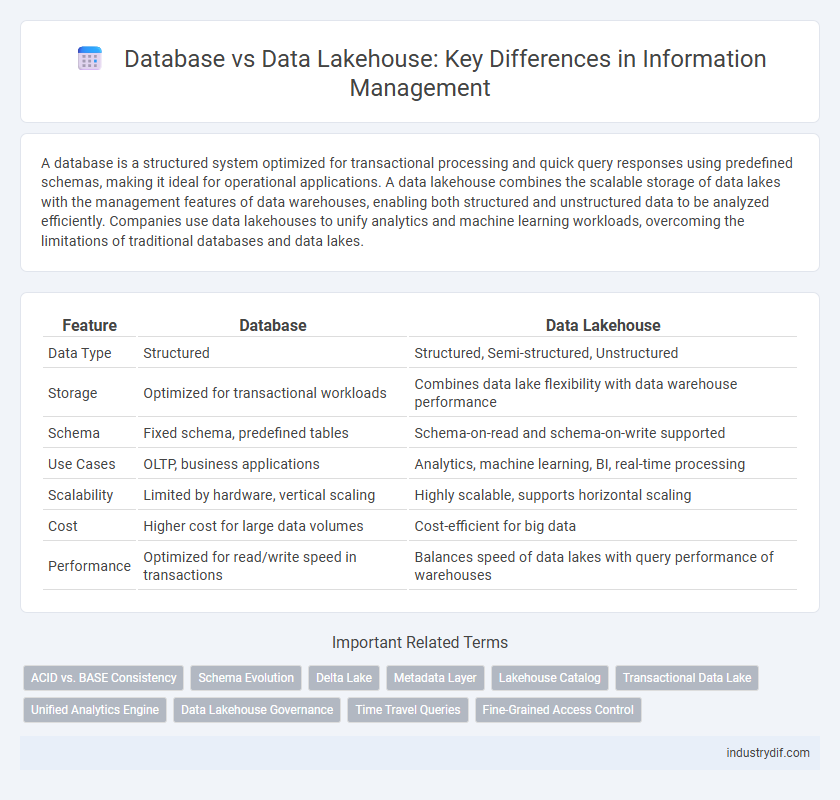

| Feature | Database | Data Lakehouse |

|---|---|---|

| Data Type | Structured | Structured, Semi-structured, Unstructured |

| Storage | Optimized for transactional workloads | Combines data lake flexibility with data warehouse performance |

| Schema | Fixed schema, predefined tables | Schema-on-read and schema-on-write supported |

| Use Cases | OLTP, business applications | Analytics, machine learning, BI, real-time processing |

| Scalability | Limited by hardware, vertical scaling | Highly scalable, supports horizontal scaling |

| Cost | Higher cost for large data volumes | Cost-efficient for big data |

| Performance | Optimized for read/write speed in transactions | Balances speed of data lakes with query performance of warehouses |

Understanding Database and Data Lakehouse Concepts

A database is a structured system designed to store, organize, and manage data efficiently, typically using schemas and tables for quick retrieval and transaction processing. A data lakehouse combines the capabilities of data lakes and data warehouses, enabling large-scale storage of raw data along with structured data analytics in a unified platform. Understanding the differences in architecture and use cases is critical for optimizing data management strategies in modern enterprises.

Key Differences Between Databases and Data Lakehouses

Databases primarily store structured data optimized for transaction processing and complex queries using predefined schemas. Data lakehouses combine elements of data lakes and warehouses, supporting both structured and unstructured data with flexible schema enforcement, enabling diverse analytics workloads. Key differences include schema management, data types handled, and scalability, where lakehouses provide greater flexibility and integration with big data frameworks compared to traditional databases.

Data Storage Architecture: Structured vs. Unstructured

Databases store data in structured formats with predefined schemas, optimizing for transactional operations and query efficiency. Data Lakehouses combine the storage of both structured and unstructured data, enabling flexible schema-on-read architectures that support diverse data types from raw logs to relational tables. This hybrid approach enhances analytical capabilities by integrating data warehousing techniques with data lake scalability and versatility.

Scalability and Performance Considerations

Database systems typically offer optimized query performance and consistency for structured data but face scalability challenges with vast or varied datasets. Data lakehouses combine the scalability of data lakes with the performance features of databases, enabling efficient handling of large-scale, diverse data while supporting ACID transactions and BI workloads. Scalability in data lakehouses is enhanced by decoupled storage and compute resources, allowing dynamic resource allocation for improved throughput and latency.

Real-Time Data Processing Capabilities

Databases excel at structured data storage with efficient real-time transaction processing using ACID compliance and indexing techniques. Data lakehouses combine the scalability of data lakes with the schema and performance optimizations of data warehouses, enabling real-time analytics on both structured and unstructured data. Real-time data processing in lakehouses leverages streaming engines like Apache Spark and Delta Lake to provide low-latency insights across diverse data types.

Data Governance and Security Features

Data governance in databases relies on structured schemas and predefined access controls to ensure data integrity and compliance, while data lakehouses integrate governance frameworks that support both structured and unstructured data with metadata management and fine-grained access policies. Security features in traditional databases include role-based access control (RBAC), encryption at rest and in transit, and audit logging, whereas data lakehouses enhance security through unified access control layers, data masking, and real-time monitoring across diverse data types. This combination in lakehouses facilitates centralized data governance and advanced security mechanisms crucial for modern analytics and regulatory compliance.

Cost Efficiency and Resource Management

Databases typically require higher costs for storage and compute resources due to rigid schemas and transaction management, whereas data lakehouses optimize cost efficiency by combining data lake scalability with structured query capabilities. Data lakehouses enable better resource management through separated storage and compute layers, allowing dynamic scaling based on workload demands, reducing overall infrastructure expenses. This architectural flexibility in data lakehouses leads to lower total cost of ownership compared to traditional databases, especially for large-scale, diverse data environments.

Integration and Interoperability with BI Tools

Databases provide structured data storage optimized for transactional systems, ensuring seamless integration with traditional BI tools through standard SQL interfaces and connectors. Data lakehouses combine the scalability of data lakes with the management features of data warehouses, enabling interoperability with diverse BI tools via unified query engines supporting multiple data formats. This integration versatility allows BI platforms to access both real-time analytics and complex, large-scale data processing within a single environment.

Use Cases: When to Choose Database vs. Data Lakehouse

Databases excel in structured data management, ideal for transaction processing, operational analytics, and applications requiring ACID compliance. Data lakehouses combine the scalability of data lakes with the performance of databases, making them suitable for big data analytics, machine learning, and handling diverse data types in a unified platform. Choose databases for consistent, high-performance queries on structured data, while opting for data lakehouses when integrating large volumes of structured and unstructured data for advanced analytics.

Future Trends in Data Management Technologies

Future trends in data management technologies emphasize the convergence of traditional databases and data lakehouses, leveraging the structured query capabilities of databases with the scalable, flexible storage of lakehouses. Innovations such as real-time analytics, AI-driven data governance, and cloud-native architectures are shaping the evolution of hybrid platforms that support complex, high-volume data. Enterprises are increasingly adopting lakehouse solutions to enable seamless integration of machine learning models and advanced BI tools, driving faster, more intelligent decision-making processes.

Related Important Terms

ACID vs. BASE Consistency

Databases prioritize ACID consistency, ensuring atomicity, consistency, isolation, and durability for reliable transactions in structured environments. Data Lakehouses lean towards BASE consistency, allowing for eventual consistency and higher availability in handling vast, unstructured data with flexible schema management.

Schema Evolution

Databases typically enforce rigid schema definitions, limiting flexibility during schema evolution and requiring downtime or complex migrations for structural changes. Data Lakehouses support dynamic schema evolution by allowing seamless schema updates and handling both structured and unstructured data, enhancing adaptability for growing and changing datasets.

Delta Lake

Delta Lake combines the ACID transactions and schema enforcement of traditional databases with the scalability and flexibility of data lakes, enabling reliable, high-performance data pipelines for big data analytics. Its unified architecture supports both batch and streaming data, facilitating real-time data processing while ensuring data integrity and consistency across diverse data workloads.

Metadata Layer

The metadata layer in databases typically organizes structured schema information to optimize query performance and data integrity, while in data lakehouses, the metadata layer integrates both structured and unstructured data schemas, enabling unified data governance and faster analytics. Advanced metadata management in lakehouses supports schema evolution, data versioning, and lineage tracking, enhancing overall data reliability and accessibility compared to traditional database metadata systems.

Lakehouse Catalog

A Lakehouse catalog integrates metadata management by combining the structured schema enforcement of traditional databases with the scalable, flexible storage of data lakes. This unified catalog enables efficient data discovery, governance, and ACID transactions across diverse data types, optimizing analytics workflows in modern data architectures.

Transactional Data Lake

A transactional data lake integrates the scalability and flexibility of data lakes with the ACID compliance and performance of traditional databases, enabling real-time processing of structured and unstructured transactional data. This hybrid architecture supports seamless data ingestion, storage, and analytics by unifying batch and streaming workloads for enterprise-grade data management.

Unified Analytics Engine

A Database primarily manages structured data with predefined schemas optimized for transactional and analytical queries, whereas a Data Lakehouse combines the flexibility of data lakes with the reliability and performance of data warehouses, supporting both structured and unstructured data. Unified Analytics Engines in Data Lakehouses streamline data processing by integrating storage and compute layers, enabling real-time analytics, machine learning, and BI workloads on a single platform.

Data Lakehouse Governance

Data Lakehouse governance combines the structured data management features of databases with the flexible, scalable storage of data lakes, enabling unified security, compliance, and metadata management across diverse data types. Its architecture ensures robust access controls, lineage tracking, and data quality enforcement to support enterprise-wide regulatory requirements and operational transparency.

Time Travel Queries

Time travel queries in databases enable accessing historical data by querying snapshots stored over time, ensuring data consistency and auditability. Data lakehouses enhance this capability by combining the scalability of data lakes with structured query performance, supporting efficient time travel queries over vast and diverse datasets.

Fine-Grained Access Control

Databases provide fine-grained access control through role-based permissions and column-level security, enabling precise user access management for sensitive data. Data lakehouses combine the scalability of data lakes with enhanced access controls similar to databases, leveraging attribute-based access control and policy enforcement to secure diverse data types.

Database vs Data Lakehouse Infographic

industrydif.com

industrydif.com