Machine Learning encompasses a broad set of algorithms that learn patterns from labeled or unlabeled data. Self-Supervised Learning is a subset of it that generates its own labels from the input data, so models can learn without human annotation. It uses the intrinsic structure of the data to create supervisory signals, which lets models pre-train on vast amounts of unlabeled data before fine-tuning on specific tasks. This approach can improve robustness and reduces dependency on large labeled datasets compared to traditional supervised Machine Learning methods.

Table of Comparison

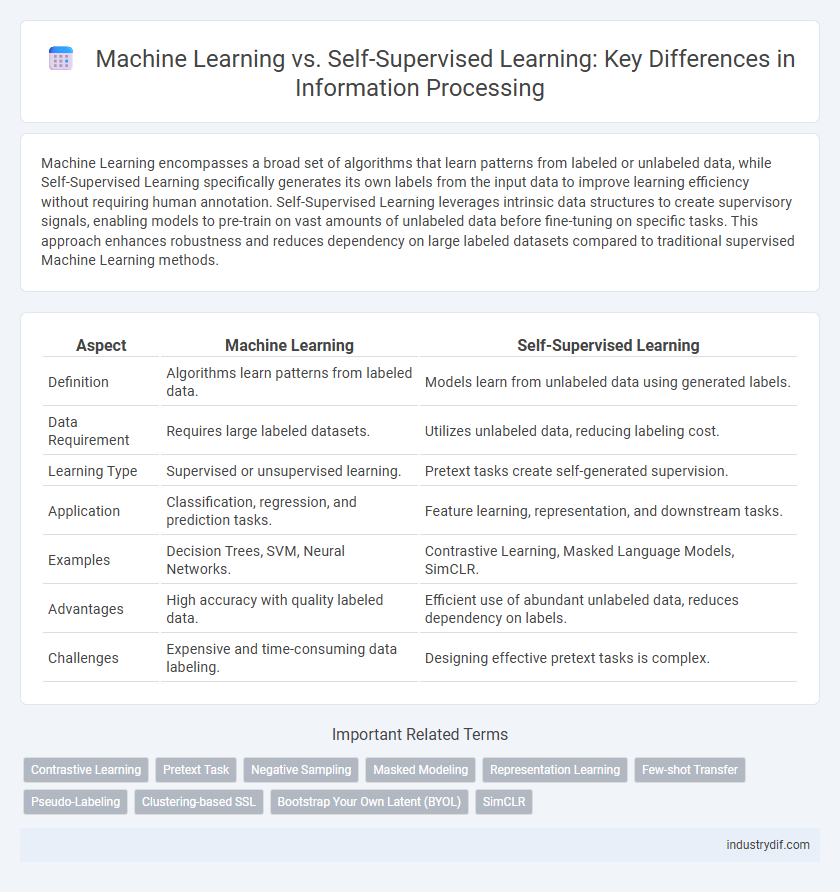

| Aspect | Machine Learning | Self-Supervised Learning |
|---|---|---|
| Definition | Algorithms learn patterns from data, labeled or unlabeled. | Models learn from unlabeled data using labels generated from the data itself. |
| Data Requirement | Supervised methods require large labeled datasets. | Uses unlabeled data, reducing labeling cost. |
| Learning Type | Supervised or unsupervised learning. | Pretext tasks create self-generated supervision. |
| Application | Classification, regression, and prediction tasks. | Feature learning, representation, and downstream tasks. |
| Examples | Decision Trees, SVM, Neural Networks. | Contrastive Learning, Masked Language Models, SimCLR. |
| Advantages | High accuracy with quality labeled data. | Efficient use of abundant unlabeled data, reduces dependency on labels. |
| Challenges | Expensive and time-consuming data labeling. | Designing effective pretext tasks is complex. |

Introduction to Machine Learning and Self-Supervised Learning

Machine Learning involves algorithms that enable computers to learn from data and make predictions or decisions without being explicitly programmed for each task. Self-Supervised Learning is a subset of Machine Learning in which models derive their own labels from unlabeled data, making use of data that has no annotations. It relies on pretext tasks to extract meaningful representations, which improves performance on downstream tasks without heavy reliance on annotated datasets.

Core Definitions and Industry Terminology

Machine Learning is the general term for algorithms that learn from data and improve with experience rather than following hand-written rules. Self-Supervised Learning is the subset of Machine Learning in which the training labels are derived from the unlabeled data itself, which reduces dependency on annotated datasets. Industry terminology distinguishes supervised learning, which uses labeled data; unsupervised learning, which discovers patterns without labels; and self-supervised learning, an intermediate approach that builds a supervised-style objective out of the intrinsic structure of the data.
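
To make "labels derived from the data itself" concrete, here is a minimal sketch that turns an unlabeled token sequence into (context, target) training pairs. The function name `next_item_pairs`, the `context_size` parameter, and the example sentence are illustrative assumptions, not part of any particular library.

```python
def next_item_pairs(sequence, context_size):
    """Turn an unlabeled sequence into (context, target) training pairs.

    The target is simply the item that follows each context window, so the
    "label" comes from the data itself rather than from an annotator.
    """
    pairs = []
    for i in range(context_size, len(sequence)):
        context = sequence[i - context_size:i]
        target = sequence[i]
        pairs.append((context, target))
    return pairs


# Hypothetical unlabeled input: a tokenized sentence.
tokens = ["the", "cat", "sat", "down"]
pairs = next_item_pairs(tokens, context_size=2)
```

Each pair can then be fed to an ordinary supervised learner, even though no human labeled anything.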

Key Differences Between Machine Learning and Self-Supervised Learning

Machine learning encompasses a broad range of algorithms, many of which (the supervised ones) rely on manually labeled data to learn patterns, whereas self-supervised learning uses labels generated automatically from the data itself, minimizing the need for manual annotation. Self-supervised learning is most useful where labeled datasets are costly or impractical to acquire, because it uses the intrinsic structure of the data as the training signal. Unlike a purely supervised workflow, self-supervised methods typically pre-train on vast amounts of unlabeled data and then fine-tune on a smaller labeled dataset.

Data Requirements: Labeled vs Unlabeled Data

Supervised machine learning traditionally relies on large volumes of labeled data to train models effectively, requiring significant human effort for annotation. Self-supervised learning reduces this dependency by leveraging unlabeled data through pretext tasks, enabling models to learn useful representations without manual labeling. This approach significantly lowers data preparation costs and expands applicability in scenarios with scarce labeled datasets.

Underlying Algorithms and Approaches

Machine learning encompasses diverse paradigms such as supervised, unsupervised, and reinforcement learning; of these, supervised learning relies heavily on labeled data for pattern recognition and prediction. Self-supervised learning, a subset of machine learning, employs pretext tasks to generate labels internally, enabling models to learn representations from large unlabeled datasets. Common families of self-supervised methods include contrastive learning, generative (reconstruction-based) models, and clustering-based methods, all of which learn features without explicit human annotation.

Practical Applications in Modern Industries

Machine learning powers diverse modern industries by enabling predictive analytics, personalized recommendations, and automation, thereby enhancing efficiency and decision-making processes. Self-supervised learning, a subset of machine learning, excels in scenarios with limited labeled data by leveraging unlabeled datasets to improve model accuracy in fields such as natural language processing, computer vision, and autonomous driving. Practical applications include healthcare diagnostics, financial fraud detection, and manufacturing quality control, where self-supervised models reduce dependency on extensive labeled data while maintaining high performance.

Benefits and Limitations of Each Method

Machine learning offers a broad range of techniques capable of handling diverse data types, with supervised learning excelling in accuracy through labeled datasets but requiring extensive human annotation. Self-supervised learning reduces dependency on labeled data by leveraging inherent data structures, enabling models to learn useful representations from unlabeled inputs, yet it may struggle with performance consistency across complex tasks. Balancing the scalability of self-supervised learning with the precision of traditional machine learning remains crucial for advancing AI applications efficiently.

Current Industry Adoption Trends

Machine learning adoption spans numerous industries, but self-supervised learning is rapidly gaining traction due to its ability to leverage unlabeled data, reducing dependency on costly annotated datasets. Sectors like healthcare, finance, and autonomous driving are increasingly integrating self-supervised models to enhance data efficiency and improve predictive accuracy. Current trends show enterprises prioritizing self-supervised learning to accelerate AI deployment and optimize resource utilization.

Challenges and Future Directions

Machine learning faces challenges such as dependency on large labeled datasets, limited generalization in diverse environments, and computational resource intensity. Self-supervised learning addresses data labeling issues by leveraging unlabeled data, but struggles with feature representation quality and scalability in complex tasks. Future directions emphasize improving model robustness, enhancing interpretability, and integrating multimodal data to advance autonomous learning capabilities.

Conclusion: Selecting the Right Approach

Choosing between conventional supervised machine learning and self-supervised learning depends on the availability of labeled data and the complexity of the task. Supervised learning excels with abundant labeled datasets, while self-supervised learning leverages unlabeled data to improve performance when labels are scarce. Organizations should evaluate dataset size, annotation costs, and problem type to optimize model accuracy and efficiency.

Related Important Terms

Contrastive Learning

Contrastive learning is a prominent technique within self-supervised learning that learns from unlabeled data by contrasting sample pairs: representations of positive pairs (for example, two views of the same input) are pulled together, while those of negative pairs are pushed apart. It has significantly improved performance in tasks such as image recognition and natural language processing.
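
As a rough illustration of contrasting positive and negative pairs, the sketch below computes an InfoNCE-style loss for a single anchor. InfoNCE is one common contrastive objective chosen here for illustration; the function names, the toy vectors, and the `temperature` default are assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss: low when the anchor is closer to its positive
    than to any of the negatives."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Toy embeddings (hypothetical): the first call has a well-aligned positive,
# the second swaps in a poorly aligned one.
good = info_nce([1.0, 0.0], [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])
bad = info_nce([1.0, 0.0], [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]])
```

Minimizing this quantity over many anchors is what pulls positives together and pushes negatives apart in embedding space.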

Pretext Task

Supervised machine learning trains models on labeled datasets to make predictions, whereas self-supervised learning uses pretext tasks, such as predicting missing parts of the data, to generate supervisory signals without manual labeling. Pretext tasks enable models to learn useful representations from unlabeled data, improving performance on downstream tasks.
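
One well-known pretext task, used here as an illustrative stand-in for the "predicting missing parts" example above, is rotation prediction: rotate an image by a random multiple of 90 degrees and ask the model which rotation was applied. The sketch below only builds the (input, label) pair; the helper names and the tiny 2x2 grid are assumptions.

```python
import random


def rotate90(grid):
    """Rotate a 2D list 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def make_rotation_example(grid, rng):
    """Return (rotated_grid, label), where label in {0, 1, 2, 3} is the
    number of clockwise quarter turns applied. No human labeling needed."""
    label = rng.randrange(4)
    rotated = [list(row) for row in grid]  # copy so the input is untouched
    for _ in range(label):
        rotated = rotate90(rotated)
    return rotated, label


# Hypothetical unlabeled "image".
grid = [[1, 2], [3, 4]]
rotated, label = make_rotation_example(grid, random.Random(0))
```

A classifier trained to recover `label` from `rotated` has to learn something about the content's orientation, which is the representation the pretext task is designed to elicit.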

Negative Sampling

Machine learning techniques often rely on large labeled datasets, whereas self-supervised learning leverages unlabeled data by generating supervisory signals from the data itself, enhancing feature extraction without manual labeling. Negative sampling plays a crucial role in contrastive self-supervised learning: for each anchor, it selects examples to treat as dissimilar, and choosing informative (hard) negatives efficiently improves representation learning and model convergence.
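
A minimal sketch of two negative-selection strategies follows: uniform random sampling and picking the "hardest" negatives, meaning those most similar to the anchor. Both helpers are hypothetical, and both assume that every item other than the anchor is a true negative, which real datasets do not guarantee.

```python
import random


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def random_negatives(anchor_index, pool_size, k, rng):
    """Sample k distinct indices uniformly, excluding the anchor itself."""
    candidates = [i for i in range(pool_size) if i != anchor_index]
    return rng.sample(candidates, k)


def hardest_negatives(anchor_index, embeddings, k):
    """Return the k non-anchor indices whose embeddings score highest
    against the anchor, i.e. the most confusable negatives."""
    anchor = embeddings[anchor_index]
    candidates = [i for i in range(len(embeddings)) if i != anchor_index]
    candidates.sort(key=lambda i: dot(anchor, embeddings[i]), reverse=True)
    return candidates[:k]


# Toy embedding pool (hypothetical); index 0 is the anchor.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
hard = hardest_negatives(0, embeddings, k=2)
picks = random_negatives(0, len(embeddings), k=2, rng=random.Random(0))
```

Random sampling is cheap; hard negatives carry more training signal per example but cost a similarity search and are more likely to include mislabeled "negatives".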

Masked Modeling

Supervised machine learning trains algorithms on labeled data to predict outcomes, while self-supervised learning leverages unlabeled data by generating supervisory signals from the data itself, significantly reducing the need for manual annotation. Masked modeling, a key technique in self-supervised learning, randomly masks parts of the input and tasks the model with reconstructing the missing information, which improves representation learning in natural language processing and computer vision.
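
The masking step can be sketched in a few lines. This is a simplified illustration: the `[MASK]` placeholder string, the `mask_tokens` name, and the single `mask_prob` parameter are assumptions, and production masking schemes are usually more elaborate.

```python
import random

MASK = "[MASK]"  # placeholder token; the exact string is an assumption


def mask_tokens(tokens, mask_prob, rng):
    """Randomly hide tokens and record what was hidden.

    Returns (masked_tokens, targets), where targets maps each masked
    position to the original token the model must reconstruct.
    """
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[i] = tok
        else:
            masked.append(tok)
    return masked, targets


# Hypothetical unlabeled sentence.
tokens = ["models", "learn", "from", "unlabeled", "data"]
masked, targets = mask_tokens(tokens, mask_prob=0.3, rng=random.Random(0))
```

The model sees `masked` as input and is scored only on the positions listed in `targets`.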

Representation Learning

Representation learning focuses on automatically discovering meaningful features from raw data rather than engineering them by hand. Self-supervised learning is one of the main ways to do representation learning: it defines proxy (pretext) tasks on unlabeled data so that features are learned without relying on extensive labeled datasets.

Few-shot Transfer

Self-supervised learning leverages unlabeled data to pretrain models, significantly enhancing few-shot transfer performance by enabling effective generalization from limited labeled examples. Machine learning approaches relying solely on supervised data often struggle with few-shot transfer, as they require extensive labeled datasets to achieve comparable accuracy and adaptability.
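
One simple way to use pre-trained features with only a few labeled examples is a nearest-prototype classifier: average the embeddings of each class's few examples and assign a query to the closest average. This is an illustrative technique, not one named in the text above, and the sketch assumes the embeddings come from a frozen pre-trained encoder that is not shown; the vectors and labels are made up.

```python
def class_prototypes(support):
    """Average the embeddings of each class's few labeled examples.

    support: dict mapping label -> list of embedding vectors.
    """
    return {
        label: [sum(col) / len(vectors) for col in zip(*vectors)]
        for label, vectors in support.items()
    }


def classify(query, prototypes):
    """Assign the label whose prototype is nearest in squared distance."""
    return min(
        prototypes,
        key=lambda label: sum(
            (q - c) ** 2 for q, c in zip(query, prototypes[label])
        ),
    )


# Two labeled examples per class (hypothetical embeddings).
support = {
    "cat": [[1.0, 0.0], [0.8, 0.2]],
    "dog": [[0.0, 1.0], [0.2, 0.8]],
}
prototypes = class_prototypes(support)
prediction = classify([0.9, 0.1], prototypes)
```

The better the pre-trained embeddings separate the classes, the fewer labeled examples this kind of classifier needs.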

Pseudo-Labeling

Pseudo-labeling leverages unlabeled data by treating a model's confident predictions as artificial labels and training on them, improving performance without extensive human annotation. It is usually classed as a semi-supervised technique, since the model is first trained on a small labeled set, but it shares with self-supervised learning the idea of deriving training signal from unannotated data. This contrasts with fully supervised machine learning, which relies on completely labeled datasets.
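
The confidence filter at the heart of pseudo-labeling can be sketched as follows. The `threshold` value of 0.9 and the probability rows are illustrative assumptions; in practice the probabilities would come from a model's predictions on unlabeled inputs.

```python
def pseudo_label(prob_rows, threshold=0.9):
    """Keep only predictions the model is confident about.

    prob_rows: one list of class probabilities per unlabeled example.
    Returns (example_index, predicted_class) pairs to add to the training set.
    """
    selected = []
    for idx, probs in enumerate(prob_rows):
        best = max(range(len(probs)), key=probs.__getitem__)
        if probs[best] >= threshold:
            selected.append((idx, best))
    return selected


# Hypothetical model outputs for three unlabeled examples.
predictions = [[0.95, 0.05], [0.60, 0.40], [0.02, 0.98]]
new_labels = pseudo_label(predictions)
```

The second example is skipped because neither class reaches the threshold; lowering the threshold adds more data at the cost of noisier labels.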

Clustering-based SSL

Clustering-based Self-Supervised Learning (SSL) groups unlabeled data into clusters and uses the cluster assignments as pseudo-labels for training. Methods such as DeepCluster and SwAV learn meaningful embeddings by repeatedly assigning samples to clusters and refining those assignments as the features improve, which enhances performance on downstream tasks without manual annotation.
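
The pseudo-label step alone can be sketched with plain k-means. This is not an implementation of DeepCluster or SwAV; it only shows how cluster assignments can stand in for labels. The function names, the fixed initial centroids, and the toy points are assumptions.

```python
def sq_dist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans_pseudo_labels(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and
    recomputing centroids; the final assignments act as pseudo-labels."""
    centroids = [list(c) for c in centroids]
    labels = []
    for _ in range(iterations):
        labels = [
            min(range(len(centroids)), key=lambda j: sq_dist(p, centroids[j]))
            for p in points
        ]
        for j in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # leave an empty cluster's centroid unchanged
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Toy feature vectors (hypothetical) with two obvious groups.
points = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]
labels, centroids = kmeans_pseudo_labels(points, [[0.0, 0.0], [10.0, 10.0]])
```

In a full clustering-based pipeline, a network would be trained to predict `labels`, its features re-extracted, and the clustering repeated.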

Bootstrap Your Own Latent (BYOL)

Bootstrap Your Own Latent (BYOL) is a self-supervised learning algorithm that learns representations without labeled data by using two neural networks: an online network and a target network. The online network is trained to predict the target network's projection of a differently augmented view of the same input, and the target network's weights are a slow-moving average of the online network's weights. Unlike contrastive methods, BYOL does not need negative pairs; it minimizes the distance between the online predictions and the target projections, and the resulting representations transfer well to downstream tasks.
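
Two pieces of BYOL are small enough to sketch directly: the distance being minimized and the target-network update. In the published method the target weights are an exponential moving average of the online weights; that detail is added context here. The flat weight lists, function names, and `tau` default are simplifying assumptions, and the networks themselves are not shown.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def byol_loss(online_prediction, target_projection):
    """Squared distance between the normalized vectors, which equals
    2 - 2 * cosine similarity. No negative pairs are involved."""
    p = l2_normalize(online_prediction)
    z = l2_normalize(target_projection)
    return 2.0 - 2.0 * sum(a * b for a, b in zip(p, z))


def ema_update(target_weights, online_weights, tau=0.99):
    """Move the target weights a small step toward the online weights."""
    return [
        tau * t + (1.0 - tau) * o
        for t, o in zip(target_weights, online_weights)
    ]


# Toy values (hypothetical): parallel vectors give a loss near 0,
# orthogonal vectors give a loss near 2.
aligned = byol_loss([1.0, 0.0], [2.0, 0.0])
orthogonal = byol_loss([1.0, 0.0], [0.0, 3.0])
updated = ema_update([1.0, 0.0], [0.0, 1.0], tau=0.9)
```

Only the online network receives gradients from the loss; the target network changes solely through `ema_update`.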

SimCLR

SimCLR is a self-supervised method that creates two augmented views of the same image and maximizes agreement between their representations, learning useful features without explicit labels. Its framework combines strong data augmentation with a contrastive loss in which the other images in the batch serve as negatives. The learned representations transfer well to downstream tasks, particularly when labeled data for fine-tuning is limited.
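
The batch-level contrastive loss used by SimCLR is commonly called NT-Xent. The sketch below is a simplified pure-Python version, not the reference implementation. It assumes the embeddings have already been produced by an encoder and are ordered so that positions 2k and 2k+1 hold the two views of the same image; the `temperature` default is also an assumption.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def nt_xent(embeddings, temperature=0.5):
    """Average NT-Xent loss over a batch of paired views.

    embeddings[2k] and embeddings[2k + 1] are two views of the same input;
    every other embedding in the batch is treated as a negative.
    """
    z = [normalize(v) for v in embeddings]
    n = len(z)
    total = 0.0
    for i in range(n):
        j = i ^ 1  # index of the paired view: 0<->1, 2<->3, ...
        pos = math.exp(sum(a * b for a, b in zip(z[i], z[j])) / temperature)
        denom = sum(
            math.exp(sum(a * b for a, b in zip(z[i], z[k])) / temperature)
            for k in range(n)
            if k != i
        )
        total += -math.log(pos / denom)
    return total / n


# Toy batch (hypothetical): in `aligned`, paired views point the same way;
# in `misaligned`, the pairing is scrambled.
aligned = nt_xent([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
misaligned = nt_xent([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]])
```

Because negatives come from the rest of the batch, larger batches supply more negatives per anchor without any explicit sampling step.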

industrydif.com