Edge computing processes data locally on devices near the source, enabling real-time analysis and reducing latency, while TinyML optimizes machine learning models for deployment on ultra-low-power, resource-constrained devices. Combining edge computing with TinyML allows smart pets and wearable health monitors to perform sophisticated AI tasks without continuous cloud connectivity. This synergy enhances privacy, reduces bandwidth use, and enables faster decision-making in technical pet applications.

Table of Comparison

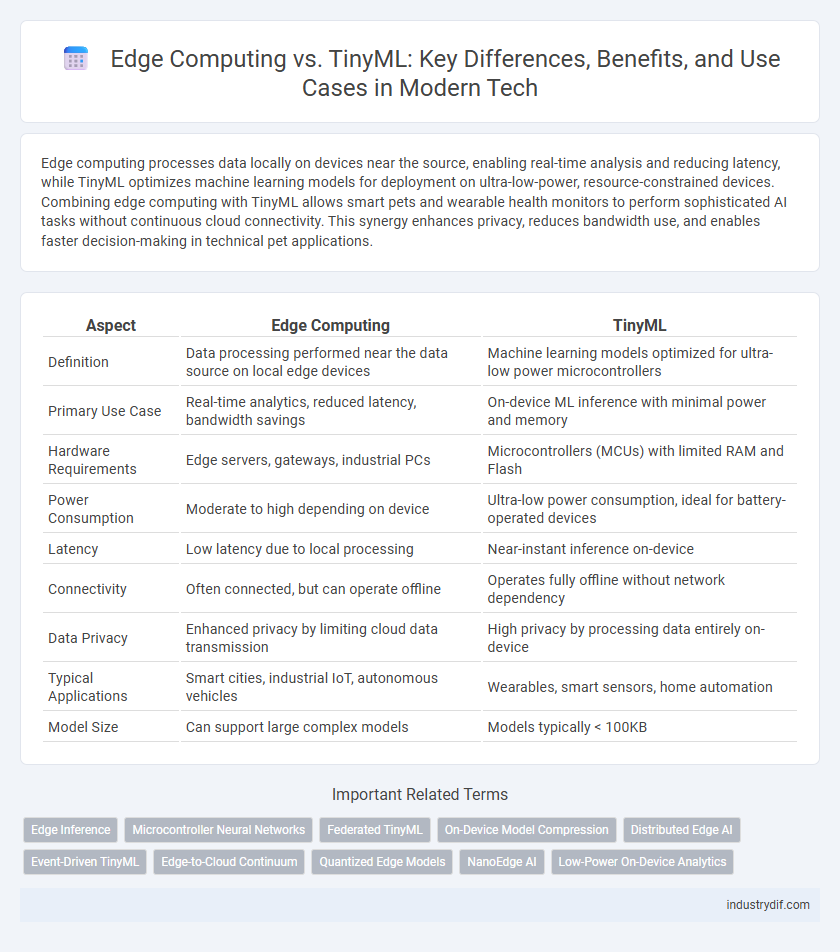

| Aspect | Edge Computing | TinyML |

|---|---|---|

| Definition | Data processing performed near the data source on local edge devices | Machine learning models optimized for ultra-low power microcontrollers |

| Primary Use Case | Real-time analytics, reduced latency, bandwidth savings | On-device ML inference with minimal power and memory |

| Hardware Requirements | Edge servers, gateways, industrial PCs | Microcontrollers (MCUs) with limited RAM and Flash |

| Power Consumption | Moderate to high depending on device | Ultra-low power consumption, ideal for battery-operated devices |

| Latency | Low latency due to local processing | Near-instant inference on-device |

| Connectivity | Often connected, but can operate offline | Operates fully offline without network dependency |

| Data Privacy | Enhanced privacy by limiting cloud data transmission | High privacy by processing data entirely on-device |

| Typical Applications | Smart cities, industrial IoT, autonomous vehicles | Wearables, smart sensors, home automation |

| Model Size | Can support large complex models | Models typically < 100KB |

Introduction to Edge Computing and TinyML

Edge computing processes data near the data source to reduce latency and bandwidth usage, enabling real-time analytics and improved device autonomy. TinyML involves deploying machine learning models on ultra-low-power microcontrollers, facilitating AI capabilities on edge devices with constrained resources. Combining edge computing with TinyML enhances IoT efficiency by enabling localized, scalable, and intelligent processing at the device level.

Core Concepts: Edge Computing Explained

Edge computing processes data near the source of generation, reducing latency and bandwidth use by minimizing reliance on centralized cloud servers. It deploys localized hardware and software resources to handle real-time analytics, enhancing responsiveness for IoT devices and applications. This architecture supports scalable, distributed data processing essential for time-sensitive operations and efficient network utilization.

What is TinyML? Key Definitions

TinyML is a subset of machine learning focused on deploying models on ultra-low power devices such as microcontrollers, enabling local data processing at the edge. It emphasizes minimal resource consumption, real-time inference, and efficient energy use, distinguishing it from broader edge computing that includes larger, more capable devices. Key definitions include on-device intelligence, neural network optimization for limited hardware, and embedded machine learning applications.

Architecture Comparison: Edge vs Embedded ML

Edge computing architecture processes data on local edge devices like gateways or micro data centers, enabling low-latency responses and reducing bandwidth usage by minimizing cloud dependency. TinyML, an embedded ML approach, integrates machine learning models directly within resource-constrained microcontrollers, emphasizing ultra-low power consumption and on-device inferencing without external connectivity. The primary architectural distinction lies in Edge computing's reliance on more capable hardware at the network edge versus TinyML's focus on highly optimized, compact models deployed on minimalistic embedded systems.

Performance and Scalability Considerations

Edge computing offers superior performance by processing data locally on distributed devices, minimizing latency and reducing bandwidth usage. TinyML excels in scalability by enabling machine learning inference on ultra-low-power microcontrollers, fitting vast IoT deployments with constrained resources. Balancing edge computing's robust processing capabilities with TinyML's energy-efficient scalability is crucial for optimizing real-time analytics across diverse application scales.

Power Efficiency and Resource Constraints

Edge computing processes data near the source, reducing latency and bandwidth usage, but often requires more power and higher computational resources compared to TinyML. TinyML optimizes machine learning models to run efficiently on ultra-low-power microcontrollers, making it ideal for devices with severe resource constraints and limited energy budgets. Power efficiency in TinyML enables extended battery life in edge devices, while edge computing typically demands more robust hardware capable of handling complex workloads.

Real-World Applications: Use Cases of Edge Computing

Edge computing drives real-time data processing in autonomous vehicles, smart cities, and industrial IoT by minimizing latency and reducing bandwidth use. It enables predictive maintenance in manufacturing by analyzing sensor data locally to prevent equipment failure. Retailers leverage edge computing for personalized customer experiences through instant analytics on in-store devices.

Industrial Adoption of TinyML Solutions

Industrial adoption of TinyML solutions accelerates due to its ability to process data locally on low-power edge devices, reducing latency and enhancing real-time decision-making. TinyML enables predictive maintenance and anomaly detection in manufacturing environments by embedding machine learning models directly into sensors and actuators. Compared to traditional edge computing, TinyML offers lower energy consumption and cost-effective scalability, driving widespread implementation in Industry 4.0 applications.

Security and Privacy Implications

Edge computing enhances security by processing data locally on devices, reducing exposure to centralized cloud vulnerabilities and minimizing latency-related risks. TinyML further strengthens privacy through on-device machine learning, enabling real-time data analysis without transmitting sensitive information externally. Both technologies collectively mitigate data breaches and unauthorized access by limiting the data flow across networks and enforcing stringent local security protocols.

Future Trends: Edge Computing and TinyML Synergy

Edge computing and TinyML are converging to drive future innovations in real-time data processing and AI deployment on resource-constrained devices. The synergy enhances low-latency decision-making by enabling machine learning models to run locally on edge devices, reducing dependency on cloud infrastructure. Emerging trends highlight the integration of TinyML with advanced edge architectures to optimize energy efficiency, scalability, and privacy in IoT ecosystems.

Related Important Terms

Edge Inference

Edge inference leverages local processing power to analyze data in real-time on edge devices, reducing latency and bandwidth usage compared to cloud-based solutions. TinyML enhances edge inference by enabling machine learning models to run efficiently on ultra-low-power microcontrollers, optimizing performance for resource-constrained environments.

Microcontroller Neural Networks

Microcontroller neural networks in edge computing enable real-time data processing with low latency and minimal power consumption, optimizing performance for IoT devices. TinyML leverages these neural networks to run AI models directly on microcontrollers, reducing the need for cloud connectivity and enhancing privacy and efficiency in embedded systems.

Federated TinyML

Federated TinyML integrates edge computing with tiny machine learning by enabling distributed model training across multiple resource-constrained devices while preserving data privacy. This approach reduces latency and bandwidth usage compared to traditional centralized methods, optimizing real-time inference and model updates at the network edge.

On-Device Model Compression

On-device model compression in edge computing enhances processing efficiency by reducing the model size, enabling real-time analytics with minimal latency and lower energy consumption. TinyML leverages specialized compression techniques like quantization and pruning to deploy machine learning models directly on resource-constrained devices, optimizing performance without relying on cloud connectivity.

Distributed Edge AI

Distributed Edge AI leverages Edge Computing by processing data locally across multiple edge nodes to reduce latency and enhance privacy, while TinyML focuses on deploying ultra-low-power machine learning models on embedded devices with minimal computational resources. Together, they enable scalable, efficient, and real-time AI inference at the network edge, transforming IoT applications and distributed systems.

Event-Driven TinyML

Edge computing processes data locally on devices near the data source, reducing latency and bandwidth usage, while Event-Driven TinyML specifically activates machine learning models in response to sensor-triggered events, optimizing power and computation for resource-constrained environments. This event-triggered approach in TinyML enables real-time, energy-efficient decision-making at the edge, outperforming continuous processing models in IoT applications.

Edge-to-Cloud Continuum

Edge computing enhances real-time data processing by distributing workloads near data sources, reducing latency and bandwidth use across the edge-to-cloud continuum. TinyML complements this by enabling machine learning models to run locally on microcontrollers, facilitating efficient, low-power inference at the edge.

Quantized Edge Models

Quantized edge models enable efficient deployment of machine learning algorithms on resource-constrained devices by reducing model size and computation requirements through precision reduction techniques. Edge computing leverages these quantized models to perform real-time data processing locally, minimizing latency and bandwidth usage compared to cloud-centric TinyML implementations.

NanoEdge AI

NanoEdge AI leverages TinyML to enable ultra-efficient machine learning inference directly on edge devices, reducing latency and preserving data privacy without reliance on cloud connectivity. Unlike traditional edge computing that processes data on local servers or gateways, NanoEdge AI integrates adaptive learning algorithms within microcontrollers, optimizing energy consumption and enabling real-time decision-making in constrained environments.

Low-Power On-Device Analytics

Edge computing enables real-time data processing by leveraging localized servers, reducing latency and bandwidth use, while TinyML specializes in ultra-low-power machine learning models optimized for on-device analytics in constrained environments. Combining these technologies enhances energy efficiency and responsiveness in applications such as IoT sensors, wearable devices, and smart industrial systems.

Edge Computing vs TinyML Infographic

industrydif.com

industrydif.com