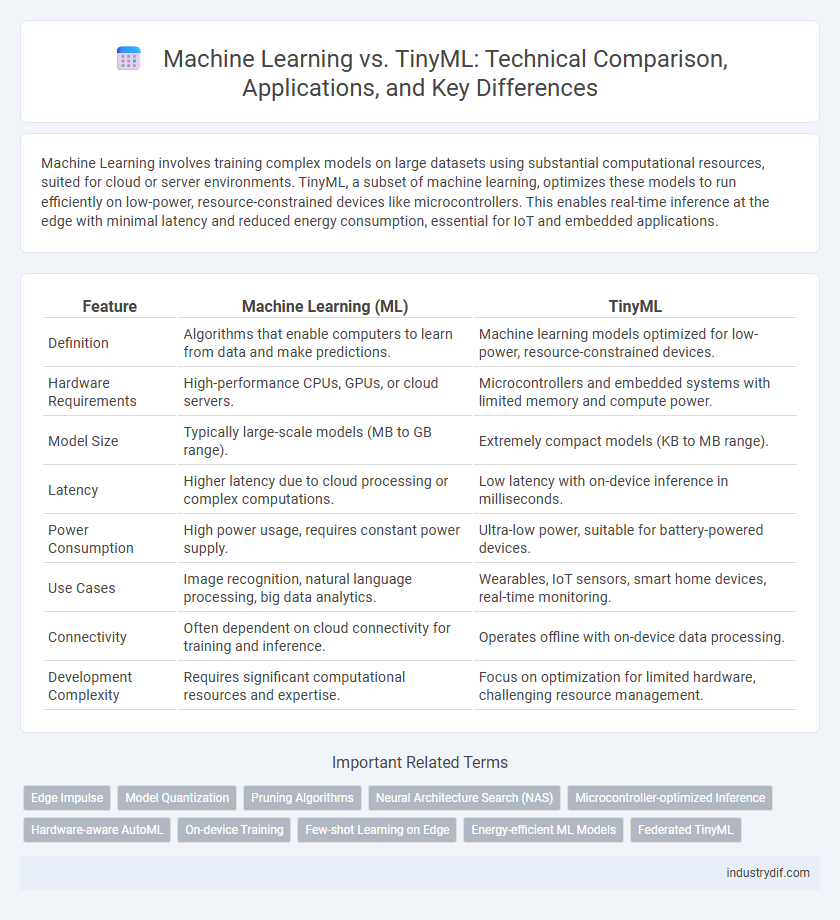

Machine Learning involves training complex models on large datasets using substantial computational resources, suited for cloud or server environments. TinyML, a subset of machine learning, optimizes these models to run efficiently on low-power, resource-constrained devices like microcontrollers. This enables real-time inference at the edge with minimal latency and reduced energy consumption, essential for IoT and embedded applications.

Table of Comparison

| Feature | Machine Learning (ML) | TinyML |

|---|---|---|

| Definition | Algorithms that enable computers to learn from data and make predictions. | Machine learning models optimized for low-power, resource-constrained devices. |

| Hardware Requirements | High-performance CPUs, GPUs, or cloud servers. | Microcontrollers and embedded systems with limited memory and compute power. |

| Model Size | Typically large-scale models (MB to GB range). | Extremely compact models (KB to MB range). |

| Latency | Higher latency due to cloud processing or complex computations. | Low latency with on-device inference in milliseconds. |

| Power Consumption | High power usage, requires constant power supply. | Ultra-low power, suitable for battery-powered devices. |

| Use Cases | Image recognition, natural language processing, big data analytics. | Wearables, IoT sensors, smart home devices, real-time monitoring. |

| Connectivity | Often dependent on cloud connectivity for training and inference. | Operates offline with on-device data processing. |

| Development Complexity | Requires significant computational resources and expertise. | Focus on optimization for limited hardware, challenging resource management. |

Overview of Machine Learning and TinyML

Machine learning encompasses algorithms and statistical models enabling computers to perform tasks without explicit programming, primarily reliant on cloud or high-performance computing environments. TinyML focuses on deploying these machine learning models on ultra-low power, resource-constrained edge devices such as microcontrollers, enabling real-time inference with minimal latency and reduced energy consumption. While traditional machine learning often requires substantial computational resources, TinyML optimizes model size and efficiency to function seamlessly in embedded systems and IoT applications.

Key Differences Between Machine Learning and TinyML

Machine Learning involves training complex models on large datasets using powerful computational resources, whereas TinyML focuses on deploying efficient models on resource-constrained edge devices with limited memory and processing power. TinyML emphasizes low latency, reduced energy consumption, and on-device inference, suitable for IoT applications, while traditional Machine Learning often relies on cloud-based infrastructure for model training and inference. The key differences include model size, computational requirements, latency, and deployment environments.

Technical Requirements for Machine Learning vs TinyML

Machine learning requires substantial computational power, large memory capacity, and high energy consumption often relying on cloud-based GPU or TPU accelerators for training complex models. TinyML is designed to run on resource-constrained edge devices with limited RAM (usually under 512 KB), low-power microcontrollers, and minimal storage, emphasizing energy-efficient inference without internet connectivity. The technical constraints in TinyML demand model quantization, pruning, and optimized algorithms to meet real-time performance while maintaining accuracy on embedded platforms.

Model Size and Resource Constraints

Machine Learning models typically require substantial computational power and memory, making them suitable for cloud or server environments with ample resources. TinyML specializes in deploying highly optimized, compact models that operate efficiently on resource-constrained devices such as microcontrollers with limited RAM and processing capability. The key distinction lies in TinyML's emphasis on minimizing model size--often under a few hundred kilobytes--to enable real-time inference on edge devices without compromising energy efficiency.

Real-Time Processing Capabilities

Machine learning typically involves processing large datasets on powerful servers, enabling complex model training but often facing latency issues in real-time applications. TinyML specializes in deploying lightweight machine learning models on resource-constrained edge devices, delivering low-latency inference for real-time processing scenarios. The integration of TinyML facilitates on-device decision-making, critical for applications like IoT sensors and autonomous systems where immediate responsiveness is essential.

Edge Deployment: TinyML Advantages

TinyML excels in edge deployment by enabling machine learning models to run efficiently on ultra-low-power microcontrollers with limited memory and processing capabilities. This approach reduces latency and dependency on cloud connectivity, enhancing data privacy and real-time responsiveness in IoT devices. The optimized model size and energy efficiency of TinyML make it ideal for distributed applications where bandwidth and power consumption are critical constraints.

Power Consumption and Efficiency

TinyML significantly reduces power consumption compared to traditional Machine Learning by enabling on-device inference with ultra-low-power microcontrollers. Its efficiency stems from optimized models designed to perform real-time processing without reliance on cloud connectivity, minimizing energy usage and latency. Machine Learning typically requires more computational resources and energy due to larger data processing and dependency on external servers for model execution.

Training and Inference Workflows

Machine Learning typically involves extensive data preprocessing, model training on powerful cloud-based GPUs or TPUs, and subsequent deployment for inference, often requiring substantial memory and computational resources. TinyML optimizes these workflows by enabling on-device training or transfer learning with constrained resources, focusing on energy-efficient inference using microcontrollers or edge devices with minimal latency. This shift reduces dependency on cloud connectivity and enhances real-time decision-making in constrained environments.

Application Use Cases: Machine Learning vs TinyML

Machine Learning excels in applications requiring extensive data processing and complex model training, such as image recognition, natural language processing, and predictive analytics in cloud environments. TinyML is optimized for low-power, real-time inference on edge devices like IoT sensors, wearable health monitors, and smart home gadgets where latency and connectivity limitations are critical. Use cases favoring TinyML include anomaly detection in industrial equipment, voice activation on microcontrollers, and environmental monitoring using battery-operated sensors.

Future Trends in Machine Learning and TinyML

Emerging trends in machine learning emphasize the integration of TinyML to enable on-device inference with ultra-low power consumption, expanding AI capabilities to edge devices like sensors and wearables. Advances in model compression, efficient architectures, and hardware accelerators are accelerating the deployment of TinyML in real-time and resource-constrained environments, promoting scalability and privacy by minimizing cloud dependency. Future developments will likely focus on enhancing interoperability between cloud-based machine learning frameworks and TinyML solutions to create seamless, adaptive AI ecosystems across diverse industries.

Related Important Terms

Edge Impulse

Machine Learning involves training complex models on large datasets typically processed in the cloud, whereas TinyML optimizes these models for resource-constrained devices at the edge, enhancing real-time decision-making. Edge Impulse specializes in TinyML by providing an end-to-end platform that enables developers to build, deploy, and optimize machine learning models directly on embedded devices, significantly reducing latency and power consumption.

Model Quantization

Machine learning models often require significant computational resources, whereas TinyML leverages model quantization techniques to compress models, reducing memory footprint and power consumption for deployment on edge devices. Quantization converts floating-point weights to lower-precision formats like INT8, maintaining accuracy while enabling real-time inference on microcontrollers with limited hardware capabilities.

Pruning Algorithms

Pruning algorithms in machine learning reduce model complexity by eliminating redundant parameters, enhancing inference speed and efficiency, particularly in resource-constrained environments. TinyML leverages advanced pruning techniques to optimize neural networks for deployment on low-power microcontrollers, achieving high accuracy with minimal computational overhead.

Neural Architecture Search (NAS)

Neural Architecture Search (NAS) automates the design of efficient machine learning models, significantly advancing performance in both traditional Machine Learning and resource-constrained TinyML environments. TinyML leverages NAS to optimize neural network architectures for minimal power consumption and low latency, enabling real-time inferencing on embedded devices with limited computational capacity.

Microcontroller-optimized Inference

Machine Learning typically requires substantial computational resources, making it less suitable for microcontroller-based devices, whereas TinyML enables efficient inference directly on low-power microcontrollers by optimizing model size and computation. Techniques such as quantization, pruning, and hardware-aware model design are critical in TinyML to achieve real-time inference with minimal energy consumption on embedded systems.

Hardware-aware AutoML

Hardware-aware AutoML optimizes model architectures by considering the constraints of embedded devices, enhancing the deployment efficiency of TinyML applications. Unlike traditional Machine Learning, which often targets high-performance servers, TinyML leverages AutoML techniques tailored to limited memory, power, and processing capabilities of IoT hardware.

On-device Training

On-device training in TinyML enables real-time model updates directly on low-power edge devices, reducing latency and preserving data privacy compared to traditional cloud-based machine learning workflows. TinyML frameworks optimize resource-constrained hardware such as microcontrollers by employing efficient algorithms and quantization techniques, facilitating continuous learning without the need for external servers.

Few-shot Learning on Edge

Few-shot learning on edge devices leverages TinyML frameworks to enable real-time model adaptation with minimal data, significantly reducing latency and preserving privacy compared to traditional cloud-based machine learning. By optimizing resource-constrained hardware for efficient inference and incremental learning, TinyML enhances autonomous decision-making in IoT applications while minimizing power consumption.

Energy-efficient ML Models

Energy-efficient ML models in Machine Learning often require substantial computational power and memory, limiting their use in resource-constrained devices. TinyML optimizes algorithms for microcontrollers, enabling ultra-low-power consumption while maintaining acceptable accuracy for real-time, on-device processing.

Federated TinyML

Federated TinyML combines the privacy and efficiency of edge-based TinyML devices with federated learning's decentralized training, enabling machine learning models to be trained locally across multiple devices without sharing raw data. This approach optimizes computational resources in IoT environments while enhancing data security and reducing latency compared to traditional centralized machine learning frameworks.

Machine Learning vs TinyML Infographic

industrydif.com

industrydif.com