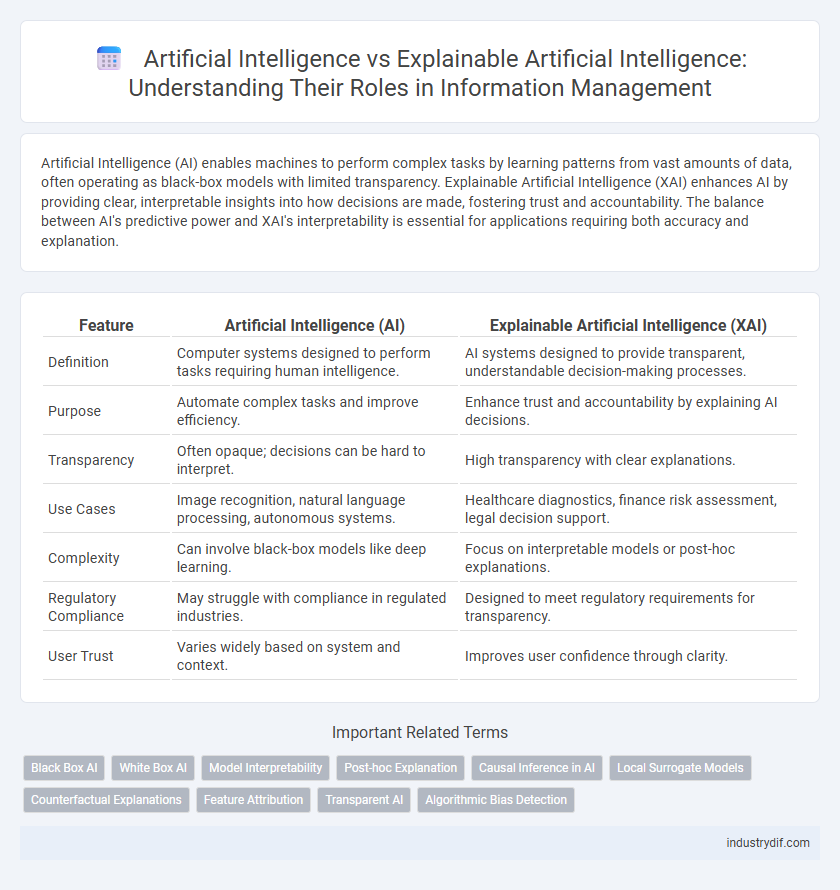

Artificial Intelligence (AI) enables machines to perform complex tasks by learning patterns from vast amounts of data, often operating as black-box models with limited transparency. Explainable Artificial Intelligence (XAI) enhances AI by providing clear, interpretable insights into how decisions are made, fostering trust and accountability. The balance between AI's predictive power and XAI's interpretability is essential for applications requiring both accuracy and explanation.

Table of Comparison

| Feature | Artificial Intelligence (AI) | Explainable Artificial Intelligence (XAI) |
|---|---|---|
| Definition | Computer systems designed to perform tasks requiring human intelligence. | AI systems designed to provide transparent, understandable decision-making processes. |
| Purpose | Automate complex tasks and improve efficiency. | Enhance trust and accountability by explaining AI decisions. |
| Transparency | Often opaque; decisions can be hard to interpret. | High transparency with clear explanations. |
| Use Cases | Image recognition, natural language processing, autonomous systems. | Healthcare diagnostics, finance risk assessment, legal decision support. |
| Complexity | Can involve black-box models like deep learning. | Focus on interpretable models or post-hoc explanations. |
| Regulatory Compliance | May struggle with compliance in regulated industries. | Designed to meet regulatory requirements for transparency. |
| User Trust | Varies widely based on system and context. | Improves user confidence through clarity. |

Defining Artificial Intelligence (AI)

Artificial Intelligence (AI) refers to computer systems designed to perform tasks that typically require human intelligence, such as learning, reasoning, problem-solving, and decision-making. It encompasses machine learning, neural networks, natural language processing, and computer vision, which let systems interpret data and act on it with little human intervention. Explainable Artificial Intelligence (XAI) builds on these capabilities with transparent and interpretable models, so that users can understand, trust, and effectively manage AI-driven decisions.

Understanding Explainable Artificial Intelligence (XAI)

Explainable Artificial Intelligence (XAI) enhances traditional AI models by providing transparent and interpretable insights into decision-making processes, enabling users to understand how outcomes are derived. XAI employs techniques like model-agnostic methods, rule-based explanations, and visualizations to clarify complex algorithmic behaviors, improving trust and accountability in AI systems. Emphasizing human-centered explanations, XAI bridges the gap between AI's predictive power and the necessity for ethical and regulatory compliance.

Key Differences Between AI and XAI

Artificial Intelligence (AI) focuses on creating systems capable of performing tasks that typically require human intelligence, including pattern recognition, decision-making, and problem-solving. Explainable Artificial Intelligence (XAI) emphasizes transparency and interpretability, allowing users to understand the reasoning behind AI decisions through clear explanations and traceable outputs. Key differences between AI and XAI include the trade-off between model complexity and interpretability, with XAI prioritizing user trust and accountability in high-stakes applications such as healthcare, finance, and legal systems.

Importance of Transparency in AI Models

Transparency in AI models is crucial for building trust and ensuring accountability in artificial intelligence systems. Explainable Artificial Intelligence (XAI) enhances transparency by providing clear insights into how AI algorithms make decisions, enabling users to understand, verify, and challenge outcomes. This transparency reduces risks of bias, increases regulatory compliance, and supports ethical AI deployment across industries.

Trust and Accountability in AI Systems

Artificial Intelligence (AI) systems often operate as black boxes, making it difficult to understand their decision-making processes, which poses challenges for trust and accountability. Explainable Artificial Intelligence (XAI) addresses these challenges by providing transparent and interpretable models that enable users to trace and comprehend how decisions are made. Enhanced transparency in XAI fosters greater trust among stakeholders and supports accountability by facilitating error diagnosis and regulatory compliance.

Industry Applications of AI vs XAI

Artificial Intelligence (AI) drives automation and predictive analytics across industries such as healthcare, finance, and manufacturing. It improves efficiency and decision-making, but it often operates as a black box with limited transparency. Explainable Artificial Intelligence (XAI) offers interpretable models that provide clarity on decision processes, which is crucial for regulated sectors like finance and healthcare where trust and compliance are paramount. Industry adoption of XAI improves model accountability and enables stakeholders to validate AI-driven outcomes, fostering broader acceptance and safer deployment of AI systems.

Regulatory Impacts on AI and XAI Adoption

Regulatory frameworks increasingly mandate transparency and accountability in AI systems, driving the adoption of Explainable Artificial Intelligence (XAI) over traditional black-box models. Data protection laws like GDPR give individuals rights around solely automated decisions, including access to meaningful information about the logic involved, which pushes organizations toward models whose outputs can be interpreted, explained to users, and audited by regulators. This shift emphasizes the importance of explainability in AI development to meet legal standards and ensure ethical deployment.

Challenges in Achieving Explainability

Artificial Intelligence (AI) systems often operate as complex black boxes, making it difficult to interpret their decision-making processes, which poses significant challenges in achieving explainability. Explainable Artificial Intelligence (XAI) seeks to address this by developing models that provide transparent and understandable justifications for their outputs, yet balancing accuracy and transparency remains a critical hurdle. High-dimensional data, model complexity, and the need for real-time explanations further complicate the implementation of effective XAI solutions across various industries.

Future Trends in AI and XAI Development

Future trends in Artificial Intelligence (AI) emphasize advancements in explainability, with Explainable Artificial Intelligence (XAI) evolving to provide transparent decision-making processes that enhance trust and accountability. Integration of XAI techniques like model-agnostic methods and interpretable neural networks will drive regulatory compliance and improve human-AI collaboration across industries. Continuous research in AI interpretability and ethical AI deployment is set to transform complex AI systems into more user-centric and socially responsible technologies.

Selecting Between AI and XAI for Business Solutions

Artificial Intelligence (AI) offers powerful data-driven decision-making capabilities but often lacks transparency, which makes it harder for businesses to trust its outputs and to demonstrate compliance with regulatory standards. Explainable Artificial Intelligence (XAI) enhances AI by providing clear, interpretable models that allow stakeholders to understand the decision process, which is crucial for industries with high accountability requirements such as finance and healthcare. Selecting between AI and XAI depends on how the business weighs model accuracy against interpretability, along with its risk management and regulatory compliance needs.

Related Important Terms

Black Box AI

Artificial Intelligence often relies on Black Box models that provide high accuracy but lack transparency, making it difficult to understand decision-making processes. Explainable Artificial Intelligence aims to enhance trust and accountability by offering interpretable insights into these opaque algorithms.

White Box AI

Artificial Intelligence (AI) encompasses complex models that often operate as "black boxes," making their decision-making processes opaque and difficult to interpret. Explainable Artificial Intelligence (XAI), particularly White Box AI, prioritizes transparency by using interpretable models such as decision trees and rule-based systems to provide clear, understandable insights into how AI reaches its conclusions.
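As a rough illustration of the rule-based style of White Box AI, the sketch below returns each decision together with the rule that produced it. The loan scenario, the field names (`income`, `debt_ratio`, `years_employed`), and the thresholds are all hypothetical and chosen only for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Applicant = Dict[str, float]


@dataclass(frozen=True)
class Rule:
    description: str                        # human-readable form of the condition
    condition: Callable[[Applicant], bool]  # the same condition as executable code
    decision: str


# Hypothetical rules, checked in order; the first matching rule wins.
RULES: List[Rule] = [
    Rule("debt_ratio > 0.5",
         lambda a: a["debt_ratio"] > 0.5, "reject"),
    Rule("income >= 40000 and years_employed >= 2",
         lambda a: a["income"] >= 40000 and a["years_employed"] >= 2, "approve"),
]
DEFAULT: Tuple[str, str] = ("manual_review", "no rule matched")


def decide(applicant: Applicant) -> Tuple[str, str]:
    """Return (decision, explanation); the explanation is the rule that fired."""
    for rule in RULES:
        if rule.condition(applicant):
            return rule.decision, rule.description
    return DEFAULT


decision, reason = decide({"income": 52000, "debt_ratio": 0.3, "years_employed": 4})
print(f"{decision}: {reason}")
```

Because the explanation is the rule itself, nothing has to be reconstructed after the fact. This is the practical difference from post-hoc methods applied to a black box.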

Model Interpretability

Artificial Intelligence models often operate as complex black boxes, making decision processes opaque and hindering trust and accountability. Explainable Artificial Intelligence prioritizes model interpretability by providing transparent, understandable insights into the reasoning behind predictions, enhancing usability in critical domains like healthcare and finance.

Post-hoc Explanation

Artificial Intelligence (AI) systems often operate as black boxes, making their decision processes opaque, whereas Explainable Artificial Intelligence (XAI) incorporates post-hoc explanation methods like LIME and SHAP to interpret model predictions after training. These techniques enhance transparency by attributing feature importance and providing insights into complex models, facilitating trust and accountability in AI applications.
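To make the idea of post-hoc attribution concrete, here is a minimal sketch that computes exact Shapley values for a tiny model by enumerating every feature ordering. It does not use the SHAP library, which relies on approximations to scale to real models. The `black_box` function, the feature names, and the all-zero baseline are assumptions made for illustration.

```python
from itertools import permutations
from typing import Callable, Dict

Features = Dict[str, float]


def black_box(x: Features) -> float:
    """Hypothetical opaque model with an interaction between age and debt."""
    return 2.0 * x["age"] + 3.0 * x["income"] + x["age"] * x["debt"]


def shapley_values(model: Callable[[Features], float],
                   instance: Features,
                   baseline: Features) -> Features:
    """Average each feature's marginal contribution over all feature orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)       # start from the reference input
        previous = model(current)
        for name in order:
            current[name] = instance[name]   # switch this feature to its real value
            updated = model(current)
            totals[name] += updated - previous
            previous = updated
    return {name: totals[name] / len(orderings) for name in names}


instance = {"age": 1.0, "income": 2.0, "debt": 4.0}
baseline = {"age": 0.0, "income": 0.0, "debt": 0.0}
attributions = shapley_values(black_box, instance, baseline)
print(attributions)
```

The attributions sum to the difference between the prediction for `instance` and the prediction for `baseline`, and the interaction term is split evenly between `age` and `debt`. Enumeration grows factorially with the number of features, so this only works for toy cases.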

Causal Inference in AI

Many Artificial Intelligence models predict outcomes from statistical associations in data, which do not by themselves show why an outcome occurs. Causal inference aims to model cause-and-effect relationships explicitly, and Explainable Artificial Intelligence can use it to reveal the causal pathways behind decisions. Emphasizing causal inference in Explainable AI fosters trust and accountability by clarifying how input variables influence predictions through interpretable models.

Local Surrogate Models

Local surrogate models enhance Explainable Artificial Intelligence (XAI) by providing interpretable approximations of complex AI systems at a local level, making individual predictions more transparent. Unlike global explanations, which summarize a model's behavior across all inputs, local surrogates explain one specific decision at a time, improving trust and usability in fields such as healthcare and finance.
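The sketch below shows one way a local surrogate could be built, in the spirit of LIME but without the library: sample points near the instance, weight them by proximity, and fit a weighted linear model. The `black_box` function, the Gaussian sampling, the kernel width, and the small `solve` helper are all assumptions made for this example.

```python
import math
import random
from typing import Callable, List, Sequence


def black_box(x: Sequence[float]) -> float:
    """Hypothetical nonlinear model standing in for an opaque system."""
    return x[0] ** 2 + 3.0 * x[1]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small linear system by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def local_surrogate(model: Callable[[Sequence[float]], float],
                    point: Sequence[float],
                    n_samples: int = 500,
                    scale: float = 0.1,
                    seed: int = 0) -> List[float]:
    """Fit a proximity-weighted linear model around `point`.

    Returns [intercept, slope_1, ..., slope_d]; slopes are per unit offset from `point`.
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        offset = [rng.gauss(0.0, scale) for _ in point]
        sample = [p + o for p, o in zip(point, offset)]
        rows.append([1.0] + offset)          # intercept column plus centered features
        targets.append(model(sample))
        dist2 = sum(o * o for o in offset)
        weights.append(math.exp(-dist2 / (2.0 * scale ** 2)))  # closer samples count more
    k = len(point) + 1
    # Weighted normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets)) for i in range(k)]
    return solve(xtwx, xtwy)


intercept, slope_x0, slope_x1 = local_surrogate(black_box, [2.0, 1.0])
print(intercept, slope_x0, slope_x1)
```

Around the point `[2.0, 1.0]` the slopes should come out close to 4 and 3, the local gradient of the hypothetical model. The same surrogate fitted around a different point would give a different slope for the first feature, which is exactly what "local" means here.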

Counterfactual Explanations

Counterfactual explanations within Explainable Artificial Intelligence (XAI) clarify AI decision-making by illustrating how slight changes to input data could alter outcomes, enhancing transparency and user understanding. In contrast to traditional Artificial Intelligence models that often operate as opaque "black boxes," counterfactuals provide actionable insights by pinpointing minimal feature adjustments needed to achieve different results.
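A minimal sketch of a counterfactual search follows. It changes a single feature in fixed steps until the decision flips. The `approve` model, its threshold, and the feature names (`income`, `debt_pct`) are hypothetical. Real counterfactual methods usually search over several features at once and add constraints so the suggested change is realistic.

```python
from typing import Dict, Optional

Applicant = Dict[str, int]


def approve(applicant: Applicant) -> bool:
    """Hypothetical opaque scoring model (integer arithmetic keeps it exact)."""
    score = applicant["income"] - 500 * applicant["debt_pct"]
    return score >= 30000


def counterfactual(applicant: Applicant,
                   feature: str,
                   step: int,
                   max_steps: int = 1000) -> Optional[Applicant]:
    """Return the smallest change to `feature`, in multiples of `step`, that flips the decision."""
    original = approve(applicant)
    for k in range(1, max_steps + 1):
        candidate = dict(applicant)
        candidate[feature] = applicant[feature] + k * step
        if approve(candidate) != original:
            return candidate
    return None  # no flip found within the search budget


rejected = {"income": 40000, "debt_pct": 40}
by_income = counterfactual(rejected, "income", step=1000)
by_debt = counterfactual(rejected, "debt_pct", step=-1)
print(by_income, by_debt)
```

Each result reads as an actionable statement of the kind described above, for example "the application would have been approved with an income of 50000" or "with a debt percentage of 20".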

Feature Attribution

Feature attribution in artificial intelligence identifies the impact of individual input features on model predictions, enhancing transparency and trust in complex algorithms. Explainable artificial intelligence (XAI) advances this by providing interpretable attributions that clarify decision-making processes and improve model accountability.
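One simple attribution technique is permutation importance: shuffle one feature column and measure how much the model's accuracy drops. The sketch below assumes a hypothetical three-feature classifier and synthetic data. The labels are the model's own outputs, so the scores measure how much the model relies on each feature, not how predictive the feature is in the real world.

```python
import random
from typing import Callable, List, Sequence


def model(row: Sequence[float]) -> int:
    """Hypothetical classifier; it ignores the third feature entirely."""
    return 1 if row[0] + 0.2 * row[1] > 0.6 else 0


def accuracy(predict: Callable[[Sequence[float]], int],
             rows: List[List[float]],
             labels: List[int]) -> float:
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(predict: Callable[[Sequence[float]], int],
                           rows: List[List[float]],
                           labels: List[int],
                           seed: int = 0) -> List[float]:
    """Accuracy drop per feature after shuffling that feature's column."""
    rng = random.Random(seed)
    base = accuracy(predict, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(base - accuracy(predict, shuffled, labels))
    return importances


data_rng = random.Random(42)
rows = [[data_rng.random() for _ in range(3)] for _ in range(200)]
labels = [model(r) for r in rows]  # synthetic labels taken from the model itself
importances = permutation_importance(model, rows, labels)
print(importances)
```

The unused third feature gets an importance of exactly zero, and the first feature, which carries most of the weight in the decision rule, shows the largest drop.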

Transparent AI

Transparent AI in Explainable Artificial Intelligence (XAI) prioritizes clarity by providing interpretable models that reveal decision-making processes, contrasting with traditional Artificial Intelligence systems often seen as opaque black boxes. This transparency enhances trust, accountability, and the ability to diagnose errors, crucial for applications in healthcare, finance, and legal sectors where understanding AI rationale is essential.

Algorithmic Bias Detection

Algorithmic bias detection in Artificial Intelligence systems identifies and mitigates unfair data patterns that can lead to skewed decision-making, whereas Explainable Artificial Intelligence (XAI) enhances transparency by providing interpretable insights into how algorithms reach specific conclusions. Integrating XAI techniques improves trust and accountability by enabling stakeholders to understand, scrutinize, and correct biases embedded within AI models.
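As one concrete example of a bias check, the sketch below computes per-group selection rates and their gap, often called the demographic parity difference. The group labels and predictions are made-up data, and this is only one of several fairness metrics. Which metric is appropriate depends on the application.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, int]  # (group label, model prediction: 1 = positive outcome)


def selection_rates(records: List[Record]) -> Dict[str, float]:
    """Share of positive predictions within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for group, prediction in records:
        counts[group][0] += prediction
        counts[group][1] += 1
    return {group: pos / total for group, (pos, total) in counts.items()}


def demographic_parity_difference(records: List[Record]) -> float:
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical predictions for two groups of four applicants each.
records = [("A", 1), ("A", 1), ("A", 1), ("A", 0),
           ("B", 1), ("B", 0), ("B", 0), ("B", 0)]
rates = selection_rates(records)
gap = demographic_parity_difference(records)
print(rates, gap)
```

A large gap does not prove unfair treatment on its own, but it flags where explanation techniques such as feature attribution should be applied to see what drives the difference.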

Artificial Intelligence vs Explainable Artificial Intelligence Infographic

industrydif.com
