Data is raw, unprocessed facts collected from various sources; information is the meaningful interpretation of that data, with context and relevance added. Data observability focuses on monitoring and understanding the state and behavior of the data infrastructure, whereas information observability emphasizes the accuracy, quality, and usability of the insights derived from that data. Managing both supports reliable decision-making and operational efficiency.

Table of Comparison

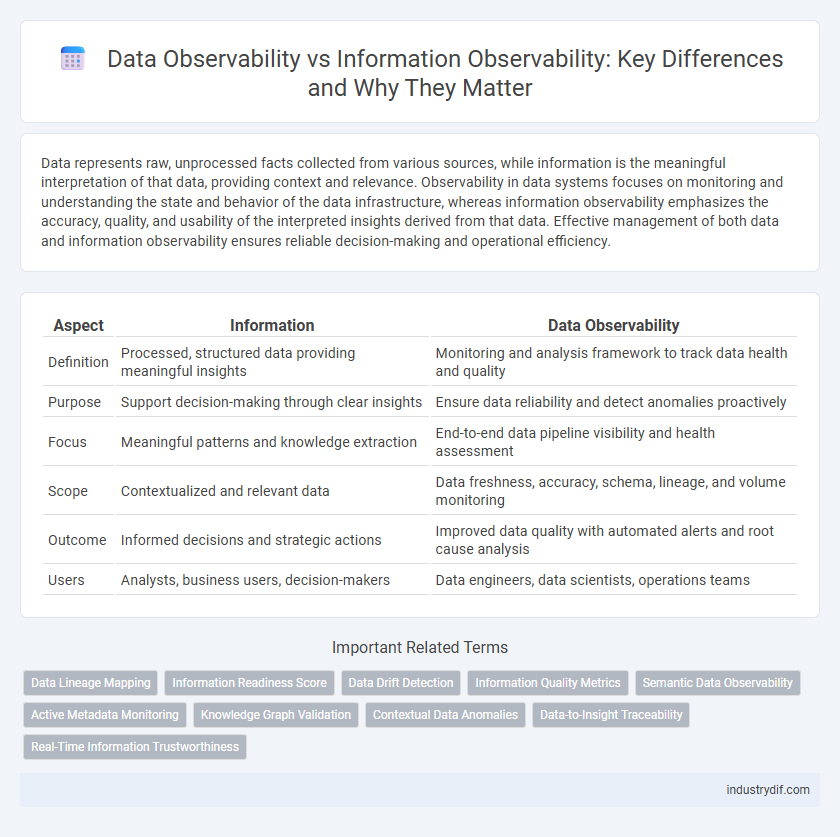

| Aspect | Information | Data Observability |
|---|---|---|
| Definition | Processed, structured data providing meaningful insights | Monitoring and analysis framework to track data health and quality |
| Purpose | Support decision-making through clear insights | Ensure data reliability and detect anomalies proactively |
| Focus | Meaningful patterns and knowledge extraction | End-to-end data pipeline visibility and health assessment |
| Scope | Contextualized and relevant data | Data freshness, accuracy, schema, lineage, and volume monitoring |
| Outcome | Informed decisions and strategic actions | Improved data quality with automated alerts and root cause analysis |
| Users | Analysts, business users, decision-makers | Data engineers, data scientists, operations teams |

Defining Data vs. Information in Modern Enterprises

Data represents raw, unprocessed facts collected from various sources within modern enterprises, such as transaction logs, sensor outputs, or user activities. Information emerges when data is organized, analyzed, and interpreted to provide meaningful insights that support decision-making and strategic planning. Distinguishing between data and information is crucial for establishing effective data observability frameworks that ensure data quality, reliability, and relevance throughout enterprise systems.

The Rise of Data Observability: Why It Matters

Data observability is transforming how organizations manage and trust their data by enabling real-time visibility into data quality, anomalies, and lineage. Unlike traditional information management, data observability provides continuous monitoring and proactive alerting to detect and resolve issues before they impact business decisions. The rise of data observability is crucial as it enhances data reliability, reduces downtime, and supports data-driven strategies essential for competitive advantage.

Information Observability: Ensuring Trustworthy Insights

Information observability centers on monitoring, measuring, and understanding the quality and reliability of information within systems so that the resulting insights can be trusted. It involves tracking lineage, context, and completeness, enabling organizations to detect anomalies and validate accuracy in real time. This framework supports informed decision-making by providing transparency into data transformations and protecting the integrity of information used for analytics and business intelligence.

Key Metrics in Data vs. Information Observability

Data observability centers on monitoring key metrics such as data freshness, volume, and schema changes to ensure data quality and reliability in pipelines. Information observability emphasizes the contextual relevance, accuracy, and usability of data as it transforms into actionable insights. Key metrics in information observability include trust scores, impact analysis, and lineage tracking to measure the effectiveness of data in decision-making processes.
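The three data observability metrics named above (freshness, volume, and schema changes) can be sketched in a few lines of Python. This is a minimal illustration, not any particular platform's implementation: the function names, the `{column: type}` schema representation, and the sample values are all assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone

def freshness_seconds(last_loaded_at, now):
    """Seconds elapsed since the table last received data."""
    return (now - last_loaded_at).total_seconds()

def volume_change_ratio(current_rows, previous_rows):
    """Relative change in row count compared with the previous load."""
    if previous_rows == 0:
        return float("inf") if current_rows > 0 else 0.0
    return (current_rows - previous_rows) / previous_rows

def schema_changes(expected, observed):
    """Compare two {column: type} mappings and report the differences."""
    shared = set(expected) & set(observed)
    return {
        "added": sorted(set(observed) - set(expected)),
        "removed": sorted(set(expected) - set(observed)),
        "retyped": sorted(c for c in shared if expected[c] != observed[c]),
    }

# Hypothetical values for illustration only
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_load = now - timedelta(hours=2)
print(freshness_seconds(last_load, now))   # 7200.0
print(volume_change_ratio(80, 100))        # -0.2
print(schema_changes({"id": "int", "name": "str"},
                     {"id": "str", "email": "str"}))
```

In practice each metric would be compared against a threshold or a learned baseline before an alert is raised; the sketch only shows how the raw numbers might be derived.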

Core Components of Data Observability Platforms

Data observability platforms integrate core components such as data quality monitoring, lineage tracking, and anomaly detection to ensure reliable data insights. These systems continuously assess data freshness, accuracy, and consistency across complex pipelines. By automating alerting and root cause analysis, data observability enhances visibility and trust in data environments.
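As one illustration of the anomaly detection component, the sketch below flags a new row count that deviates strongly from recent history using a z-score. The z-score approach and the threshold of 3 standard deviations are assumptions chosen for simplicity; real platforms may use seasonal models or other techniques.

```python
from statistics import mean, stdev

def is_anomalous(history, value, threshold=3.0):
    """Return True if value is more than `threshold` standard deviations
    away from the mean of the historical observations."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical daily row counts for one table
daily_rows = [1000, 1020, 980, 1010, 990]
print(is_anomalous(daily_rows, 1005))  # False
print(is_anomalous(daily_rows, 400))   # True
```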

Information Quality: Beyond Data Accuracy

Information quality goes beyond data accuracy to include completeness, relevance, and timeliness, all of which are needed for actionable insights. High-quality information carries contextual understanding and metadata, which supports robust decision-making. Observability frameworks continuously monitor information flows to detect anomalies and help maintain consistent data integrity across systems.

Challenges in Scaling Observability Across Data and Information

Scaling observability across data and information presents challenges including data heterogeneity, volume, and velocity, which complicate consistent monitoring and analysis. Integrating diverse data sources from structured and unstructured formats requires robust metadata management and real-time processing capabilities. Ensuring data quality, lineage, and context while maintaining performance and scalability demands advanced observability frameworks and cross-functional collaboration.

Real-World Use Cases: Data vs. Information Observability

Data observability enables organizations to monitor data health and detect pipeline issues through metrics, logs, and traces, crucial for maintaining data quality in ETL processes and data warehouses. Information observability extends beyond raw data by focusing on the usability, context, and actionable insights derived from data, enhancing decision-making in sectors like finance, healthcare, and e-commerce. Real-world use cases demonstrate that data observability drives operational reliability, while information observability supports strategic business intelligence and adaptive analytics.

Integrating Observability into Data Pipelines

Integrating observability into data pipelines enhances real-time monitoring, anomaly detection, and data quality assessment, bridging the gap between raw data and actionable information. Data observability tools track data health across ingestions, transformations, and outputs, enabling proactive issue resolution and ensuring data reliability. This integration fosters transparency and operational efficiency by providing a semantic layer that clarifies data lineage and context for data-driven decision-making.
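One lightweight way to track data health across ingestion, transformation, and output is to wrap each pipeline stage so that it records what went in and what came out. The sketch below assumes stages are plain Python functions that take and return a list of rows; the decorator name `observed`, the in-memory `RUN_LOG`, and the sample stages are hypothetical.

```python
import time
from functools import wraps

RUN_LOG = []  # in a real system this would go to a metrics store

def observed(stage_name):
    """Decorator that records row counts and duration for a pipeline stage."""
    def decorator(func):
        @wraps(func)
        def wrapper(rows):
            started = time.perf_counter()
            result = func(rows)
            RUN_LOG.append({
                "stage": stage_name,
                "rows_in": len(rows),
                "rows_out": len(result),
                "seconds": time.perf_counter() - started,
            })
            return result
        return wrapper
    return decorator

@observed("ingest")
def ingest(rows):
    return list(rows)

@observed("transform")
def drop_missing_amounts(rows):
    return [r for r in rows if r.get("amount") is not None]

cleaned = drop_missing_amounts(ingest([{"amount": 10}, {"amount": None}]))
for entry in RUN_LOG:
    print(entry["stage"], entry["rows_in"], entry["rows_out"])
```

A sudden gap between `rows_in` and `rows_out` at one stage is the kind of signal that can trigger proactive investigation before downstream consumers are affected.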

Future Trends in Information and Data Observability

Future trends in information and data observability emphasize automated anomaly detection powered by AI and machine learning, enabling real-time insights and proactive issue resolution. The integration of observability tools with data governance platforms ensures enhanced data quality, compliance, and trust across distributed systems. Emerging standards in metadata management and observability APIs will drive interoperability and scalability in complex data environments.

Related Important Terms

Data Lineage Mapping

Data lineage mapping visually represents the flow and transformation of data across systems, enabling accurate tracking of data origins and dependencies for enhanced data observability. Effective data lineage provides transparency and context, crucial for validating data quality, ensuring compliance, and facilitating root cause analysis in complex data environments.
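Lineage is naturally modeled as a directed graph. The sketch below assumes a simple dictionary that maps each dataset to the datasets it is built from, then walks the graph to find everything upstream of a given asset, which is the core step in lineage-based root cause analysis. The dataset names are invented for the example.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets it is derived from
LINEAGE = {
    "revenue_dashboard": ["daily_revenue"],
    "daily_revenue": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
    "orders_raw": [],
    "fx_rates": [],
}

def upstream(dataset, lineage):
    """Return every dataset that `dataset` depends on, directly or indirectly."""
    seen = set()
    queue = deque(lineage.get(dataset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.add(node)
        queue.extend(lineage.get(node, []))
    return seen

print(sorted(upstream("revenue_dashboard", LINEAGE)))
# ['daily_revenue', 'fx_rates', 'orders_clean', 'orders_raw']
```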

Information Readiness Score

An Information Readiness Score measures the accuracy, completeness, and timeliness of information, enabling organizations to assess quality and reliability in real time. Unlike traditional data observability, which focuses on data health metrics, this score also incorporates semantic validation and contextual relevance, so that it reflects whether the information is fit for actionable insights and informed decision-making.
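The text does not define a formula for this score, so the following is only one plausible sketch: a weighted average of accuracy, completeness, and timeliness, each expressed as a value between 0 and 1. The function name, the weights, and the choice of a weighted average are all assumptions made for illustration.

```python
def readiness_score(accuracy, completeness, timeliness, weights=(0.4, 0.3, 0.3)):
    """Weighted average of three quality components, each in [0, 1].

    The default weights are arbitrary placeholders, not a standard.
    """
    components = (accuracy, completeness, timeliness)
    if any(not 0.0 <= c <= 1.0 for c in components):
        raise ValueError("each component must be between 0 and 1")
    total_weight = sum(weights)
    return sum(w * c for w, c in zip(weights, components)) / total_weight

# Hypothetical component values for one report
score = readiness_score(accuracy=0.9, completeness=0.8, timeliness=0.5)
print(round(score, 2))  # 0.75
```

An organization would normally tune the weights to reflect which dimension matters most for a given use case, for example weighting timeliness more heavily for operational dashboards.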

Data Drift Detection

Data observability enables continuous monitoring of data pipelines to detect anomalies and inconsistencies, while information observability focuses on the quality and context of insights derived from that data. Effective data drift detection relies on data observability tools that track statistical changes over time, alerting teams to shifts that could impact model accuracy and decision-making processes.
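One widely used way to track statistical change over time is the Population Stability Index (PSI), which compares how a numeric feature is distributed across bins in a baseline sample versus a current sample. The sketch below is a plain-Python version that assumes equal-width bins derived from the baseline range; the bin count and the small floor used to avoid taking the logarithm of zero are illustrative choices.

```python
import math

def psi(baseline, current, bins=10):
    """Population Stability Index between two numeric samples."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0)
        return [max(c / len(values), 1e-6) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))

# Hypothetical feature values: the current sample is shifted upward
baseline = list(range(100))
shifted = [v + 50 for v in baseline]
print(psi(baseline, baseline))        # 0.0
print(psi(baseline, shifted) > 0.25)  # True
```

A commonly cited rule of thumb treats PSI values above roughly 0.25 as a significant shift, though the appropriate threshold depends on the model and the feature.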

Information Quality Metrics

Information quality metrics cover accuracy, completeness, consistency, and timeliness, and indicate whether observed data can reliably be turned into actionable insights. Monitoring these metrics helps organizations maintain high information integrity, supporting better decision-making and operational efficiency.

Semantic Data Observability

Semantic Data Observability enhances traditional data observability by focusing on the meaning and context of data to identify anomalies, data quality issues, and lineage more effectively. This approach leverages metadata, data relationships, and business logic, enabling deeper insights and proactive management of information integrity across complex data ecosystems.

Active Metadata Monitoring

Active metadata monitoring enhances data observability by continuously collecting and analyzing metadata across data pipelines, enabling real-time detection of anomalies and insights into data quality. This approach provides organizations with actionable intelligence to improve data reliability, governance, and compliance throughout the data lifecycle.

Knowledge Graph Validation

Knowledge graph validation enhances information quality by verifying data accuracy, consistency, and completeness within interconnected datasets, enabling robust semantic analysis. Effective observability frameworks monitor real-time data flows and anomalies, ensuring the integrity and reliability of knowledge graphs for advanced decision-making.
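A basic form of this validation can be sketched over subject-predicate-object triples: check that every subject and object refers to a known entity, that every predicate is in the allowed vocabulary, and that no triple is duplicated. The entity names, the predicate set, and the specific checks are assumptions for the example; production systems typically rely on richer schema or constraint languages.

```python
def validate_triples(triples, entities, predicates):
    """Return a list of (triple, reason) pairs for triples that fail basic checks."""
    problems = []
    seen = set()
    for triple in triples:
        subject, predicate, obj = triple
        if subject not in entities:
            problems.append((triple, "unknown subject"))
        if predicate not in predicates:
            problems.append((triple, "unknown predicate"))
        if obj not in entities:
            problems.append((triple, "unknown object"))
        if triple in seen:
            problems.append((triple, "duplicate"))
        seen.add(triple)
    return problems

# Hypothetical graph content
entities = {"acme", "alice"}
predicates = {"employs"}
triples = [
    ("acme", "employs", "alice"),
    ("acme", "employs", "bob"),    # "bob" is not a known entity
    ("acme", "employs", "alice"),  # exact duplicate of the first triple
]
for triple, reason in validate_triples(triples, entities, predicates):
    print(triple, reason)
```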

Contextual Data Anomalies

Information observability emphasizes understanding contextual data anomalies by analyzing patterns within the specific environment where data is generated, enabling more accurate detection of irregularities. Data observability typically focuses on raw data quality metrics but may overlook contextual nuances necessary for identifying anomalies that impact decision-making processes.
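To make the distinction concrete, the sketch below keeps a separate baseline for each context (here, a hypothetical "day" versus "night" label) rather than one global baseline. The same value can then be normal in one context and anomalous in another. The context labels, sample values, and z-score threshold are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean, stdev

def build_baselines(observations):
    """Group (context, value) pairs and compute mean and standard deviation per context."""
    grouped = defaultdict(list)
    for context, value in observations:
        grouped[context].append(value)
    return {c: (mean(v), stdev(v)) for c, v in grouped.items() if len(v) >= 2}

def is_contextual_anomaly(context, value, baselines, threshold=3.0):
    """Judge a value against the baseline of its own context only."""
    if context not in baselines:
        return False  # no baseline available for this context
    mu, sigma = baselines[context]
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical order counts per hour, labeled by time of day
history = [("night", 10), ("night", 12), ("night", 11),
           ("day", 100), ("day", 110), ("day", 105)]
baselines = build_baselines(history)
print(is_contextual_anomaly("day", 100, baselines))    # False
print(is_contextual_anomaly("night", 100, baselines))  # True
```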

Data-to-Insight Traceability

Data observability focuses on monitoring data quality and system health, while information observability emphasizes the traceability from raw data to actionable insights, enabling organizations to understand how data transformations impact decision-making. Effective data-to-insight traceability ensures transparency and trust by linking data lineage, metadata, and analytics outcomes throughout the data lifecycle.

Real-Time Information Trustworthiness

Real-time information trustworthiness depends on continuous monitoring of data observability metrics such as accuracy, completeness, timeliness, and consistency to ensure reliable decision-making. Effective information observability platforms enable immediate detection of anomalies, data drift, and quality issues, enhancing the integrity of real-time insights.

Information vs Data Observability Infographic

industrydif.com
