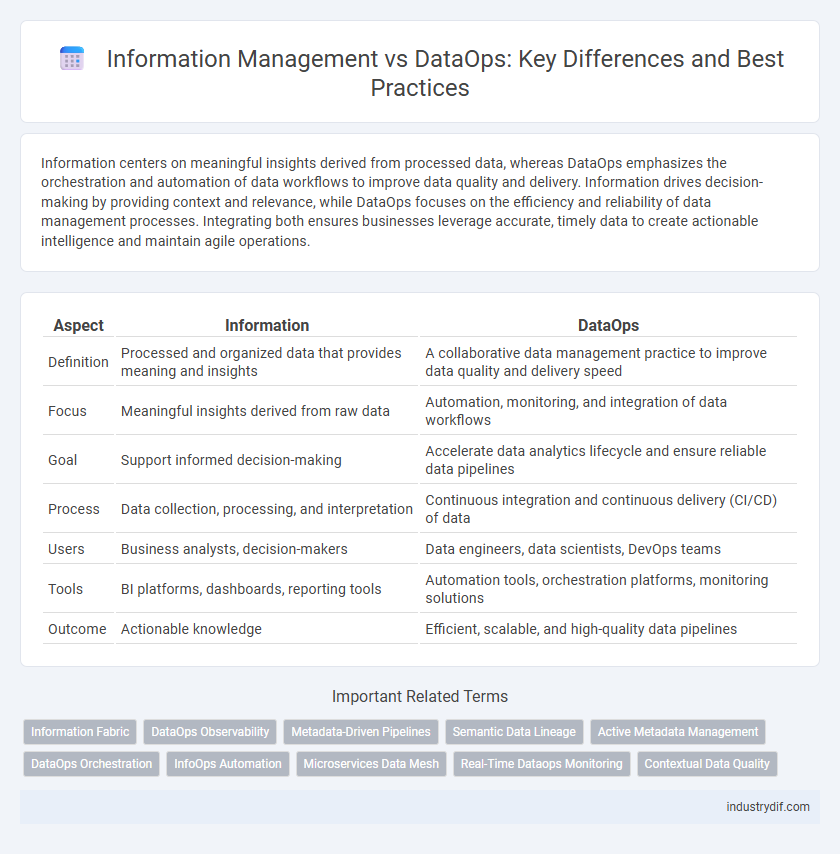

Information centers on meaningful insights derived from processed data, whereas DataOps emphasizes the orchestration and automation of data workflows to improve data quality and delivery. Information drives decision-making by providing context and relevance, while DataOps focuses on the efficiency and reliability of data management processes. Integrating both ensures businesses leverage accurate, timely data to create actionable intelligence and maintain agile operations.

Table of Comparison

| Aspect | Information | DataOps |
|---|---|---|
| Definition | Processed and organized data that provides meaning and insights | A collaborative data management practice to improve data quality and delivery speed |
| Focus | Meaningful insights derived from raw data | Automation, monitoring, and integration of data workflows |
| Goal | Support informed decision-making | Accelerate data analytics lifecycle and ensure reliable data pipelines |
| Process | Data collection, processing, and interpretation | Continuous integration and continuous delivery (CI/CD) of data |
| Users | Business analysts, decision-makers | Data engineers, data scientists, DevOps teams |
| Tools | BI platforms, dashboards, reporting tools | Automation tools, orchestration platforms, monitoring solutions |
| Outcome | Actionable knowledge | Efficient, scalable, and high-quality data pipelines |

Understanding Information and DataOps: Key Definitions

Information represents processed, organized data that conveys meaningful insights and supports decision-making, while DataOps is a collaborative data management practice designed to enhance data quality, integration, and delivery through automation and agile methodologies. Understanding Information requires recognizing its role as actionable knowledge derived from raw data, whereas DataOps focuses on streamlining data workflows to ensure timely, reliable access to that information. Key definitions emphasize information as the end-product utilized by businesses, with DataOps providing the framework that optimizes how data is collected, cleansed, and deployed for strategic use.

The Evolution from Data Management to DataOps

The evolution from traditional data management to DataOps reflects a paradigm shift towards agile, automated, and collaborative data workflows that enhance data quality and accelerate analytics delivery. DataOps integrates principles from DevOps and Agile methodologies, emphasizing continuous integration, continuous delivery, and proactive monitoring within data pipelines. This transformation enables organizations to respond swiftly to changing data requirements and supports scalable, real-time data operations.

Information Management: Core Principles and Practices

Information Management centers on the systematic collection, organization, and governance of data to transform it into actionable insights that support strategic decision-making. Core principles include data quality, metadata management, data security, and compliance, ensuring information accuracy, accessibility, and reliability across the enterprise. Unlike DataOps, which emphasizes agile data pipeline automation and integration, Information Management focuses on overarching policies and frameworks to sustain long-term information value and integrity.

DataOps in Modern Analytics Pipelines

DataOps streamlines modern analytics pipelines by integrating agile development, automated testing, and continuous integration to enhance data quality and delivery speed. It enables seamless collaboration between data engineers, analysts, and operations teams, ensuring reliable and scalable data workflows. Implementing DataOps reduces errors and accelerates insights, making it essential for efficient, real-time data analytics in complex environments.
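
As a rough illustration of the automated testing mentioned above, the sketch below validates a batch of rows before it moves further down a pipeline. The dataset, the field names (`order_id`, `amount`), and the `validate_rows` helper are all hypothetical; a real pipeline would likely rely on a dedicated data testing tool rather than hand-written checks.

```python
from typing import Any

# Hypothetical rules for an illustrative "orders" dataset.
REQUIRED_FIELDS = ("order_id", "amount")


def validate_rows(rows: list[dict[str, Any]]) -> list[str]:
    """Return a list of problems found; an empty list means the batch passes."""
    problems = []
    seen_ids = set()
    for index, row in enumerate(rows):
        # Every required field must be present and non-null.
        for field in REQUIRED_FIELDS:
            if row.get(field) is None:
                problems.append(f"row {index}: missing {field}")
        # Order identifiers must be unique within the batch.
        order_id = row.get("order_id")
        if order_id is not None:
            if order_id in seen_ids:
                problems.append(f"row {index}: duplicate order_id {order_id}")
            seen_ids.add(order_id)
        # Amounts, when numeric, must not be negative.
        amount = row.get("amount")
        if isinstance(amount, (int, float)) and amount < 0:
            problems.append(f"row {index}: negative amount {amount}")
    return problems


good_batch = [{"order_id": 1, "amount": 10.0}, {"order_id": 2, "amount": 5.5}]
bad_batch = [{"order_id": 1, "amount": -3}, {"order_id": 1, "amount": None}]

good_problems = validate_rows(good_batch)
bad_problems = validate_rows(bad_batch)
```

A continuous integration job could run a check like this on every change and stop the deployment whenever the returned list is not empty.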

Comparing Roles: Information Architect vs. DataOps Engineer

An Information Architect designs and organizes information structures to enhance user experience and data accessibility, ensuring coherent content management across digital platforms. A DataOps Engineer focuses on automating data workflows, ensuring data quality, and integrating data pipelines to optimize analytics and operational efficiency. While Information Architects prioritize structuring and accessibility of information, DataOps Engineers emphasize data reliability, scalability, and real-time processing capabilities.

Benefits of DataOps Over Traditional Information Handling

DataOps enhances traditional information handling by enabling continuous data integration, automated quality checks, and faster delivery cycles, resulting in improved data accuracy and reliability. Its collaborative approach reduces bottlenecks and accelerates decision-making by streamlining workflows between data engineers, analysts, and business stakeholders. DataOps also increases scalability and adaptability, supporting real-time analytics and dynamic data environments more effectively than conventional methods.

Challenges in Integrating Information Management and DataOps

Integrating Information Management and DataOps presents challenges such as ensuring consistent data governance frameworks across agile DataOps pipelines and traditional information repositories. Synchronizing metadata standards and maintaining data quality during continuous integration and deployment often complicate workflows. Scalability issues arise when aligning real-time data processing with comprehensive information lifecycle management policies.

Governance and Compliance: Information vs. DataOps Approaches

Information governance emphasizes structured policies and controls to ensure data quality, privacy, and regulatory compliance, prioritizing accountability and risk management throughout the data lifecycle. DataOps integrates governance into agile data workflows by automating compliance checks and monitoring, enabling continuous adherence to regulations while accelerating data delivery. Both approaches aim to balance stringent governance requirements with operational efficiency, but DataOps leverages automation to embed compliance seamlessly within iterative data processes.
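
One way to picture an automated compliance check is a gate that inspects a dataset's schema before publication. The sketch below is only an assumption about how such a gate might look: the `Column` class, the `contains_pii` and `masked` flags, and the sample schema are invented for illustration and do not correspond to any particular governance product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    """Hypothetical schema entry carrying governance flags."""
    name: str
    contains_pii: bool = False
    masked: bool = False


def find_unmasked_pii(columns: list[Column]) -> list[str]:
    """Return the names of columns flagged as PII that are not masked."""
    return [c.name for c in columns if c.contains_pii and not c.masked]


schema = [
    Column("customer_id"),
    Column("email", contains_pii=True, masked=True),
    Column("phone", contains_pii=True),
]

violations = find_unmasked_pii(schema)
# A pipeline step could refuse to publish the dataset when violations exist.
publish_allowed = not violations
```

Here the policy itself (which columns count as sensitive) still comes from information governance; the automation only enforces it on every run.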

Real-World Use Cases: Information Management vs. DataOps

Information management focuses on organizing, storing, and securing enterprise data to ensure accuracy and accessibility, demonstrated by healthcare organizations streamlining patient records. DataOps emphasizes automation, continuous integration, and real-time data processing, with retail companies optimizing supply chain forecasting through agile data pipelines. Real-world use cases highlight information management's role in compliance and governance, while DataOps drives innovation and operational efficiency in dynamic data environments.

Future Trends: Bridging Information and DataOps Strategies

Emerging trends emphasize the integration of information management with DataOps strategies to enhance data agility and governance. Automation, AI-driven analytics, and real-time data processing are pivotal in bridging traditional information frameworks with modern DataOps workflows. This convergence drives more efficient data lifecycle management, promoting innovation and faster decision-making across enterprises.

Related Important Terms

Information Fabric

Information Fabric integrates diverse data sources and analytics to create a cohesive, real-time information ecosystem that enhances decision-making and operational efficiency. Unlike DataOps, which emphasizes data pipeline automation and quality, Information Fabric focuses on seamless data accessibility, governance, and contextual intelligence across the enterprise.

DataOps Observability

DataOps Observability integrates real-time monitoring, analytics, and automated alerts to enhance data pipeline reliability and performance, enabling rapid issue detection and resolution. This approach surpasses traditional Information management by providing end-to-end visibility, reducing downtime, and optimizing data quality across complex data workflows.
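
A minimal sketch of the automated alerting idea, assuming two simple signals: how long ago a pipeline last finished and how many rows it produced. The `RunStats` class, the `check_run` function, and the thresholds are hypothetical; production observability tools track many more signals.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RunStats:
    """Hypothetical summary of the most recent pipeline run."""
    pipeline: str
    finished_at: datetime
    row_count: int


def check_run(stats: RunStats, now: datetime, max_age: timedelta, min_rows: int) -> list[str]:
    """Return alert messages for stale data or an unexpectedly small output."""
    alerts = []
    if now - stats.finished_at > max_age:
        alerts.append(f"{stats.pipeline}: data is stale")
    if stats.row_count < min_rows:
        alerts.append(f"{stats.pipeline}: row count {stats.row_count} below {min_rows}")
    return alerts


stats = RunStats("orders_daily", datetime(2024, 1, 1, 6, 0), row_count=120)

# Six hours after the run, with a two-hour freshness limit: both checks fire.
late_alerts = check_run(stats, datetime(2024, 1, 1, 12, 0), timedelta(hours=2), min_rows=1000)

# One hour after the run, with a lower row threshold: no alerts.
healthy_alerts = check_run(stats, datetime(2024, 1, 1, 7, 0), timedelta(hours=2), min_rows=100)
```

Passing `now` in as an argument, rather than reading the clock inside the function, keeps the check easy to test.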

Metadata-Driven Pipelines

Metadata-driven pipelines in Information Management leverage data about data to automate and optimize workflows, enhancing accuracy and scalability compared to traditional DataOps approaches. By integrating metadata at every pipeline stage, organizations achieve improved data lineage, governance, and real-time adaptability, driving more efficient decision-making processes.
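
To make the idea concrete, the sketch below drives a small pipeline entirely from a metadata list instead of hard-coded calls. The step names, the `STEP_REGISTRY` mapping, and the `PIPELINE_METADATA` structure are assumptions made for this example; real platforms usually store such metadata in a catalog or configuration files.

```python
from typing import Any, Callable

Row = dict[str, Any]


def drop_nulls(rows: list[Row], column: str) -> list[Row]:
    """Keep only rows where the given column has a value."""
    return [r for r in rows if r.get(column) is not None]


def rename(rows: list[Row], source: str, target: str) -> list[Row]:
    """Rename one column in every row."""
    return [{(target if k == source else k): v for k, v in r.items()} for r in rows]


# Registry of reusable steps that metadata entries can refer to by name.
STEP_REGISTRY: dict[str, Callable[..., list[Row]]] = {
    "drop_nulls": drop_nulls,
    "rename": rename,
}

# Hypothetical metadata: the pipeline's behavior is described as data.
PIPELINE_METADATA = [
    {"step": "drop_nulls", "params": {"column": "email"}},
    {"step": "rename", "params": {"source": "email", "target": "contact_email"}},
]


def run_pipeline(rows: list[Row], metadata: list[dict[str, Any]]) -> list[Row]:
    """Apply each step named in the metadata, in order."""
    for entry in metadata:
        step = STEP_REGISTRY[entry["step"]]
        rows = step(rows, **entry["params"])
    return rows


result = run_pipeline(
    [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": None}],
    PIPELINE_METADATA,
)
```

Changing the pipeline then means editing the metadata, not the code, which is what makes lineage and governance easier to derive from the same description.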

Semantic Data Lineage

Semantic Data Lineage enhances Information management by providing detailed context and meaning to data flows, enabling accurate tracking of data transformations and usage across the lifecycle. Unlike DataOps, which emphasizes operational automation and process efficiency, Semantic Data Lineage focuses on enriching metadata with semantic relationships to improve data governance, compliance, and trustworthiness.
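
A small sketch can show what lineage enriched with relationships might look like. The dataset names, the relationship labels, and the `upstream_sources` helper below are hypothetical, and the dictionary stands in for what would normally live in a metadata catalog.

```python
# Hypothetical lineage: each dataset maps to (upstream dataset, relationship) pairs.
LINEAGE = {
    "revenue_dashboard": [("monthly_revenue", "visualizes")],
    "monthly_revenue": [("orders_clean", "aggregates")],
    "orders_clean": [("orders_raw", "deduplicates"), ("currency_rates", "converts_with")],
}


def upstream_sources(dataset: str, lineage: dict[str, list[tuple[str, str]]]) -> list[str]:
    """Return every upstream dataset reachable from `dataset`, in discovery order."""
    found: list[str] = []
    stack = [dataset]
    while stack:
        current = stack.pop()
        for parent, _relation in lineage.get(current, []):
            if parent not in found:
                found.append(parent)
                stack.append(parent)
    return found


sources = upstream_sources("revenue_dashboard", LINEAGE)
```

The relationship labels are what make the lineage semantic: they record how each dataset depends on its parents, not merely that it does.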

Active Metadata Management

Active Metadata Management enhances Information systems by dynamically cataloging, indexing, and governing metadata to improve data discovery, quality, and lineage. DataOps leverages Active Metadata to automate workflows, enabling agile, continuous integration and delivery of data pipelines that ensure reliable, real-time information processing.

DataOps Orchestration

DataOps Orchestration automates the integration, testing, and deployment of data pipelines, enhancing data quality and accelerating analytics workflows. By coordinating tools and processes across data engineering, it ensures continuous data delivery and real-time insights in dynamic data environments.
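
The coordination described above can be sketched with Python's standard `graphlib` module, which orders tasks so that each one runs after its dependencies. The task names and the `run_all` helper are hypothetical, and real orchestration platforms add scheduling, retries, and parallelism on top of this basic idea.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DEPENDENCIES = {
    "validate": {"extract"},
    "transform": {"validate"},
    "publish": {"transform"},
}

run_log: list[str] = []


def make_task(name: str):
    """Build a placeholder task that only records that it ran."""
    def task() -> None:
        run_log.append(name)
    return task


TASKS = {name: make_task(name) for name in ("extract", "validate", "transform", "publish")}


def run_all(dependencies: dict[str, set[str]], tasks: dict) -> list[str]:
    """Run every task after its dependencies and return the order used."""
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order


execution_order = run_all(DEPENDENCIES, TASKS)
```

Declaring dependencies rather than a fixed sequence lets the same description cover pipelines whose steps branch or join.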

InfoOps Automation

InfoOps Automation enhances operational efficiency by integrating real-time data analysis with automated workflows, enabling organizations to manage the information lifecycle more effectively than traditional DataOps frameworks. This approach leverages AI-driven insights and automated governance to streamline data quality, security, and compliance processes, optimizing overall information management.

Microservices Data Mesh

Information architecture in Microservices Data Mesh prioritizes decentralized data ownership and domain-oriented design, enabling scalable and agile data management. DataOps complements this by automating data workflows, ensuring continuous integration and delivery of quality data across distributed microservices environments.

Real-Time DataOps Monitoring

Real-Time DataOps monitoring enables continuous tracking, validation, and optimization of data pipelines, ensuring data accuracy, timeliness, and reliability across platforms. Implementing advanced analytics and automated alerts in DataOps reduces latency and accelerates decision-making, differentiating it from traditional information management approaches.

Contextual Data Quality

Information leverages contextual data quality to transform raw data into meaningful insights, ensuring accuracy, relevance, and timeliness tailored to specific use cases. DataOps emphasizes automation and collaboration in managing data pipelines, but without strong contextual data quality governance, the derived information may lack reliability and actionable value.
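
The point that quality depends on the use case can be illustrated with a short sketch in which the same record passes one context's rules and fails another's. The contexts, field names, and thresholds in `CONTEXT_RULES` are invented for illustration.

```python
from typing import Any

# Hypothetical quality rules that differ by use case.
CONTEXT_RULES = {
    "fraud_detection": {
        "max_age_minutes": 5,
        "required": ("account_id", "amount", "ip_address"),
    },
    "monthly_reporting": {
        "max_age_minutes": 60 * 24 * 31,
        "required": ("account_id", "amount"),
    },
}


def fit_for_use(record: dict[str, Any], age_minutes: int, context: str) -> bool:
    """Check whether a record is complete and fresh enough for the given context."""
    rules = CONTEXT_RULES[context]
    has_fields = all(record.get(field) is not None for field in rules["required"])
    return has_fields and age_minutes <= rules["max_age_minutes"]


record = {"account_id": "A-17", "amount": 250.0}

# The same two-hour-old record suits reporting but not fraud detection.
ok_for_reporting = fit_for_use(record, age_minutes=120, context="monthly_reporting")
ok_for_fraud = fit_for_use(record, age_minutes=120, context="fraud_detection")
```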

Information vs DataOps Infographic

industrydif.com
