Big Data involves processing vast, complex datasets that require advanced analytics and machine learning to uncover patterns and insights. Small Data refers to manageable, structured datasets that can be analyzed using traditional methods for quick, specific decision-making. Understanding the differences between Big Data and Small Data helps businesses choose the right tools and strategies for data-driven success.

Table of Comparison

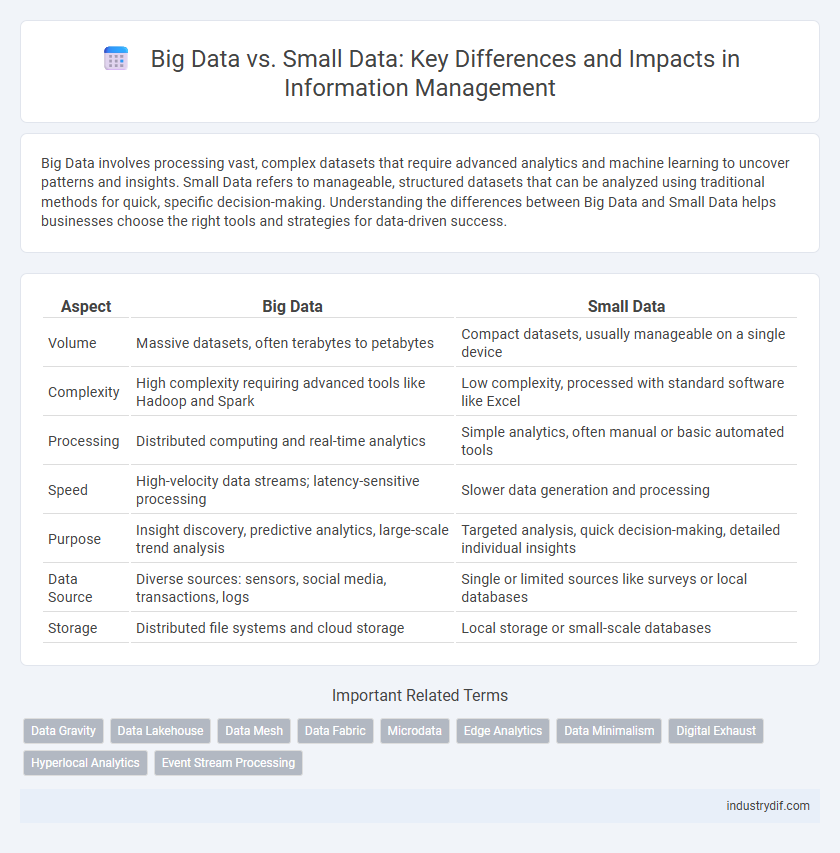

| Aspect | Big Data | Small Data |

|---|---|---|

| Volume | Massive datasets, often terabytes to petabytes | Compact datasets, usually manageable on a single device |

| Complexity | High complexity requiring advanced tools like Hadoop and Spark | Low complexity, processed with standard software like Excel |

| Processing | Distributed computing and real-time analytics | Simple analytics, often manual or basic automated tools |

| Speed | High-velocity data streams; latency-sensitive processing | Slower data generation and processing |

| Purpose | Insight discovery, predictive analytics, large-scale trend analysis | Targeted analysis, quick decision-making, detailed individual insights |

| Data Source | Diverse sources: sensors, social media, transactions, logs | Single or limited sources like surveys or local databases |

| Storage | Distributed file systems and cloud storage | Local storage or small-scale databases |

Understanding Big Data: Definition and Scope

Big Data refers to extremely large datasets characterized by high volume, velocity, and variety, requiring advanced analytics and processing technologies like Hadoop and Spark to extract meaningful insights. Its scope extends across multiple domains, including healthcare, finance, and marketing, enabling predictive modeling and real-time decision-making. Unlike Small Data, which is manageable with traditional tools and focuses on specific, precise datasets, Big Data deals with vast, complex information that demands scalable storage and computational power.

Defining Small Data: Key Characteristics

Small data is characterized by its manageable volume, typically measured in megabytes to gigabytes, enabling straightforward analysis without extensive computing resources. It emphasizes high-quality, structured, and context-rich datasets that provide specific insights tailored to niche applications or individual user experiences. Unlike big data, small data is readily interpretable, allowing for quick decision-making and actionable intelligence in localized and specialized environments.

Big Data vs Small Data: Core Differences

Big Data encompasses massive, complex datasets generated at high velocity from diverse sources, requiring advanced analytics and distributed computing for processing. Small Data refers to manageable, structured datasets that can be easily analyzed with traditional tools and methods, focusing on accuracy and relevance for specific business needs. Core differences include volume, variety, velocity, and the technology needed, highlighting Big Data's reliance on scalable storage and machine learning, whereas Small Data emphasizes direct, actionable insights from concise information.

Use Cases for Big Data in Industry

Big Data drives transformative use cases in industries such as healthcare, where predictive analytics improve patient outcomes by analyzing vast datasets. In retail, Big Data enables personalized marketing and inventory optimization through real-time consumer behavior insights. Manufacturing benefits from Big Data by enhancing predictive maintenance and supply chain efficiency using sensor data and advanced analytics.

Small Data Applications and Benefits

Small data applications enhance decision-making by delivering precise, actionable insights from manageable datasets, enabling businesses to tailor customer experiences and optimize operations efficiently. These applications require less storage and computational power, making real-time analysis accessible for smaller enterprises and improving agility. Benefits include increased data accuracy, faster processing times, and the ability to address specific problems with targeted, relevant information.

Data Volume, Variety, and Velocity: A Comparative Analysis

Big Data encompasses vast volumes of structured and unstructured data from diverse sources, enabling comprehensive analytics across high-variety datasets, while Small Data involves limited, well-defined datasets with lower complexity. The velocity of Big Data demands real-time or near-real-time processing using advanced technologies like stream analytics and distributed computing, contrasting with Small Data's slower ingestion rates manageable through traditional databases. This comparative analysis highlights the scalability challenges and opportunities in handling data volume, variety, and velocity unique to Big Data versus the simplicity and specificity of Small Data.

Privacy and Security Concerns in Big and Small Data

Big Data presents significant privacy risks due to the vast volume and variety of data collected, increasing the likelihood of unauthorized access and data breaches. Small Data, while more manageable in size, still requires stringent security measures to protect sensitive information from targeted cyber threats. Both Big and Small Data demand robust encryption, access controls, and compliance with data protection regulations to ensure privacy and maintain user trust.

Tools and Technologies for Managing Big Data

Big Data management relies on advanced tools such as Apache Hadoop, Spark, and Kafka to process vast volumes of diverse, high-velocity data efficiently. These technologies enable distributed storage and parallel processing, ensuring scalability and real-time analytics crucial for big data environments. In contrast, small data management often uses traditional database systems like MySQL or Microsoft Access, which are optimized for structured, manageable datasets.

Efficient Solutions for Small Data Analytics

Small data analytics delivers efficient solutions by focusing on manageable datasets that require less computational power and storage compared to big data. This approach leverages precise, high-quality information enabling faster decision-making and lower costs in data processing. Techniques such as descriptive statistics, traditional machine learning, and data visualization optimize analytics for small data environments.

Choosing Between Big Data and Small Data for Business

Big Data offers extensive datasets with high volume, velocity, and variety, enabling businesses to identify complex patterns and drive predictive analytics. Small Data provides focused, easily manageable datasets that support quick decision-making with high accuracy in specific contexts. Selecting between Big Data and Small Data depends on business goals, resource availability, and the need for granular insights versus broad trend analysis.

Related Important Terms

Data Gravity

Big Data, characterized by vast volumes and high velocity, generates substantial Data Gravity that attracts applications, services, and analytics to centralized locations due to the complexity and cost of moving large datasets. Small Data, while more manageable in size and easier to process locally, exerts less Data Gravity, enabling faster decision-making at the edge but limiting centralized insights gained from aggregated datasets.

Data Lakehouse

Data Lakehouse integrates the scale and flexibility of Big Data with the structure and management of Small Data, enabling unified analytics and improved data governance. This architecture supports diverse workloads by combining data warehouse schema management with data lake scalability, enhancing performance and reducing data redundancy.

Data Mesh

Data Mesh revolutionizes data architecture by decentralizing big data management into domain-oriented, self-serve data pipelines, contrasting traditional centralized small data systems that often limit scalability. Emphasizing data as a product, Data Mesh enhances data accessibility, quality, and ownership across organizations, optimizing analytics and decision-making processes.

Data Fabric

Data Fabric integrates big data and small data sources into a unified architecture, enabling seamless data access, governance, and analytics across diverse environments. It enhances data agility by automating data discovery, integration, and enrichment, supporting real-time decision-making in complex data ecosystems.

Microdata

Microdata represents a subset of big data, emphasizing fine-grained, highly detailed information at the individual or entity level, enabling precise analysis and personalized insights. Unlike big data's extensive volume and variety, microdata's structured format facilitates efficient querying and targeted decision-making in data-driven environments.

Edge Analytics

Edge analytics processes big data locally on devices or edge servers, reducing latency and bandwidth usage while enabling real-time insights. Small data complements this by focusing on specific, high-value datasets that drive immediate decision-making without overwhelming system resources.

Data Minimalism

Big Data involves analyzing vast and complex datasets to uncover hidden patterns and trends, while Small Data emphasizes simplicity and relevance by focusing on concise, high-quality information that drives precise decision-making. Data minimalism prioritizes extracting actionable insights from minimal datasets, reducing noise and enhancing efficiency in data processing and interpretation.

Digital Exhaust

Digital exhaust generates vast volumes of Big Data through user interactions, device sensors, and online activities, creating complex datasets that require advanced analytics and storage solutions. Small Data, in contrast, consists of concise, specific information sets derived from digital exhaust used for targeted decision-making and immediate business insights.

Hyperlocal Analytics

Hyperlocal analytics leverages small data collected from specific geographic locations to deliver precise, actionable insights tailored to local consumer behaviors, contrasting with big data's broad, aggregated datasets typically used for macro-level trend analysis. By focusing on context-rich, granular information, hyperlocal analytics enhances decision-making in retail, urban planning, and personalized marketing at the neighborhood level.

Event Stream Processing

Event Stream Processing (ESP) excels in handling real-time Big Data by continuously analyzing and processing vast streams of events for immediate insights, unlike Small Data, which typically involves static, historical datasets better suited for batch analysis. The scalability and speed of ESP enable enterprises to detect patterns and anomalies instantly, driving timely decision-making across applications like fraud detection, IoT monitoring, and financial trading.

Big Data vs Small Data Infographic

industrydif.com

industrydif.com