Artificial intelligence (AI) relies on traditional computing architectures to process data and perform tasks through algorithms and neural networks, enabling diverse applications from image recognition to natural language processing. Neuromorphic computing imitates the human brain's neural structure by integrating neurons and synapses in hardware, aiming to achieve greater efficiency and adaptability in processing complex sensory information. This emerging paradigm promises lower power consumption and faster real-time learning compared to conventional AI models, potentially transforming scientific research and technology development.

Table of Comparison

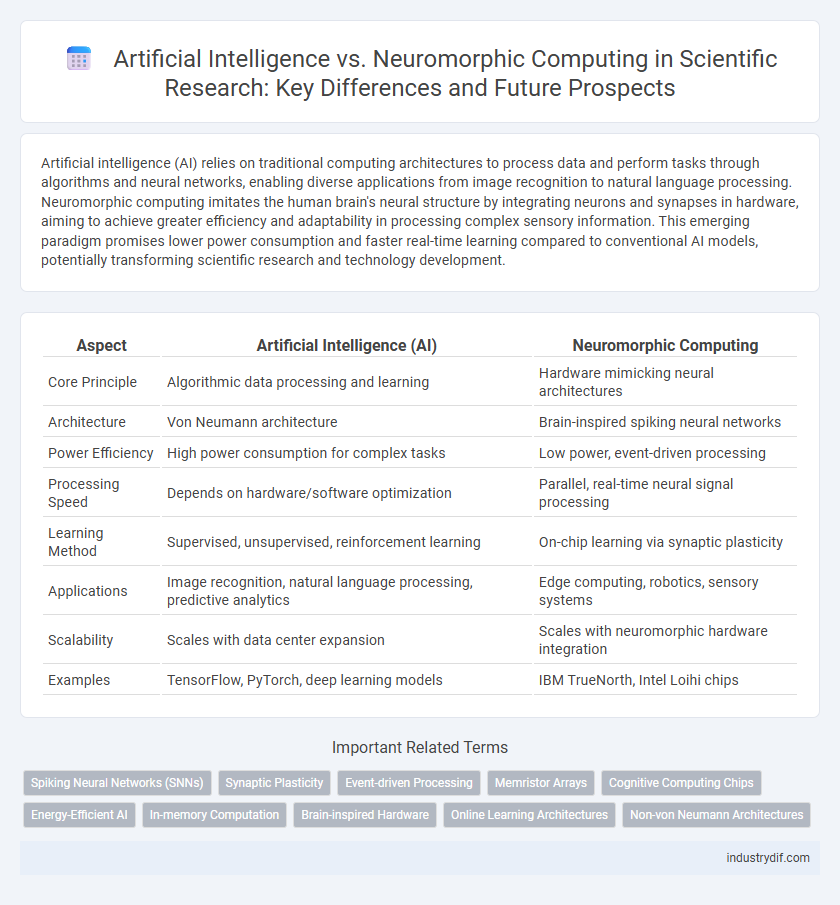

| Aspect | Artificial Intelligence (AI) | Neuromorphic Computing |
|---|---|---|
| Core Principle | Algorithmic data processing and learning | Hardware mimicking neural architectures |
| Architecture | Von Neumann architecture | Brain-inspired spiking neural networks |
| Power Efficiency | High power consumption for complex tasks | Low power, event-driven processing |
| Processing Speed | Depends on hardware/software optimization | Parallel, real-time neural signal processing |
| Learning Method | Supervised, unsupervised, reinforcement learning | On-chip learning via synaptic plasticity |
| Applications | Image recognition, natural language processing, predictive analytics | Edge computing, robotics, sensory systems |
| Scalability | Scales with data center expansion | Scales with neuromorphic hardware integration |
| Examples | TensorFlow, PyTorch, deep learning models | IBM TrueNorth, Intel Loihi chips |

Introduction to Artificial Intelligence and Neuromorphic Computing

Artificial Intelligence (AI) involves the development of algorithms and models that enable machines to perform tasks requiring human-like intelligence, such as learning, reasoning, and decision-making. Neuromorphic computing, inspired by the structure and function of biological neural networks, aims to create hardware systems that mimic the brain's efficiency and adaptability for processing information. Integrating AI with neuromorphic architectures offers promising avenues for enhancing computational speed, energy efficiency, and cognitive capabilities in machine intelligence.

Fundamental Principles of Artificial Intelligence

Artificial Intelligence (AI) relies on algorithmic models and data-driven learning, using artificial neural networks that are inspired by biological brain structures but implemented on traditional semiconductor hardware. These models emphasize pattern recognition, optimization, and probabilistic inference, processing large datasets to extract meaningful representations. Core principles involve supervised, unsupervised, and reinforcement learning paradigms, which let machines generalize knowledge and adapt to new information by updating the parameters of learned functions.
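As a minimal sketch of what "updating the parameters of a learned function" can look like in supervised learning, the example below fits a straight line by batch gradient descent on mean squared error. The function name `fit_line`, the learning rate, the epoch count, and the toy data are all illustrative assumptions, not taken from any particular framework.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b by batch gradient descent on mean squared error.

    lr and epochs are illustrative values chosen for this toy dataset.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy labeled data generated from y = 2x + 1 (hypothetical example)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
```

On this noise-free data the learned `w` and `b` approach 2 and 1. Deep learning frameworks apply the same idea to models with far more parameters.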

Neuromorphic Computing: Biological Inspiration and Design

Neuromorphic computing mimics the architecture and dynamics of biological neural systems, using spiking neural networks and event-driven processing to achieve efficient, low-power computation. By emulating synaptic plasticity and neuronal communication, neuromorphic hardware can adapt on-chip while it runs, whereas conventional AI models are typically trained offline and deployed with fixed weights. The use of memristors and silicon neurons enables hardware-level parallelism and fault tolerance, paving the way for brain-inspired computing systems with enhanced cognitive functions.
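To make the idea of a spiking neuron concrete, here is a small software sketch of a leaky integrate-and-fire (LIF) neuron, a common simplified neuron model. It is only an illustration: the function name `simulate_lif`, the time constant, the threshold, and the input values are assumptions, and real neuromorphic chips implement such dynamics in circuits rather than in Python.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Return the step indices at which a leaky integrate-and-fire neuron spikes.

    All parameter values are illustrative assumptions in arbitrary units.
    """
    v = v_rest
    spikes = []
    for step, i_in in enumerate(input_current):
        # Euler step of dv/dt = (-(v - v_rest) + i_in) / tau:
        # the membrane potential leaks toward rest and integrates the input
        v += dt * (-(v - v_rest) + i_in) / tau
        if v >= v_thresh:
            spikes.append(step)  # emit a spike event
            v = v_reset          # reset the membrane after firing
    return spikes


# Weak input settles below threshold, so the neuron stays silent
silent = simulate_lif([0.5] * 100)
# Stronger input drives the neuron to fire at a regular interval
active = simulate_lif([2.0] * 100)
```

The neuron produces output only when its membrane potential crosses the threshold, which is the basis of the event-driven behavior described above.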

Architecture Comparison: Traditional AI vs. Neuromorphic Systems

Traditional AI architectures rely on von Neumann designs with separate memory and processing units, so data must move back and forth between the two, which adds latency and costs energy. Neuromorphic systems emulate the brain's neural networks by integrating memory and computation, enabling parallel processing and lower power consumption. This architectural difference can give neuromorphic computing an advantage in tasks that require real-time learning and adaptation.

Computational Models and Learning Algorithms

Artificial Intelligence primarily relies on digital computational models such as deep neural networks that utilize backpropagation-based learning algorithms to optimize performance across diverse tasks. Neuromorphic computing, inspired by the architecture of biological neural systems, employs spiking neural networks and event-driven processing to achieve energy-efficient, real-time learning through local plasticity rules like spike-timing-dependent plasticity. Comparing these paradigms reveals that AI models excel in large-scale data-driven pattern recognition, whereas neuromorphic approaches offer advantages in adaptive learning and low-power hardware implementation for sensory-motor integration.
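A local plasticity rule such as spike-timing-dependent plasticity (STDP) can be sketched in a few lines. The version below uses the common pair-based exponential form; the function name `stdp_delta`, the amplitudes, and the time constants are illustrative assumptions rather than values from any specific chip or paper.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012,
               tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair under pair-based STDP.

    Times are in milliseconds; all constants are illustrative assumptions.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: strengthen the synapse (potentiation)
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: weaken the synapse (depression)
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


causal = stdp_delta(t_pre=10.0, t_post=15.0)       # positive change
anti_causal = stdp_delta(t_pre=15.0, t_post=10.0)  # negative change
distant = stdp_delta(t_pre=10.0, t_post=40.0)      # smaller positive change
```

Unlike backpropagation, the update depends only on the spike times of the two neurons a synapse connects, which is what makes the rule suitable for on-chip learning.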

Energy Efficiency and Scalability in Computing Paradigms

Artificial Intelligence (AI) systems typically rely on traditional von Neumann architectures, where the separation of memory and processing units drives up energy consumption and limits scalability. Neuromorphic computing emulates neural networks in specialized hardware circuits, improving energy efficiency through parallel data processing and on-chip learning. In principle, this lets neuromorphic systems scale to complex, large-scale tasks while consuming significantly less power than conventional AI architectures, although hardware scalability remains an open challenge.

Real-World Applications: Use Cases and Performance

Artificial Intelligence excels in diverse real-world applications such as natural language processing, image recognition, and predictive analytics, leveraging vast datasets and cloud computing for high accuracy. Neuromorphic computing mimics neural structures to optimize energy efficiency and real-time processing, proving advantageous in robotics, autonomous systems, and edge devices where low latency is critical. Neuromorphic systems can outperform traditional AI in power consumption and processing speed for specific sensory and adaptive tasks, yet AI maintains broader versatility across complex data environments.

Hardware Implementations and Technological Advancements

Artificial Intelligence hardware implementations primarily rely on traditional silicon-based processors, such as GPUs and TPUs, optimized for parallel processing and deep learning algorithms. Neuromorphic computing advances leverage brain-inspired architectures using memristors and spiking neural networks, enabling energy-efficient computation and real-time sensory data processing. Technological innovations in neuromorphic chips, like IBM's TrueNorth and Intel's Loihi, demonstrate reduced power consumption and enhanced adaptability compared to conventional AI accelerators.

Challenges and Limitations in Both Approaches

Artificial Intelligence faces significant challenges, including vast computational resource demands and difficulty generalizing from limited data, while neuromorphic computing struggles with hardware scalability and with emulating the brain's complex synaptic plasticity. Both approaches also face practical limits: AI models often require intensive power consumption, and neuromorphic systems have yet to achieve widespread practical implementation. Addressing these issues is crucial to advancing cognitive computing efficiency and real-world applicability.

Future Directions and Convergence Opportunities

Future directions in artificial intelligence emphasize enhancing algorithmic efficiency and scalability through advanced machine learning models, while neuromorphic computing focuses on mimicking biological neural networks to achieve low-power, high-speed processing. Convergence opportunities arise from integrating AI's data-driven adaptability with neuromorphic architectures' inherent parallelism and energy efficiency, potentially transforming edge computing and real-time data analysis. Leveraging neuromorphic hardware to accelerate AI inference and developing hybrid systems may drive breakthroughs in autonomous systems and brain-inspired technologies.

Related Important Terms

Spiking Neural Networks (SNNs)

Spiking Neural Networks (SNNs) represent a paradigm shift from traditional artificial intelligence by mimicking the temporal dynamics and event-driven processing of biological neurons, enabling energy-efficient and real-time computation. Neuromorphic computing architectures leveraging SNNs offer significant advantages in low-power consumption and unsupervised learning capabilities compared to conventional deep learning models reliant on static activation functions and gradient-based optimization.

Synaptic Plasticity

Synaptic plasticity in artificial intelligence is primarily modeled through weight adjustments in neural networks, enabling learning via backpropagation algorithms. Neuromorphic computing, inspired by biological neural systems, utilizes hardware-based synaptic plasticity with memristive devices to emulate real-time adaptive learning and energy-efficient processing.

Event-driven Processing

Artificial Intelligence primarily relies on continuous, clock-driven processing for tasks such as pattern recognition and decision-making. Neuromorphic computing, inspired by biological neural networks, utilizes event-driven processing to enhance energy efficiency and real-time responsiveness by activating computation only upon receiving specific input signals.
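The difference between the two processing styles can be illustrated by counting how much work each does on the same input. In this sketch, a clock-driven system handles every sample, while an event-driven one handles a sample only when it changes by more than a threshold. The function name `to_events`, the threshold, and the toy signal are assumptions made for illustration.

```python
def to_events(samples, threshold=0.1):
    """Emit (index, value) only when the input moves more than `threshold`
    away from the last emitted value (a simple change-detection scheme)."""
    events = []
    last = None
    for i, x in enumerate(samples):
        if last is None or abs(x - last) > threshold:
            events.append((i, x))
            last = x
    return events


# Toy signal: constant at 0.0, then a single step up to 1.0
samples = [0.0] * 50 + [1.0] * 50

clock_ops = len(samples)      # clock-driven: one operation per tick
events = to_events(samples)
event_ops = len(events)       # event-driven: one operation per change
```

For this mostly static signal the event-driven path performs 2 operations against 100 for the clock-driven path. The saving shrinks as the input becomes busier.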

Memristor Arrays

Memristor arrays play a critical role in advancing neuromorphic computing by enabling energy-efficient, parallel data processing that mimics neural synapses, contrasting with traditional artificial intelligence architectures reliant on von Neumann systems. These arrays facilitate real-time learning and adaptation, significantly reducing latency and power consumption compared to conventional AI hardware, thereby enhancing scalability in brain-inspired cognitive tasks.
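One way to see why such arrays compute in parallel is to model an idealized crossbar: each memristor stores a weight as a conductance, input voltages are applied to the rows, and each column wire sums the resulting currents. By Ohm's law and Kirchhoff's current law, this yields a matrix-vector product in one step. The sketch below is a simplified software model that ignores wire resistance, noise, and device nonlinearity; the function name and the values are hypothetical.

```python
def crossbar_currents(conductances, voltages):
    """Column currents of an idealized memristor crossbar.

    conductances[i][j] is the conductance (siemens) between input row i
    and output column j. Each column current is I_j = sum_i V_i * G_ij.
    """
    n_cols = len(conductances[0])
    return [
        sum(v * row[j] for v, row in zip(voltages, conductances))
        for j in range(n_cols)
    ]


# Hypothetical 2x2 array: conductances in siemens, voltages in volts
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.5, 1.0]
I = crossbar_currents(G, V)  # currents in amperes, one per column
```

In hardware, all column currents settle simultaneously, so the multiply-accumulate happens where the weights are stored instead of after fetching them from separate memory.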

Cognitive Computing Chips

Cognitive computing chips in artificial intelligence utilize traditional von Neumann architectures optimized for parallel processing and machine learning algorithms, enabling efficient handling of structured data and pattern recognition. Neuromorphic computing chips mimic neural architectures through spiking neural networks and event-driven processing, offering lower power consumption and real-time adaptation for sensory and cognitive tasks.

Energy-Efficient AI

Neuromorphic computing mimics the brain's architecture to achieve significant reductions in energy consumption compared to traditional artificial intelligence models based on deep learning algorithms. This energy-efficient AI approach leverages event-driven processing and spiking neural networks, enabling scalable, low-power solutions for real-time cognitive tasks in edge computing and IoT devices.

In-memory Computation

In-memory computation in artificial intelligence processes data within memory units on traditional silicon-based hardware, which increases speed and reduces energy consumption compared to conventional von Neumann systems. Neuromorphic computing mimics neural structures by integrating memory and processing elements at the hardware level, enabling highly efficient, parallel data processing that can outperform conventional AI hardware in energy use and latency on pattern recognition and sensory data tasks.

Brain-inspired Hardware

Artificial Intelligence relies on algorithmic processing for complex tasks, while neuromorphic computing emulates brain-inspired hardware architectures using spiking neural networks to achieve energy-efficient and real-time computation. Brain-inspired hardware leverages memristors and silicon neurons to replicate synaptic plasticity, providing significant advantages in sensory processing and adaptive learning compared to conventional AI systems.

Online Learning Architectures

Artificial Intelligence leverages deep learning algorithms for pattern recognition and decision-making, whereas Neuromorphic Computing mimics the brain's architecture using spiking neural networks to enable efficient event-driven processing. Online learning architectures in AI rely on continuous data ingestion and adaptive model updates, while neuromorphic systems facilitate real-time learning with low power consumption through synaptic plasticity mechanisms.
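On the conventional AI side, "continuous data ingestion and adaptive model updates" can be sketched as a model that adjusts its weights after every incoming sample instead of retraining on a full dataset. The example uses the least-mean-squares (Widrow-Hoff) update on a linear model; the class name `OnlineLinearModel`, the learning rate, and the toy stream are assumptions for illustration.

```python
class OnlineLinearModel:
    """Linear model updated one sample at a time with the LMS rule."""

    def __init__(self, n_features, lr=0.1):
        self.w = [0.0] * n_features
        self.lr = lr  # illustrative learning rate

    def predict(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def update(self, x, y):
        # Move each weight in proportion to the prediction error and its input
        err = y - self.predict(x)
        self.w = [wi + self.lr * err * xi for wi, xi in zip(self.w, x)]
        return err


# Toy stream generated by the hypothetical target weights [2.0, -1.0]
stream = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 1.0)] * 200

model = OnlineLinearModel(n_features=2)
for x, y in stream:
    model.update(x, y)  # the model adapts as each sample arrives
```

After the stream is consumed, `model.w` is close to `[2.0, -1.0]`. A neuromorphic system would pursue the same goal with local synaptic updates in hardware rather than an explicit error computation in software.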

Non-von Neumann Architectures

Non-von Neumann architectures, exemplified by neuromorphic computing, mimic the brain's parallel and event-driven processing to overcome the bottlenecks of traditional von Neumann systems in artificial intelligence tasks. These architectures leverage spiking neural networks and localized memory to achieve higher energy efficiency and speed in pattern recognition and sensory data processing compared to conventional AI models running on processors that keep memory and computation separate.

Artificial Intelligence vs Neuromorphic Computing Infographic

industrydif.com
