Data analysis involves processing and interpreting large datasets to uncover patterns, trends, and insights essential for scientific research. Explainable Artificial Intelligence (XAI) enhances this process by providing transparent, interpretable models that help scientists understand how AI systems reach their conclusions. Combining data analysis with XAI helps scientists pursue both accuracy and accountability in data-driven decision-making.

Table of Comparison

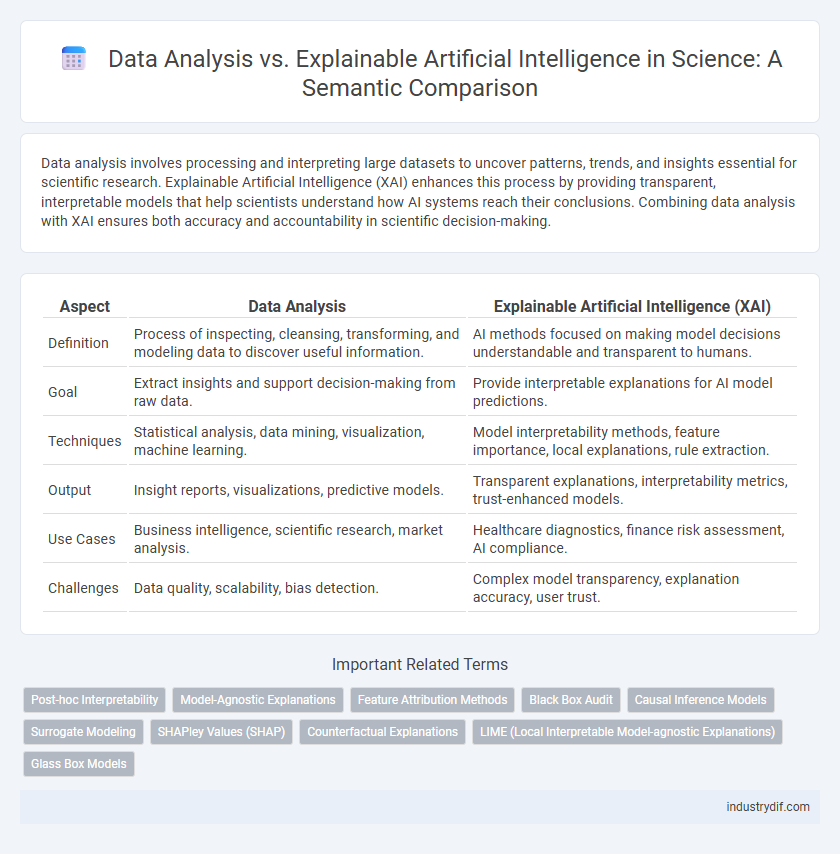

| Aspect | Data Analysis | Explainable Artificial Intelligence (XAI) |
|---|---|---|
| Definition | Process of inspecting, cleansing, transforming, and modeling data to discover useful information. | AI methods focused on making model decisions understandable and transparent to humans. |
| Goal | Extract insights and support decision-making from raw data. | Provide interpretable explanations for AI model predictions. |
| Techniques | Statistical analysis, data mining, visualization, machine learning. | Model interpretability methods, feature importance, local explanations, rule extraction. |
| Output | Insight reports, visualizations, predictive models. | Transparent explanations, interpretability metrics, trust-enhanced models. |
| Use Cases | Business intelligence, scientific research, market analysis. | Healthcare diagnostics, finance risk assessment, AI compliance. |
| Challenges | Data quality, scalability, bias detection. | Complex model transparency, explanation accuracy, user trust. |

Defining Data Analysis in Scientific Research

Data analysis in scientific research is the systematic application of statistical and logical techniques to interpret raw data, uncover patterns, and derive meaningful insights that support hypothesis testing and theory development. It relies on methods such as descriptive statistics, inferential statistics, and data visualization, applied in ways that keep results accurate and reproducible. Unlike explainable artificial intelligence, which focuses on making machine learning models transparent, data analysis emphasizes the rigorous examination of empirical evidence to validate scientific phenomena.
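
As a minimal sketch of the descriptive and inferential statistics mentioned above, the Python below summarizes a sample and computes Welch's t statistic for two independent groups using only the standard library. The function names and the measurements in the usage note are hypothetical. Turning the statistic into a p-value needs the t-distribution CDF, which the `statistics` module does not provide.

```python
import math
import statistics


def describe(sample):
    """Descriptive statistics: sample size, central tendency, and spread."""
    return {
        "n": len(sample),
        "mean": statistics.fmean(sample),
        "median": statistics.median(sample),
        "stdev": statistics.stdev(sample),  # sample standard deviation (n - 1 denominator)
    }


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom
    for the difference in means of two independent samples."""
    va = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    vb = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

With `control = [4.1, 3.9, 4.3, 4.0, 4.2]` and `treated = [4.6, 4.8, 4.5, 4.9, 4.7]`, `welch_t(control, treated)` gives t ≈ -6.0 with about 8 degrees of freedom.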

Fundamentals of Explainable Artificial Intelligence (XAI)

Explainable Artificial Intelligence (XAI) focuses on creating transparent models that provide interpretable insights into complex data-driven decisions, enhancing trust and accountability in AI systems. Unlike traditional data analysis, which primarily emphasizes extracting patterns and statistical relationships, XAI prioritizes understanding the reasoning behind AI outputs through techniques such as feature importance, rule extraction, and model-agnostic interpretation methods. Core principles of XAI include fidelity, interpretability, and usability, ensuring models not only perform accurately but also offer clear explanations accessible to both experts and non-experts.

Key Differences Between Data Analysis and XAI

Data analysis primarily focuses on extracting patterns and insights from raw data using statistical and computational techniques, whereas Explainable Artificial Intelligence (XAI) emphasizes transparency and interpretability in AI models so that their decision-making processes are understandable to humans. XAI treats model explainability as a fundamental requirement, supporting trust and accountability in AI-driven predictions. The key difference is that XAI relies on inherently interpretable models or post-hoc explanation methods, whereas data analysis covers a broader scope of exploratory and confirmatory work with no inherent requirement for model transparency.

Methodologies Employed in Data Analysis

Data analysis methodologies primarily include statistical techniques, machine learning algorithms, and data mining processes that extract meaningful patterns from complex datasets. Regression analysis quantifies relationships between variables, clustering groups similar observations, and principal component analysis (PCA) reduces dimensionality by projecting data onto a few directions of maximal variance, which can make the structure of a dataset easier to inspect. These methods provide a foundation that complements explainable artificial intelligence by producing transparent, interpretable summaries of raw data.

Core Techniques in Explainable AI

Core techniques in Explainable Artificial Intelligence (XAI) include model-agnostic methods such as LIME (Local Interpretable Model-agnostic Explanations), which approximates a complex model around a single prediction with a simple, interpretable surrogate, and SHAP (SHapley Additive exPlanations), which attributes each prediction to the input features using Shapley values from cooperative game theory. Feature importance analysis identifies the variables that most influence model predictions, and attention mechanisms in deep learning architectures are sometimes inspected for the same purpose, although attention weights are not always faithful explanations. Counterfactual explanations and rule-based approaches further help characterize decision boundaries and reasoning processes within AI systems.
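
Feature importance analysis can be made concrete with permutation importance, one common model-agnostic variant: shuffle one feature column, re-score the model, and record how much the error grows. In this minimal Python sketch, `black_box` is a hypothetical stand-in for an opaque model, and mean squared error is assumed as the score.

```python
import random


def black_box(row):
    """Hypothetical opaque model: uses feature 0 strongly, feature 1 weakly,
    and ignores feature 2."""
    return 3.0 * row[0] + 0.5 * row[1]


def mse(model, X, y):
    """Mean squared error of the model's predictions on rows X."""
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances
```

If the targets are the model's own outputs, the baseline error is zero and the importances simply measure how much the model relies on each feature; with real labelled data, the same loop measures how much each feature contributes to predictive accuracy.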

Applications of Data Analysis in Scientific Studies

Data analysis plays a crucial role in scientific studies by enabling researchers to uncover patterns, validate hypotheses, and generate predictive models from complex datasets. Techniques such as statistical testing, machine learning, and data visualization facilitate in-depth understanding and interpretation of experimental results across fields like genomics, climate science, and biomedical research. Explainable Artificial Intelligence complements these efforts by providing transparent models that enhance trust and insight into data-driven scientific conclusions.

Role of XAI in Enhancing Scientific Discoveries

Explainable Artificial Intelligence (XAI) can significantly enhance scientific discovery by providing transparent insights into complex machine learning models, enabling researchers to validate and interpret results more effectively. Whereas traditional data analysis often relies on statistical correlations, XAI exposes the decision pathways of a model, showing which inputs drive its outputs; these explanations describe the model's learned associations and do not by themselves establish causal relationships in the underlying system. Used with that caveat, this interpretability can accelerate hypothesis generation and experimental design across scientific disciplines.

Interpretability and Transparency in AI Models

Data analysis techniques provide insights into patterns and relationships within datasets, but they do not by themselves explain how a complex AI model arrives at its outputs. Explainable Artificial Intelligence (XAI) emphasizes transparency by producing interpretable outputs, enabling users to understand model decisions through techniques such as feature importance, rule extraction, and visualizations. Incorporating XAI methods enhances trust and accountability by revealing how AI models map inputs to outputs, which analyzing the raw data alone cannot achieve.

Challenges in Merging Data Analysis with XAI

Merging data analysis with explainable artificial intelligence (XAI) presents challenges such as ensuring transparency while maintaining model accuracy and handling the complexity of interpreting high-dimensional data. Balancing the trade-off between explainability and predictive performance often requires advanced techniques like interpretable surrogate models or feature attribution methods. Moreover, integrating diverse data sources demands robust frameworks to harmonize data preprocessing with XAI's explainability constraints.

Future Trends: Integrating Data Analysis and Explainable AI

Future trends in data analysis and explainable artificial intelligence (XAI) emphasize the integration of complex data processing with transparent model interpretability to enhance decision-making in scientific research. Advances in hybrid models combining machine learning algorithms with rule-based explanations enable more accurate predictions while providing clear insights into underlying processes. This convergence is driving innovation in fields such as healthcare, climate science, and genomics by facilitating trust, reproducibility, and actionable knowledge from large datasets.

Related Important Terms

Post-hoc Interpretability

Post-hoc interpretability in Explainable Artificial Intelligence (XAI) explains complex AI models after they have been trained, providing human-understandable explanations without changing the model itself; traditional data analysis, by contrast, relies on direct inspection of structured datasets. Techniques such as feature importance ranking, SHAP values, and LIME bridge the gap between opaque model decisions and actionable insights, enhancing trust and transparency in AI-driven data analysis workflows.

Model-Agnostic Explanations

Model-agnostic explanations in explainable artificial intelligence (XAI) enable interpretation of complex black-box models by providing post-hoc insights that apply across different algorithms, because they only query a model's inputs and outputs. Techniques such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) quantify feature importance and offer model-independent explanations that help validate predictive outcomes in scientific research, although their results depend on choices such as the sampling scheme and the baseline and should be checked for stability.

Feature Attribution Methods

Feature attribution methods in data analysis quantify the contribution of individual variables to model predictions, enhancing interpretability and trust in complex algorithms. Explainable Artificial Intelligence (XAI) leverages these techniques, such as SHAP and LIME, to provide transparent insights into black-box models, enabling informed decision-making in scientific research.

Black Box Audit

Data analysis techniques provide quantitative insights by examining structured datasets, whereas explainable artificial intelligence (XAI) emphasizes transparency in black-box models through interpretable outputs and audit trails. A black-box audit in XAI systematically evaluates model behavior, supporting trustworthiness and compliance by revealing otherwise hidden decision-making processes within complex algorithms.

Causal Inference Models

Causal inference models in data analysis aim to identify cause-and-effect relationships rather than mere correlations, which requires explicit assumptions about how the data were generated (for example, that all confounding variables are measured). Combined with explainable artificial intelligence (XAI) systems, these models provide transparent frameworks that improve decision-making by revealing the mechanisms underlying observed data patterns.
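
One standard causal-inference tool is the backdoor adjustment (standardization) formula, which estimates the average effect of a treatment T on an outcome Y by averaging stratum-specific differences over the distribution of a confounder Z. The Python sketch below applies it to hypothetical aggregated counts constructed so that the naive comparison and the adjusted estimate disagree. It assumes Z is the only confounder and that every stratum contains both treated and untreated units.

```python
from collections import defaultdict

# Hypothetical aggregated records: (z, t, y, count), where z = 1 marks a severe
# case (the confounder), t = 1 means treated, and y = 1 means recovered.
DATA = [
    (0, 1, 1, 18), (0, 1, 0, 2),
    (0, 0, 1, 64), (0, 0, 0, 16),
    (1, 1, 1, 40), (1, 1, 0, 40),
    (1, 0, 1, 8), (1, 0, 0, 12),
]


def recovery_rate(records, t, z=None):
    """P(Y=1 | T=t), or P(Y=1 | T=t, Z=z) when z is given."""
    recovered = total = 0
    for zi, ti, yi, n in records:
        if ti == t and (z is None or zi == z):
            total += n
            recovered += n * yi
    return recovered / total


def naive_difference(records):
    """Unadjusted difference in recovery rates, treated minus untreated."""
    return recovery_rate(records, 1) - recovery_rate(records, 0)


def adjusted_effect(records):
    """Backdoor adjustment: sum over z of P(z) * [P(Y=1|T=1,z) - P(Y=1|T=0,z)]."""
    z_counts = defaultdict(int)
    for zi, _, _, n in records:
        z_counts[zi] += n
    total = sum(z_counts.values())
    return sum(
        (n_z / total) * (recovery_rate(records, 1, z) - recovery_rate(records, 0, z))
        for z, n_z in z_counts.items()
    )
```

On this data `naive_difference(DATA)` is -0.14, so treatment looks harmful because severe cases were treated more often, while `adjusted_effect(DATA)` is 0.10, matching the 10-point improvement seen within each stratum.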

Surrogate Modeling

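Surrogate modeling in explainable artificial intelligence (XAI) provides interpretable approximations of complex machine learning models to enhance transparency and trust. Because the original model still makes the predictions, its predictive performance is unaffected; the surrogate only simplifies the description of its behavior, so data analysts should check the surrogate's fidelity (how closely it reproduces the original model's outputs) before relying on the insights it yields.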
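
A minimal sketch of a global surrogate: a one-rule decision stump is fitted to the labels produced by a hypothetical black-box classifier (not to ground truth), and its fidelity is the fraction of inputs on which the two agree. The classifier, the stump form `x[j] > threshold`, and the use of in-sample agreement as the fidelity measure are all simplifying assumptions.

```python
import random


def black_box(row):
    """Hypothetical opaque classifier standing in for, e.g., a neural network."""
    return int(row[0] ** 2 + 0.3 * row[1] > 0.5)


def fit_stump(X, labels):
    """Fit the one-rule surrogate 'predict 1 if x[j] > threshold' that best
    reproduces the black box's labels. Returns (fidelity, feature, threshold)."""
    best = (0.0, 0, 0.0)
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            agree = sum(int(row[j] > threshold) == lab for row, lab in zip(X, labels))
            fidelity = agree / len(X)  # share of inputs where surrogate == black box
            if fidelity > best[0]:
                best = (fidelity, j, threshold)
    return best


rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(300)]
labels = [black_box(row) for row in X]  # query the black box, not ground truth
fidelity, feature, threshold = fit_stump(X, labels)
```

On uniformly sampled inputs like these, the stump should select feature 0 with a threshold near 0.6 and agree with the black box on roughly nine in ten points; the remaining disagreements mark exactly where the simple rule misrepresents the model.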

Shapley Values (SHAP)

SHAP (SHapley Additive exPlanations) enhances data analysis by providing transparent and interpretable explanations for complex machine learning models, using Shapley values from cooperative game theory to quantify each feature's contribution to an individual prediction. This explainable artificial intelligence technique helps bridge the gap between model accuracy and interpretability, enabling more trustworthy and actionable insights in scientific research.
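
The Shapley values behind SHAP can be computed exactly for a handful of features by enumerating every coalition, as in the Python sketch below. Defining a coalition's value by replacing absent features with a fixed baseline is one common choice and is an assumption here. Exact enumeration grows exponentially with the number of features, which is why practical tools such as the `shap` package rely on approximations or model-specific algorithms.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for the prediction model(x).

    A coalition's value is the model output with features outside the
    coalition set to their baseline values.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))  # marginal contribution of i
        phi.append(total)
    return phi
```

For the hypothetical model f(z) = 2·z0 + z1·z2 at x = [1, 2, 3] with an all-zero baseline, the attributions are [2, 3, 3]: feature 0 receives its additive term, and the interaction is split equally between features 1 and 2. They sum to f(x) - f(baseline) = 8, the efficiency property that SHAP relies on.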

Counterfactual Explanations

Counterfactual explanations in explainable artificial intelligence (XAI) show how minimal changes to the input data would alter a model's prediction, enhancing interpretability beyond traditional data analysis methods. Unlike conventional data analysis, which focuses on identifying patterns and correlations, counterfactuals offer actionable explanations that map a model's decision boundaries; they describe the model's behavior, not causal effects in the real world.
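
A counterfactual can be searched for directly. The Python sketch below, built around a hypothetical threshold classifier, grows the size of a single-feature change on a fixed grid until the decision flips, so the first hit is the smallest such change on that grid. Restricting changes to one feature and to a grid is a simplification; practical methods typically optimize over several features under plausibility constraints.

```python
def classifier(x):
    """Hypothetical model: decision 1 when a weighted score reaches 1.0."""
    return int(0.6 * x[0] + 0.4 * x[1] >= 1.0)


def single_feature_counterfactual(model, x, step=0.01, max_delta=2.0):
    """Smallest single-feature change (on a grid of `step`) that flips the decision.

    Returns (feature index, new value, signed change), or None if no change up
    to `max_delta` flips it. Ties go to the lower feature index, then to increases.
    """
    original = model(x)
    n_steps = int(round(max_delta / step))
    for k in range(1, n_steps + 1):  # try small changes before large ones
        for j in range(len(x)):
            for sign in (1, -1):
                candidate = list(x)
                candidate[j] = x[j] + sign * k * step
                if model(candidate) != original:
                    return j, candidate[j], sign * k * step
    return None
```

For `x = [1.0, 0.5]` (score 0.8, decision 0), the search returns feature 0 raised by 0.34 to 1.34; flipping the decision through feature 1 alone would require a change of 0.5.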

LIME (Local Interpretable Model-agnostic Explanations)

LIME (Local Interpretable Model-agnostic Explanations) enhances data analysis by providing transparent, interpretable insights into complex machine learning models, enabling researchers to understand feature contributions at a local prediction level. This method bridges the gap between predictive accuracy and model interpretability, crucial for validating scientific hypotheses and ensuring trustworthy AI-driven decisions.
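
The core idea of LIME can be sketched in plain Python: sample perturbations around one input, weight them by proximity with an exponential kernel, and fit a weighted linear model whose slopes serve as the local explanation. This is a simplified illustration rather than the `lime` package's algorithm; the Gaussian perturbations, kernel width, and hypothetical `black_box` function are assumptions, and real LIME usually works in an interpretable feature representation and may keep only a sparse subset of features.

```python
import math
import random


def black_box(x):
    """Hypothetical nonlinear model to be explained."""
    return math.sin(x[0]) + x[1] ** 2


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (M[r][n] - sum(M[r][k] * w[k] for k in range(r + 1, n))) / M[r][r]
    return w


def local_surrogate(model, x, n_samples=500, scale=0.1, width=0.1, seed=0):
    """LIME-style sketch: weighted least squares on perturbations around x.
    Returns the slopes of the local linear model (intercept dropped)."""
    rng = random.Random(seed)
    d = len(x)
    A = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates weighted F^T F
    b = [0.0] * (d + 1)                          # accumulates weighted F^T y
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        w = math.exp(-dist2 / width ** 2)        # closer samples count more
        features = [1.0] + z                     # intercept term + raw features
        y = model(z)
        for i in range(d + 1):
            b[i] += w * features[i] * y
            for j in range(d + 1):
                A[i][j] += w * features[i] * features[j]
    return solve(A, b)[1:]
```

Around x = [0, 1] the model's true local slopes are cos(0) = 1 and 2·1 = 2, and `local_surrogate(black_box, [0.0, 1.0])` should recover values close to [1, 2].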

Glass Box Models

Glass Box Models provide transparent decision-making processes in Explainable Artificial Intelligence, enabling stakeholders to understand and validate the underlying algorithms driving predictions. Unlike traditional data analysis methods, these models offer interpretable insights by illustrating how input variables directly influence outputs, facilitating trust in AI systems for critical scientific applications.
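
A glass-box model can report the reason for each prediction alongside the prediction itself. The hypothetical decision list below checks human-readable rules in order and returns the label of the first rule that matches, together with that rule written out as text; the features, thresholds, and labels are invented for illustration.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    conditions: tuple  # ((feature, threshold), ...), each meaning feature > threshold
    label: str


# Hypothetical rules, checked top to bottom; the first match decides.
RULES = [
    Rule((("temperature", 30.0), ("humidity", 0.8)), "high risk"),
    Rule((("temperature", 30.0),), "medium risk"),
]
DEFAULT_LABEL = "low risk"


def predict_with_reason(record):
    """Return (label, reason), where reason is the rule that produced the label."""
    for rule in RULES:
        if all(record[feature] > threshold for feature, threshold in rule.conditions):
            reason = " and ".join(f"{f} > {t}" for f, t in rule.conditions)
            return rule.label, reason
    return DEFAULT_LABEL, "no rule matched"
```

Because the explanation is the model itself, there is no fidelity gap to check: `predict_with_reason({"temperature": 35, "humidity": 0.9})` returns `("high risk", "temperature > 30.0 and humidity > 0.8")`.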

Data Analysis vs Explainable Artificial Intelligence Infographic

industrydif.com