Containerization allows developers to package applications with their dependencies into isolated units, ensuring consistent behavior across different environments. Cloud-native architectures build on containerization but emphasize microservices, automation, and scalability to optimize application deployment in dynamic cloud environments. How far an organization moves from containerization alone toward a full cloud-native approach depends on its need for agility, scalability, and resource management.

Table of Comparison

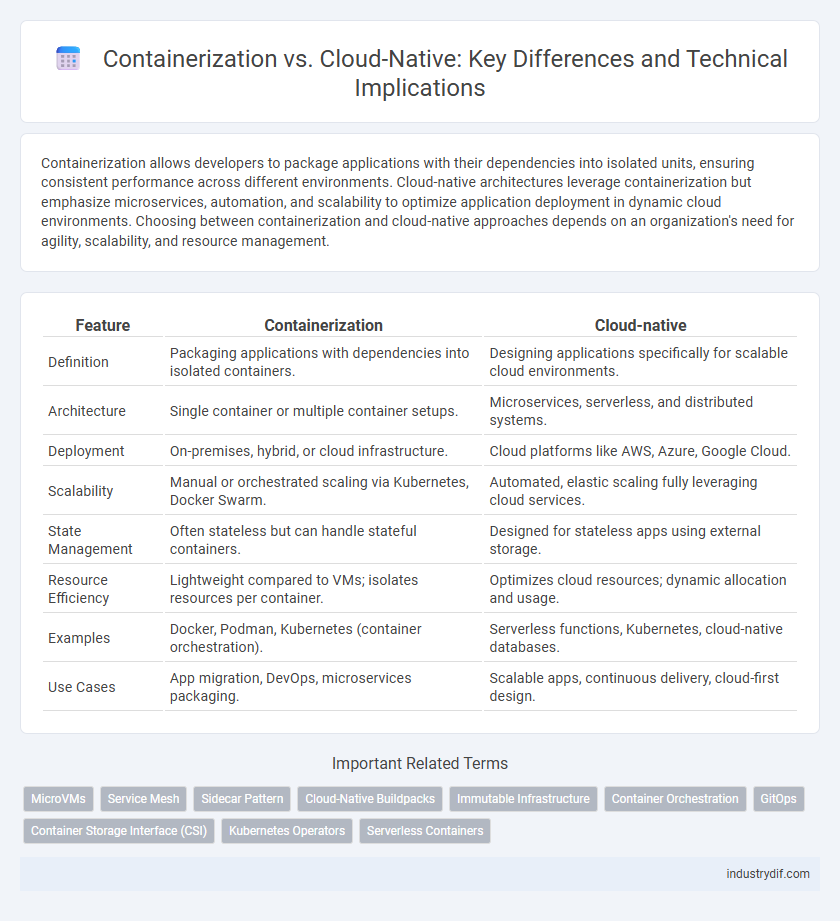

| Feature | Containerization | Cloud-native |
|---|---|---|
| Definition | Packaging applications with dependencies into isolated containers. | Designing applications specifically for scalable cloud environments. |
| Architecture | Single container or multiple container setups. | Microservices, serverless, and distributed systems. |
| Deployment | On-premises, hybrid, or cloud infrastructure. | Cloud platforms like AWS, Azure, Google Cloud. |
| Scalability | Manual or orchestrated scaling via Kubernetes, Docker Swarm. | Automated, elastic scaling fully leveraging cloud services. |
| State Management | Often stateless but can handle stateful containers. | Designed for stateless apps using external storage. |
| Resource Efficiency | Lightweight compared to VMs; isolates resources per container. | Optimizes cloud resources; dynamic allocation and usage. |
| Examples | Docker, Podman, Kubernetes (container orchestration). | Serverless functions, Kubernetes, cloud-native databases. |
| Use Cases | App migration, DevOps, microservices packaging. | Scalable apps, continuous delivery, cloud-first design. |

Defining Containerization and Cloud-native

Containerization encapsulates applications and their dependencies into isolated, lightweight containers that behave consistently across computing environments. Cloud-native architecture combines microservices, dynamic orchestration, and continuous delivery so that applications can fully exploit the scalability and flexibility of cloud platforms. Containerization is a form of operating-system-level virtualization, whereas cloud-native is an approach to designing applications for distributed cloud infrastructure.

Key Differences Between Containerization and Cloud-native

Containerization packages an application and its dependencies into an isolated, portable container, enabling consistent deployment across environments. Cloud-native architecture builds on containerization by adding microservices, dynamic orchestration with Kubernetes, and continuous integration/continuous deployment (CI/CD) pipelines to take advantage of the scalability and resilience of cloud infrastructure. The key difference is scope: containerization addresses how an application is packaged and moved, while cloud-native addresses how it is designed and operated in distributed, scalable cloud environments.

Core Technologies in Containerization

Containerization leverages core technologies such as Docker, Kubernetes, and container runtimes like containerd and CRI-O to create isolated environments for applications. These technologies enable consistent deployment, scalability, and resource efficiency by packaging applications and their dependencies into lightweight, portable containers. Kubernetes orchestrates container clusters, providing automated scaling, self-healing, and service discovery, which are essential for modern containerized workloads.
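The self-healing and scaling behavior described above can be pictured as a reconciliation loop: compare the desired state with what is actually running and emit the actions that close the gap. The following is a minimal sketch in Python, not Kubernetes code. The `Container` type, the `reconcile` function, and the action strings are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Container:
    # Hypothetical, simplified view of a running container.
    name: str
    healthy: bool


def reconcile(desired_replicas: int, observed: list[Container]) -> list[str]:
    """Return the actions that move the observed state toward the desired state."""
    actions = []
    healthy = [c for c in observed if c.healthy]
    # Self-healing: unhealthy containers are replaced rather than repaired.
    for c in observed:
        if not c.healthy:
            actions.append(f"delete {c.name}")
    # Scale up: start replicas until the healthy count matches the target.
    for _ in range(max(desired_replicas - len(healthy), 0)):
        actions.append("start new replica")
    # Scale down: remove surplus healthy containers, those listed last first.
    for c in healthy[desired_replicas:]:
        actions.append(f"delete {c.name}")
    return actions


observed = [Container("web-1", True), Container("web-2", False)]
print(reconcile(3, observed))
# ['delete web-2', 'start new replica', 'start new replica']
```

An orchestrator runs this kind of comparison continuously, so a crashed container or a changed replica count is corrected without manual intervention.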

Fundamental Principles of Cloud-native Architecture

Cloud-native architecture is built on principles of microservices, containerization, and dynamic orchestration that ensure scalability, resilience, and continuous delivery. Containerization encapsulates applications and their dependencies, promoting consistency across hybrid and multi-cloud environments without the overhead of traditional virtual machines. Cloud-native environments leverage orchestration tools like Kubernetes to automate deployment, scaling, and management, enabling rapid innovation and efficient resource utilization.

Benefits of Adopting Containerization

Containerization boosts application portability by encapsulating dependencies and configurations into lightweight, isolated environments, enabling seamless deployment across various infrastructure platforms. It enhances resource efficiency through optimized utilization, reducing overhead compared to traditional virtual machines, and accelerates development cycles with consistent environments that simplify testing and scaling. By supporting microservices architecture, containerization facilitates modular application design, improving maintainability and enabling faster updates in dynamic cloud-native ecosystems.

Advantages of Cloud-native Development

Cloud-native development leverages microservices architecture, enabling rapid scaling and continuous integration/continuous deployment (CI/CD) pipelines for faster software iteration. It offers enhanced resilience through automated recovery and fault tolerance built into the platform. Cloud-native applications optimize resource utilization with dynamic orchestration using Kubernetes and other container orchestration tools.
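As a rough illustration of how automated scaling decides on a replica count, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas x current metric / target metric), with no change when the ratio is within a small tolerance of 1. The function name, the parameter names, and the floor of one replica are assumptions made for this example; a real autoscaler also applies configured minimum and maximum bounds and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Proportional scaling rule; a simplified sketch, not the real controller."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is close to 1.0, which avoids flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Assumption for this sketch: never scale below one replica.
    return max(1, math.ceil(current_replicas * ratio))


print(desired_replicas(4, 90.0, 60.0))   # 6: load is 1.5x the target
print(desired_replicas(10, 30.0, 60.0))  # 5: load is half the target
print(desired_replicas(3, 63.0, 60.0))   # 3: within tolerance, no change
```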

Common Use Cases: Containerization vs Cloud-native

Containerization excels in application portability, microservices deployment, and development environment consistency, supporting rapid scaling and efficient resource utilization. Cloud-native architectures leverage managed services, serverless functions, and continuous integration/continuous deployment (CI/CD) pipelines to optimize scalability, resilience, and developer productivity. Common use cases for containerization include legacy app modernization and hybrid cloud deployments, while cloud-native approaches dominate real-time data processing, scalable web applications, and dynamic resource management.

Integration Challenges and Solutions

Containerization and cloud-native architectures often face integration challenges such as managing orchestration tools like Kubernetes, ensuring seamless interoperability between microservices, and maintaining consistent security policies across hybrid environments. Solutions include implementing standardized APIs, using service meshes like Istio for traffic management and security, and adopting CI/CD pipelines tailored for container deployments to automate integration processes. Effective monitoring platforms like Prometheus and Grafana address visibility issues and streamline troubleshooting in complex containerized cloud-native ecosystems.

Security Considerations in Both Approaches

Containerization improves application security by isolating processes with kernel features such as namespaces and cgroups, which limits what each container can see and how many resources it can consume. Because containers share the host kernel, this isolation is weaker than that of a virtual machine, so hardened images and least-privilege settings still matter. Cloud-native architectures add automated security policies on top of microservices and dynamic orchestration to support real-time threat detection, vulnerability management, and compliance with industry standards at scale. Both approaches require robust identity and access management (IAM), continuous monitoring, and regular patching to mitigate risks associated with misconfigurations and zero-day exploits.

Future Trends in Containerization and Cloud-native

Future trends in containerization and cloud-native technologies emphasize enhanced orchestration with Kubernetes advancements, increased adoption of serverless architectures, and integration of AI-driven automation for optimized resource management. Security improvements are driven by zero-trust models and runtime protection, while edge computing expands cloud-native deployments beyond centralized data centers. Hybrid and multi-cloud strategies remain crucial, enabling flexible, resilient infrastructure aligned with evolving enterprise digital transformation goals.

Related Important Terms

MicroVMs

MicroVMs offer lightweight virtualization with rapid startup times and minimal resource overhead, bridging the gap between containerization and traditional VMs for cloud-native applications. These tiny virtual machines enhance isolation and security compared to containers while maintaining cloud-native scalability and automation benefits.

Service Mesh

A service mesh provides a dedicated infrastructure layer that manages service-to-service communication within containerized environments, enhancing security, observability, and traffic control. Where plain containerization leaves networking concerns to each application, cloud-native architectures use a service mesh to handle load balancing, traffic routing, and fault tolerance consistently across distributed microservices.
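One form of traffic control a mesh can provide is weighted routing, for example sending a small share of requests to a new version of a service. Real meshes configure this declaratively; the Python sketch below only illustrates the underlying idea. The `pick_backend` function, the `v1`/`v2` backend names, and the 90/10 split are hypothetical.

```python
import hashlib


def pick_backend(request_id: str, weights: dict[str, int]) -> str:
    """Map a request to a backend in proportion to integer weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Hash the request id so the same request always lands in the same bucket.
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % total
    cumulative = 0
    for backend, weight in weights.items():
        cumulative += weight
        if bucket < cumulative:
            return backend
    raise AssertionError("unreachable: bucket is always below total")


weights = {"v1": 90, "v2": 10}  # hypothetical canary split
counts = {"v1": 0, "v2": 0}
for i in range(10_000):
    counts[pick_backend(f"req-{i}", weights)] += 1
print(counts)  # roughly nine requests to v1 for every one to v2
```

Hashing the request id instead of drawing a random number keeps routing deterministic, which makes the split easy to reason about and to test.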

Sidecar Pattern

The Sidecar Pattern in containerization enables microservices to extend functionality by deploying helper containers alongside primary application containers, improving observability, configuration, and communication without modifying core services. In cloud-native architectures, this pattern enhances scalability and resilience by isolating cross-cutting concerns such as logging, security, and service discovery, facilitating seamless integration within orchestration platforms like Kubernetes.

Cloud Native Buildpacks

Cloud Native Buildpacks automate the transformation of application source code into standardized container images, streamlining deployment in cloud-native environments. Unlike writing container build instructions by hand, they abstract underlying infrastructure complexities by integrating build, dependency management, and security scanning into a unified, repeatable build process.

Immutable Infrastructure

Containerization supports immutable infrastructure by packaging applications and their dependencies into immutable images, so every container started from the same image provides the same environment in development and production. Cloud-native architectures extend this principle through dynamic orchestration and automated scaling: stateless services are updated by replacing running instances with new ones rather than modifying them in place, which avoids configuration drift.
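A common way to make immutability concrete is content addressing: an artifact is identified by a hash of its bytes, so any change produces a new identifier instead of silently altering the old one. Container registries identify images by digests of this kind. The sketch below is a simplified illustration; the `content_digest` function, the in-memory `registry`, and the byte strings standing in for builds are assumptions made for the example.

```python
import hashlib


def content_digest(artifact: bytes) -> str:
    """Identify an artifact by the SHA-256 hash of its contents."""
    return "sha256:" + hashlib.sha256(artifact).hexdigest()


build_1 = b"app build 1"  # stand-ins for real image contents
build_2 = b"app build 2"

# Hypothetical registry: artifacts are stored under their digest.
registry = {content_digest(build_1): build_1, content_digest(build_2): build_2}

deployed = content_digest(build_1)
# An update points the deployment at a new digest; build_1 is never edited.
deployed = content_digest(build_2)

print(deployed == content_digest(build_2))                 # True
print(content_digest(build_1) == content_digest(build_2))  # False
```

Rolling back is then just pointing the deployment at the earlier digest, which still refers to exactly the same bytes.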

Container Orchestration

Container orchestration automates the deployment, scaling, and management of containerized applications, replacing the manual work of starting, placing, and restarting containers on individual hosts. Cloud-native architectures rely on container orchestration platforms like Kubernetes to optimize resource allocation, ensure high availability, and support microservices-based development.

GitOps

GitOps manages containerized deployments through declarative infrastructure as code stored in Git: the repository is the source of truth, and automation continuously applies it to the running environment, enabling continuous delivery in cloud-native settings. This approach improves consistency and traceability across Kubernetes clusters, driving efficient operations and rapid application updates.
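At its core, a GitOps tool compares the declared state in the repository with the live state of the cluster and works out what has to change. The sketch below shows that comparison in Python under simplifying assumptions: both states are plain dictionaries keyed by resource name, and the `plan_sync` function and the example resources are hypothetical.

```python
def plan_sync(desired: dict[str, dict], live: dict[str, dict]) -> dict[str, list[str]]:
    """Compare declared state with live state and list the changes needed."""
    plan = {"create": [], "update": [], "delete": []}
    for name, spec in desired.items():
        if name not in live:
            plan["create"].append(name)   # declared but not running
        elif live[name] != spec:
            plan["update"].append(name)   # running with a drifted spec
    for name in live:
        if name not in desired:
            plan["delete"].append(name)   # running but no longer declared
    return plan


# Hypothetical states: what the repository declares vs. what is running.
desired = {
    "web": {"image": "web:2", "replicas": 3},
    "worker": {"image": "worker:1", "replicas": 1},
}
live = {
    "web": {"image": "web:1", "replicas": 3},
    "cron": {"image": "cron:1", "replicas": 1},
}
print(plan_sync(desired, live))
# {'create': ['worker'], 'update': ['web'], 'delete': ['cron']}
```

Because the plan is derived entirely from the declared state, manual changes made directly on the cluster show up as drift and are reverted on the next sync.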

Container Storage Interface (CSI)

Container Storage Interface (CSI) standardizes the integration of storage systems with container orchestration platforms, enhancing cloud-native workflows by enabling dynamic provisioning and consistent management of persistent storage. While containerization focuses on packaging applications, CSI bridges the gap between ephemeral containers and durable storage, crucial for stateful applications in cloud-native environments.

Kubernetes Operators

Kubernetes Operators extend container orchestration by automating the management of cloud-native applications, handling lifecycle operations such as updates, backups, and scaling. Operators use the Kubernetes API, typically through custom resources and controllers, to encode domain-specific operational knowledge, which simplifies deploying and maintaining complex stateful workloads in cloud-native environments.

Serverless Containers

Serverless containers combine the scalability and management ease of serverless computing with the isolation and portability of containerization, eliminating the need for developers to manage underlying infrastructure. This approach accelerates deployment cycles and optimizes resource utilization by automatically scaling container instances based on demand in cloud-native environments.

Containerization vs Cloud-native Infographic

industrydif.com
