HTTP is a widely adopted protocol for stateless communication over the web. In its HTTP/1.1 form it exchanges plain-text messages that are easy to debug and compatible with many platforms. gRPC runs on top of HTTP/2 and serializes messages with Protocol Buffers, a compact binary format, which typically yields lower latency and better performance for microservices and real-time applications. Choosing between HTTP and gRPC depends on factors such as the need for streaming data, strict API contracts, and the overall complexity of the client-server interaction.

Table of Comparison

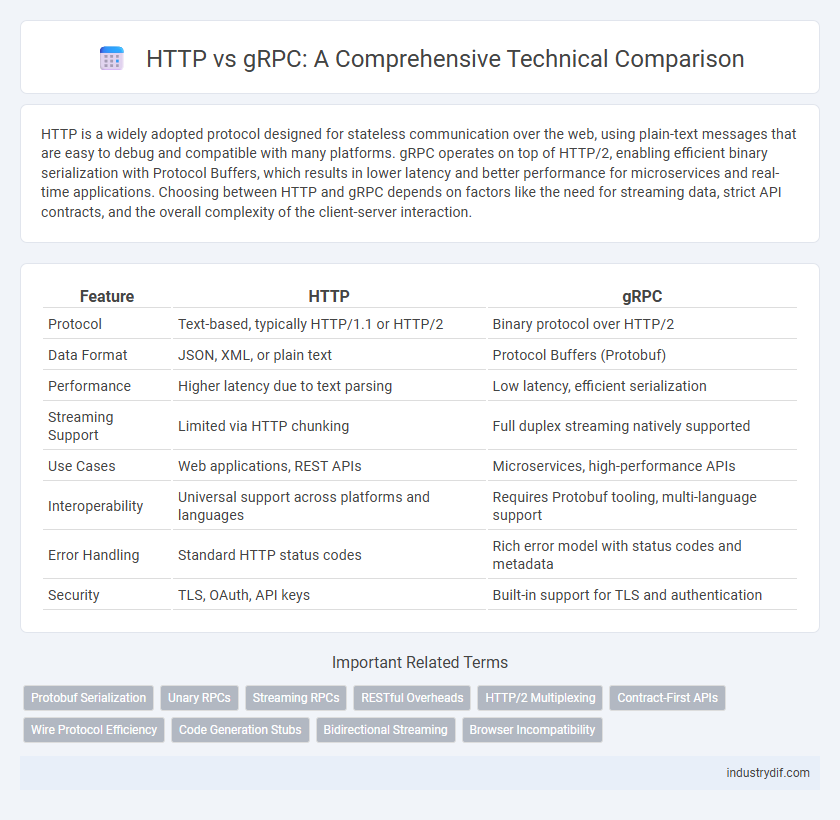

| Feature | HTTP | gRPC |
|---|---|---|
| Protocol | Text-based in HTTP/1.1; binary framing in HTTP/2 | Binary protocol over HTTP/2 |
| Data Format | JSON, XML, or plain text | Protocol Buffers (Protobuf) |
| Performance | Typically higher overhead from text parsing and larger payloads | Low latency, efficient serialization |
| Streaming Support | Limited (chunked transfer encoding; WebSockets for full duplex) | Full-duplex streaming supported natively |
| Use Cases | Web applications, REST APIs | Microservices, high-performance APIs |
| Interoperability | Universal support across platforms and languages | Requires Protobuf tooling; supports many languages |
| Error Handling | Standard HTTP status codes | Rich error model with status codes and metadata |
| Security | TLS, OAuth, API keys | Built-in support for TLS and authentication |
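To make the Error Handling row concrete, here is a minimal sketch of how a client or gateway might translate an HTTP status into a gRPC status code when a response arrives without a gRPC status of its own. The numeric values are gRPC's standard status codes. The HTTP-to-gRPC table follows the mapping described in the gRPC project documentation, but treat it as an assumption to verify against the current docs. The function name is hypothetical.

```python
from enum import IntEnum


class GrpcStatus(IntEnum):
    """A subset of gRPC's standard status codes (numeric values as defined by gRPC)."""
    OK = 0
    UNKNOWN = 2
    PERMISSION_DENIED = 7
    UNIMPLEMENTED = 12
    INTERNAL = 13
    UNAVAILABLE = 14
    UNAUTHENTICATED = 16


# Assumed mapping for HTTP responses that carry no grpc-status trailer.
_HTTP_TO_GRPC = {
    400: GrpcStatus.INTERNAL,
    401: GrpcStatus.UNAUTHENTICATED,
    403: GrpcStatus.PERMISSION_DENIED,
    404: GrpcStatus.UNIMPLEMENTED,
    429: GrpcStatus.UNAVAILABLE,
    502: GrpcStatus.UNAVAILABLE,
    503: GrpcStatus.UNAVAILABLE,
    504: GrpcStatus.UNAVAILABLE,
}


def grpc_status_from_http(http_status: int) -> GrpcStatus:
    """Hypothetical helper: translate an HTTP status into the closest gRPC status."""
    # Any status not listed above (including an unexpected 200) maps to UNKNOWN.
    return _HTTP_TO_GRPC.get(http_status, GrpcStatus.UNKNOWN)
```

Beyond this single code, gRPC also lets a server attach a human-readable message and arbitrary metadata to a failed call, which is what the table means by a richer error model.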

Overview of HTTP and gRPC Protocols

HTTP is a stateless protocol, text-based in its widely used HTTP/1.1 form, commonly used for web communication and valued for its simplicity and wide compatibility with RESTful APIs. gRPC is a high-performance, open-source RPC framework that uses HTTP/2 for multiplexed streams and Protocol Buffers for efficient serialization. Unlike a typical REST API over HTTP/1.1, gRPC supports bidirectional streaming and built-in authentication, enabling low-latency, scalable communication between microservices.

Key Differences Between HTTP and gRPC

HTTP APIs are usually designed around REST principles: stateless, text-based request-response exchanges addressed by URLs and methods such as GET and POST. gRPC uses HTTP/2 for transport and supports bidirectional streaming, multiplexing, and binary serialization with Protocol Buffers, which improves performance and reduces latency in microservices and real-time applications. Unlike plain HTTP, gRPC enforces strict API contracts through an Interface Definition Language (IDL), the Protocol Buffers .proto file, enabling stronger type safety and automatic client and server code generation.
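The difference between URL-and-verb addressing and method-based RPC shows up directly in the request metadata. The sketch below builds the key request fields for each style. A REST call names a resource in the URL and picks a verb. A gRPC call is always an HTTP/2 POST to a path of the form /package.Service/Method, with content-type application/grpc and te: trailers, as described in the gRPC over HTTP/2 protocol specification. The package, service, method, and resource names used here are hypothetical.

```python
def rest_request(method: str, resource: str, resource_id: str) -> dict:
    """REST style: the HTTP verb and the resource URL together express the intent."""
    return {
        "method": method,                      # e.g. GET, POST, PUT, DELETE
        "path": f"/{resource}/{resource_id}",  # resource-oriented URL
        "accept": "application/json",          # JSON is the usual payload format
    }


def grpc_request(package: str, service: str, rpc: str) -> dict:
    """gRPC style: always POST; the path names a method on a typed service."""
    return {
        ":method": "POST",
        ":path": f"/{package}.{service}/{rpc}",
        "content-type": "application/grpc",  # Protobuf payload by default
        "te": "trailers",                    # the call status arrives in HTTP trailers
    }


# Hypothetical example: fetch user 42 both ways.
print(rest_request("GET", "users", "42"))
print(grpc_request("users.v1", "UserService", "GetUser"))
```

In the REST version the verb carries meaning. In the gRPC version every call looks the same at the HTTP level, and the contract lives in the service definition instead.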

Performance and Latency Comparison

gRPC runs over HTTP/2, whose multiplexed streams and header compression typically yield lower latency than traditional HTTP/1.1 communication. Some benchmarks report round-trip time reductions of up to 50%, although actual gains depend on payload size, network conditions, and implementation. HTTP/1.1 remains widely compatible but incurs higher overhead from verbose, uncompressed headers and the lack of efficient streaming support.
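Header compression is one concrete source of this overhead gap. Below is a deliberately simplified model of HPACK, HTTP/2's header compression scheme (RFC 7541). Under HPACK, a header that matches a static-table entry, or that was already sent on the same connection and stored in the dynamic table, costs a single index byte. A new header is sent once as a literal. This sketch ignores Huffman coding, table size limits, and eviction, and it always sends new header names literally, so treat its byte counts as approximations. The header values are hypothetical. HTTP/1.1, by contrast, resends every header as text on each request.

```python
# A few real entries from the HPACK static table (RFC 7541, Appendix A).
STATIC_TABLE = {
    (":method", "GET"): 2,
    (":method", "POST"): 3,
    (":path", "/"): 4,
    (":scheme", "http"): 6,
    (":scheme", "https"): 7,
}
FIRST_DYNAMIC_INDEX = 62  # dynamic-table indices start after the 61 static entries


class SimplifiedHpackEncoder:
    """Approximate HPACK header-block sizes for one connection (no Huffman, no eviction)."""

    def __init__(self) -> None:
        self.dynamic: list[tuple[str, str]] = []  # newest entry first, as in HPACK

    def encoded_size(self, headers: list[tuple[str, str]]) -> int:
        size = 0
        for name, value in headers:
            if (name, value) in STATIC_TABLE or (name, value) in self.dynamic:
                size += 1  # indexed header field: one byte while the index is below 127
                continue
            if len(name) > 126 or len(value) > 126:
                raise ValueError("sketch assumes strings fit a one-byte length prefix")
            # Literal with incremental indexing and a literal name:
            # 1 type byte + 1 length byte + name + 1 length byte + value.
            size += 3 + len(name) + len(value)
            self.dynamic.insert(0, (name, value))
            if FIRST_DYNAMIC_INDEX + len(self.dynamic) - 1 > 126:
                raise ValueError("sketch assumes every index fits in one byte")
        return size


def http1_header_bytes(headers: list[tuple[str, str]]) -> int:
    """HTTP/1.1 sends each header as 'name: value\\r\\n' on every request."""
    return sum(len(f"{name}: {value}\r\n") for name, value in headers)


request_headers = [
    (":method", "POST"),
    (":scheme", "https"),
    (":path", "/users.v1.UserService/GetUser"),
    ("content-type", "application/grpc"),
    ("authorization", "Bearer example-token"),
]
encoder = SimplifiedHpackEncoder()
first = encoder.encoded_size(request_headers)   # literals for the three non-static headers
second = encoder.encoded_size(request_headers)  # one byte per header
regular = [(n, v) for n, v in request_headers if not n.startswith(":")]
print(first, second, http1_header_bytes(regular))
```

In this model a repeated request on the same connection shrinks to one byte per header, while the HTTP/1.1 byte count stays the same on every request.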

Data Serialization: JSON vs Protocol Buffers

HTTP APIs most commonly use JSON for data serialization, which is human-readable but bulkier and slower to parse than binary formats. gRPC uses Protocol Buffers, a compact binary serialization format that significantly reduces message size and improves transmission speed. Protocol Buffers also rely on strict schema definitions: because both sides share the schema, messages carry small numeric field tags instead of field names, which speeds up serialization and deserialization, an important property for high-performance, low-latency applications.
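To show where the size difference comes from, the sketch below hand-encodes a small message in the Protocol Buffers wire format and compares it with compact JSON. It assumes a hypothetical schema `message User { int32 id = 1; string name = 2; }`. Real code would use classes generated by the Protobuf compiler rather than manual encoding.

```python
import json


def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 bits per byte, high bit set on every byte except the last."""
    if value < 0:
        raise ValueError("sketch handles non-negative values only")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow
        else:
            out.append(byte)
            return bytes(out)


VARINT, LENGTH_DELIMITED = 0, 2  # the two protobuf wire types used below


def encode_field_key(field_number: int, wire_type: int) -> bytes:
    """Every field starts with a key: (field_number << 3) | wire_type."""
    return encode_varint((field_number << 3) | wire_type)


def encode_user(user_id: int, name: str) -> bytes:
    """Encode the hypothetical User message: field 1 = id, field 2 = name."""
    name_bytes = name.encode("utf-8")
    return (
        encode_field_key(1, VARINT) + encode_varint(user_id)
        + encode_field_key(2, LENGTH_DELIMITED) + encode_varint(len(name_bytes)) + name_bytes
    )


proto_bytes = encode_user(150, "Ada")
json_bytes = json.dumps({"id": 150, "name": "Ada"}, separators=(",", ":")).encode("utf-8")
print(len(proto_bytes), len(json_bytes))  # 8 23
```

The Protobuf bytes carry the field numbers 1 and 2 instead of the names "id" and "name", and the integer takes two bytes instead of three characters. Together these account for most of the savings in this example.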

API Design and Implementation Approaches

HTTP APIs primarily follow REST principles, using stateless operations and standard HTTP verbs for resource manipulation, which simplifies integration across diverse clients. gRPC uses Protocol Buffers and HTTP/2 for efficient binary serialization and low-latency communication, which suits microservices that require high performance and bidirectional streaming. API design with HTTP emphasizes human readability and broad compatibility, whereas gRPC focuses on strong typing, contract-first development, and optimized payload size for scalable service-to-service communication.

Streaming and Real-Time Communication Capabilities

gRPC offers stronger streaming and real-time communication capabilities than HTTP/1.1 because it supports bidirectional streaming, in which client and server exchange data simultaneously. HTTP/2, which underlies gRPC, multiplexes multiple streams over a single connection, reducing latency and improving throughput in real-time applications. While HTTP/1.1 relies mainly on request-response patterns, gRPC's compact Protocol Buffers messages and persistent connections suit dynamic, low-latency streaming scenarios.
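gRPC defines four call shapes, which differ only in which side sends a stream of messages. The sketch below models them as plain Python functions over iterators so the shapes are easy to compare. The function names and the doubling and summing logic are hypothetical stand-ins. This is not a gRPC library API, and in a real deployment a network connection would sit between the caller and each handler.

```python
from collections.abc import Iterable, Iterator


def unary(request: int) -> int:
    """One request, one response (e.g. a lookup)."""
    return request * 2


def server_streaming(request: int) -> Iterator[int]:
    """One request, a stream of responses (e.g. a feed or a large result set)."""
    for i in range(request):
        yield i


def client_streaming(requests: Iterable[int]) -> int:
    """A stream of requests, one response (e.g. an upload followed by a summary)."""
    return sum(requests)


def bidirectional_streaming(requests: Iterable[int]) -> Iterator[int]:
    """Both sides stream; each response is produced as soon as its request arrives."""
    for request in requests:
        yield request * 2


print(unary(21), list(server_streaming(3)), client_streaming([1, 2, 3]))
print(list(bidirectional_streaming(iter([1, 2]))))
```

A typical REST exchange over HTTP/1.1 corresponds only to the first shape. The other three are where gRPC's native streaming support matters.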

Security Mechanisms in HTTP and gRPC

HTTPS secures HTTP traffic with TLS (the successor to SSL), providing encryption, authentication, and integrity checks for client-server communication. gRPC, built on HTTP/2, uses the same TLS encryption, and its libraries include built-in support for strong identity verification with mutual TLS (mTLS) and for token-based authentication such as OAuth 2.0. Both protocols support robust transport security, but gRPC's use of HTTP/2 lets many concurrent calls share one secured, multiplexed connection, reducing handshake overhead and latency in microservices environments.
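As a transport-level illustration, the sketch below uses Python's standard `ssl` module to configure a server-side TLS context with the settings HTTP/2 over TLS relies on. These are TLS 1.2 or later (required by the HTTP/2 specification) and ALPN negotiation of `h2`. The context also requires client certificates, which enables mutual TLS. The certificate paths are hypothetical and optional, so the configuration can be inspected without files. A real gRPC server would receive its credentials through its gRPC library instead. Token-based authentication is shown as a plain `authorization` metadata entry. The helper names are hypothetical.

```python
import ssl
from typing import Optional


def mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Server-side TLS settings for HTTP/2 with mutual TLS (file paths are hypothetical)."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # HTTP/2 requires TLS 1.2 or later
    ctx.set_alpn_protocols(["h2"])                # negotiate HTTP/2 during the handshake
    ctx.verify_mode = ssl.CERT_REQUIRED           # mTLS: reject clients without a valid cert
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # server identity
    if client_ca_file:
        ctx.load_verify_locations(cafile=client_ca_file)  # CA that signs client certs
    return ctx


def bearer_token_metadata(token: str) -> list[tuple[str, str]]:
    """Token-based auth (e.g. an OAuth 2.0 access token) sent as call metadata."""
    return [("authorization", f"Bearer {token}")]
```

With mTLS, both peers prove their identity during the handshake. The bearer token then authorizes individual calls on top of that encrypted channel.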

Tooling and Ecosystem Support

gRPC offers robust tooling support including auto-generated client and server code, extensive libraries for multiple languages, and integrated load balancing, enabling efficient microservices communication. HTTP/1.1 and HTTP/2 maintain widespread ecosystem support across browsers, proxies, and firewalls, ensuring compatibility for RESTful APIs and web applications. gRPC leverages HTTP/2 features for improved performance but requires specialized tooling, while HTTP benefits from mature, well-established tools and debugging utilities across diverse platforms.

Scalability and Deployment Considerations

HTTP's stateless nature allows straightforward horizontal scaling through load balancers and caching, benefiting from widespread infrastructure support and simpler debugging. gRPC, designed for high-performance communication with multiplexed HTTP/2 streams, excels in microservices environments by reducing latency and improving throughput but requires more complex deployment strategies due to HTTP/2 dependencies and tighter coupling with server implementations. Both protocols support scalability, yet HTTP's ubiquitous tooling favors simpler deployment, while gRPC's efficiency suits large-scale, performance-critical applications where infrastructure supports HTTP/2 and protobuf serialization.
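One concrete reason gRPC deployments need more care is connection reuse. A gRPC client typically keeps a long-lived HTTP/2 connection and multiplexes many calls over it. A load balancer that picks a backend once per connection can therefore send all of that client's calls to a single server. The simulation below contrasts per-connection and per-call round-robin balancing. The backend names and call counts are made up for illustration.

```python
from collections import Counter
from itertools import cycle


def per_connection_balancing(backends: list[str], connections: int,
                             calls_per_connection: int) -> Counter:
    """Connection-level balancing: the backend is chosen once; every call on it follows."""
    picker = cycle(backends)
    load: Counter = Counter()
    for _ in range(connections):
        backend = next(picker)
        load[backend] += calls_per_connection
    return load


def per_call_balancing(backends: list[str], connections: int,
                       calls_per_connection: int) -> Counter:
    """Request-level (or client-side) balancing: each call is placed individually."""
    picker = cycle(backends)
    load: Counter = Counter()
    for _ in range(connections * calls_per_connection):
        load[next(picker)] += 1
    return load


backends = ["backend-a", "backend-b", "backend-c"]
# One long-lived connection: all 9 calls land on backend-a.
print(per_connection_balancing(backends, connections=1, calls_per_connection=9))
# Per-call balancing: 3 calls per backend.
print(per_call_balancing(backends, connections=1, calls_per_connection=9))
```

HTTP/1.1 clients tend to open more short-lived connections, which hides this effect. With gRPC, balancing usually has to happen per call, either in an HTTP/2-aware proxy or in the client.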

Use Cases: Choosing Between HTTP and gRPC

HTTP is ideal for web applications requiring broad compatibility and easy integration with browsers, REST APIs, and simple request-response patterns. gRPC excels in microservices architectures needing high performance, efficient binary serialization, low latency, and support for streaming data between client and server. Choosing between HTTP and gRPC depends on factors like interoperability, performance requirements, and the complexity of communication patterns in distributed systems.

Related Important Terms

Protobuf Serialization

Protobuf serialization in gRPC enables efficient binary data encoding that reduces message size and accelerates transmission compared to HTTP's typically larger JSON or XML payloads. This compact, schema-driven serialization improves performance and bandwidth utilization, making gRPC ideal for low-latency microservices communication.
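The schema is what lets a receiver decode such compact bytes. It maps field numbers to names, and the wire type tells the decoder how many bytes to read, so a decoder can even skip fields it does not know about. The sketch below decodes varint and length-delimited fields for a hypothetical schema `{1: "id", 2: "name"}`. Other wire types are left out for brevity.

```python
def decode_varint(data: bytes, pos: int) -> tuple[int, int]:
    """Read a base-128 varint starting at pos; return (value, next position)."""
    result, shift = 0, 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not (byte & 0x80):  # high bit clear: this was the last byte
            return result, pos
        shift += 7


def decode_message(data: bytes, schema: dict[int, str]) -> dict[str, object]:
    """Decode varint (wire type 0) and length-delimited (wire type 2) fields."""
    fields: dict[str, object] = {}
    pos = 0
    while pos < len(data):
        key, pos = decode_varint(data, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:
            value, pos = decode_varint(data, pos)
        elif wire_type == 2:
            length, pos = decode_varint(data, pos)
            value = data[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
        if field_number in schema:
            fields[schema[field_number]] = value  # unknown field numbers are skipped
    return fields


# id=150, name="Ada", plus an extra field 3 that this schema does not know about.
message = bytes([0x08, 0x96, 0x01, 0x12, 0x03]) + b"Ada" + bytes([0x18, 0x07])
print(decode_message(message, {1: "id", 2: "name"}))  # {'id': 150, 'name': b'Ada'}
```

Skipping unknown field numbers is what allows an older client to keep reading messages from a newer server that has added fields.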

Unary RPCs

A REST call over HTTP/1.1 sends a text request line, text headers, and usually a JSON body, which adds latency and payload size to every request-response exchange. A gRPC unary RPC (one request message, one response message) instead travels over HTTP/2 with binary framing, so it benefits from multiplexing, lower latency, and compact message sizes. Its Protocol Buffers payload also reduces serialization overhead compared with parsing a text-based HTTP request.
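On the wire, each gRPC message is carried in HTTP/2 DATA frames with a 5-byte prefix, and this includes the single request and single response of a unary RPC. The prefix is a 1-byte compressed flag followed by a 4-byte big-endian message length, per the gRPC over HTTP/2 protocol description. The sketch below builds and parses that prefix with the standard `struct` module. The payload is an arbitrary placeholder standing in for serialized Protobuf bytes.

```python
import struct

PREFIX = struct.Struct(">BI")  # 1-byte compressed flag + 4-byte big-endian length


def frame_message(payload: bytes, compressed: bool = False) -> bytes:
    """Wrap one serialized message in gRPC's length-prefixed framing."""
    return PREFIX.pack(1 if compressed else 0, len(payload)) + payload


def unframe_message(frame: bytes) -> tuple[bool, bytes]:
    """Parse a single length-prefixed message; return (compressed, payload)."""
    flag, length = PREFIX.unpack_from(frame, 0)
    payload = frame[PREFIX.size:PREFIX.size + length]
    if len(payload) != length:
        raise ValueError("truncated gRPC message")
    return bool(flag), payload


framed = frame_message(b"abc")
print(framed)                   # b'\x00\x00\x00\x00\x03abc'
print(unframe_message(framed))  # (False, b'abc')
```

The fixed 5-byte prefix is the only per-message framing cost gRPC adds on top of the Protobuf payload.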

Streaming RPCs

HTTP/2-based gRPC excels in Streaming RPCs by enabling efficient bidirectional communication with lower latency and multiplexing multiple streams over a single connection, unlike traditional HTTP/1.1 which handles streaming less efficiently via chunked transfer encoding. This results in improved real-time data transfer and resource utilization in microservices and distributed systems leveraging gRPC streaming capabilities.
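A streaming RPC repeats the same length-prefixed framing many times: a series of messages flows over one HTTP/2 stream. The receiver has to cut these messages out of a byte stream whose chunks do not line up with message boundaries. The sketch below is a small incremental decoder for that situation. The class name is hypothetical, and the decoder ignores compression.

```python
import struct


class GrpcMessageReader:
    """Incrementally split a byte stream into gRPC length-prefixed messages."""

    HEADER = struct.Struct(">BI")  # compressed flag, message length

    def __init__(self) -> None:
        self._buffer = bytearray()

    def feed(self, chunk: bytes) -> list[bytes]:
        """Add newly received bytes; return every message that is now complete."""
        self._buffer.extend(chunk)
        messages = []
        while len(self._buffer) >= self.HEADER.size:
            _flag, length = self.HEADER.unpack_from(self._buffer, 0)
            end = self.HEADER.size + length
            if len(self._buffer) < end:
                break  # wait for the rest of this message
            messages.append(bytes(self._buffer[self.HEADER.size:end]))
            del self._buffer[:end]
        return messages


stream = b"\x00\x00\x00\x00\x02hi" + b"\x00\x00\x00\x00\x03bye"
reader = GrpcMessageReader()
for chunk in (stream[:4], stream[4:9], stream[9:]):
    print(reader.feed(chunk))  # [] then [b'hi'] then [b'bye']
```

Each message is emitted exactly once, as soon as its last byte arrives, regardless of how the network splits the stream.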

RESTful Overheads

RESTful HTTP/1.1 APIs incur significant overhead from verbose headers, repeated on every request, and from text-based payloads, which together increase latency and bandwidth usage. gRPC combines efficient binary serialization with HTTP/2 multiplexing and header compression, substantially reducing payload size and improving performance in microservices communication.

HTTP/2 Multiplexing

HTTP/2 multiplexing enables multiple simultaneous streams over a single TCP connection, significantly reducing latency and improving resource utilization compared to HTTP/1.x. gRPC, built on HTTP/2, leverages this multiplexing to efficiently handle concurrent remote procedure calls with lower overhead and enhanced performance.
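Multiplexing works because every HTTP/2 frame carries a 9-byte header containing a 24-bit payload length, an 8-bit type, 8 bits of flags, and a 31-bit stream identifier (RFC 9113). The sketch below interleaves DATA frames from two streams on one simulated connection and reassembles them by stream ID. The stream IDs and payloads are illustrative. A real connection also carries HEADERS, SETTINGS, and flow-control frames, which are omitted here.

```python
import struct
from collections import defaultdict

DATA = 0x0         # HTTP/2 DATA frame type
END_STREAM = 0x1   # flag marking the last frame a sender will send on a stream
FRAME_HEADER = struct.Struct(">BHBBI")  # 24-bit length split as 1 + 2 bytes, type, flags, stream ID


def encode_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    """Build a frame: 24-bit length, 8-bit type, 8-bit flags, 31-bit stream ID."""
    length = len(payload)
    header = FRAME_HEADER.pack(length >> 16, length & 0xFFFF, frame_type, flags,
                               stream_id & 0x7FFFFFFF)
    return header + payload


def demultiplex(connection_bytes: bytes) -> dict[int, bytes]:
    """Reassemble DATA payloads per stream from interleaved frames."""
    streams: dict[int, bytearray] = defaultdict(bytearray)
    pos = 0
    while pos < len(connection_bytes):
        hi, lo, frame_type, _flags, stream_id = FRAME_HEADER.unpack_from(connection_bytes, pos)
        length = (hi << 16) | lo
        payload = connection_bytes[pos + FRAME_HEADER.size:pos + FRAME_HEADER.size + length]
        if frame_type == DATA:
            streams[stream_id & 0x7FFFFFFF] += payload
        pos += FRAME_HEADER.size + length
    return {sid: bytes(data) for sid, data in streams.items()}


# Two concurrent calls (client-initiated streams use odd IDs) sharing one connection.
connection = (
    encode_frame(DATA, 0, 1, b"hel")
    + encode_frame(DATA, 0, 3, b"wor")
    + encode_frame(DATA, END_STREAM, 1, b"lo")
    + encode_frame(DATA, END_STREAM, 3, b"ld")
)
print(demultiplex(connection))  # {1: b'hello', 3: b'world'}
```

Because each frame names its stream, a slow response on one stream does not block frames for another stream at the HTTP level.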

Contract-First APIs

Contract-first APIs in HTTP typically use OpenAPI specifications to define RESTful endpoints, ensuring clear, language-agnostic service contracts and broad interoperability. gRPC, using Protocol Buffers, offers a strongly typed, binary contract-first approach that enhances performance and enables automatic code generation of client and server stubs in multiple languages.

Wire Protocol Efficiency

gRPC uses Protocol Buffers, a binary serialization format that significantly reduces message size and parsing time compared with the text-based JSON commonly sent over HTTP/1.1, resulting in better wire protocol efficiency. This binary communication minimizes network latency and bandwidth usage, making gRPC more suitable for high-performance microservices and real-time applications.

Code Generation Stubs

gRPC relies on Protocol Buffers to generate strongly typed code stubs across multiple programming languages, enabling seamless serialization and remote procedure call implementation. HTTP APIs typically require manual work or third-party tools to generate client libraries, often resulting in less consistent and less type-safe code than gRPC's automated stub generation.
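Generated gRPC stubs give each RPC a typed method whose request and response classes come from the .proto file. The sketch below hand-writes roughly what such a stub gives a caller, using dataclasses and an injected transport function. `GetUserRequest`, `User`, `UserServiceStub`, and `fake_transport` are hypothetical. Real generated code, for example from `protoc` with a gRPC plugin, differs in detail.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class GetUserRequest:
    user_id: int


@dataclass(frozen=True)
class User:
    user_id: int
    name: str


# Hypothetical transport signature: (method path, request object) -> response object.
Transport = Callable[[str, Any], Any]


class UserServiceStub:
    """Typed client surface: callers never build URLs or parse JSON by hand."""

    def __init__(self, transport: Transport) -> None:
        self._transport = transport

    def GetUser(self, request: GetUserRequest) -> User:
        response = self._transport("/users.v1.UserService/GetUser", request)
        if not isinstance(response, User):
            raise TypeError("transport returned an unexpected response type")
        return response


def fake_transport(path: str, request: Any) -> Any:
    """In-process stand-in for the network, used only to exercise the stub."""
    if path != "/users.v1.UserService/GetUser":
        raise ValueError(f"unknown method {path}")
    return User(user_id=request.user_id, name="Ada")


stub = UserServiceStub(fake_transport)
print(stub.GetUser(GetUserRequest(user_id=42)))  # User(user_id=42, name='Ada')
```

The value of code generation is that this boilerplate, including the method path and both message types, is derived from the contract rather than written and kept in sync by hand.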

Bidirectional Streaming

gRPC enables efficient bidirectional streaming by allowing both client and server to send and receive continuous streams of messages concurrently over a single HTTP/2 connection, reducing latency and improving real-time communication. HTTP/1.1 lacks native support for bidirectional streaming and requires workarounds such as WebSockets, which introduce additional complexity and overhead compared with gRPC's built-in streaming capabilities.
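The sketch below simulates bidirectional streaming in-process with `asyncio`. The client keeps sending messages while the server replies to each one as it arrives. Two queues stand in for the two directions of a single HTTP/2 stream, and a sentinel plays the role of half-closing one direction. The chat handler and its upper-casing behavior are hypothetical. A real gRPC library manages the stream and its flow control for you.

```python
import asyncio

_END = object()  # sentinel marking the end of one direction (like half-closing the stream)


async def chat_server(inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Reply to each client message as soon as it arrives."""
    while (message := await inbox.get()) is not _END:
        await outbox.put(message.upper())
    await outbox.put(_END)


async def chat_client(messages: list[str]) -> list[str]:
    to_server: asyncio.Queue = asyncio.Queue()
    to_client: asyncio.Queue = asyncio.Queue()
    server = asyncio.create_task(chat_server(to_server, to_client))

    async def send_all() -> None:
        for message in messages:
            await to_server.put(message)
            await asyncio.sleep(0)  # yield to the event loop so replies can interleave
        await to_server.put(_END)

    sender = asyncio.create_task(send_all())
    replies = []
    while (reply := await to_client.get()) is not _END:
        replies.append(reply)  # replies are read while the sender may still be sending
    await asyncio.gather(sender, server)
    return replies


print(asyncio.run(chat_client(["hi", "how are you?"])))  # ['HI', 'HOW ARE YOU?']
```

Neither side waits for the other to finish. Sending and receiving proceed concurrently, which is the property that plain HTTP/1.1 request-response cannot offer without WebSockets.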

Browser Incompatibility

HTTP/1.1 and HTTP/2 are natively supported by all modern browsers, enabling seamless communication for web applications. gRPC, however, depends on HTTP/2 features that browser APIs do not expose to page scripts, such as control over HTTP/2 frames and access to HTTP trailers, which gRPC uses to carry the call status. This leads to compatibility issues. To work around them, browser clients use gRPC-Web, a browser-compatible variant of the protocol, and a proxy translates gRPC-Web requests into native gRPC calls, allowing browsers to interact with gRPC services without native support.
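One detail the gRPC-Web translation has to handle is the call status. Native gRPC sends `grpc-status` in HTTP trailers, which browser code cannot read. gRPC-Web therefore moves the trailers into the response body as a final length-prefixed frame whose flag byte has its most significant bit set (0x80). The trailers inside that frame are encoded as HTTP/1-style header lines. The sketch below builds and recognizes such a frame. Treat the framing details as an assumption to check against the official gRPC-Web protocol document. The function names are hypothetical.

```python
import struct

HEADER = struct.Struct(">BI")  # flag byte + 4-byte big-endian length, as in gRPC framing
TRAILER_FLAG = 0x80            # MSB set: this frame carries trailers, not a message


def encode_trailer_frame(trailers: dict[str, str]) -> bytes:
    """Serialize trailers as 'name: value\\r\\n' lines inside a body frame."""
    block = "".join(f"{name}: {value}\r\n" for name, value in trailers.items()).encode("ascii")
    return HEADER.pack(TRAILER_FLAG, len(block)) + block


def parse_body_frames(body: bytes) -> tuple[list[bytes], dict[str, str]]:
    """Split a gRPC-Web response body into data messages and a trailer map."""
    messages: list[bytes] = []
    trailers: dict[str, str] = {}
    pos = 0
    while pos < len(body):
        flag, length = HEADER.unpack_from(body, pos)
        payload = body[pos + HEADER.size:pos + HEADER.size + length]
        pos += HEADER.size + length
        if flag & TRAILER_FLAG:
            for line in payload.decode("ascii").split("\r\n"):
                if line:
                    name, _, value = line.partition(":")
                    trailers[name.strip().lower()] = value.strip()
        else:
            messages.append(payload)
    return messages, trailers


body = HEADER.pack(0, 3) + b"abc" + encode_trailer_frame({"grpc-status": "0"})
print(parse_body_frames(body))  # ([b'abc'], {'grpc-status': '0'})
```

Because the status now travels in the body, a browser can read it with ordinary fetch or XHR calls. The proxy converts it back into real HTTP/2 trailers on the gRPC side.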

HTTP vs gRPC Infographic

industrydif.com
