A load balancer distributes network traffic across multiple servers to improve reliability and performance, while a service mesh manages communication between microservices, providing traffic management, security, and observability. Load balancers operate at the transport layer (Layer 4) or the application layer (Layer 7) and mainly handle inbound requests, whereas service meshes work primarily at the application layer and give fine-grained control over service-to-service communication. In practice the two are complementary: load balancers distribute external traffic, and service meshes manage internal microservice traffic.

Table of Comparison

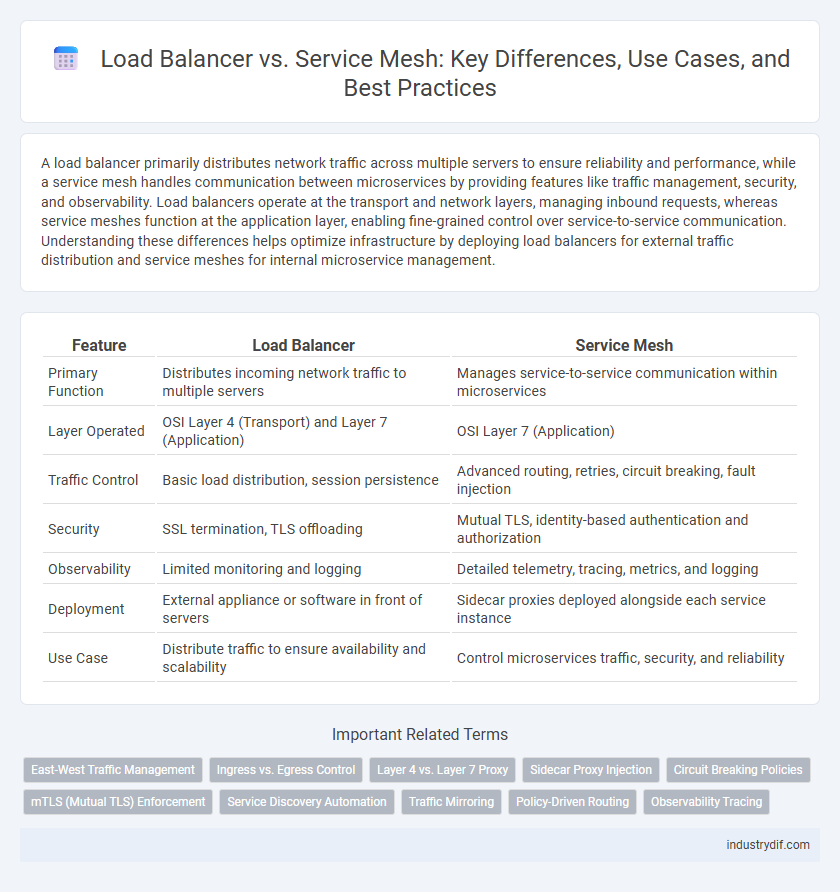

| Feature | Load Balancer | Service Mesh |
|---|---|---|
| Primary Function | Distributes incoming network traffic to multiple servers | Manages service-to-service communication within microservices |
| Layer Operated | OSI Layer 4 (Transport) and Layer 7 (Application) | OSI Layer 7 (Application) |
| Traffic Control | Basic load distribution, session persistence | Advanced routing, retries, circuit breaking, fault injection |
| Security | SSL termination, TLS offloading | Mutual TLS, identity-based authentication and authorization |
| Observability | Limited monitoring and logging | Detailed telemetry, tracing, metrics, and logging |
| Deployment | External appliance or software in front of servers | Sidecar proxies deployed alongside each service instance |
| Use Case | Distribute traffic to ensure availability and scalability | Control microservices traffic, security, and reliability |

Overview of Load Balancer and Service Mesh

Load balancers distribute network traffic across multiple servers to ensure high availability and improve application responsiveness, primarily managing Layer 4 and Layer 7 traffic. Service meshes provide a dedicated infrastructure layer for managing service-to-service communication, including features like traffic routing, load balancing, security, and observability within microservices architectures. While load balancers handle external traffic distribution, service meshes focus on internal service communication and management in complex distributed systems.

Core Functions and Architecture

Load balancers primarily distribute incoming network traffic across multiple servers to ensure reliability and optimal resource use, operating at the transport layer (Layer 4) or the application layer (Layer 7). A service mesh provides granular control over service-to-service communication within microservices architectures, managing traffic routing, security policies, and observability through sidecar proxies at the application layer. While load balancers handle external client-to-server load distribution, service meshes enable fine-grained, internal service communication and resilience features.

Traffic Management Capabilities

Load balancers distribute incoming network traffic across multiple servers to optimize resource use, minimize response time, and prevent overload, operating at the transport layer (Layer 4) or the application layer (Layer 7). Service meshes provide more granular traffic management by enabling features like dynamic routing, retries, circuit breaking, and telemetry at the application layer within microservices architectures. Unlike load balancers, service meshes offer fine-grained control over service-to-service communication, enhancing observability and security through built-in policies and per-request traffic shaping.
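
To make "load distribution" concrete, here is a minimal sketch of two common selection strategies, round robin and least connections. The class names and the backend names (`app-1`, `app-2`, `app-3`) are hypothetical and chosen only for illustration; real load balancers also track health checks and weights.

```python
import itertools


class RoundRobinBalancer:
    """Cycles through backends in a fixed order."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._cycle = itertools.cycle(list(backends))

    def pick(self):
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Sends each new connection to the backend with the fewest open ones."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        # Hypothetical bookkeeping: open connection count per backend.
        self.active = {backend: 0 for backend in backends}

    def acquire(self):
        # Ties are broken by insertion order of the dict.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        self.active[backend] -= 1


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
rr_picks = [rr.pick() for _ in range(6)]

lc = LeastConnectionsBalancer(["app-1", "app-2"])
first = lc.acquire()
second = lc.acquire()
lc.release(first)      # the first connection closes
third = lc.acquire()   # so its backend is the least loaded again
```

Round robin ignores how busy each backend is, which is why least connections is often preferred when request durations vary widely.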

Scalability and Performance Impact

Load balancers improve scalability by spreading incoming traffic across multiple servers so that no single server becomes a bottleneck. Service meshes manage inter-service communication in microservices architectures; their fine-grained traffic control, dynamic routing, and observability improve resilience and can reduce tail latency by steering requests away from failing or slow instances. The performance cost of a load balancer is generally limited to one extra network hop, whereas a service mesh adds processing overhead on every call because each request passes through sidecar proxies that perform encryption and collect telemetry. In exchange, the mesh enables more sophisticated traffic management and security at scale.

Observability and Monitoring Features

Load balancers provide basic traffic metrics such as connection counts, request rates, and response times, offering limited observability from the edge of the system. In contrast, service meshes deliver comprehensive monitoring with deep insights into service-to-service communication, including detailed tracing, latency distribution, and error rates at the application layer. Enhanced observability in service meshes enables granular performance analysis and proactive troubleshooting through integrated telemetry, metrics aggregation, and distributed tracing capabilities.
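
As a rough illustration of the per-route telemetry described above, the following sketch aggregates request counts, error rates, and latency percentiles for each source and destination pair. `RouteMetrics`, the service names, and the choice to count only 5xx statuses as errors are assumptions made for this example, not the behavior of any particular mesh.

```python
import math
from collections import defaultdict


class RouteMetrics:
    """Hypothetical in-memory aggregator keyed by (source, destination)."""

    def __init__(self):
        self._latencies = defaultdict(list)
        self._errors = defaultdict(int)

    def record(self, source, destination, latency_ms, status):
        key = (source, destination)
        self._latencies[key].append(latency_ms)
        if status >= 500:  # assumption: only server errors count as failures
            self._errors[key] += 1

    def summary(self, source, destination):
        key = (source, destination)
        samples = sorted(self._latencies.get(key, []))
        if not samples:
            return None

        def percentile(p):
            # Nearest-rank percentile over the recorded samples.
            rank = max(1, math.ceil(p / 100 * len(samples)))
            return samples[rank - 1]

        return {
            "requests": len(samples),
            "error_rate": self._errors.get(key, 0) / len(samples),
            "p50_ms": percentile(50),
            "p99_ms": percentile(99),
        }


metrics = RouteMetrics()
for latency_ms, status in [(10, 200), (20, 200), (30, 200), (400, 503)]:
    metrics.record("checkout", "payments", latency_ms, status)
report = metrics.summary("checkout", "payments")
```

Keying the data by caller and callee is what distinguishes mesh-style telemetry from edge metrics: a load balancer sees only the client-facing hop, not each internal route.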

Security Enhancements and Policy Enforcement

Load balancers contribute to security mainly at the edge: they offer SSL/TLS termination and basic access control, and by spreading traffic across servers they help absorb load spikes, which can blunt some denial-of-service attacks. Service meshes provide advanced security enhancements through mutual TLS for encrypted service-to-service communication, fine-grained policy enforcement, and dynamic access control within microservices architectures. Combining a service mesh with load balancers pairs external traffic management with internal communication protection and policy governance.

Deployment Complexity and Overhead

Load balancers offer straightforward deployment with minimal overhead, primarily handling traffic distribution based on layer 4 or 7 protocols. Service meshes introduce greater complexity by deploying a network of sidecar proxies alongside each service instance, resulting in increased resource consumption and operational challenges. This additional deployment overhead can impact scalability and requires comprehensive configuration management to ensure reliability and security.

Integration with Cloud-Native Ecosystems

Load balancers integrate seamlessly with cloud-native ecosystems by efficiently distributing incoming traffic across microservices, ensuring high availability and scalability. Service meshes provide deeper integration by managing service-to-service communication, offering fine-grained traffic control, observability, and security features like mutual TLS within Kubernetes environments. Both tools complement each other in cloud-native architectures, with load balancers handling edge traffic and service meshes optimizing internal service interactions.

Use Cases and Best Practices

Load balancers optimize traffic distribution across servers and suit applications that need scalable, highly available access without deep control over internal communication. Service meshes enhance service-to-service communication with features like service discovery, mutual authentication, observability, and resilience, and suit complex microservices architectures that need fine-grained policy enforcement. Best practices recommend using load balancers for north-south traffic management and service meshes for east-west service interactions within cloud-native environments.

Choosing Between Load Balancer and Service Mesh

Choosing between a load balancer and a service mesh depends on the complexity and requirements of your network traffic management. Load balancers efficiently distribute incoming traffic across servers to ensure high availability and scalability, making them ideal for handling external traffic and simple routing scenarios. Service meshes provide advanced observability, security, and fine-grained traffic control within microservices architectures, making them suitable for internal service-to-service communication in complex distributed systems.

Related Important Terms

East-West Traffic Management

Load balancers distribute north-south traffic between clients and servers, while service meshes specialize in managing east-west traffic within microservices architectures by enabling fine-grained control, observability, and security for internal service-to-service communication. Service meshes leverage sidecar proxies to provide dynamic routing, load balancing, failure recovery, and telemetry collection directly at the application layer, enhancing resilience and performance within data center or cloud-native environments.

Ingress vs. Egress Control

Load balancers primarily provide ingress control by distributing incoming traffic across multiple servers to ensure high availability and reliability. Service meshes add control over outbound traffic: sidecar proxies intercept the calls each service makes, and some meshes can also route traffic leaving the mesh through a dedicated egress gateway. This allows fine-grained policy enforcement and observability on egress traffic, complementing the ingress traffic management capabilities of load balancers.

Layer 4 vs. Layer 7 Proxy

Layer 4 load balancers route on IP addresses and ports, distributing network connections without inspecting their contents; Layer 7 load balancers also exist and route on HTTP attributes. Service meshes function mainly at Layer 7, providing advanced application-layer routing, security, and observability through proxy sidecars. Layer 7 proxies enable granular control over HTTP and HTTPS traffic, facilitating features like path-based and header-based routing, traffic shaping, and retry policies that a Layer 4 load balancer cannot perform because it never parses the request.
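
The difference in what each layer can see is easiest to show side by side. In this sketch, `pick_l4` may use only connection addresses and ports, while `pick_l7` inspects the HTTP path and headers. The backend addresses, route table, service names, and the `x-canary` header are all hypothetical.

```python
import hashlib

# Hypothetical backend pool for the Layer 4 example.
BACKENDS = ["10.0.0.11:8080", "10.0.0.12:8080", "10.0.0.13:8080"]

# Hypothetical path-prefix routes for the Layer 7 example.
L7_ROUTES = [
    ("/api/orders", "orders-service"),
    ("/api/users", "users-service"),
]


def pick_l4(src_ip, src_port, dst_ip, dst_port, backends=BACKENDS):
    """Layer 4 view: only addresses and ports are visible."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    # Hash the connection tuple so one connection always maps to one backend.
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


def pick_l7(path, headers, default="web-frontend"):
    """Layer 7 view: the HTTP request itself drives the decision."""
    if headers.get("x-canary") == "true":
        return "canary-pool"
    for prefix, service in L7_ROUTES:
        if path.startswith(prefix):
            return service
    return default
```

The Layer 4 function cannot send `/api/orders` and `/api/users` to different services because the path is simply not part of its input.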

Sidecar Proxy Injection

Sidecar proxy injection in service mesh architecture enables dynamic traffic management, observability, and security at the microservice level, contrasting with traditional load balancers that manage traffic primarily at the network edge. This per-instance proxy enhances granular control over east-west traffic, allowing seamless policy enforcement and resilience without modifying application code.

Circuit Breaking Policies

Load balancers manage traffic distribution by directing requests to healthy servers, but lack granular control over microservices communication, whereas service meshes provide advanced circuit breaking policies that enable fine-tuned failure handling and automatic retries at the service-to-service level. Circuit breaking in service meshes minimizes cascading failures and enhances system resilience by dynamically isolating unhealthy service instances based on real-time metrics such as error rates, latency, and request volume.
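
The circuit breaking pattern can be sketched in a few lines. This is a simplified version that trips on consecutive failures only; the thresholds, the state names, and the injectable `clock` parameter are assumptions for illustration, and real mesh proxies typically also consider latency and request volume.

```python
import time


class CircuitBreaker:
    """Minimal closed / open / half-open breaker based on consecutive failures."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock          # injectable so the behavior can be tested
        self._failures = 0
        self._opened_at = None

    @property
    def state(self):
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"       # allow a trial request through
        return "open"

    def call(self, func, *args, **kwargs):
        if self.state == "open":
            raise RuntimeError("circuit open: request rejected without calling upstream")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # A failed trial request, or too many failures, (re)opens the circuit.
            if self.state == "half-open" or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Rejecting calls immediately while the circuit is open is what prevents a struggling service from being flooded with requests that would only time out and tie up its callers.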

mTLS (Mutual TLS) Enforcement

Load balancers primarily handle traffic distribution and do not enforce mTLS between services by default; securing service-to-service traffic requires separate configuration. Service meshes provide built-in mTLS enforcement: the mesh issues and rotates certificates, and the sidecar proxies perform mutual authentication and encryption transparently, so services are secured across the architecture without changes to application code.
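
What distinguishes mutual TLS from ordinary TLS is that the server also demands and verifies a client certificate. The sketch below shows that setting using Python's standard `ssl` module; the function names and the optional file-path parameters are hypothetical, and a mesh sidecar would manage the certificates itself rather than read them from paths supplied by the application.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Server side: present a certificate and require one from the client."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # This line is what makes the TLS "mutual": clients without a
    # certificate trusted by ca_file fail the handshake.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)
    return ctx


def build_mtls_client_context(cert_file=None, key_file=None, ca_file=None):
    """Client side: verify the server and present our own certificate."""
    # PROTOCOL_TLS_CLIENT enables certificate and hostname checks by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)
    return ctx


server_ctx = build_mtls_server_context()
client_ctx = build_mtls_client_context()
```

Doing this by hand for every service, including certificate rotation, is the operational burden that a mesh's automated mTLS is meant to remove.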

Service Discovery Automation

Service mesh automates service discovery by dynamically detecting and updating service endpoints through built-in control plane mechanisms, eliminating manual configuration and improving resilience. In contrast, traditional load balancers require static endpoint definitions or external service registries, limiting real-time adaptability and scaling efficiency.
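
A toy registry illustrates the dynamic endpoint tracking described above: instances report in with heartbeats, and endpoints that stop reporting drop out of the list. `ServiceRegistry`, the TTL value, and the addresses are hypothetical; an actual control plane usually learns endpoints from the orchestrator rather than from heartbeats sent by the application.

```python
import time


class ServiceRegistry:
    """Hypothetical registry that expires endpoints missing recent heartbeats."""

    def __init__(self, ttl=15.0, clock=time.monotonic):
        self.ttl = ttl
        self._clock = clock      # injectable so expiry can be tested
        self._instances = {}     # service name -> {address: last heartbeat time}

    def heartbeat(self, service, address):
        # The first heartbeat registers the instance; later ones refresh it.
        self._instances.setdefault(service, {})[address] = self._clock()

    def deregister(self, service, address):
        self._instances.get(service, {}).pop(address, None)

    def endpoints(self, service):
        # Only instances seen within the TTL window are returned.
        cutoff = self._clock() - self.ttl
        live = self._instances.get(service, {})
        return sorted(addr for addr, seen in live.items() if seen >= cutoff)
```

With static endpoint definitions, removing a dead instance requires a configuration change; here it disappears on its own once its heartbeats stop.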

Traffic Mirroring

Traffic mirroring in load balancers duplicates live traffic to a secondary destination for testing, and the mirrored responses are discarded so production behavior is unaffected. Service mesh traffic mirroring applies the same idea at the level of individual service-to-service routes, so a new version of a service can be observed and debugged under real traffic before any user requests are shifted to it.
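
The core rule of mirroring is that the caller only ever sees the primary response. The sketch below shows that rule with a hypothetical `handle_with_mirror` helper; it calls the shadow synchronously for simplicity, whereas real proxies typically send the mirrored copy without waiting for it.

```python
def handle_with_mirror(request, primary, shadow, mirror_log=None):
    """Serve from `primary`; send a copy of the request to `shadow`."""
    response = primary(request)
    try:
        # The shadow gets its own copy so it cannot mutate the live request.
        shadow_response = shadow(dict(request))
        outcome = shadow_response
    except Exception as exc:
        # A failing shadow must never affect the caller.
        outcome = exc
    if mirror_log is not None:
        # Keep both results so the two versions can be compared offline.
        mirror_log.append((response, outcome))
    return response


def stable_version(request):
    return {"status": 200, "body": "v1:" + request["path"]}


def candidate_version(request):
    raise RuntimeError("bug in the new version")


log = []
reply = handle_with_mirror({"path": "/cart"}, stable_version, candidate_version, log)
```

Here the candidate version crashes, yet the caller still receives the stable response, and the failure is captured in the log for later inspection.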

Policy-Driven Routing

Load balancers primarily distribute traffic using relatively static rules, such as source IP hashing, session persistence, and health checks, whereas service meshes enable granular, policy-driven routing that includes dynamic criteria like user identity, request content, and real-time telemetry data. Service mesh architectures use sidecar proxies to enforce routing policies, providing advanced features like traffic segmentation, canary deployments, and circuit breaking, which are not typically achievable with traditional load balancers.
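
A policy-driven router can be approximated as an ordered list of rules, each with optional header matches and weighted destinations. The rule format, the `x-user-group` header, and the `reviews-v1` and `reviews-v2` names are assumptions invented for this sketch and do not follow any specific mesh's configuration schema.

```python
import random

# Hypothetical policy: beta users always get v2; everyone else gets a 90/10 canary split.
RULES = [
    {"match": {"x-user-group": "beta"}, "destinations": [("reviews-v2", 100)]},
    {"destinations": [("reviews-v1", 90), ("reviews-v2", 10)]},
]


def route(headers, rules=RULES, rng=random.random):
    """Return the destination chosen by the first rule whose match applies."""
    for rule in rules:
        match = rule.get("match", {})
        if all(headers.get(name) == value for name, value in match.items()):
            destinations = rule["destinations"]
            # Weighted choice: walk the list until the roll falls inside a weight.
            roll = rng() * sum(weight for _, weight in destinations)
            for name, weight in destinations:
                roll -= weight
                if roll < 0:
                    return name
            return destinations[-1][0]
    raise LookupError("no routing rule matched")
```

Shifting more traffic to the new version is then a matter of editing the weights in the policy, not of redeploying either service.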

Observability Tracing

Load balancers distribute network traffic efficiently but provide limited observability and tracing capabilities compared to service meshes, which offer deep, application-level telemetry and end-to-end tracing across microservices through integrated sidecar proxies. Service meshes enable granular metrics, logs, and distributed tracing data, facilitating enhanced monitoring and debugging in complex microservice architectures beyond traditional load balancing.
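
End-to-end tracing depends on every hop carrying the same trace identifier. The sketch below assumes the W3C Trace Context `traceparent` header format (`version-traceid-spanid-flags`); the helper name `start_or_continue_trace` is hypothetical. Services generally still need to forward the incoming header on their outbound calls for the spans to join into one trace.

```python
import secrets


def start_or_continue_trace(headers):
    """Create a span for this hop and return it with the outgoing headers."""
    parent = headers.get("traceparent")
    if parent:
        # Continue the caller's trace: keep the trace id, remember the parent span.
        version, trace_id, parent_span_id, flags = parent.split("-")
    else:
        # First hop: start a new trace with a random 16-byte id.
        version, trace_id, parent_span_id, flags = "00", secrets.token_hex(16), None, "01"
    span_id = secrets.token_hex(8)
    outgoing = dict(headers)
    outgoing["traceparent"] = f"{version}-{trace_id}-{span_id}-{flags}"
    span = {"trace_id": trace_id, "span_id": span_id, "parent_span_id": parent_span_id}
    return span, outgoing


# Two hops: an edge proxy, then the sidecar of a downstream service.
edge_span, edge_headers = start_or_continue_trace({"host": "shop.example"})
inner_span, inner_headers = start_or_continue_trace(edge_headers)
```

Because both spans share one trace id and the second records the first as its parent, a tracing backend can reassemble the full request path across services.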

Load Balancer vs Service Mesh Infographic

industrydif.com
