Batch processing involves collecting large volumes of data over time and processing them in a single, scheduled run, which is ideal for complex analytics requiring comprehensive datasets. Stream processing handles data continuously and in real-time, enabling immediate analysis and quicker decision-making for applications like fraud detection and live monitoring. Choosing between batch and stream processing depends on factors such as data velocity, latency requirements, and the specific use case needs.

Table of Comparison

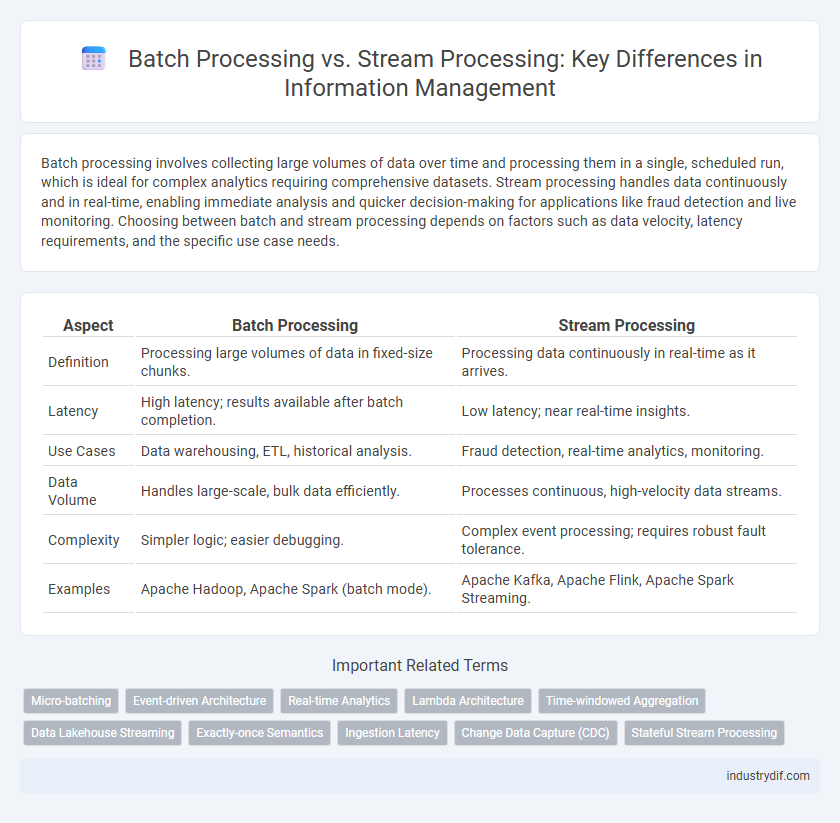

| Aspect | Batch Processing | Stream Processing |

|---|---|---|

| Definition | Processing large volumes of data in fixed-size chunks. | Processing data continuously in real-time as it arrives. |

| Latency | High latency; results available after batch completion. | Low latency; near real-time insights. |

| Use Cases | Data warehousing, ETL, historical analysis. | Fraud detection, real-time analytics, monitoring. |

| Data Volume | Handles large-scale, bulk data efficiently. | Processes continuous, high-velocity data streams. |

| Complexity | Simpler logic; easier debugging. | Complex event processing; requires robust fault tolerance. |

| Examples | Apache Hadoop, Apache Spark (batch mode). | Apache Kafka, Apache Flink, Apache Spark Streaming. |

Introduction to Batch and Stream Processing

Batch processing involves handling large volumes of data collected over a period, executing jobs in scheduled, fixed-size groups to optimize resource usage and throughput. Stream processing analyzes data continuously in real-time, enabling immediate insights and rapid response to events by processing data as it arrives. Both techniques serve distinct purposes: batch processing excels in scenarios requiring intensive computation on historical data, while stream processing suits applications demanding low latency and instantaneous data analysis.

Key Differences Between Batch and Stream Processing

Batch processing handles large volumes of data collected over time and processes them as a single unit, making it ideal for complex, resource-intensive tasks with latency tolerance. Stream processing deals with real-time data, continuously analyzing and responding to input events with minimal delay, suited for time-sensitive applications. Key differences include processing speed, data handling, and application use cases, where batch prioritizes throughput and completeness, and stream targets low latency and real-time insights.

Core Concepts and Terminology

Batch processing refers to the execution of a series of jobs or tasks on a large dataset at once, enabling efficient handling of massive volumes of data with high throughput. Stream processing involves continuous input and real-time processing of data flows, emphasizing low latency and immediate analytics on individual data points or small data windows. Key terminology includes batching, latency, throughput, event time, windowing, and stateful vs stateless processing, which distinguish the operational models and use cases of batch and stream processing systems.

Use Cases: When to Choose Batch vs Stream

Batch processing suits scenarios requiring large-scale data aggregation and historical analysis such as end-of-day reporting, payroll systems, and data warehousing. Stream processing excels in real-time decision-making environments like fraud detection, sensor data monitoring, and live user activity tracking. Selecting between batch and stream processing depends on latency tolerance, data volume, and the need for immediate insights versus comprehensive historical analysis.

Data Latency and Processing Speed

Batch processing handles large volumes of data by processing it in chunks at scheduled intervals, resulting in higher data latency but efficient throughput for complex computations. Stream processing processes data in real-time as it arrives, significantly reducing data latency and enabling faster decision-making with lower processing delay. Trade-offs between these methods depend on the need for immediate insights versus handling extensive data sets efficiently.

Scalability and System Requirements

Batch processing systems require substantial computational resources to handle large volumes of data in scheduled intervals, often necessitating robust storage and powerful CPUs for scalability. Stream processing platforms are designed for real-time data ingestion and analysis, demanding low-latency networks and distributed architectures to scale effectively. Scalability in stream processing typically relies on horizontal scaling to manage continuous data flows, whereas batch processing scalability depends on optimizing job scheduling and resource allocation.

Real-World Industry Applications

Batch processing excels in industries like banking and insurance by handling large volumes of transaction data for end-of-day reports and risk analysis. Stream processing powers real-time analytics in sectors such as e-commerce and telecommunications, enabling immediate fraud detection and personalized customer experiences. Manufacturing leverages both methods for predictive maintenance, combining batch analysis of historical data with real-time sensor monitoring.

Challenges and Limitations

Batch processing struggles with latency issues, making it unsuitable for real-time analytics and time-sensitive applications. Stream processing faces challenges in handling data consistency and fault tolerance due to its continuous, unbounded data flow. Both methods have limitations in scalability and require complex infrastructure to manage large-scale data efficiently.

Batch and Stream Processing Technologies

Batch processing technologies like Apache Hadoop and Apache Spark excel at handling large volumes of data by processing it in fixed-size chunks, enabling efficient analysis and storage. Stream processing technologies such as Apache Kafka, Apache Flink, and Apache Storm facilitate real-time data processing by continuously ingesting and analyzing data streams, supporting low-latency decision-making. Both approaches leverage distributed computing frameworks, but batch processing emphasizes throughput and completeness, while stream processing prioritizes immediacy and event-driven responses.

Future Trends in Data Processing

Future trends in data processing emphasize the integration of batch processing and stream processing to harness real-time analytics alongside large-scale data management. Advances in edge computing, AI-driven data orchestration, and hybrid cloud architectures are driving more efficient, scalable, and low-latency processing frameworks. The rise of serverless computing and Apache Kafka-based platforms further accelerates adaptive processing models that meet the growing demands of IoT and big data applications.

Related Important Terms

Micro-batching

Micro-batching in batch processing divides data into small, manageable chunks processed sequentially, offering lower latency compared to traditional batch jobs. Stream processing, by contrast, handles data continuously in real-time, but micro-batching bridges the gap by enabling near-real-time analysis with higher throughput and fault tolerance.

Event-driven Architecture

Batch processing in event-driven architecture involves collecting and processing large volumes of events at scheduled intervals, optimizing throughput for complex analytical tasks, whereas stream processing handles data in real-time by continuously ingesting and analyzing individual events to support immediate decision-making and low-latency responses in dynamic systems. Event-driven architecture leverages message brokers and event queues to enable asynchronous communication, with stream processing frameworks like Apache Kafka and Apache Flink providing scalable, fault-tolerant solutions for continuous event handling.

Real-time Analytics

Batch processing handles large volumes of data collected over a period, making it suitable for complex, historical data analysis but less effective for real-time analytics. Stream processing enables continuous data ingestion and immediate analysis, providing real-time insights essential for applications like fraud detection, IoT monitoring, and dynamic pricing.

Lambda Architecture

Lambda Architecture combines batch processing and stream processing to achieve low-latency updates and fault tolerance by handling large data sets in batch layers while processing real-time data in speed layers. This hybrid approach leverages distributed computing frameworks like Hadoop for batch processing and Apache Kafka or Apache Storm for stream processing to ensure comprehensive data analytics and scalability.

Time-windowed Aggregation

Batch processing aggregates data over fixed time intervals, enabling comprehensive analysis of complete data sets after collection, while stream processing performs real-time time-windowed aggregation, allowing continuous analysis of data as it arrives with minimal latency. Time-windowed aggregation in stream processing supports sliding or tumbling windows, facilitating dynamic insights and immediate response in use cases such as fraud detection and sensor monitoring.

Data Lakehouse Streaming

Batch processing efficiently handles large volumes of stored data in scheduled intervals, making it ideal for comprehensive historical analysis within a data lakehouse environment. Stream processing enables real-time data ingestion and immediate analytics, supporting dynamic data lakehouse architectures that require low-latency insights and continuous updates.

Exactly-once Semantics

Batch processing guarantees exactly-once semantics by processing large data volumes in discrete, atomic jobs that prevent duplication through checkpointing and commit protocols. Stream processing achieves exactly-once semantics using stateful operators and distributed snapshots, enabling real-time handling of event streams with fault-tolerant recovery mechanisms.

Ingestion Latency

Batch processing exhibits higher ingestion latency as data is collected and processed in large chunks at scheduled intervals, causing delays between data arrival and analysis. Stream processing minimizes ingestion latency by continuously ingesting and analyzing data in real-time, enabling faster decision-making and immediate insights.

Change Data Capture (CDC)

Change Data Capture (CDC) enables real-time data integration by capturing and streaming database changes, making it a core component of stream processing systems that require low-latency data updates. In contrast, batch processing aggregates and processes large volumes of data at scheduled intervals, which can introduce delays in reflecting data changes compared to CDC-powered stream processing architectures.

Stateful Stream Processing

Stateful stream processing enables real-time analytics by maintaining state information across events, unlike batch processing which handles large volumes of data in discrete intervals. This continuous computation model supports dynamic data updates, fault tolerance, and low-latency processing essential for applications such as fraud detection and live monitoring.

Batch Processing vs Stream Processing Infographic

industrydif.com

industrydif.com