Legacy systems often rely on fixed infrastructure and require extensive maintenance, which limits scalability and agility. Serverless computing runs code as cloud-managed functions whose resources are allocated automatically, so capacity scales on demand without manual intervention. By abstracting away infrastructure complexity, a transition to serverless architectures can reduce operational costs and accelerate development cycles.

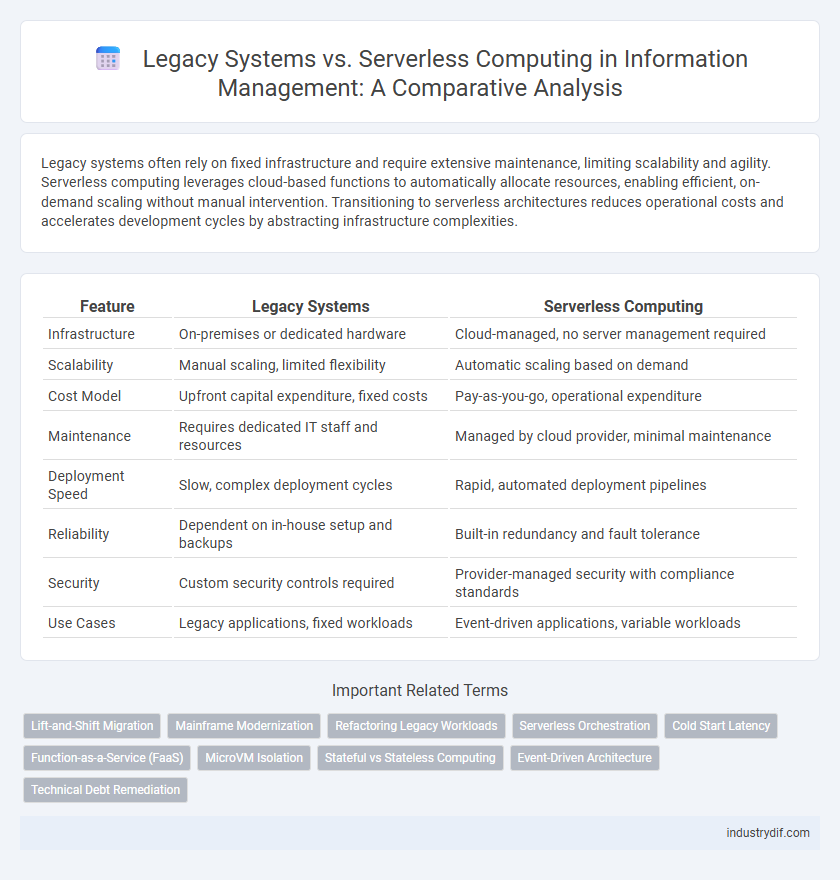

Table of Comparison

| Feature | Legacy Systems | Serverless Computing |
|---|---|---|
| Infrastructure | On-premises or dedicated hardware | Cloud-managed, no server management required |
| Scalability | Manual scaling, limited flexibility | Automatic scaling based on demand |
| Cost Model | Upfront capital expenditure, fixed costs | Pay-as-you-go, operational expenditure |
| Maintenance | Requires dedicated IT staff and resources | Infrastructure managed by cloud provider, minimal maintenance |
| Deployment Speed | Slow, complex deployment cycles | Rapid, automated deployment pipelines |
| Reliability | Dependent on in-house setup and backups | Built-in redundancy and fault tolerance |
| Security | Custom security controls required | Shared responsibility: provider secures the infrastructure, customer secures code, data, and access configuration |
| Use Cases | Legacy applications, fixed workloads | Event-driven applications, variable workloads |

Introduction to Legacy Systems and Serverless Computing

Legacy systems are older computing infrastructures and software that remain in use because they support critical business operations, despite limited scalability and demanding maintenance. Serverless computing is a cloud-native execution model in which the cloud provider dynamically manages server resources, letting developers focus on code rather than on servers. Moving from legacy systems to serverless architectures can improve agility, reduce operational costs, and enhance scalability.

Key Characteristics of Legacy Systems

Legacy systems typically rely on outdated hardware and software architectures that are rigid, difficult to scale, and costly to maintain. These systems often use monolithic designs with tightly coupled components, resulting in limited flexibility and slow integration with modern applications. Data storage in legacy systems is usually centralized, which can lead to bottlenecks and challenges in handling large-scale, real-time data processing.

Core Features of Serverless Computing

Serverless computing offers automatic scaling, event-driven execution, and seamless resource management, eliminating the server provisioning and maintenance that legacy systems require. It lets developers run code in response to events without managing infrastructure, and it improves cost efficiency by billing per invocation and for actual execution time rather than for idle capacity. Core features include built-in fault tolerance, stateless function execution, and simplified deployment through managed cloud services such as AWS Lambda, Azure Functions, and Google Cloud Functions.
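A minimal sketch of a stateless, event-driven function, assuming the Python handler convention used by AWS Lambda (a function taking `event` and `context`). The function name `handle_order` and the event shape (`order_id` plus a list of `items`) are hypothetical. The handler derives its result only from the incoming event, which is what allows the platform to run many copies in parallel.

```python
import json
from typing import Any


def handle_order(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Hypothetical order-total function in the Lambda-style (event, context) shape.

    It keeps no state between calls: everything it needs arrives in `event`.
    """
    items = event.get("items", [])
    # Sum quantity * unit price for each line item in the event payload.
    total = sum(item["qty"] * item["unit_price"] for item in items)
    return {
        "statusCode": 200,
        "body": json.dumps({"order_id": event.get("order_id"), "total": round(total, 2)}),
    }


if __name__ == "__main__":
    # Local invocation with a sample event; on a FaaS platform the runtime calls the handler.
    sample = {"order_id": "A-1", "items": [{"qty": 2, "unit_price": 9.5}, {"qty": 1, "unit_price": 3.0}]}
    print(handle_order(sample))
```

Because the handler is an ordinary function, it can be unit-tested locally with sample events before deployment.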

Migration Challenges: Legacy to Serverless

Migrating legacy systems to serverless computing poses significant challenges such as complex code refactoring, dependency management, and data migration across distributed architectures. Incompatible legacy applications often require redesigning to fit event-driven serverless environments, increasing development effort and time. Ensuring security compliance and minimizing downtime during transition further complicate the migration process for enterprises adopting serverless technology.

Performance and Scalability Comparison

Legacy systems often struggle with limited scalability and slower performance because of fixed hardware resources and monolithic architectures. Serverless computing dynamically allocates resources in response to demand, enabling rapid scaling without over-provisioning. This elasticity lets serverless models handle variable workloads efficiently and optimize resource utilization, although cold starts can add latency for functions that have been idle.
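A common back-of-the-envelope way to reason about demand-driven scaling is Little's law: the number of concurrently running function instances is roughly the request rate multiplied by the average execution time. The sketch below uses hypothetical hourly traffic figures and assumes each instance handles one request at a time. It compares what an elastic platform would need each hour with a fixed fleet sized for the peak.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Little's law: in-flight requests = arrival rate x time in system (rounded up)."""
    return math.ceil(requests_per_second * avg_duration_s)


# Hypothetical hourly request rates (req/s) for a spiky workload.
hourly_rps = [5, 5, 8, 40, 120, 60, 10, 5]
avg_duration_s = 0.25  # hypothetical average function run time

demand = [required_concurrency(r, avg_duration_s) for r in hourly_rps]
fixed_capacity = max(demand)  # a fixed fleet must be sized for the peak hour

# Capacity units that sit idle each hour when the fleet is sized for peak.
idle = [fixed_capacity - d for d in demand]

print("instances needed per hour:", demand)
print("fixed fleet size:", fixed_capacity)
print("idle instance-hours with fixed capacity:", sum(idle))
```

The idle total is the over-provisioning that elastic allocation avoids; the spikier the traffic, the larger it grows.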

Security Considerations in Both Approaches

Legacy systems often rely on outdated security protocols and on-site hardware, which increases their exposure to cyberattacks. Serverless computing, by contrast, benefits from provider-managed security measures such as automated patching, encryption, and identity and access management (IAM). However, serverless environments introduce their own risks, such as exposure of data carried in function events and reliance on the cloud provider's security controls. A thorough risk assessment must account for both the rigid architecture of legacy systems and the shared responsibility model of serverless platforms.
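Function input arrives as events that may originate outside the trust boundary, so validating the payload before using it is one common mitigation for event-data risks. The sketch assumes a hypothetical payment event with `user_id` and `amount` fields. The allowed format and the amount limit are illustrative assumptions. Anything that does not match is rejected, and only the expected fields are passed on.

```python
import re
from typing import Any

USER_ID_PATTERN = re.compile(r"[a-z0-9_-]{3,32}")  # hypothetical allowed format


class InvalidEvent(ValueError):
    """Raised when an incoming event does not match the expected schema."""


def validate_payment_event(event: dict[str, Any]) -> dict[str, Any]:
    """Return a cleaned copy of the event, or raise InvalidEvent."""
    user_id = event.get("user_id")
    amount = event.get("amount")
    if not isinstance(user_id, str) or not USER_ID_PATTERN.fullmatch(user_id):
        raise InvalidEvent("user_id missing or malformed")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)) or not 0 < amount <= 10_000:
        raise InvalidEvent("amount missing or out of range")
    # Pass on only the fields the function needs; drop anything else the event carried.
    return {"user_id": user_id, "amount": float(amount)}
```

An allow-list check like this complements, rather than replaces, least-privilege permissions on the function itself.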

Cost Analysis: Legacy vs Serverless Models

Legacy systems often incur high upfront capital expenses and ongoing maintenance costs, including hardware upgrades and specialized IT staff. Serverless computing shifts costs to a usage-based model, which can substantially reduce expenses by eliminating infrastructure management and matching resources to actual demand. This pay-as-you-go pricing is most cost-efficient for applications with variable or unpredictable workloads; for steady, high-volume workloads, always-on capacity can be cheaper.
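The trade-off can be sketched with simple arithmetic. All prices below are hypothetical placeholders, not any provider's current rates. The model follows the common FaaS pattern of a per-request charge plus a charge per GB-second of memory-time and compares it with a flat monthly cost for an always-on server. It ignores free tiers, data transfer, and staffing.

```python
# Hypothetical prices for illustration only; check your provider's price list.
PRICE_PER_MILLION_REQUESTS = 0.20   # USD per 1M invocations (assumed)
PRICE_PER_GB_SECOND = 0.0000167     # USD per GB-second of execution (assumed)
FIXED_SERVER_MONTHLY = 150.0        # USD per month for an always-on server (assumed)


def serverless_monthly_cost(requests: int, avg_duration_s: float, memory_gb: float) -> float:
    """Usage-based cost: request charge plus compute charge in GB-seconds."""
    request_cost = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    gb_seconds = requests * avg_duration_s * memory_gb
    return request_cost + gb_seconds * PRICE_PER_GB_SECOND


def break_even_requests(avg_duration_s: float, memory_gb: float) -> float:
    """Monthly request volume at which serverless costs the same as the fixed server."""
    cost_per_request = (PRICE_PER_MILLION_REQUESTS / 1_000_000
                        + avg_duration_s * memory_gb * PRICE_PER_GB_SECOND)
    return FIXED_SERVER_MONTHLY / cost_per_request


for monthly_requests in (1_000_000, 10_000_000, 100_000_000):
    cost = serverless_monthly_cost(monthly_requests, avg_duration_s=0.2, memory_gb=0.5)
    print(f"{monthly_requests:>11,} requests: serverless ${cost:,.2f} vs fixed ${FIXED_SERVER_MONTHLY:,.2f}")

print(f"break-even: ~{break_even_requests(0.2, 0.5):,.0f} requests/month")
```

With these assumed figures, serverless is far cheaper at low volume, and the break-even point is roughly 80 million requests per month. Above that, the always-on server wins, which illustrates why the advantage depends on workload shape.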

Integration and Interoperability Issues

Legacy systems often face significant integration and interoperability challenges due to outdated protocols and proprietary technologies, hindering seamless data exchange with modern platforms. Serverless computing leverages standardized APIs and cloud-native services, facilitating smoother integration and real-time interoperability across heterogeneous environments. Enterprises must address compatibility gaps and implement middleware solutions to bridge legacy infrastructure with serverless architectures effectively.
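One common form of such middleware is an adapter that translates a legacy record format into an event a serverless function can consume. The sketch assumes a hypothetical fixed-width customer record. The field names, column positions, and the `customer.updated` event type are invented for illustration. The adapter emits the record as JSON.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical fixed-width layout: (field name, start column, end column), 0-based, end exclusive.
LAYOUT = [
    ("customer_id", 0, 8),
    ("name", 8, 28),
    ("balance_cents", 28, 38),
]


@dataclass
class CustomerEvent:
    customer_id: str
    name: str
    balance: float


def parse_legacy_record(line: str) -> CustomerEvent:
    """Slice a fixed-width legacy record into named fields and normalize types."""
    raw = {name: line[start:end].strip() for name, start, end in LAYOUT}
    return CustomerEvent(
        customer_id=raw["customer_id"],
        name=raw["name"],
        balance=int(raw["balance_cents"]) / 100,  # assumed: cents stored as zero-padded digits
    )


def to_event_json(line: str) -> str:
    """Adapter output: a JSON event that a serverless function or queue can consume."""
    return json.dumps({"type": "customer.updated", "data": asdict(parse_legacy_record(line))})


if __name__ == "__main__":
    record = "00001234" + "ACME CORP".ljust(20) + "0000012550"
    print(to_event_json(record))
```

Keeping the layout in a data table rather than in parsing code makes it easier to update when the legacy format changes.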

Use Cases: When to Choose Legacy or Serverless

Legacy systems remain a good fit for organizations that require stable, heavily customized environments with strict data-residency and compliance needs. Serverless computing excels in scalable, event-driven applications such as microservices, IoT, and real-time data processing, where rapid deployment and cost efficiency are essential. The choice between legacy and serverless hinges on workload predictability, integration complexity, and agility requirements.

Future Trends in Enterprise IT Infrastructure

Future trends in enterprise IT infrastructure emphasize a shift from legacy systems to serverless computing, driven by demands for scalability, cost efficiency, and agility. Serverless platforms like AWS Lambda and Azure Functions enable enterprises to deploy applications without managing server infrastructure, promoting faster innovation cycles and reduced operational overhead. As artificial intelligence and edge computing integrate with serverless architectures, enterprises are poised to achieve enhanced performance and real-time data processing capabilities.

Related Important Terms

Lift-and-Shift Migration

Lift-and-shift migration involves rehosting legacy systems onto cloud infrastructure with minimal modifications, enabling faster deployment but often missing out on cloud-native scalability and cost benefits inherent in serverless computing. Serverless computing eliminates server management by executing code in response to events, offering automatic scaling and reduced operational overhead, which legacy systems rehosted via lift-and-shift may not fully leverage.

Mainframe Modernization

Legacy systems, particularly mainframes, often face challenges in scalability and maintenance costs, making mainframe modernization essential for digital transformation. Serverless computing offers a flexible, cost-effective alternative by enabling organizations to run applications without managing infrastructure, thus accelerating modernization efforts and improving operational efficiency.

Refactoring Legacy Workloads

Refactoring legacy workloads involves migrating monolithic applications to serverless architectures to enhance scalability, reduce operational costs, and improve deployment velocity. Leveraging cloud-native services such as AWS Lambda or Azure Functions streamlines workload modernization by decoupling tightly integrated legacy components into event-driven, microservices-based workflows.
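The text does not prescribe a specific refactoring method. One widely used approach is the strangler fig pattern: a routing facade sends selected operations to newly extracted functions while everything else still goes to the monolith, so components can be carved out one at a time. The sketch below uses hypothetical stand-in handlers.

```python
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], dict[str, Any]]


def legacy_monolith(request: dict[str, Any]) -> dict[str, Any]:
    """Stand-in for the existing monolith, which still serves every route not yet migrated."""
    return {"served_by": "legacy", "path": request["path"]}


def invoice_function(request: dict[str, Any]) -> dict[str, Any]:
    """Stand-in for a newly extracted serverless function."""
    return {"served_by": "serverless", "path": request["path"]}


class StranglerRouter:
    """Routes requests by path prefix; unmigrated paths fall through to the monolith."""

    def __init__(self, fallback: Handler) -> None:
        self.fallback = fallback
        self.routes: dict[str, Handler] = {}

    def migrate(self, prefix: str, handler: Handler) -> None:
        # Each call moves one slice of functionality out of the monolith.
        self.routes[prefix] = handler

    def handle(self, request: dict[str, Any]) -> dict[str, Any]:
        # Longest matching prefix wins, so more specific migrations take precedence.
        for prefix in sorted(self.routes, key=len, reverse=True):
            if request["path"].startswith(prefix):
                return self.routes[prefix](request)
        return self.fallback(request)


router = StranglerRouter(fallback=legacy_monolith)
router.migrate("/invoices", invoice_function)
print(router.handle({"path": "/invoices/42"})["served_by"])
print(router.handle({"path": "/customers/7"})["served_by"])
```

Because callers only see the facade, each migrated route can be switched back to the monolith if the new function misbehaves.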

Serverless Orchestration

Serverless orchestration automates the coordination of multiple cloud functions, replacing the rigid, hard-coded workflows of legacy systems with managed sequences of steps that scale more easily and reduce operational overhead. It relies on event-driven architectures and managed services such as AWS Step Functions or Azure Logic Apps to streamline complex processes without traditional infrastructure management.
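Managed orchestrators are usually configured declaratively rather than written as application code. The following is therefore only a conceptual sketch of their core behavior, not any service's API: run steps in order, pass each step's output to the next, and retry a failed step a bounded number of times. The `orchestrate` helper, step functions, and retry count are hypothetical. Real services also add delays between retries, which this sketch omits.

```python
from typing import Any, Callable

Step = Callable[[dict[str, Any]], dict[str, Any]]


def orchestrate(steps: list[tuple[str, Step]], payload: dict[str, Any], max_attempts: int = 3) -> dict[str, Any]:
    """Run steps sequentially, feeding each output into the next, retrying failed steps."""
    state = dict(payload)
    for name, step in steps:
        for attempt in range(1, max_attempts + 1):
            try:
                state = step(state)
                break  # step succeeded, move on to the next one
            except Exception as exc:
                if attempt == max_attempts:
                    raise RuntimeError(f"step {name!r} failed after {max_attempts} attempts") from exc
    return state


calls = {"charge": 0}


def validate(state: dict[str, Any]) -> dict[str, Any]:
    if state["amount"] <= 0:
        raise ValueError("amount must be positive")
    return {**state, "validated": True}


def charge(state: dict[str, Any]) -> dict[str, Any]:
    calls["charge"] += 1
    if calls["charge"] == 1:  # simulate a transient failure on the first attempt
        raise ConnectionError("payment service timeout")
    return {**state, "charged": True}


def notify(state: dict[str, Any]) -> dict[str, Any]:
    return {**state, "notified": True}


result = orchestrate([("validate", validate), ("charge", charge), ("notify", notify)], {"amount": 25})
print(result)
```

The `charge` step fails once and succeeds on retry, so the workflow completes without the individual functions knowing about each other.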

Cold Start Latency

Legacy systems generally avoid cold-start latency because their servers are always running, whereas serverless functions can incur noticeable delays when the platform must initialize a new execution environment, for example after a period of inactivity or when scaling out to handle more requests. Techniques for reducing cold-start latency include function warm-up, provisioned concurrency, and runtime-specific optimizations.
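The effect of idle periods, and of the warm-up technique mentioned above, can be illustrated with a small simulation. It models a single execution environment that is reclaimed after a fixed idle timeout. The timeout and latency figures are hypothetical, since providers do not guarantee a specific keep-alive period. Optional periodic pings stand in for scheduled warm-up invocations.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical figures for illustration; real values vary by provider, runtime, and package size.
IDLE_TIMEOUT_S = 600      # environment reclaimed after 10 minutes without traffic (assumed)
COLD_START_MS = 800       # extra initialization latency on a cold start (assumed)
WARM_LATENCY_MS = 20      # handler latency once warm (assumed)


@dataclass
class Environment:
    last_used: Optional[float] = None  # None means no environment exists yet

    def invoke(self, t: float) -> int:
        """Return latency in ms for an invocation at time t (seconds), updating warm state."""
        cold = self.last_used is None or t - self.last_used > IDLE_TIMEOUT_S
        self.last_used = t
        return WARM_LATENCY_MS + (COLD_START_MS if cold else 0)


def simulate(request_times: list[float], ping_every_s: Optional[float] = None) -> list[int]:
    """Latencies for real requests, optionally with periodic warm-up pings interleaved."""
    env = Environment()
    events = [(t, "request") for t in request_times]
    if ping_every_s is not None:
        k = 0
        while k * ping_every_s <= max(request_times):
            events.append((k * ping_every_s, "ping"))
            k += 1
    latencies = []
    for t, kind in sorted(events):
        latency = env.invoke(t)  # pings also reset the idle timer
        if kind == "request":
            latencies.append(latency)
    return latencies


requests = [100.0, 130.0, 1000.0, 2500.0, 2510.0]
print("no warm-up:  ", simulate(requests))
print("with pings:  ", simulate(requests, ping_every_s=300))
```

A single ping keeps only one environment warm; when traffic scales out to several concurrent environments, the extra ones still start cold, which is the gap provisioned concurrency is meant to close.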

Function-as-a-Service (FaaS)

Legacy systems often struggle with scalability and maintenance challenges due to their monolithic architectures, while serverless computing, particularly Function-as-a-Service (FaaS), enables developers to deploy discrete functions that automatically scale based on demand, reducing operational overhead. FaaS platforms like AWS Lambda, Azure Functions, and Google Cloud Functions provide event-driven execution with granular billing models, enhancing cost efficiency and agility compared to traditional legacy system deployments.

MicroVM Isolation

MicroVM isolation runs each serverless workload in a lightweight virtual machine with a minimal device model; AWS Lambda, for example, uses the open-source Firecracker microVM monitor. Compared with the traditional virtual machines common in legacy deployments, microVMs start faster and carry less memory overhead, and compared with containers that share a host kernel, they provide stronger isolation between tenants. This combination minimizes attack surfaces and enables rapid scaling in modern cloud environments.

Stateful vs Stateless Computing

Legacy systems primarily rely on stateful computing, in which applications maintain persistent state across sessions, leading to complex infrastructure and higher maintenance overhead. Serverless computing embraces stateless architectures: each function processes requests independently without retaining session information in memory, and any state that must persist is kept in external services such as databases or caches. This enhances flexibility and reduces operational costs.
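In practice, a stateless function keeps nothing in memory that a later request depends on. Anything that must persist is read from and written to an external store. In the sketch, an in-memory dictionary stands in for a managed database or cache. The `KeyValueStore` class and `add_to_cart` handler are hypothetical. Two "instances" of the function share only the store, so either one can serve the next request in a session.

```python
from functools import partial
from typing import Any


class KeyValueStore:
    """Hypothetical stand-in for an external store such as a managed key-value database."""

    def __init__(self) -> None:
        self._data: dict[str, Any] = {}

    def get(self, key: str, default: Any = None) -> Any:
        return self._data.get(key, default)

    def put(self, key: str, value: Any) -> None:
        self._data[key] = value


def add_to_cart(event: dict[str, Any], store: KeyValueStore) -> list[str]:
    """Stateless handler: read the session's cart from the store, update it, write it back."""
    key = f"cart:{event['session_id']}"
    cart = list(store.get(key, []))
    cart.append(event["item"])
    store.put(key, cart)
    return cart


# Two function instances share nothing except the external store.
shared_store = KeyValueStore()
instance_a = partial(add_to_cart, store=shared_store)
instance_b = partial(add_to_cart, store=shared_store)

instance_a({"session_id": "s1", "item": "book"})
print(instance_b({"session_id": "s1", "item": "pen"}))
```

With truly concurrent requests, this read-modify-write sequence could lose updates; a production store would need conditional writes or transactions to prevent that.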

Event-Driven Architecture

Legacy systems often rely on monolithic architectures with tightly coupled components, limiting scalability and real-time responsiveness, whereas serverless computing leverages event-driven architecture to dynamically allocate resources in response to events, enhancing scalability and reducing operational overhead. Event-driven serverless platforms like AWS Lambda or Azure Functions enable rapid processing of asynchronous events, making them ideal for modern applications requiring agility and seamless integration.
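The core idea of event-driven architecture, in which producers emit events without knowing which functions react to them, can be shown with a tiny in-process publish/subscribe bus. On a cloud platform, the bus would be a managed service and delivery would typically be asynchronous. The `EventBus` class, event names, and handlers here are hypothetical, and dispatch is synchronous for simplicity.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class EventBus:
    """Minimal publish/subscribe dispatcher: producers and consumers share only event names."""

    def __init__(self) -> None:
        self._subscribers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict[str, Any]) -> int:
        """Deliver the event to every subscriber; return how many handlers ran."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


log: list[str] = []
bus = EventBus()
# Two independent functions react to the same event; the producer knows about neither.
bus.subscribe("order.placed", lambda e: log.append(f"reserve stock for {e['order_id']}"))
bus.subscribe("order.placed", lambda e: log.append(f"email receipt for {e['order_id']}"))

bus.publish("order.placed", {"order_id": "A-1"})
print(log)
```

Adding a new reaction to `order.placed` means subscribing another handler, with no change to the code that publishes the event.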

Technical Debt Remediation

Legacy systems often accumulate significant technical debt because of outdated codebases and tightly coupled architectures, making remediation costly and complex. Serverless computing can help reduce technical debt by enabling modular, event-driven functions that simplify maintenance and accelerate iterative updates.

Legacy Systems vs Serverless Computing Infographic

industrydif.com
