Information Technology relies on classical computing systems that process, store, and transfer data as binary bits, supporting widespread applications in software development, networking, and data management. Quantum Computing uses quantum bits, or qubits, and exploits quantum phenomena such as superposition, entanglement, and interference to run algorithms that, for certain problems, need far fewer steps than the best known classical methods. Integrating Quantum Computing into Information Technology could make problems tractable that are beyond the practical reach of classical computers, particularly in cryptography, optimization, and simulation tasks.

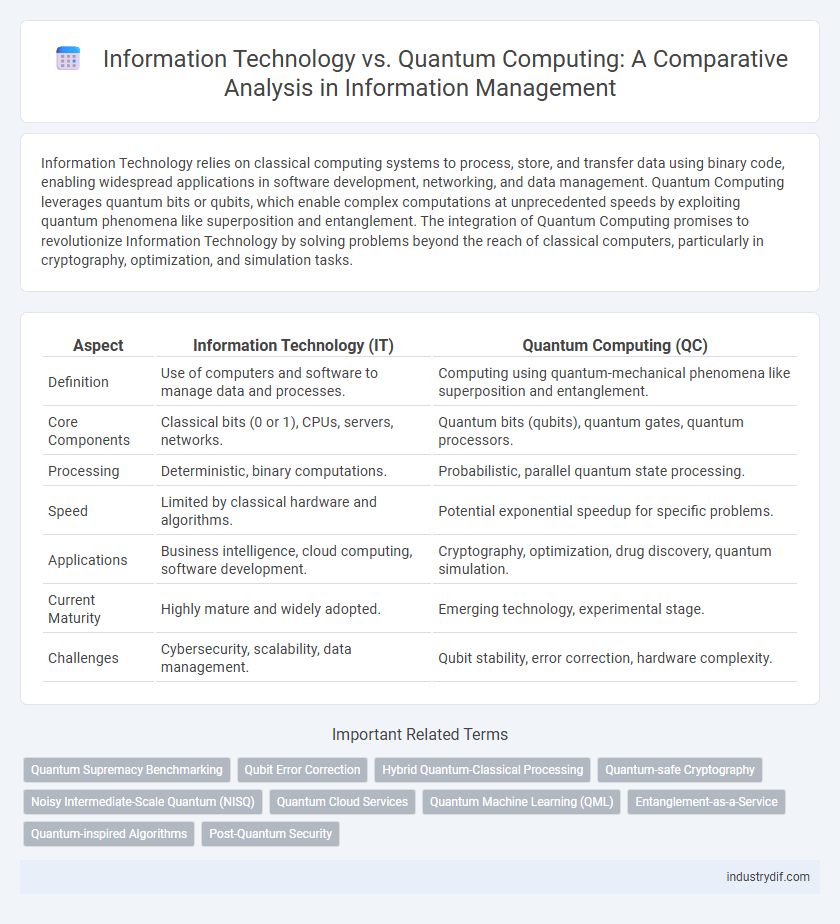

Table of Comparison

| Aspect | Information Technology (IT) | Quantum Computing (QC) |
|---|---|---|
| Definition | Use of computers and software to manage data and processes. | Computing that uses quantum-mechanical phenomena such as superposition and entanglement. |
| Core Components | Classical bits (0 or 1), CPUs, servers, networks. | Quantum bits (qubits), quantum gates, quantum processors. |
| Processing | Deterministic, binary computations. | Gate operations on quantum states; results are read out probabilistically by measurement. |
| Speed | Limited by classical hardware and algorithms. | Potential speedups for specific problems: large for factoring (Shor's algorithm), quadratic for unstructured search (Grover's algorithm). |
| Applications | Business intelligence, cloud computing, software development. | Cryptography, optimization, drug discovery, quantum simulation. |
| Current Maturity | Highly mature and widely adopted. | Emerging technology, experimental stage. |
| Challenges | Cybersecurity, scalability, data management. | Qubit stability, error correction, hardware complexity. |

Introduction to Information Technology and Quantum Computing

Information Technology encompasses the use of computer systems, software, and networks to store, retrieve, transmit, and manipulate data, forming the backbone of modern digital communication and business operations. Quantum Computing applies principles of quantum mechanics, such as superposition and entanglement, to perform certain computations that are impractical for classical computers, with potential breakthroughs in cryptography, optimization, and simulation. Understanding how classical bits and quantum bits (qubits) differ in the way they represent and process data is essential for advancing next-generation technologies.

Core Concepts: Classical vs Quantum Computing

Information Technology primarily relies on classical computing, which uses the binary bit, holding either 0 or 1, as its fundamental unit of data. Quantum computing instead uses quantum bits, or qubits, whose state can be a superposition α|0⟩ + β|1⟩ of both basis values; measuring the qubit returns 0 with probability |α|² and 1 with probability |β|². Superposition alone is not parallel computation: quantum algorithms combine it with entanglement (correlations between qubits that have no classical counterpart) and interference (amplitudes that reinforce or cancel) so that correct answers become likely when the result is measured.
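
To make superposition, measurement, and interference concrete, here is a minimal pure-Python sketch of a single qubit stored as a pair of complex amplitudes. The helper names `hadamard` and `measurement_probs` are hypothetical, chosen for illustration; real work would use a quantum SDK or a linear-algebra library.

```python
import math

# A single-qubit state: complex amplitudes (alpha, beta) for |0> and |1>.
State = tuple[complex, complex]

def hadamard(state: State) -> State:
    """Apply the Hadamard gate H = (1/sqrt(2)) * [[1, 1], [1, -1]]."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def measurement_probs(state: State) -> tuple[float, float]:
    """Born rule: P(0) = |alpha|^2, P(1) = |beta|^2."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)

zero: State = (1 + 0j, 0j)
plus = hadamard(zero)   # equal superposition of |0> and |1>
back = hadamard(plus)   # the |1> amplitudes cancel: interference

print(measurement_probs(plus))  # approximately (0.5, 0.5)
print(measurement_probs(back))  # approximately (1.0, 0.0)
```

Applying H once gives equal odds of reading 0 or 1; applying it twice returns |0⟩ with certainty because the two contributions to the |1⟩ amplitude cancel. That cancellation, not evaluating every input at once, is what quantum algorithms exploit.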

Information Processing Mechanisms

Information Technology relies on classical computing architectures in which data is encoded as binary bits and processed by deterministic logic on silicon-based processors. Quantum Computing manipulates qubits with quantum gates, which act on the whole superposition state and can entangle qubits with one another. For specific problems, such as factoring large integers or simulating quantum systems, these mechanisms enable algorithms that are dramatically faster than the best known classical approaches; for most everyday workloads, classical processors remain the better fit.
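
The sketch below, again assuming a hand-rolled pure-Python state vector rather than any quantum library, shows how two gates, a Hadamard followed by a CNOT, turn |00⟩ into an entangled Bell state. The functions `h_on_first`, `cnot`, and `is_product_state` are illustrative names, not an established API.

```python
import math

# Two-qubit state: amplitudes for |00>, |01>, |10>, |11> (index = 2*q0 + q1).

def h_on_first(state: list[complex]) -> list[complex]:
    """Apply a Hadamard gate to qubit 0 (the high-order bit of the index)."""
    s = 1 / math.sqrt(2)
    out = [0j] * 4
    for q1 in (0, 1):
        a0 = state[0 * 2 + q1]  # amplitude with q0 = 0
        a1 = state[1 * 2 + q1]  # amplitude with q0 = 1
        out[0 * 2 + q1] = s * (a0 + a1)
        out[1 * 2 + q1] = s * (a0 - a1)
    return out

def cnot(state: list[complex]) -> list[complex]:
    """Flip qubit 1 when qubit 0 is 1: swaps the |10> and |11> amplitudes."""
    return [state[0], state[1], state[3], state[2]]

def is_product_state(state: list[complex], tol: float = 1e-12) -> bool:
    """A two-qubit pure state factorizes iff a00*a11 == a01*a10."""
    return abs(state[0] * state[3] - state[1] * state[2]) < tol

start = [1 + 0j, 0j, 0j, 0j]     # |00>
bell = cnot(h_on_first(start))   # (|00> + |11>) / sqrt(2)
probs = [abs(a) ** 2 for a in bell]
print([round(p, 3) for p in probs])  # [0.5, 0.0, 0.0, 0.5]
print(is_product_state(bell))        # False: the qubits are entangled
```

The check in `is_product_state` relies on the fact that a two-qubit pure state splits into independent single-qubit states exactly when a00·a11 = a01·a10. The state after the Hadamard alone passes it; the Bell state fails it, which is what entanglement means here.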

Key Differences in Data Storage

Information Technology relies on classical storage media such as magnetic disks and solid-state drives, where each bit holds a definite 0 or 1. A register of n qubits is described by 2^n complex amplitudes, so simulating it on a classical computer needs exponentially growing memory, yet measuring the register yields at most n classical bits (Holevo's bound). Qubits are therefore not a denser replacement for conventional storage; they serve as fragile working memory for computation, and entanglement creates correlations between qubits rather than acting as a data-retrieval mechanism.
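
A quick arithmetic sketch of the gap between what n qubits take to describe and what they return when read. It assumes a dense state vector stored as complex128 values (16 bytes per amplitude), a common but not universal choice in classical simulators.

```python
def amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes that describe an n-qubit state."""
    return 2 ** n_qubits

def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a dense state vector; 16 bytes assumes complex128 amplitudes."""
    return amplitudes(n_qubits) * bytes_per_amplitude

def readable_bits(n_qubits: int) -> int:
    """Holevo's bound: measuring n qubits (without pre-shared entanglement)
    yields at most n classical bits of information."""
    return n_qubits

for n in (30, 40, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {amplitudes(n):,} amplitudes, "
          f"{gib:,.1f} GiB to simulate, at most {readable_bits(n)} bits read out")
```

Thirty qubits already need 16 GiB to simulate this way, yet reading them out produces at most 30 bits.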

Security Implications in IT and Quantum Computing

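Information Technology relies heavily on public-key encryption such as RSA and elliptic-curve cryptography, whose security rests on problems (integer factoring and discrete logarithms) that Shor's algorithm could solve efficiently on a sufficiently large, fault-tolerant quantum computer. Quantum technology also introduces new security tools such as quantum key distribution (QKD), which lets two parties establish a shared key whose secrecy is guaranteed in principle by quantum mechanics rather than by computational hardness, because eavesdropping disturbs the transmitted quantum states and can be detected. Migrating to quantum-resistant algorithms is therefore critical for protecting data confidentiality and integrity in future IT infrastructure, especially for data that attackers could record today and decrypt later.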
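
The following toy sketch shows why factoring matters for RSA, using the well-known textbook primes 61 and 53 (real keys use primes hundreds of digits long). Trial division stands in for the factoring step; Shor's algorithm is what would make that step efficient at real key sizes, and it is not implemented here.

```python
import math

# Toy RSA with tiny textbook primes. Real keys use primes of 1024+ bits each.
p, q = 61, 53
n = p * q                 # public modulus: 3233
e = 17                    # public exponent
phi = (p - 1) * (q - 1)   # 3120, computable only by someone who knows p and q
d = pow(e, -1, phi)       # private exponent 2753 (modular inverse, Python 3.8+)

message = 65
ciphertext = pow(message, e, n)           # encrypt with the public key
assert pow(ciphertext, d, n) == message   # legitimate decryption

def factor(modulus: int) -> tuple[int, int]:
    """Trial division: instant for 3233, hopeless for a 2048-bit modulus.
    A large fault-tolerant quantum computer running Shor's algorithm
    would make this step efficient."""
    for f in range(2, math.isqrt(modulus) + 1):
        if modulus % f == 0:
            return f, modulus // f
    raise ValueError("modulus is prime")

# An attacker who factors n rebuilds the private key from public data alone.
fp, fq = factor(n)
d_recovered = pow(e, -1, (fp - 1) * (fq - 1))
print(d_recovered, pow(ciphertext, d_recovered, n))  # 2753 65
```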

Computational Speed and Efficiency

Classical computers are not strictly sequential (multicore CPUs and GPUs run many operations in parallel), but for some problem classes the best known classical algorithms still scale poorly. Quantum Computing uses qubits, superposition, and interference to offer speedups on specific tasks: Shor's algorithm factors integers superpolynomially faster than the best known classical method, while Grover's algorithm gives a quadratic speedup for unstructured search. These speedups could improve efficiency in cryptanalysis, optimization, and large-scale simulation of physical systems, but they do not apply to computation in general.
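
To put the Grover speedup in numbers, the sketch below compares the expected classical lookups for one marked item among N with the standard Grover iteration count, floor(π/4·√N), and the resulting success probability sin²((2k+1)θ) with sin θ = 1/√N. It assumes exactly one marked item and an ideal, noise-free device.

```python
import math

def classical_expected_queries(n_items: int) -> float:
    """Unstructured search for one marked item: about N/2 lookups on average."""
    return n_items / 2

def grover_iterations(n_items: int) -> int:
    """Near-optimal Grover iteration count for one marked item."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))

def grover_success_probability(n_items: int, iterations: int) -> float:
    """Probability of measuring the marked item: sin^2((2k + 1) * theta)."""
    theta = math.asin(1 / math.sqrt(n_items))
    return math.sin((2 * iterations + 1) * theta) ** 2

for n in (10**3, 10**6, 10**9):
    k = grover_iterations(n)
    print(f"N={n:>13,}: classical ~{classical_expected_queries(n):>13,.0f}, "
          f"Grover {k:>6,} (success {grover_success_probability(n, k):.4f})")
```

The gap is quadratic, not exponential: a million items need 785 Grover iterations versus roughly 500,000 classical lookups on average.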

Real-World Applications: IT vs Quantum Computing

Information Technology underpins everyday digital services, including cloud computing, cybersecurity, and data management, driving business operations, communication, and entertainment. Quantum Computing, still in development, may enable applications such as drug discovery, hard optimization problems, and new cryptographic techniques by exploiting superposition, entanglement, and interference. Conventional IT will remain essential for most industries, while quantum computing targets specialized problems that exceed the practical capabilities of classical systems.

Industry Adoption and Current Use Cases

Information Technology (IT) dominates industry adoption, with widespread use in cloud computing, cybersecurity, data analytics, and enterprise software, enabling digital transformation across sectors like finance, healthcare, and retail. Quantum computing remains in experimental and early pilot phases, with industries such as pharmaceuticals, logistics, and materials science exploring quantum algorithms for complex optimization, simulation, and cryptographic challenges. Current quantum use cases mostly involve research institutions and specialized companies running experiments on quantum processors for drug discovery and supply chain optimization, while organizations in parallel prepare to migrate to quantum-resistant encryption.

Challenges and Limitations

Information technology faces challenges such as data security vulnerabilities, scalability issues, and energy consumption. Quantum computing faces limitations including qubit decoherence, high gate error rates, and, for platforms such as superconducting qubits, the need to cool processors to temperatures near absolute zero. Both fields must overcome hardware constraints and algorithmic complexity to achieve widespread practical use.

Future Perspectives in Information Technology and Quantum Computing

Quantum computing could transform parts of information technology by solving certain problems, such as simulating molecules and materials, that are beyond the practical reach of classical computers. Future perspectives highlight integrating quantum algorithms with AI to enhance cybersecurity, optimize logistics, and accelerate scientific research. Continued advances in quantum hardware and hybrid classical-quantum systems may reshape data analytics, cloud computing, and communication networks.

Related Important Terms

Quantum Supremacy Benchmarking

Quantum supremacy (often called quantum advantage) benchmarking tests whether a quantum computer can complete a well-defined task, typically sampling from the output distribution of random circuits, that the best classical supercomputers could not finish in a practical amount of time. Such benchmarks, often scored with cross-entropy benchmarking (XEB) fidelity, mark a milestone in computational capability, although the benchmark tasks themselves are not chosen for practical usefulness.
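
As a rough illustration of how such benchmarks are scored, the sketch below computes the linear cross-entropy benchmarking fidelity, F = 2^n * mean(P_ideal(x_i)) - 1, from a list of ideal output probabilities and observed samples. The 2-qubit distribution and sample lists are made up for illustration; in real experiments the ideal probabilities come from expensive classical simulation of the circuit.

```python
def linear_xeb(ideal_probs: list[float], samples: list[int]) -> float:
    """Linear XEB fidelity: 2^n * (mean ideal probability of observed outcomes) - 1."""
    if not samples:
        raise ValueError("need at least one sample")
    dim = len(ideal_probs)  # 2^n for an n-qubit circuit
    mean_p = sum(ideal_probs[x] for x in samples) / len(samples)
    return dim * mean_p - 1

# Hypothetical ideal output distribution over |00>, |01>, |10>, |11>.
ideal = [0.4, 0.3, 0.2, 0.1]

# A device emitting uniform noise: each outcome equally often.
noise_samples = [0, 1, 2, 3]
# A device whose sample frequencies match the ideal distribution.
good_samples = [0, 0, 0, 0, 1, 1, 1, 2, 2, 3]

print(linear_xeb(ideal, noise_samples))  # close to 0
print(linear_xeb(ideal, good_samples))   # close to 0.2 = 4 * sum(p^2) - 1
```

Uniform noise scores near 0. For large random circuits, whose ideal outputs roughly follow a Porter-Thomas distribution, a perfect sampler scores near 1, so the measured value indicates how much of the device's output reflects the intended circuit.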

Qubit Error Correction

Qubit error correction detects and corrects errors caused by decoherence and noise, which are far more frequent in quantum hardware than in classical information technology. Because qubits cannot be copied (the no-cloning theorem) and measuring them directly destroys superpositions, quantum codes spread one logical qubit across many physical qubits and measure only parity checks (stabilizers). Stabilizer codes such as the surface code enable fault-tolerant operation once physical error rates fall below a threshold, bridging the gap between noisy quantum hardware and reliable quantum information processing.
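
A classical analogue helps show the principle. The sketch below simulates the three-bit repetition code with majority-vote decoding under independent bit flips with probability p. It is a deliberate simplification: a real quantum bit-flip code measures parities instead of reading the qubits, and it does not handle phase errors, which codes such as the surface code also correct.

```python
import random

def encode(bit: int) -> list[int]:
    """Repetition encoding, shown classically: 0 -> 000, 1 -> 111."""
    return [bit] * 3

def apply_noise(codeword: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in codeword]

def decode(codeword: list[int]) -> int:
    """Majority vote corrects any single flip."""
    return 1 if sum(codeword) >= 2 else 0

def logical_error_rate(p: float) -> float:
    """Decoding fails when 2 or 3 bits flip: 3p^2(1-p) + p^3 = 3p^2 - 2p^3."""
    return 3 * p**2 - 2 * p**3

rng = random.Random(0)
p = 0.05
trials = 100_000
failures = sum(decode(apply_noise(encode(0), p, rng)) != 0 for _ in range(trials))
print(f"physical {p}, logical exact {logical_error_rate(p):.5f}, "
      f"simulated {failures / trials:.5f}")
```

For p = 0.05 the encoded error rate drops to about 0.00725. Redundancy only helps while p is small enough, which is the simplest form of the threshold idea behind fault tolerance.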

Hybrid Quantum-Classical Processing

Hybrid quantum-classical processing pairs classical computers with quantum processors to tackle problems in optimization, cryptography, and machine learning. The classical side handles data management, parameter optimization, and error mitigation or decoding, while the quantum processor evaluates circuits that are hard to simulate classically. Variational algorithms such as the variational quantum eigensolver (VQE) and the quantum approximate optimization algorithm (QAOA) follow this pattern, which is widely used on current hardware and drives innovation in hybrid information technology architectures.
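
The loop below is a minimal sketch of the hybrid pattern, assuming a one-parameter circuit RY(θ)|0⟩ whose measured ⟨Z⟩ is computed analytically (it equals cos θ) instead of being run on hardware. The classical side estimates the gradient with the parameter-shift rule, which needs two extra circuit evaluations per parameter, and updates θ by gradient descent. The learning rate, step count, and starting point are arbitrary choices for illustration.

```python
import math

def expectation_z(theta: float) -> float:
    """'Quantum' step, simulated: <Z> for RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>."""
    a0, a1 = math.cos(theta / 2), math.sin(theta / 2)
    return a0**2 - a1**2  # equals cos(theta)

def parameter_shift_grad(theta: float) -> float:
    """Exact gradient of <Z> from two shifted circuit evaluations."""
    shift = math.pi / 2
    return (expectation_z(theta + shift) - expectation_z(theta - shift)) / 2

def optimize(theta: float = 0.1, lr: float = 0.4, steps: int = 100) -> tuple[float, float]:
    """Classical step: plain gradient descent that minimizes the measured <Z>."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(theta)
    return theta, expectation_z(theta)

theta, energy = optimize()
print(f"theta = {theta:.4f} (pi = {math.pi:.4f}), <Z> = {energy:.4f}")
```

The optimizer drives θ toward π, where ⟨Z⟩ reaches its minimum of -1. On real devices each `expectation_z` call would be estimated from many measurement shots, so the gradient is noisy and the classical optimizer must tolerate that.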

Quantum-safe Cryptography

Quantum-safe cryptography uses algorithms believed to resist attacks by both classical and quantum computers, protecting data as quantum computing advances. Integrating these post-quantum protocols into existing information technology infrastructure reduces the risk posed by future quantum decryption capabilities.
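
A back-of-the-envelope sketch of how Grover's algorithm affects symmetric keys, assuming an ideal fault-tolerant machine and ignoring that Grover search parallelizes poorly. Under those assumptions it roughly halves the effective key length in bits, which is why using 256-bit symmetric keys is a common rule of thumb, whereas RSA and elliptic-curve schemes must be replaced outright because Shor's algorithm breaks them.

```python
import math

def classical_log2_trials(key_bits: int) -> float:
    """Exhaustive search tries on the order of 2^k keys (returned as log2)."""
    return float(key_bits)

def grover_log2_iterations(key_bits: int) -> float:
    """Grover needs about (pi/4) * sqrt(2^k) oracle calls to find one key (log2)."""
    return math.log2(math.pi / 4) + key_bits / 2

for k in (128, 256):
    print(f"{k}-bit key: classical ~2^{classical_log2_trials(k):.0f}, "
          f"Grover ~2^{grover_log2_iterations(k):.1f} iterations")
```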

Noisy Intermediate-Scale Quantum (NISQ)

Noisy Intermediate-Scale Quantum (NISQ) devices represent a transitional phase in quantum computing: their qubits are susceptible to noise and they lack full error correction, yet they may offer advantages over classical information technology on specific tasks such as optimization and simulation. While classical information technology relies on stable transistors and binary logic, NISQ systems exploit superposition and entanglement but still face scalability limits and depend on error mitigation to approach practical quantum advantage.
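
A crude way to see why NISQ circuits must stay shallow: if every gate fails independently with probability ε, a circuit of g gates runs without error with probability about (1 - ε)^g. This model is an assumption that ignores idle decoherence, crosstalk, and readout error, and the error rates in the loop are hypothetical.

```python
import math

def estimated_circuit_fidelity(gate_error: float, n_gates: int) -> float:
    """Crude model: each gate succeeds independently with probability 1 - gate_error."""
    return (1 - gate_error) ** n_gates

def max_gates(gate_error: float, target_fidelity: float) -> int:
    """Largest gate count whose estimated fidelity stays at or above the target."""
    return math.floor(math.log(target_fidelity) / math.log1p(-gate_error))

# Hypothetical per-gate error rates, for illustration only.
for err in (1e-2, 1e-3, 1e-4):
    print(f"gate error {err:g}: ~{max_gates(err, 0.5):,} gates "
          f"before estimated fidelity drops below 0.5")
```

At a 1% error rate, fewer than 70 gates fit before the estimate falls below one half, which is why NISQ algorithms favor short circuits and why full error correction is needed for deep ones.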

Quantum Cloud Services

Quantum cloud services give users remote access to quantum processors and simulators over the internet, so organizations can experiment with quantum algorithms for complex problem-solving and advanced data analysis without owning the hardware. Jobs are typically prepared in classical software, executed on the quantum device, and returned as measurement results, making these services a practical entry point for exploring superposition- and entanglement-based computation alongside classical IT infrastructure.

Quantum Machine Learning (QML)

Quantum Machine Learning (QML) applies quantum computing principles to learning tasks such as pattern recognition and optimization. Some QML algorithms promise exponential speedups over classical Information Technology systems on paper, but these results typically assume that classical data can be loaded into quantum states efficiently, and several have been matched by quantum-inspired classical algorithms. Whether integrating quantum algorithms with machine learning models will improve efficiency and accuracy in practice remains an open research question.
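
Many QML proposals start by amplitude-encoding a classical vector: pad it to length 2^n and normalize it so its entries can serve as the amplitudes of n qubits. The sketch below covers only this classical preprocessing step; `amplitude_encode` is a hypothetical helper, and actually preparing such a state on hardware can, in general, take a number of gates that grows with the vector length, which is one reason claimed speedups need care.

```python
import math

def amplitude_encode(values: list[float]) -> tuple[int, list[float]]:
    """Pad to a power of two and L2-normalize so the entries can act as
    amplitudes of ceil(log2(N)) qubits. Returns (n_qubits, amplitudes)."""
    if not values:
        raise ValueError("need at least one value")
    n_qubits = max(1, math.ceil(math.log2(len(values))))
    padded = list(values) + [0.0] * (2 ** n_qubits - len(values))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0:
        raise ValueError("cannot encode the zero vector")
    return n_qubits, [v / norm for v in padded]

n, amps = amplitude_encode([3.0, 4.0, 0.0, 0.0, 1.0])
print(n, [round(a, 3) for a in amps])  # 5 values need 3 qubits (8 amplitudes)
```

Five numbers fit into three qubits, but the squared amplitudes must sum to 1, and a measurement reveals only one index at a time, so reading the data back out is as costly as it is for any quantum state.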

Entanglement-as-a-Service

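Entanglement-as-a-Service (EaaS) refers to distributing entangled qubit pairs between network nodes on demand, a resource for quantum networks, entanglement-based cryptography, and distributed computing that goes beyond classical Information Technology capabilities. Entangled qubits show correlated measurement outcomes regardless of the distance between them, but these correlations cannot carry information faster than light; classical communication is still needed to make use of them.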
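
The sketch below samples the measurement statistics that quantum mechanics predicts for a Bell pair (|00⟩ + |11⟩)/√2 when both qubits are measured in the same basis. It is a classical sampler of those predicted outcomes, not a physical simulation, and the seed and shot count are arbitrary.

```python
import random

# Predicted outcome probabilities for measuring both halves of
# (|00> + |11>)/sqrt(2) in the computational basis.
BELL_PROBS = {(0, 0): 0.5, (1, 1): 0.5}

def sample_bell_pairs(shots: int, seed: int = 7) -> list[tuple[int, int]]:
    """Draw (Alice, Bob) outcome pairs from the predicted distribution."""
    rng = random.Random(seed)
    outcomes = list(BELL_PROBS)
    weights = list(BELL_PROBS.values())
    return rng.choices(outcomes, weights=weights, k=shots)

pairs = sample_bell_pairs(10_000)
agree = sum(a == b for a, b in pairs) / len(pairs)
alice_ones = sum(a for a, _ in pairs) / len(pairs)
print(f"agreement {agree:.3f}, Alice's fraction of 1s {alice_ones:.3f}")
```

The outcomes always agree, yet each party alone sees a fair coin, so no message travels through the correlation. A shared classical random bit would reproduce these same-basis statistics too; showing genuinely quantum correlations requires measuring in several bases, as in a Bell test.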

Quantum-inspired Algorithms

Quantum-inspired algorithms borrow ideas from quantum computing to improve classical information technology processes, such as optimization, machine learning, and data analysis, without requiring quantum hardware. Rather than simulating superposition and entanglement in general (which would take exponential resources), they adapt specific techniques, such as sampling schemes that mimic quantum measurement or tensor-network representations, so they run efficiently on classical systems.
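
One concrete idea borrowed from quantum computing is length-squared sampling: picking index i with probability x_i²/‖x‖², which is exactly the distribution produced by measuring an amplitude-encoded vector. Ewin Tang's dequantized recommendation algorithm builds on this kind of sampling access. The helpers below are a hypothetical minimal sketch of the primitive only, not of any full algorithm.

```python
import random

def length_squared_probs(x: list[float]) -> list[float]:
    """P(i) = x_i^2 / ||x||^2, the distribution obtained by measuring
    an amplitude-encoded copy of x in the computational basis."""
    total = sum(v * v for v in x)
    if total == 0:
        raise ValueError("zero vector")
    return [v * v / total for v in x]

def sample_indices(x: list[float], k: int, seed: int = 0) -> list[int]:
    """Draw k indices by length-squared sampling, classically."""
    rng = random.Random(seed)
    return rng.choices(range(len(x)), weights=length_squared_probs(x), k=k)

x = [3.0, 0.0, 4.0]
print(length_squared_probs(x))      # [0.36, 0.0, 0.64]
draws = sample_indices(x, 10_000)
print(draws.count(2) / len(draws))  # close to 0.64
```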

Post-Quantum Security

Information Technology relies on classical public-key algorithms that are vulnerable to future quantum attacks, which is driving the development of Post-Quantum Security protocols designed to withstand them. Post-quantum cryptography uses lattice-based, hash-based, and code-based schemes that run on conventional hardware and rest on mathematical problems for which no efficient quantum algorithm is known.
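
Hash-based signatures show how post-quantum security can rest on nothing more than a hash function. The sketch below is a Lamport one-time signature over SHA-256, the basic construction behind standardized hash-based schemes such as LMS, XMSS, and SPHINCS+. It is illustrative only: each key pair must sign exactly one message, and production systems should use a vetted library rather than this code.

```python
import hashlib
import secrets

Pair = tuple[bytes, bytes]

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def keygen() -> tuple[list[Pair], list[Pair]]:
    """One-time key: 256 pairs of random 32-byte secrets; the public key holds their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(_h(a), _h(b)) for a, b in sk]
    return sk, pk

def _digest_bits(message: bytes) -> list[int]:
    """The 256 bits of SHA-256(message), most significant bit first."""
    return [(byte >> (7 - i)) & 1 for byte in _h(message) for i in range(8)]

def sign(sk: list[Pair], message: bytes) -> list[bytes]:
    """Reveal one secret per digest bit. Never sign a second message with the same key."""
    return [pair[bit] for pair, bit in zip(sk, _digest_bits(message))]

def verify(pk: list[Pair], message: bytes, signature: list[bytes]) -> bool:
    """Hash each revealed secret and compare it with the public value for that bit."""
    bits = _digest_bits(message)
    return len(signature) == len(bits) and all(
        _h(s) == pair[bit] for s, pair, bit in zip(signature, pk, bits)
    )

sk, pk = keygen()
sig = sign(sk, b"quarterly report")
print(verify(pk, b"quarterly report", sig))  # True
print(verify(pk, b"tampered report", sig))   # False
```

Forging a signature would require finding SHA-256 preimages, where quantum computers are only known to gain Grover's square-root speedup.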

Information Technology vs Quantum Computing Infographic

industrydif.com
