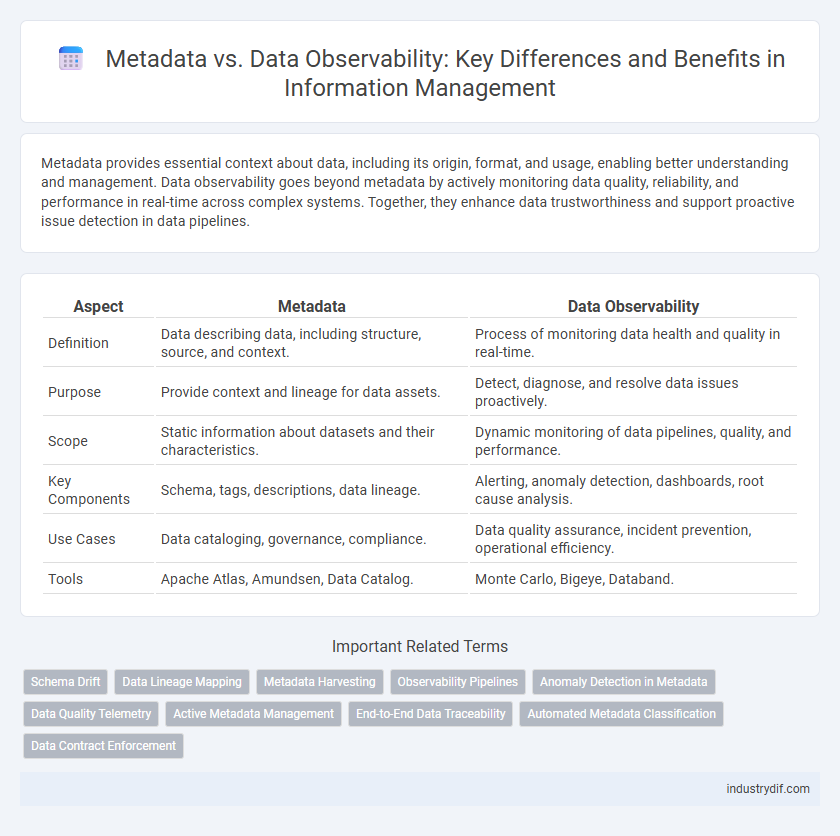

Metadata provides essential context about data, including its origin, format, and usage, enabling better understanding and management. Data observability goes beyond metadata by actively monitoring data quality, reliability, and performance in real time across complex systems. Together, they enhance data trustworthiness and support proactive issue detection in data pipelines.

Table of Comparison

| Aspect | Metadata | Data Observability |
|---|---|---|
| Definition | Data describing data, including structure, source, and context. | Process of monitoring data health and quality in real time. |
| Purpose | Provide context and lineage for data assets. | Detect, diagnose, and resolve data issues proactively. |
| Scope | Static information about datasets and their characteristics. | Dynamic monitoring of data pipelines, quality, and performance. |
| Key Components | Schema, tags, descriptions, data lineage. | Alerting, anomaly detection, dashboards, root cause analysis. |
| Use Cases | Data cataloging, governance, compliance. | Data quality assurance, incident prevention, operational efficiency. |
| Tools | Apache Atlas, Amundsen, Data Catalog. | Monte Carlo, Bigeye, Databand. |

Understanding Metadata in Modern Data Systems

Metadata provides critical context by describing the characteristics, origin, and structure of data within modern data systems, enabling improved data discovery and governance. It facilitates efficient data management by capturing information about data lineage, schema, and usage patterns. Understanding metadata also supports data observability by offering insights into data health and quality without accessing the raw data directly; for example, a table's recorded row count and last-modified timestamp can reveal that it is empty or stale without scanning a single row.
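To make the last point concrete, here is a minimal Python sketch of a metadata record and a health check that reads only that record. The `DatasetMetadata` class, the `health_hints` function, the source URI, and the 24-hour staleness limit are hypothetical illustrations, not part of any particular catalog's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class DatasetMetadata:
    """Descriptive metadata about a dataset; it holds no data rows."""
    name: str
    source: str                     # where the data originates
    schema: dict[str, str]          # column name -> type name
    row_count: int                  # as recorded by the producer or warehouse
    last_modified: datetime         # when the data was last written
    upstream: list[str] = field(default_factory=list)  # lineage: parent datasets


def health_hints(meta: DatasetMetadata, now: datetime, max_age: timedelta) -> list[str]:
    """Derive simple health hints from metadata alone, without reading raw data."""
    hints = []
    age = now - meta.last_modified
    if age > max_age:
        hints.append(f"{meta.name} is stale: last modified {age} ago")
    if meta.row_count == 0:
        hints.append(f"{meta.name} is empty")
    return hints


# Example usage with hypothetical values
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
orders = DatasetMetadata(
    name="orders",
    source="postgres://shop/orders",  # hypothetical source URI
    schema={"order_id": "int", "amount": "decimal"},
    row_count=0,
    last_modified=datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc),
    upstream=["raw_orders"],
)
print(health_hints(orders, now, max_age=timedelta(hours=24)))
```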

Defining Data Observability: Key Concepts

Data observability refers to the ability to fully understand the health, quality, and state of data within a system by monitoring data pipelines, data quality metrics, and data anomalies in real time. Unlike metadata, which provides descriptive information about data, data observability focuses on proactively detecting issues such as data delays, schema changes, and completeness problems to ensure reliable data operations. Core components of data observability include freshness, distribution, volume, schema, and lineage, which together enable data teams to maintain trust in their data and optimize data workflows.
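Two of these metrics, volume and distribution, can be sketched as simple threshold checks over values an observability tool would collect on each pipeline run. This is a minimal Python sketch: the function names, the 50% volume tolerance, and the 5% null-rate limit are assumptions chosen for illustration; real tools may derive thresholds from historical data instead.

```python
from statistics import mean


def volume_anomaly(history: list[int], today: int, tolerance: float = 0.5) -> bool:
    """Flag today's row count if it differs from the trailing mean by more than
    `tolerance` (a fraction of the mean). `history` must be non-empty."""
    baseline = mean(history)
    return abs(today - baseline) > tolerance * baseline


def null_rate(values: list) -> float:
    """Share of values that are None; an empty batch counts as 0.0."""
    return sum(v is None for v in values) / len(values) if values else 0.0


def distribution_anomaly(values: list, max_null_rate: float = 0.05) -> bool:
    """Flag a column whose null rate exceeds `max_null_rate`."""
    return null_rate(values) > max_null_rate


# Example usage with hypothetical metrics from recent pipeline runs
row_counts = [1000, 980, 1020, 1010]
print(volume_anomaly(row_counts, today=400))           # large drop in volume
print(distribution_anomaly([10.0, None, 12.5, None]))  # half the values are null
```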

Metadata Management: Importance and Practices

Metadata management plays a critical role in ensuring data observability by providing the contextual information needed to interpret data accurately. Effective metadata practices include cataloging, classification, and lineage tracking, which help organizations understand data origin, transformations, and usage across systems. This structured approach to metadata improves data quality and governance and helps teams detect data anomalies early, supporting better decision-making.

Data Observability vs Metadata: Core Differences

Data observability focuses on monitoring, analyzing, and understanding the health and quality of data pipelines in real time, enabling proactive issue detection and resolution. Metadata refers to descriptive information about data, such as schema, lineage, and context, which supports data cataloging and governance. While metadata provides static insights and context, data observability offers dynamic, continuous visibility into data performance and anomalies across complex systems.

How Metadata Supports Data Quality Initiatives

Metadata provides critical context that enhances data quality by enabling accurate data lineage, comprehensive data cataloging, and effective data governance. It supports data quality initiatives by allowing organizations to track data origin, transformations, and usage patterns, ensuring transparency and accountability. Leveraging metadata facilitates automated data validation and monitoring, leading to improved accuracy, consistency, and reliability of data assets.
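As an illustration of metadata-driven validation, the sketch below checks incoming rows against column metadata (expected type and nullability). The `SCHEMA` mapping and `validate` function are hypothetical; a real system would load this metadata from a catalog or schema registry rather than hard-coding it.

```python
from typing import Any

# Hypothetical column metadata: name -> (expected Python type, nullable?)
SCHEMA: dict[str, tuple[type, bool]] = {
    "order_id": (int, False),
    "email": (str, True),
    "amount": (float, False),
}


def validate(row: dict[str, Any], schema: dict[str, tuple[type, bool]] = SCHEMA) -> list[str]:
    """Return a list of problems found when checking one row against column metadata."""
    errors = []
    for column, (expected_type, nullable) in schema.items():
        if column not in row:
            errors.append(f"missing column: {column}")
            continue
        value = row[column]
        if value is None:
            if not nullable:
                errors.append(f"null in non-nullable column: {column}")
        elif not isinstance(value, expected_type):
            errors.append(
                f"{column}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
    return errors


print(validate({"order_id": 1, "email": None, "amount": 9.5}))  # conforming row
print(validate({"order_id": "x", "amount": None}))              # several problems
```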

The Role of Data Observability in Reducing Data Downtime

Data observability enhances data reliability by providing continuous monitoring, anomaly detection, and root cause analysis, which shortens the time needed to detect and resolve incidents and thereby reduces data downtime: the periods during which data is missing, late, or inaccurate. Unlike metadata, which offers static descriptive information about data, data observability delivers dynamic insights into data health and behavior across pipelines. This proactive approach helps keep data consistently available and accurate.
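One simple way to quantify downtime, assuming each incident's downtime is its time to detection plus its time to resolution, is sketched below in Python. The `Incident` class and `data_downtime` function are hypothetical; splitting the total this way shows where monitoring helps most, since observability chiefly shortens detection time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    started: datetime   # when the data first became missing, late, or wrong
    detected: datetime  # when a person or monitor noticed the problem
    resolved: datetime  # when correct data was available again


def data_downtime(incidents: list[Incident]) -> timedelta:
    """Sum of (time to detect + time to resolve) over all incidents."""
    total = timedelta()
    for inc in incidents:
        time_to_detect = inc.detected - inc.started
        time_to_resolve = inc.resolved - inc.detected
        total += time_to_detect + time_to_resolve
    return total


# The same incident, noticed by a downstream user vs. by an automated monitor
start = datetime(2024, 3, 1, 0, 0)
noticed_late = Incident(start, start + timedelta(hours=10), start + timedelta(hours=12))
noticed_early = Incident(start, start + timedelta(minutes=30), start + timedelta(hours=2, minutes=30))
print(data_downtime([noticed_late]), data_downtime([noticed_early]))
```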

Metadata Catalogs vs Data Observability Platforms

Metadata catalogs organize and manage detailed information about data assets, enabling efficient data discovery, governance, and lineage tracking within an enterprise. Data observability platforms provide real-time monitoring, anomaly detection, and performance insights into the health and reliability of data pipelines and operational workflows. Combining metadata catalogs with data observability platforms enhances data quality management and accelerates troubleshooting by linking data context with system behavior.
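The sketch below illustrates that linkage: an alert raised by a monitoring check is enriched with the owner, description, and downstream assets recorded in a catalog. The `CatalogEntry` class, the `CATALOG` contents, and the alert fields are hypothetical stand-ins for whatever a given catalog and observability platform expose.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    owner: str
    description: str
    downstream: list[str] = field(default_factory=list)


# Hypothetical catalog contents keyed by dataset name
CATALOG = {
    "orders": CatalogEntry(
        owner="data-eng@example.com",
        description="Cleaned order events",
        downstream=["daily_revenue", "churn_model"],
    ),
}


def enrich_alert(alert: dict, catalog: dict[str, CatalogEntry]) -> dict:
    """Return a copy of `alert` with owner, description, and impacted assets attached."""
    entry = catalog.get(alert["dataset"])
    if entry is None:
        # Unknown dataset: keep the alert, but make the missing context visible
        return {**alert, "owner": None, "description": None, "impacted": []}
    return {
        **alert,
        "owner": entry.owner,
        "description": entry.description,
        "impacted": list(entry.downstream),
    }


alert = {"dataset": "orders", "check": "volume", "message": "row count dropped 60%"}
print(enrich_alert(alert, CATALOG))
```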

Benefits of Implementing Data Observability

Implementing data observability enhances data reliability by continuously monitoring data quality, lineage, and anomalies, reducing the risk of errors and downtime. It provides real-time insights into data health across complex pipelines, enabling faster issue detection and resolution, which improves operational efficiency. These benefits lead to increased trust in data for analytics, driving better business decisions and stronger regulatory compliance.

Integrating Metadata and Data Observability for Better Insights

Integrating metadata and data observability enhances data governance by providing comprehensive context and actionable insights into data quality, lineage, and usage patterns. Metadata catalogs enable efficient data discovery, while data observability tools monitor data health in real time; together, they enable proactive issue detection and resolution. This integration supports advanced analytics and improves decision-making by ensuring data accuracy and reliability across complex data ecosystems.

Future Trends: Metadata and Data Observability Evolution

Metadata and data observability are evolving with increased integration of AI and machine learning to automate anomaly detection and data quality assessments. Future trends emphasize enhanced metadata cataloging combined with real-time observability dashboards to provide comprehensive insights into data lineage, access patterns, and system health. This evolution supports more proactive data governance, enabling organizations to anticipate issues before they impact analytics and decision-making processes.

Related Important Terms

Schema Drift

Schema drift refers to unplanned changes in data structure, such as added, removed, renamed, or retyped columns. When these changes go undetected, they lead to inaccurate analytics and broken pipelines. Metadata management tracks schema versions but, on its own, lacks real-time anomaly detection. Data observability tools monitor for schema drift continuously, alongside data quality, lineage, and freshness metrics, so that structural changes are identified promptly and data stays reliable.
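A minimal way to detect schema drift is to compare two snapshots of the same table's schema. The `schema_diff` function below is a hypothetical Python sketch that assumes each snapshot is a mapping from column name to type name.

```python
def schema_diff(old: dict[str, str], new: dict[str, str]) -> dict[str, list]:
    """Compare two schema snapshots (column name -> type name)."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    retyped = sorted(
        (column, old[column], new[column])
        for column in set(old) & set(new)
        if old[column] != new[column]
    )
    return {"added": added, "removed": removed, "retyped": retyped}


yesterday = {"id": "int", "amount": "float", "email": "str"}
today = {"id": "int", "amount": "str", "created_at": "timestamp"}
drift = schema_diff(yesterday, today)
if any(drift.values()):
    print("schema drift detected:", drift)
```

A renamed column shows up here as one removal plus one addition; telling a rename apart from a drop requires extra metadata or heuristics.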

Data Lineage Mapping

Data lineage mapping is a critical component of data observability that tracks the flow and transformation of data across systems, providing metadata that strengthens data governance and quality. Unlike static descriptive metadata, lineage that is captured continuously from pipeline runs reflects current data dependencies and origins, enabling precise root cause analysis and helping ensure data reliability.
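Lineage is naturally modeled as a directed graph. The Python sketch below, using hypothetical dataset names, walks that graph breadth-first in both directions: downstream to estimate the impact of a broken dataset, and upstream to list candidate root causes.

```python
from collections import deque

# Hypothetical lineage edges: dataset -> datasets built from it
EDGES: dict[str, list[str]] = {
    "raw_orders": ["orders"],
    "raw_customers": ["customers"],
    "orders": ["daily_revenue"],
    "customers": ["daily_revenue", "churn_model"],
}


def reachable(start: str, edges: dict[str, list[str]]) -> set[str]:
    """Nodes reachable from `start` by breadth-first search
    (in an acyclic lineage graph, `start` itself is excluded)."""
    seen: set[str] = set()
    queue = deque([start])
    while queue:
        for nxt in edges.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def invert(edges: dict[str, list[str]]) -> dict[str, list[str]]:
    """Turn parent -> children edges into child -> parents edges."""
    parents: dict[str, list[str]] = {}
    for parent, children in edges.items():
        for child in children:
            parents.setdefault(child, []).append(parent)
    return parents


impact = reachable("customers", EDGES)                   # affected if customers is bad
root_causes = reachable("daily_revenue", invert(EDGES))  # where a revenue issue could start
print(sorted(impact), sorted(root_causes))
```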

Metadata Harvesting

Metadata harvesting involves systematically collecting and organizing metadata from diverse data sources to enhance data observability, enabling improved data lineage, quality monitoring, and governance. When harvesting runs frequently, it supports near-real-time tracking and auditing, which facilitates proactive issue detection and helps ensure data reliability across complex data ecosystems.
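As a small concrete example, SQLite exposes its own catalog through the `sqlite_master` table and the `PRAGMA table_info` statement, so a harvester can collect table and column metadata using only Python's standard library. The `harvest_sqlite` function and the shape of its output are hypothetical; a real harvester would cover many source types and write into a shared catalog.

```python
import sqlite3


def quote_ident(name: str) -> str:
    """Quote an SQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def harvest_sqlite(conn: sqlite3.Connection) -> dict[str, dict]:
    """Collect table names, declared column types, and row counts from SQLite."""
    catalog: dict[str, dict] = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' AND name NOT LIKE 'sqlite_%'"
    ).fetchall()
    for (table,) in tables:
        # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk)
        columns = {
            row[1]: row[2]
            for row in conn.execute(f"PRAGMA table_info({quote_ident(table)})")
        }
        row_count = conn.execute(f"SELECT COUNT(*) FROM {quote_ident(table)}").fetchone()[0]
        catalog[table] = {"columns": columns, "row_count": row_count}
    return catalog


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL NOT NULL)")
conn.executemany("INSERT INTO orders (amount) VALUES (?)", [(9.5,), (20.0,)])
print(harvest_sqlite(conn))
```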

Observability Pipelines

Observability pipelines enhance data observability by continuously collecting, processing, and routing telemetry data, enabling real-time monitoring and anomaly detection across complex systems. Unlike static metadata, these pipelines provide dynamic insights into data quality, lineage, and operational health, ensuring robust data reliability and governance.
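The collect, process, and route stages can be sketched as a small Python function. This is a minimal illustration with hypothetical names (`run_pipeline`, the transform functions, and in-memory lists standing in for real destinations such as an alerting system or an archive); dedicated observability pipeline tools typically add concerns such as buffering and delivery guarantees that this sketch omits.

```python
from typing import Callable, Iterable, Optional

Event = dict
Transform = Callable[[Event], Optional[Event]]
Route = tuple[Callable[[Event], bool], list]


def run_pipeline(events: Iterable[Event], transforms: list[Transform], routes: list[Route]) -> None:
    """Pass each event through the transforms, then copy it to every matching sink."""
    for event in events:
        for transform in transforms:
            event = transform(event)
            if event is None:  # a transform may drop an event
                break
        if event is None:
            continue
        for matches, sink in routes:
            if matches(event):
                sink.append(event)


def drop_debug(event: Event) -> Optional[Event]:
    return None if event.get("level") == "debug" else event


def add_env(event: Event) -> Event:
    return {**event, "env": "prod"}  # hypothetical enrichment step


alerts: list[Event] = []   # stand-in for an alerting destination
archive: list[Event] = []  # stand-in for long-term storage
incoming = [
    {"level": "debug", "msg": "cache warm"},
    {"level": "error", "msg": "load job failed"},
    {"level": "info", "msg": "load job finished"},
]
run_pipeline(
    incoming,
    transforms=[drop_debug, add_env],
    routes=[(lambda e: e["level"] == "error", alerts), (lambda e: True, archive)],
)
print(alerts, len(archive))
```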

Anomaly Detection in Metadata

Metadata-driven anomaly detection enhances data observability by identifying unusual patterns in data lineage, schema changes, and access logs, enabling issues to be resolved before they impact downstream analytics. Because it examines how data is structured and used rather than only the data values themselves, metadata-driven detection can surface anomalies, such as a sudden spike in queries against a table, that value-level checks might miss, improving the accuracy and reliability of data pipelines.
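As a sketch of access-log anomaly detection, the Python function below flags tables whose access count today deviates from their recent daily history by more than a chosen number of standard deviations (a z-score test). The function name, the input format, and the threshold of 3 are assumptions for illustration.

```python
from statistics import mean, stdev


def access_anomalies(
    history: dict[str, list[int]], today: dict[str, int], threshold: float = 3.0
) -> list[str]:
    """Return tables whose access count today is more than `threshold`
    standard deviations away from their historical daily mean."""
    flagged = []
    for table, counts in history.items():
        if len(counts) < 2:
            continue  # stdev needs at least two data points
        mu, sigma = mean(counts), stdev(counts)
        observed = today.get(table, 0)  # no log entries today means zero accesses
        if sigma == 0:
            if observed != mu:  # any change from a perfectly flat history
                flagged.append(table)
        elif abs(observed - mu) / sigma > threshold:
            flagged.append(table)
    return flagged


# Hypothetical daily query counts per table, derived from access logs
past = {"orders": [100, 110, 90, 105, 95], "customers": [20, 22, 18, 21, 19]}
print(access_anomalies(past, {"orders": 102, "customers": 400}))
```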

Data Quality Telemetry

Data quality telemetry leverages metadata to monitor and analyze data health, enabling proactive identification of anomalies and ensuring accuracy, completeness, and consistency across datasets. Unlike traditional metadata management, data observability integrates real-time performance metrics and lineage tracking to provide actionable insights that enhance data reliability and operational efficiency.
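A minimal Python sketch of quality telemetry: compute completeness per column and uniqueness of a key column for one batch, and emit each result as a time-stamped metric point that could be stored and trended over time. The `quality_telemetry` function and the point format are hypothetical.

```python
from datetime import datetime, timezone


def quality_telemetry(dataset: str, rows: list[dict], key: str, observed_at: datetime) -> list[dict]:
    """Compute per-column completeness and key uniqueness for one batch,
    returning each result as a time-stamped metric point."""
    total = len(rows)
    ts = observed_at.isoformat()
    points = []
    # The column loop only runs when rows exist, so `total` is never zero inside it
    for column in sorted({c for row in rows for c in row}):
        non_null = sum(1 for row in rows if row.get(column) is not None)
        points.append({"dataset": dataset, "metric": "completeness", "column": column,
                       "value": non_null / total, "ts": ts})
    distinct_keys = len({row.get(key) for row in rows})
    points.append({"dataset": dataset, "metric": "uniqueness", "column": key,
                   "value": distinct_keys / total if total else 1.0, "ts": ts})
    return points


batch = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 3, "email": "c@example.com"},
    {"id": 3, "email": "d@example.com"},
]
for point in quality_telemetry("customers", batch, key="id",
                               observed_at=datetime(2024, 5, 1, tzinfo=timezone.utc)):
    print(point)
```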

Active Metadata Management

Active metadata management enhances data observability by continuously capturing, integrating, and analyzing critical metadata from diverse sources, enabling real-time insights into data health, lineage, and usage patterns. This dynamic approach improves data quality monitoring, accelerates root cause analysis, and fosters proactive decision-making across data ecosystems.

End-to-End Data Traceability

Metadata provides descriptive context and lineage details essential for end-to-end data traceability, enabling precise tracking of data origin, transformations, and usage across systems. Data observability enhances this traceability by continuously monitoring data health, quality, and anomalies in real time to ensure reliability and operational efficiency throughout the data lifecycle.

Automated Metadata Classification

Automated metadata classification enhances data observability by systematically organizing and tagging data assets, enabling real-time insights into data quality, lineage, and usage patterns. This process reduces manual intervention, accelerates anomaly detection, and improves overall data governance effectiveness across complex information systems.
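Rule-based tagging is one simple form of automated classification. The Python sketch below tags a column as containing email addresses or phone numbers when most of its non-empty values match a pattern. The tag names, regular expressions, and 80% threshold are assumptions; the patterns are deliberately loose and would need tuning in practice.

```python
import re
from typing import Optional

# Hypothetical rules: tag -> pattern that a whole value must match
RULES = {
    "pii:email": re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}"),
    "pii:phone": re.compile(r"\+?\(?\d[\d\s().-]{6,}\d"),
}


def classify_column(values: list[Optional[str]], min_share: float = 0.8) -> list[str]:
    """Tag a column when at least `min_share` of its non-empty values match a rule."""
    sample = [v for v in values if v]  # ignore None and empty strings
    if not sample:
        return []
    tags = []
    for tag, pattern in RULES.items():
        matches = sum(1 for v in sample if pattern.fullmatch(v))
        if matches / len(sample) >= min_share:
            tags.append(tag)
    return tags


print(classify_column(["a@example.com", "b@example.org", None]))
print(classify_column(["+1 555 123 4567", "(555) 987-6543"]))
print(classify_column(["red", "green", "blue"]))
```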

Data Contract Enforcement

Data contract enforcement in metadata management ensures standardized data definitions and schema adherence, which minimizes discrepancies and enhances data quality. Data observability complements this by continuously monitoring data health and lineage, enabling real-time detection of contract violations and fostering trust in data pipelines.
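A data contract can be expressed as a declared set of expectations that each new batch is checked against as it arrives. The `DataContract` class and `check_contract` function below are a hypothetical Python sketch covering three kinds of terms: required columns and their types, a minimum row count, and a maximum staleness.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract between a data producer and its consumers."""
    dataset: str
    required_columns: dict[str, str]  # column name -> declared type
    min_rows: int
    max_staleness: timedelta


def check_contract(
    contract: DataContract,
    observed_schema: dict[str, str],
    row_count: int,
    last_updated: datetime,
    now: datetime,
) -> list[str]:
    """Return human-readable violations; an empty list means the batch conforms."""
    violations = []
    for column, expected in contract.required_columns.items():
        actual = observed_schema.get(column)
        if actual is None:
            violations.append(f"missing column {column}")
        elif actual != expected:
            violations.append(f"{column} is {actual}, contract says {expected}")
    if row_count < contract.min_rows:
        violations.append(f"only {row_count} rows, contract requires {contract.min_rows}")
    if now - last_updated > contract.max_staleness:
        violations.append(f"data is older than {contract.max_staleness}")
    return violations


orders_contract = DataContract("orders", {"id": "int", "amount": "float"}, 100, timedelta(hours=6))
now = datetime(2024, 6, 1, 12, 0)
print(check_contract(orders_contract, {"id": "int", "amount": "str"}, 50,
                     datetime(2024, 6, 1, 0, 0), now))
```

Extra columns are allowed here, which is one possible design choice; a stricter contract could also reject columns it does not declare.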

Metadata vs Data Observability Infographic

industrydif.com
