High-performance computing (HPC) delivers massive processing power by leveraging supercomputers and large-scale data centers to solve complex scientific problems rapidly. Edge computing processes data closer to the source, reducing latency and bandwidth usage by enabling real-time analysis on local devices. Choosing between HPC and edge computing depends on the need for centralized computational strength versus immediate, localized data processing in scientific applications.

Table of Comparison

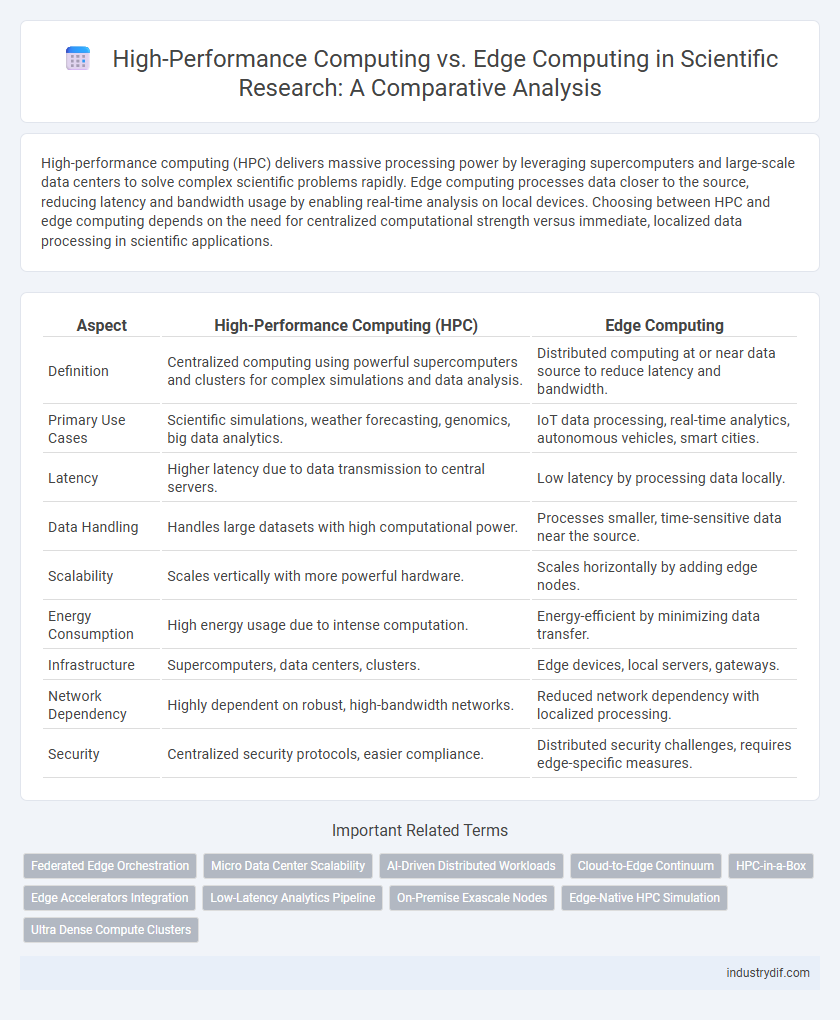

| Aspect | High-Performance Computing (HPC) | Edge Computing |
|---|---|---|
| Definition | Centralized computing using powerful supercomputers and clusters for complex simulations and data analysis. | Distributed computing at or near data source to reduce latency and bandwidth. |
| Primary Use Cases | Scientific simulations, weather forecasting, genomics, big data analytics. | IoT data processing, real-time analytics, autonomous vehicles, smart cities. |
| Latency | Higher latency due to data transmission to central servers. | Low latency by processing data locally. |
| Data Handling | Handles large datasets with high computational power. | Processes smaller, time-sensitive data near the source. |
| Scalability | Scales vertically with more powerful hardware. | Scales horizontally by adding edge nodes. |
| Energy Consumption | High energy usage due to intense computation. | Energy-efficient by minimizing data transfer. |
| Infrastructure | Supercomputers, data centers, clusters. | Edge devices, local servers, gateways. |
| Network Dependency | Highly dependent on robust, high-bandwidth networks. | Reduced network dependency with localized processing. |
| Security | Centralized security protocols, easier compliance. | Distributed security challenges, requires edge-specific measures. |

Defining High-Performance Computing (HPC)

High-Performance Computing (HPC) refers to the use of supercomputers and parallel processing techniques to solve complex computational problems that require immense processing power and high-speed data handling. HPC systems enable scientific simulations, large-scale data analysis, and modeling tasks by leveraging thousands of processors working simultaneously to deliver peak performance. These systems are essential for applications in climate modeling, genomics, and physics that demand extensive computational resources beyond conventional computing capabilities.

Understanding Edge Computing Concepts

Edge computing processes data near the source of generation, reducing latency and bandwidth use compared to centralized high-performance computing (HPC) systems. It enables real-time analytics and rapid decision-making by deploying computational resources at the network's edge, crucial for IoT, autonomous vehicles, and industrial automation. Key edge computing concepts include distributed architecture, data locality, and edge nodes, which collectively optimize performance in latency-sensitive scientific applications.
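To make the idea of data locality concrete, the following is a minimal sketch of an edge node that summarizes raw sensor readings in fixed-size windows and forwards only the summaries. The function names, the window size, and the sample readings are illustrative assumptions, not part of any specific edge platform.

```python
from statistics import mean


def summarize_window(readings):
    """Reduce a window of raw sensor readings to a compact summary."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


def edge_reduce(stream, window_size):
    """Yield one summary per window instead of forwarding every reading."""
    window = []
    for value in stream:
        window.append(value)
        if len(window) == window_size:
            yield summarize_window(window)
            window = []
    if window:  # flush a trailing partial window
        yield summarize_window(window)


# Hypothetical temperature readings collected on an edge device.
raw = [20.1, 20.3, 20.2, 25.9, 20.0, 20.4, 20.2, 20.1]
summaries = list(edge_reduce(raw, window_size=4))
for summary in summaries:
    print(summary)
```

In this sketch eight readings become two summary records, which is the kind of reduction that lowers bandwidth use; how much detail a real deployment can afford to discard depends on the experiment.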

Core Architectural Differences

High-Performance Computing (HPC) relies on centralized supercomputing clusters with tightly coupled processors, leveraging massive parallelism and high-speed interconnects to solve complex simulations and data-intensive scientific problems. Edge Computing distributes processing power closer to data sources using decentralized nodes with limited computational capacity, optimizing real-time analytics and reducing latency in IoT and sensor networks. The core architectural difference lies in HPC's focus on centralized, high-throughput performance versus Edge Computing's emphasis on localized, low-latency data processing.

Computational Power and Processing Speed

High-performance computing (HPC) delivers very high computational throughput by combining supercomputers with massively parallel processing, enabling complex simulations and large-scale data analysis that would be impractical on conventional machines. Edge computing processes data locally near the source, reducing latency and accelerating response times, though it typically offers far less computational power than centralized HPC systems. The trade-off between HPC's raw processing speed and edge computing's proximity-driven responsiveness is critical for applications requiring either intensive computation or real-time data handling.
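One common way to reason about how much speed parallel processing can add is Amdahl's law, which bounds the speedup by the fraction of a job that cannot be parallelized. The sketch below is an illustration of that standard formula; the 95% parallel fraction is an assumed example value, not a measurement from any system.

```python
def amdahl_speedup(parallel_fraction, processors):
    """Theoretical speedup under Amdahl's law.

    parallel_fraction: share of the work that can run in parallel (0.0 to 1.0).
    processors: number of processors working on the parallel share.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


# Assumed example: a job that is 95% parallelizable.
for n in (1, 8, 64, 1000):
    print(n, round(amdahl_speedup(0.95, n), 2))
```

Under this model, even a thousand processors cannot speed up a 95% parallel job by more than a factor of 20, which is why HPC workloads are designed to keep the serial portion as small as possible.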

Data Latency and Real-Time Analytics

High-Performance Computing (HPC) excels in processing vast datasets with powerful centralized resources, enabling complex simulations and detailed scientific analyses, but results often arrive later because data must first travel from the source to the central system. Edge Computing minimizes this latency by processing information close to the data source, supporting the real-time analytics that time-sensitive scientific experiments depend on. Combining HPC's computational power with Edge Computing's low-latency capabilities can optimize scientific workflows requiring both intensive calculations and immediate data insights.
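The latency trade-off can be sketched with a simple model in which end-to-end time is compute time plus, for the centralized case, network transfer time. Every number below (processing rates, link speed, round-trip time, payload size) is an assumed illustrative value rather than a benchmark, and the model ignores queueing and scheduling delays.

```python
def end_to_end_seconds(operations, ops_per_second, payload_bytes=0,
                       bandwidth_bytes_per_s=None, round_trip_s=0.0):
    """Estimate compute time plus optional network transfer time."""
    compute = operations / ops_per_second
    if bandwidth_bytes_per_s is None:
        return compute  # data is processed where it was generated
    transfer = round_trip_s + payload_bytes / bandwidth_bytes_per_s
    return compute + transfer


# Assumed, illustrative parameters.
EDGE_OPS_PER_S = 1e10   # modest edge device
HPC_OPS_PER_S = 1e13    # share of a central cluster
LINK_BYTES_PER_S = 12.5e6  # 100 Mbit/s uplink
ROUND_TRIP_S = 0.05
PAYLOAD_BYTES = 100e6   # 100 MB of raw data


def compare(operations):
    """Return (edge_seconds, hpc_seconds) for a job of the given size."""
    edge = end_to_end_seconds(operations, EDGE_OPS_PER_S)
    hpc = end_to_end_seconds(operations, HPC_OPS_PER_S, PAYLOAD_BYTES,
                             LINK_BYTES_PER_S, ROUND_TRIP_S)
    return edge, hpc


light = compare(1e9)    # small analytics task
heavy = compare(1e15)   # simulation-scale task
print("light job (edge, hpc):", light)
print("heavy job (edge, hpc):", heavy)
```

With these assumed values the light job finishes sooner at the edge because transfer time dominates, while the heavy job finishes sooner on the central system because compute time dominates.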

Scalability and Resource Allocation

High-Performance Computing (HPC) excels in scalability through centralized resource pooling in supercomputers and data centers, enabling massive parallel processing for complex simulations and data analysis. Edge Computing prioritizes localized resource allocation by distributing computational power across network nodes, reducing latency and bandwidth usage for real-time data processing near the source. Scalability in edge environments often requires dynamic resource management protocols to efficiently balance load across heterogeneous devices with limited capacity.
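As a rough illustration of balancing load across heterogeneous edge devices, the sketch below uses a greedy heuristic: tasks are placed largest first, each on the node that would finish it soonest given its relative capacity. The node names, capacities, and task costs are hypothetical, and production schedulers use considerably richer policies.

```python
def assign_tasks(task_costs, node_capacities):
    """Greedily place tasks on the node with the earliest projected finish.

    task_costs: mapping of task name to work units.
    node_capacities: mapping of node name to work units processed per second.
    Returns (placement, loads), where loads holds assigned work units per node.
    """
    loads = {node: 0.0 for node in node_capacities}
    placement = {node: [] for node in node_capacities}
    # Placing the largest tasks first tends to give a more even result.
    ordered = sorted(task_costs.items(), key=lambda item: item[1], reverse=True)
    for task, cost in ordered:
        best = min(
            node_capacities,
            key=lambda node: (loads[node] + cost) / node_capacities[node],
        )
        loads[best] += cost
        placement[best].append(task)
    return placement, loads


# Hypothetical edge nodes: a gateway four times faster than a sensor hub.
capacities = {"gateway": 4.0, "sensor_hub": 1.0}
tasks = {"a": 8, "b": 4, "c": 3, "d": 2}
placement, loads = assign_tasks(tasks, capacities)
print(placement)
print(loads)
```

The heuristic accounts for limited capacity by comparing projected finish times rather than raw task counts, so the slower node receives less work.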

Security Implications in Scientific Research

High-Performance Computing (HPC) environments typically offer centralized architectures with robust security protocols, enabling comprehensive data encryption and controlled access critical for protecting sensitive scientific research data. In contrast, Edge Computing distributes data processing closer to data sources, increasing exposure to potential cyber threats due to a larger attack surface and varying security standards across edge devices. Ensuring data integrity and confidentiality in scientific research requires implementing advanced authentication mechanisms and secure communication protocols tailored to the unique security challenges posed by both HPC and Edge Computing infrastructures.
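One building block for authenticating data sent from edge devices is a message authentication code. The sketch below uses Python's standard `hmac` and `hashlib` modules to sign and verify a sensor payload with a shared secret; the key, the payload fields, and the function names are illustrative assumptions. It covers integrity and authenticity only, so confidentiality would still need encryption, for example via TLS.

```python
import hashlib
import hmac
import json


def sign_message(payload, key):
    """Serialize a payload and compute an HMAC-SHA256 tag over it."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return body, tag


def verify_message(body, tag, key):
    """Check the tag with a constant-time comparison."""
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


# Demo key only; a real deployment would provision per-device secrets securely.
shared_key = b"demo-key-not-for-production"
body, tag = sign_message({"sensor_id": "probe-7", "temperature_c": 21.5}, shared_key)

print(verify_message(body, tag, shared_key))                       # genuine message
print(verify_message(body.replace(b"21.5", b"99.9"), tag, shared_key))  # tampered message
```

A receiving system would reject any message whose tag fails verification, which guards against tampering in transit across the larger attack surface of an edge deployment.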

Energy Efficiency and Environmental Impact

High-performance computing (HPC) centers typically consume vast amounts of electrical power, resulting in significant carbon footprints due to their reliance on centralized data centers and intensive cooling systems. Edge computing can reduce energy consumption by processing data closer to its source, which minimizes the volume of data that must be transmitted to central facilities. Optimizing computing resources at the edge supports sustainable technology deployment by reducing greenhouse gas emissions associated with large-scale computing infrastructure.

Use Cases in Scientific Applications

High-performance computing (HPC) excels in complex simulations and large-scale data analysis for climate modeling, genomics, and particle physics by leveraging massive parallel processing capabilities. Edge computing enables real-time data processing in remote scientific environments such as environmental monitoring, autonomous drones, and space exploration, where low latency and bandwidth efficiency are critical. Combining HPC's computational power with edge computing's proximity to data sources enhances adaptive scientific experiments and accelerates insight generation in distributed research infrastructures.

Future Trends: Integrating HPC and Edge Solutions

Future trends in high-performance computing (HPC) emphasize seamless integration with edge computing to address latency and data processing demands in scientific research. Advances in distributed architectures and AI acceleration enable real-time analytics at the edge while leveraging HPC's massive computational power for complex simulations and modeling. This hybrid approach drives innovations in fields like climate modeling, genomics, and autonomous systems by combining scalability with localized intelligence.

Related Important Terms

Federated Edge Orchestration

Federated edge orchestration integrates distributed high-performance computing resources across multiple edge devices to enable efficient, scalable data processing and real-time analytics while preserving data privacy. This approach optimizes workload distribution and resource management, reducing latency and bandwidth usage compared to centralized cloud HPC systems.
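A simplified way to picture the privacy-preserving side of this approach is federated aggregation: each edge node shares only a local summary, and a coordinator combines the summaries into a global statistic. The sketch below computes a global mean this way; the node data and function names are hypothetical, and sharing aggregates limits rather than eliminates what can be inferred about the raw data.

```python
def local_summary(readings):
    """Computed on the edge node; raw readings never leave the device."""
    return {"total": sum(readings), "count": len(readings)}


def federated_mean(summaries):
    """Computed by the coordinator from per-node summaries only."""
    total = sum(summary["total"] for summary in summaries)
    count = sum(summary["count"] for summary in summaries)
    if count == 0:
        raise ValueError("no readings reported by any node")
    return total / count


# Hypothetical readings held on two separate edge nodes.
node_a = [1.0, 2.0, 3.0]
node_b = [10.0]

summaries = [local_summary(node_a), local_summary(node_b)]
global_mean = federated_mean(summaries)
print(global_mean)
```

Carrying the count alongside the total weights each node by how much data it holds, so the result matches the mean of the pooled readings rather than a plain average of per-node means.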

Micro Data Center Scalability

High-performance computing (HPC) provides the massive parallel processing power needed for complex simulations and data analysis, but micro data centers at the edge must balance computational intensity against limited physical space and energy budgets. They scale by distributing processing closer to data sources, which reduces latency and bandwidth usage while supporting real-time scientific applications in remote or resource-limited environments.

AI-Driven Distributed Workloads

High-Performance Computing (HPC) excels in centralized processing of large-scale AI-driven workloads, enabling complex simulations and data analysis with immense computational power. Edge Computing distributes AI tasks to localized devices, reducing latency and bandwidth use by processing data closer to its source, crucial for real-time applications in scientific research and IoT environments.

Cloud-to-Edge Continuum

High-performance computing (HPC) delivers immense processing power for complex scientific simulations within centralized data centers, while edge computing processes data locally near the source to reduce latency and enhance real-time decision-making. Integrating HPC with edge computing in a cloud-to-edge continuum enables seamless data flow and optimized resource allocation across distributed infrastructures, accelerating scientific discovery through hybrid computational models.

HPC-in-a-Box

High-Performance Computing (HPC) delivers massive parallel processing power typically housed in centralized data centers, while Edge Computing brings computation closer to data sources for real-time processing. HPC-in-a-Box solutions integrate advanced HPC capabilities into compact, portable units enabling localized scientific simulations and data analysis with reduced latency and greater computational efficiency at the edge.

Edge Accelerators Integration

Edge accelerators enhance edge computing performance by providing specialized hardware, such as GPUs, FPGAs, and ASICs, designed to process complex workloads locally with low latency and reduced power consumption. This integration enables real-time data analysis and AI inference at the edge, crucial for applications in autonomous systems, industrial IoT, and smart cities, where bandwidth and connectivity limitations restrict reliance on centralized high-performance computing resources.

Low-Latency Analytics Pipeline

High-performance computing (HPC) systems excel in processing large-scale simulations and complex computations with immense parallel processing power, enabling deep analytics through centralized data centers. Edge computing reduces latency by processing data near its source, facilitating real-time analytics pipelines critical for applications such as autonomous vehicles, predictive maintenance, and IoT sensor networks.
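A real-time analytics stage at the edge can be as small as a sliding-window check that flags unusual readings as they arrive. The sketch below flags a value when it deviates from the recent window mean by more than a chosen number of standard deviations; the window size, threshold, and sample stream are illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(stream, window_size=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent window mean."""
    window = deque(maxlen=window_size)
    for index, value in enumerate(stream):
        if len(window) == window_size:
            mu = mean(window)
            sigma = pstdev(window)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield index, value
        # The reading joins the window either way, so a flagged value
        # temporarily widens the spread used for the next few checks.
        window.append(value)


# Hypothetical sensor stream with one obvious spike.
readings = [10.0, 10.1, 9.9, 10.0, 10.2, 30.0, 10.1, 10.0]
anomalies = list(detect_anomalies(readings))
print(anomalies)
```

Because the check needs only the last few readings, it can run on a constrained device and raise an alert without a round trip to a central data center.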

On-Premise Exascale Nodes

High-performance computing (HPC) leverages on-premise exascale nodes to deliver unprecedented computational power for large-scale scientific simulations, data analysis, and complex modeling. Edge computing complements HPC by processing data near its source, reducing latency and bandwidth usage, but on-premise exascale HPC infrastructures remain essential for achieving maximum processing speed and handling extensive datasets in scientific research.

Edge-Native HPC Simulation

Edge-native HPC simulation leverages distributed computing resources at the network edge to reduce latency and enhance real-time data processing capabilities, optimizing performance for applications such as autonomous systems and IoT analytics. By integrating lightweight, scalable algorithms directly on edge devices, this approach addresses bandwidth limitations and supports faster decision-making compared to traditional centralized high-performance computing architectures.

Ultra Dense Compute Clusters

Ultra dense compute clusters in High-Performance Computing (HPC) environments enable massive parallel processing in a compact footprint, relying on optimized cooling and energy-efficient design to support complex simulations and data-intensive tasks. Deployed at the edge, such clusters bring real-time data processing near the source, reducing latency and bandwidth usage for applications like autonomous systems and IoT analytics.

High-Performance Computing vs Edge Computing Infographic

industrydif.com
