Artificial Intelligence (AI) encompasses a broad range of technologies designed to simulate human intelligence, including machine learning, natural language processing, and robotics. Generative Pre-trained Transformer (GPT) is a specialized AI model architecture focused on understanding and generating human-like text through deep learning and large-scale pre-training. While AI serves as the overarching field, GPT represents a cutting-edge approach within natural language processing that enables advanced conversational agents and content creation.

Table of Comparison

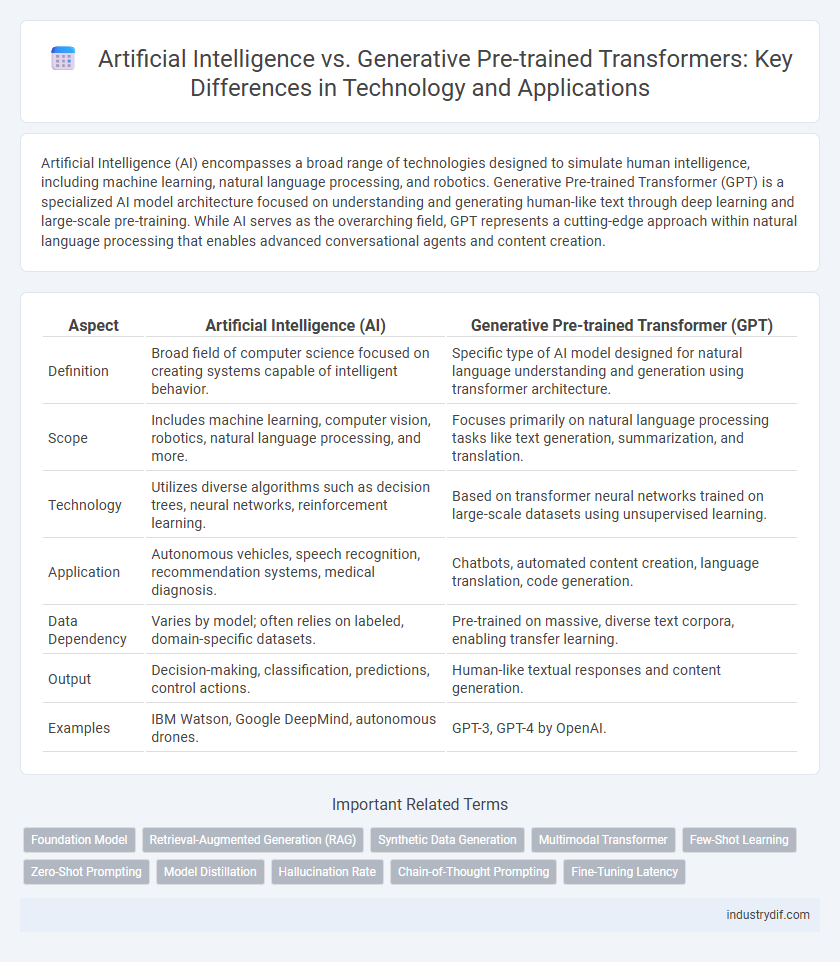

| Aspect | Artificial Intelligence (AI) | Generative Pre-trained Transformer (GPT) |
|---|---|---|
| Definition | Broad field of computer science focused on creating systems capable of intelligent behavior. | Specific type of AI model designed for natural language understanding and generation using transformer architecture. |
| Scope | Includes machine learning, computer vision, robotics, natural language processing, and more. | Focuses primarily on natural language processing tasks like text generation, summarization, and translation. |
| Technology | Utilizes diverse algorithms such as decision trees, neural networks, reinforcement learning. | Based on transformer neural networks trained on large-scale datasets using unsupervised learning. |
| Application | Autonomous vehicles, speech recognition, recommendation systems, medical diagnosis. | Chatbots, automated content creation, language translation, code generation. |
| Data Dependency | Varies by model; often relies on labeled, domain-specific datasets. | Pre-trained on massive, diverse text corpora, enabling transfer learning. |
| Output | Decision-making, classification, predictions, control actions. | Human-like textual responses and content generation. |
| Examples | IBM Watson, Google DeepMind, autonomous drones. | GPT-3, GPT-4 by OpenAI. |

Defining Artificial Intelligence: Scope and Capabilities

Artificial Intelligence (AI) encompasses a broad range of technologies designed to perform tasks that typically require human intelligence, drawing on subfields such as machine learning, natural language processing, and computer vision. Generative Pre-trained Transformers (GPT) are a specific subset of AI models focused on understanding and generating human-like text, trained on vast text datasets. While AI's scope spans applications from robotics to data analytics, GPT excels in language generation, demonstrating advanced capabilities in contextual comprehension and content creation.

Introduction to Generative Pre-trained Transformers

Generative Pre-trained Transformers (GPT) represent a specialized subset of artificial intelligence centered on natural language processing and generation through deep learning architectures. Leveraging extensive pre-training on vast text corpora, GPT models excel in understanding context and producing coherent, human-like text across diverse applications. Their transformer-based architecture facilitates parallel processing and attention mechanisms that significantly enhance language modeling tasks compared to traditional AI approaches.
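The attention mechanism mentioned above can be illustrated with a minimal sketch of scaled dot-product attention in plain Python. This is an illustrative toy under stated assumptions, not how any GPT model is actually implemented: the function names `softmax` and `attention` are chosen here for illustration, and the sketch omits the learned projection matrices, multiple heads, and causal masking that real GPT models use.

```python
import math

def softmax(xs):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Each output row is a weighted average of the value vectors, where the
    weights come from how strongly the query matches each key.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs

# Toy "token" vectors; in self-attention the same sequence supplies
# queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = attention(tokens, tokens, tokens)
```

Each query row is handled independently of the others, which is why the computation parallelizes well across a sequence.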

Key Differences Between AI and GPT Architectures

Artificial Intelligence (AI) encompasses a broad range of technologies designed to simulate human intelligence, while Generative Pre-trained Transformers (GPT) are a specific type of AI model optimized for natural language processing tasks using deep learning and transformer architectures. AI systems can include rule-based algorithms, decision trees, and reinforcement learning, whereas GPT models rely on large-scale unsupervised learning and attention mechanisms to generate coherent text from vast datasets. The primary distinction lies in AI's general scope versus GPT's specialized design for language generation and understanding through pre-training and fine-tuning phases.

Core Use Cases: AI Systems vs. GPT Models

Artificial Intelligence systems excel in automating complex decision-making processes, optimizing supply chains, and enhancing predictive analytics across various industries. Generative Pre-trained Transformer models specialize in natural language understanding, content generation, and conversational AI, powering applications like chatbots, language translation, and content creation. Core use cases differentiate AI's broad problem-solving capabilities from GPT's focus on language-based tasks leveraging deep learning and large-scale pre-training.

Training Methods: Traditional AI vs. Generative Pre-training

Traditional AI training methods rely on rule-based programming and supervised learning with labeled datasets to perform specific tasks, emphasizing explicit algorithmic instructions. Generative Pre-trained Transformers (GPT), however, use self-supervised pre-training (often described as unsupervised) on massive text corpora: the model learns language patterns and context by predicting the next token, and is then fine-tuned for downstream applications. This shift enables GPT models to generate coherent and contextually relevant responses without task-specific programming, enhancing adaptability and scalability in natural language processing.
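To make the contrast with labeled datasets concrete, here is a small sketch of how pre-training examples can be derived from raw text with no manual labels: every position in the text supplies its own target, namely the next token. The function name `next_token_pairs`, the whitespace tokenizer, and the `context_size` parameter are simplifying assumptions made for illustration; real GPT models use subword tokenizers and far longer contexts.

```python
def next_token_pairs(text, context_size=3):
    """Build (context, target) training pairs from unlabeled text.

    The "label" for each example is simply the token that follows the
    context, so no human annotation is needed.
    """
    tokens = text.split()  # assumption: naive whitespace tokenization
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs

pairs = next_token_pairs("the model predicts the next word")
for context, target in pairs:
    print(context, "->", target)
```

A supervised classifier would instead need a separately annotated label for every example, which is the cost that pre-training on raw text avoids.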

Processing Natural Language: AI Approaches vs. GPT

Artificial Intelligence (AI) employs various techniques such as rule-based systems, machine learning, and deep learning to process natural language, enabling tasks like sentiment analysis and language translation. Generative Pre-trained Transformer (GPT), a specific AI model, leverages transformer architecture and unsupervised pre-training on massive text corpora to generate coherent and context-aware language outputs. Unlike traditional AI methods, GPT excels in understanding context, generating human-like text, and performing zero-shot learning tasks without task-specific training.

Advantages and Limitations of AI and GPT Models

Artificial Intelligence encompasses a broad range of technologies capable of performing complex tasks, including machine learning, natural language processing, and computer vision, offering adaptability across diverse applications but often requiring significant computational resources. Generative Pre-trained Transformers (GPT) specialize in producing coherent, human-like text by leveraging vast datasets and deep neural networks, excelling in language generation yet still struggling with long-range or ambiguous context and remaining susceptible to biased outputs. While AI systems provide versatile problem-solving capabilities, GPT models are optimized for language-centric tasks and must be carefully managed to mitigate limitations like data dependency and interpretability issues.

Impact on Industry: AI Applications vs. GPT Solutions

Artificial Intelligence drives industry transformation through automated data analysis, predictive maintenance, and intelligent decision-making, enhancing operational efficiency across sectors like manufacturing, finance, and healthcare. Generative Pre-trained Transformer models specifically revolutionize natural language processing tasks, enabling advanced applications such as content creation, customer support automation, and personalized marketing strategies. The convergence of AI and GPT technologies accelerates innovation by combining broad AI capabilities with sophisticated language generation, resulting in customized, scalable solutions for diverse industrial challenges.

Security and Ethical Considerations in AI and GPT

Artificial Intelligence (AI) and Generative Pre-trained Transformer (GPT) models both present unique security and ethical challenges, including data privacy risks, bias propagation, and misuse potential. Implementing robust security measures and ethical guidelines is critical to prevent unauthorized data access, mitigate algorithmic biases, and ensure responsible AI usage. Continuous monitoring and transparent governance frameworks are essential to address evolving threats and maintain public trust in AI and GPT technologies.

Future Trends: Convergence of AI and Generative Transformer Technologies

Artificial Intelligence and Generative Pre-trained Transformers (GPT) are increasingly converging, driving advances in natural language understanding, content creation, and decision-making automation. Future trends highlight the integration of AI's broader cognitive capabilities with GPT's sophisticated language modeling to enhance human-computer interaction and predictive analytics. This convergence will accelerate innovation in personalized AI applications, multimodal learning, and real-time adaptive systems across industries.

Related Important Terms

Foundation Model

Artificial Intelligence encompasses a broad range of technologies enabling machines to perform tasks requiring human intelligence, while Generative Pre-trained Transformers (GPT) represent a specialized subset of foundation models designed for advanced natural language processing through extensive pre-training on vast datasets. Foundation models like GPT leverage deep learning architectures to generalize across diverse tasks by extracting semantic patterns, positioning them as a transformative shift within the AI landscape toward more adaptable and scalable solutions.

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) integrates large-scale external knowledge bases with Generative Pre-trained Transformers (GPT), enhancing AI models by combining retrieval mechanisms and generative capabilities for more accurate and context-aware responses. This hybrid approach leverages real-time information retrieval to overcome limitations of fixed training data, significantly improving performance in complex tasks such as question answering and information synthesis.
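The retrieve-then-generate flow can be sketched in a few lines. This is a toy illustration under stated assumptions: retrieval here uses bag-of-words cosine similarity rather than the learned embeddings typical of production systems, the function names (`bag_of_words`, `cosine`, `retrieve`, `build_prompt`) and the prompt layout are hypothetical, and the final call to a generative model is left out.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Count lowercase whitespace-separated words."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, bag_of_words(d)),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(question, documents, k=1):
    """Place retrieved text ahead of the question for the generator."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

docs = [
    "Predictive maintenance uses sensor data to schedule repairs",
    "Transformers use attention to model relationships between tokens",
]
prompt = build_prompt("How do transformers use attention", docs)
print(prompt)
```

Because the knowledge lives in `docs` rather than in model weights, updating the document store changes what the generator sees without any retraining.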

Synthetic Data Generation

Artificial Intelligence encompasses a broad range of techniques for synthetic data generation, while Generative Pre-trained Transformers (GPT) leverage deep learning architectures to create highly realistic, contextually accurate synthetic datasets. GPT models, trained on vast corpora, excel in producing textual synthetic data that enhances machine learning model training, data augmentation, and simulation environments within AI applications.

Multimodal Transformer

Artificial Intelligence encompasses a broad range of technologies designed to perform tasks that typically require human intelligence, whereas Generative Pre-trained Transformers (GPT) represent a specialized subset of AI models focused on natural language processing and generation. Multimodal Transformers extend the capabilities of GPT by integrating and processing multiple data modalities, such as text, images, and audio, enabling more comprehensive understanding and generation of context-rich outputs.

Few-Shot Learning

Artificial Intelligence encompasses various methodologies enabling machines to mimic human cognition, with Few-Shot Learning allowing models to generalize from minimal training examples. Generative Pre-trained Transformers (GPT) excel at Few-Shot Learning because large-scale pre-training on diverse datasets lets them adapt to a new task from a handful of examples, often supplied directly in the prompt without any weight updates.
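In practice, few-shot use of a GPT model often amounts to formatting a handful of worked examples into the prompt. The sketch below shows one possible layout; the function name `few_shot_prompt` and the `Input:`/`Output:` labels are assumptions made for illustration, not a format required by any particular model.

```python
def few_shot_prompt(examples, query, instruction=""):
    """Format (input, output) examples followed by an unanswered query."""
    blocks = [instruction] if instruction else []
    for text, label in examples:
        blocks.append(f"Input: {text}\nOutput: {label}")
    # The final block leaves the output blank for the model to complete.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)

examples = [
    ("The delivery arrived early and intact", "positive"),
    ("The device stopped working after a day", "negative"),
]
prompt = few_shot_prompt(
    examples,
    "Setup was quick and painless",
    instruction="Classify the sentiment of each input.",
)
print(prompt)
```

Passing an empty `examples` list yields a prompt with only the instruction and the query, which corresponds to the zero-shot setting.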

Zero-Shot Prompting

Zero-shot prompting enables Artificial Intelligence models, particularly Generative Pre-trained Transformers (GPT), to perform tasks without prior task-specific training by leveraging extensive pre-trained knowledge. This capability allows GPT models to understand and generate responses based on novel prompts, demonstrating advanced generalization and reasoning skills directly from natural language input.

Model Distillation

Model distillation in Artificial Intelligence compresses a large Generative Pre-trained Transformer (GPT) model into a smaller, more efficient one while retaining most of its performance. A compact student model is trained to reproduce the outputs of the larger teacher model, which makes deployment on resource-constrained devices more practical.
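One common way to transfer knowledge is to train the small model to match the large model's softened output probabilities. The sketch below computes such a loss for a single prediction in plain Python. It assumes the widely used temperature-scaled KL-divergence formulation; the function names and the example logits are illustrative, and a real training loop would combine this term with a standard loss over many examples.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with a temperature; higher values flatten the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's to the student's softened outputs.

    The temperature**2 factor is the conventional rescaling that keeps
    gradient magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]   # hypothetical logits from the large model
student = [2.5, 1.5, 0.2]   # hypothetical logits from the small model
loss = distillation_loss(teacher, student)
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge.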

Hallucination Rate

Artificial Intelligence encompasses a broad range of technologies, while Generative Pre-trained Transformers (GPT) are specialized AI models for natural language processing that are susceptible to hallucination, where the model generates plausible but incorrect information. The hallucination rate of a GPT model varies with training data quality and prompt specificity, making error mitigation techniques essential for reliable AI outputs in critical applications.

Chain-of-Thought Prompting

Artificial Intelligence encompasses broad computational methods for simulating human cognition, whereas Generative Pre-trained Transformer (GPT) specifically utilizes deep learning architectures with vast text corpora for language generation. Chain-of-Thought Prompting enhances GPT models by enabling step-by-step reasoning through intermediate logical steps, significantly improving performance on complex problem-solving and multi-hop question answering tasks.

Fine-Tuning Latency

Fine-tuning latency, the time needed to adapt a model to a new task, varies significantly between traditional AI models and Generative Pre-trained Transformers (GPTs), with GPT architectures typically taking longer to adapt because of their large parameter counts and deep layer stacks. Parameter-efficient fine-tuning techniques such as low-rank adaptation (LoRA) reduce this cost by training only a small number of added or selected weights while the rest stay frozen, improving applicability in resource-constrained environments.
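The savings from low-rank adaptation come from simple arithmetic: instead of training a full weight update of size d_out × d_in, it trains two thin matrices whose product has the same shape. The sketch below counts trainable parameters for both cases and forms the low-rank update for a tiny example. The function names and the 4096 × 4096 layer size with rank 8 are illustrative assumptions, not figures for any specific GPT model.

```python
def full_update_params(d_out, d_in):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in

def lora_update_params(d_out, d_in, rank):
    """Trainable parameters for B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)

def lora_delta(B, A):
    """Low-rank weight update: the matrix product B @ A."""
    inner = len(A)
    return [
        [sum(B[i][k] * A[k][j] for k in range(inner)) for j in range(len(A[0]))]
        for i in range(len(B))
    ]

full = full_update_params(4096, 4096)
low_rank = lora_update_params(4096, 4096, rank=8)
print(full, low_rank, full // low_rank)

# A rank-1 update to a 2 x 2 weight matrix, built from 4 numbers total.
delta = lora_delta([[1.0], [2.0]], [[3.0, 4.0]])
```

Fewer trainable parameters mean less optimizer state and gradient computation per step, which is where the reduced adaptation time comes from.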

Artificial Intelligence vs Generative Pre-trained Transformer Infographic

industrydif.com
