Big Data processes vast amounts of information from diverse sources, enabling complex analytics and decision-making, while TinyML specializes in deploying low-power machine learning models on compact IoT devices for real-time, edge-based inference. The key difference lies in their operational scale; Big Data relies on significant cloud resources, whereas TinyML emphasizes local processing with minimal energy consumption. This contrast highlights the complementary roles of centralized data analysis versus decentralized, efficient on-device intelligence in modern technical ecosystems.

Table of Comparison

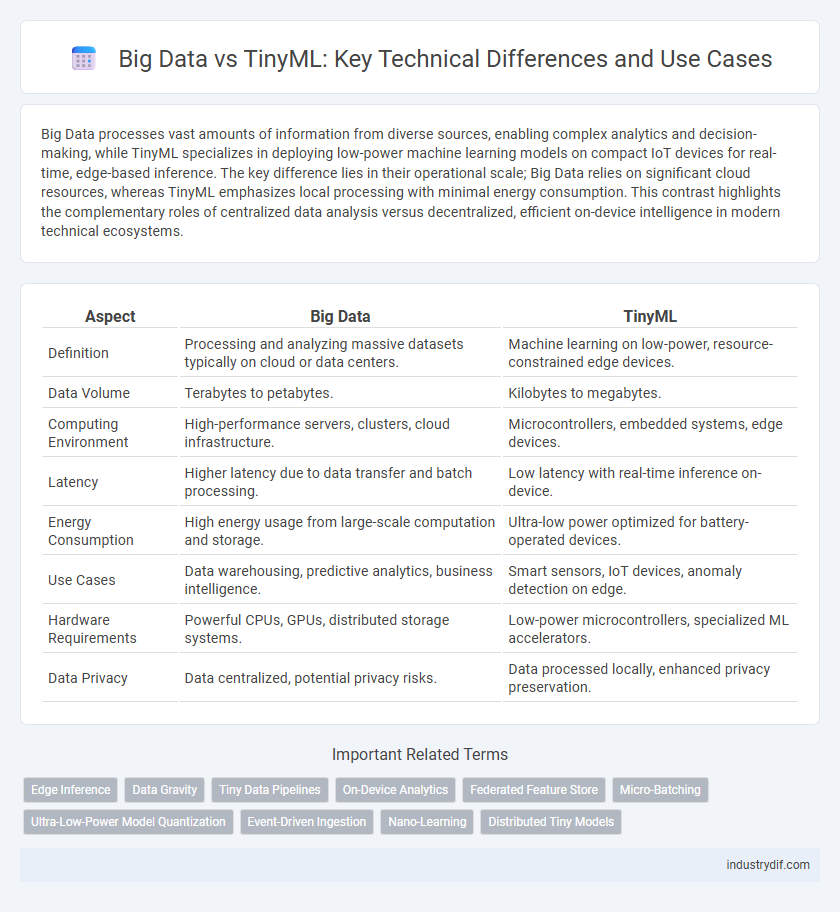

| Aspect | Big Data | TinyML |

|---|---|---|

| Definition | Processing and analyzing massive datasets typically on cloud or data centers. | Machine learning on low-power, resource-constrained edge devices. |

| Data Volume | Terabytes to petabytes. | Kilobytes to megabytes. |

| Computing Environment | High-performance servers, clusters, cloud infrastructure. | Microcontrollers, embedded systems, edge devices. |

| Latency | Higher latency due to data transfer and batch processing. | Low latency with real-time inference on-device. |

| Energy Consumption | High energy usage from large-scale computation and storage. | Ultra-low power optimized for battery-operated devices. |

| Use Cases | Data warehousing, predictive analytics, business intelligence. | Smart sensors, IoT devices, anomaly detection on edge. |

| Hardware Requirements | Powerful CPUs, GPUs, distributed storage systems. | Low-power microcontrollers, specialized ML accelerators. |

| Data Privacy | Data centralized, potential privacy risks. | Data processed locally, enhanced privacy preservation. |

Understanding Big Data: Concepts and Applications

Big Data involves the processing and analysis of massive datasets that exceed traditional data handling capacities, utilizing technologies such as Hadoop and Apache Spark for scalable storage and parallel computation. It powers applications in diverse fields including finance, healthcare, and marketing by enabling predictive analytics, customer segmentation, and fraud detection. Understanding foundational concepts like volume, velocity, and variety is crucial to leveraging Big Data's potential for informed decision-making and operational efficiency.

Introduction to TinyML: Powering AI on the Edge

TinyML enables AI models to run directly on low-power edge devices, significantly reducing latency and reliance on cloud connectivity compared to traditional Big Data approaches. This technology leverages optimized machine learning algorithms tailored for microcontrollers, allowing real-time data processing and decision-making in constrained environments. By minimizing data transfer and energy consumption, TinyML enhances efficiency and privacy in edge computing applications.

Key Differences Between Big Data and TinyML

Big Data involves processing and analyzing massive, complex datasets typically stored in cloud or data centers, requiring high computational power and advanced algorithms for data mining, machine learning, and statistical analysis. TinyML focuses on executing machine learning models on resource-constrained edge devices like microcontrollers, emphasizing low latency, minimal energy consumption, and on-device inferencing without relying on continuous internet connectivity. The primary differences lie in data volume, processing location, computational requirements, and application scope, with Big Data targeting large-scale analytics and TinyML enabling real-time intelligence on embedded systems.

Data Processing: Centralized vs Distributed Models

Big Data relies on centralized data processing architectures that aggregate massive datasets in data centers for intensive analytics, enabling complex queries and large-scale machine learning. TinyML employs distributed data processing directly on edge devices, minimizing latency and bandwidth usage by performing inference locally on microcontrollers. The centralized model prioritizes raw data accumulation and heavy computation, whereas the distributed TinyML model emphasizes real-time, resource-efficient processing with constrained hardware.

Scalability and Resource Management in Big Data and TinyML

Big Data systems excel in scalability by leveraging distributed computing frameworks like Hadoop and Spark, enabling the processing of petabytes of data across numerous nodes with dynamic resource allocation. TinyML focuses on efficient resource management by optimizing machine learning models to run on low-power, memory-constrained edge devices, ensuring minimal energy consumption and reduced latency. While Big Data prioritizes massive scalability through cloud and data center infrastructures, TinyML emphasizes localized, resource-efficient inference to meet constraints of embedded environments.

Real-Time Analytics: Big Data Versus Ultra-Low Latency in TinyML

Big Data analytics processes vast datasets in distributed systems, optimizing insights over prolonged periods but often with latency constraints unsuitable for real-time applications. TinyML enables ultra-low latency inference directly on edge devices by leveraging compact machine learning models, facilitating instantaneous decision-making in resource-constrained environments. This real-time processing capability positions TinyML as a critical innovation for applications demanding immediate analytics, contrasting with Big Data's bulk data-driven approach.

Power Consumption and Hardware Requirements

Big Data analytics requires substantial processing power and storage capabilities, demanding high-performance servers with significant energy consumption often measured in kilowatts. TinyML operates on ultra-low-power microcontrollers, consuming milliwatts of power, enabling edge devices to perform machine learning tasks without relying on cloud infrastructure or large-scale hardware. This results in reduced latency and energy costs, making TinyML ideal for real-time, resource-constrained applications.

Security and Privacy Considerations

Big Data systems process vast volumes of information, often centralized in cloud environments, raising concerns about data breaches and unauthorized access due to the aggregation of sensitive user data. TinyML operates on edge devices, minimizing data transfer and reducing exposure to network-based attacks, thus enhancing privacy by keeping data localized and processed in real-time. Security in TinyML is strengthened through lightweight encryption and hardware-based protections, whereas Big Data environments require robust, scalable solutions like multi-factor authentication and continuous monitoring to safeguard extensive datasets.

Use Cases: From Industrial IoT to Personalized Devices

Big Data analytics drives industrial IoT applications by processing massive datasets from sensors to optimize manufacturing, predictive maintenance, and supply chain management. TinyML enables real-time, on-device intelligence in personalized devices such as wearables and smart home gadgets, enhancing user experience with low latency and energy efficiency. Combining Big Data's scalability and TinyML's edge computing addresses diverse use cases, from large-scale industrial systems to individualized, context-aware applications.

Future Trends: Bridging Big Data and TinyML Technologies

Emerging trends in Big Data and TinyML are converging to enable edge computing with enhanced data processing capabilities, reducing latency and bandwidth requirements. Future architectures leverage hybrid systems that integrate large-scale cloud analytics with real-time, on-device machine learning models for optimized decision-making. Advances in federated learning, low-power AI accelerators, and scalable data pipelines will drive the seamless fusion of Big Data infrastructures and TinyML applications.

Related Important Terms

Edge Inference

Edge inference in TinyML enables real-time data processing directly on low-power devices, reducing latency and bandwidth usage compared to traditional Big Data approaches that rely on centralized cloud computing. This localized processing enhances privacy and operational efficiency, making TinyML ideal for applications where immediate decision-making and minimal data transmission are critical.

Data Gravity

Big Data relies on centralized data storage and processing due to significant Data Gravity, causing latency and bandwidth challenges when moving massive datasets. TinyML minimizes Data Gravity impact by enabling on-device machine learning, reducing data transfer and enabling real-time analytics at the edge.

Tiny Data Pipelines

Tiny data pipelines in TinyML enable efficient on-device machine learning by processing minimal datasets locally, reducing latency and bandwidth consumption compared to traditional Big Data pipelines. These lightweight, resource-constrained pipelines optimize memory and compute resources, making edge AI applications more scalable and responsive.

On-Device Analytics

On-device analytics in TinyML enables real-time data processing and decision-making directly on embedded devices, reducing latency and preserving privacy compared to cloud-dependent Big Data approaches. This localized computation minimizes data transmission costs and enhances efficiency in applications with limited connectivity or stringent security requirements.

Federated Feature Store

Federated Feature Store in TinyML enables decentralized data processing and real-time analytics at the edge, reducing latency and bandwidth requirements compared to traditional Big Data centralized systems. This approach enhances privacy and scalability by allowing local model training on distributed devices while synchronizing shared feature sets across the network.

Micro-Batching

Micro-batching in Big Data frameworks processes data in small chunks to optimize latency and throughput, while TinyML leverages micro-batching to efficiently run machine learning models on resource-constrained edge devices, balancing performance and power consumption. The implementation of micro-batching in TinyML enables near real-time inference by aggregating micro-scale data streams without overwhelming limited computational resources.

Ultra-Low-Power Model Quantization

Ultra-low-power model quantization in TinyML enables efficient edge inference by compressing deep learning models to fit constrained memory and energy budgets, contrasting with Big Data approaches that rely on extensive cloud computations and large-scale data storage. This technique reduces bit-width precision and prunes parameters, facilitating real-time analytics on microcontrollers without sacrificing significant accuracy.

Event-Driven Ingestion

Event-driven ingestion in Big Data systems enables real-time processing of massive data streams by capturing and analyzing events as they occur, supporting scalable storage and analytics. TinyML leverages event-driven ingestion at the edge, optimizing resource-constrained devices to process sensor data locally with minimal latency and reduced bandwidth consumption.

Nano-Learning

Nano-learning, a subset of TinyML, enables on-device processing of micro-datasets with minimal latency and power consumption, contrasting with Big Data's reliance on extensive cloud-based analytics requiring substantial computational resources. This shift towards localized, real-time decision-making in Nano-learning optimizes performance for edge devices, enhancing IoT and embedded system applications.

Distributed Tiny Models

Distributed Tiny Models in TinyML enable real-time, edge-level data processing with minimal latency, contrasting with Big Data's centralized, large-scale data analytics requiring substantial computational resources. Leveraging distributed architectures, TinyML optimizes energy efficiency and privacy by performing localized inference across multiple microcontrollers, transforming IoT and edge applications.

Big Data vs TinyML Infographic

industrydif.com

industrydif.com