Web servers run on dedicated infrastructure that the operator must configure, maintain, and scale, which increases operational complexity. Serverless architecture removes server management from the developer's responsibilities: the cloud platform runs code on demand, scales it automatically, and reduces wasted capacity. This approach improves agility and cost-efficiency, especially for applications with variable or unpredictable traffic.

Comparison Table

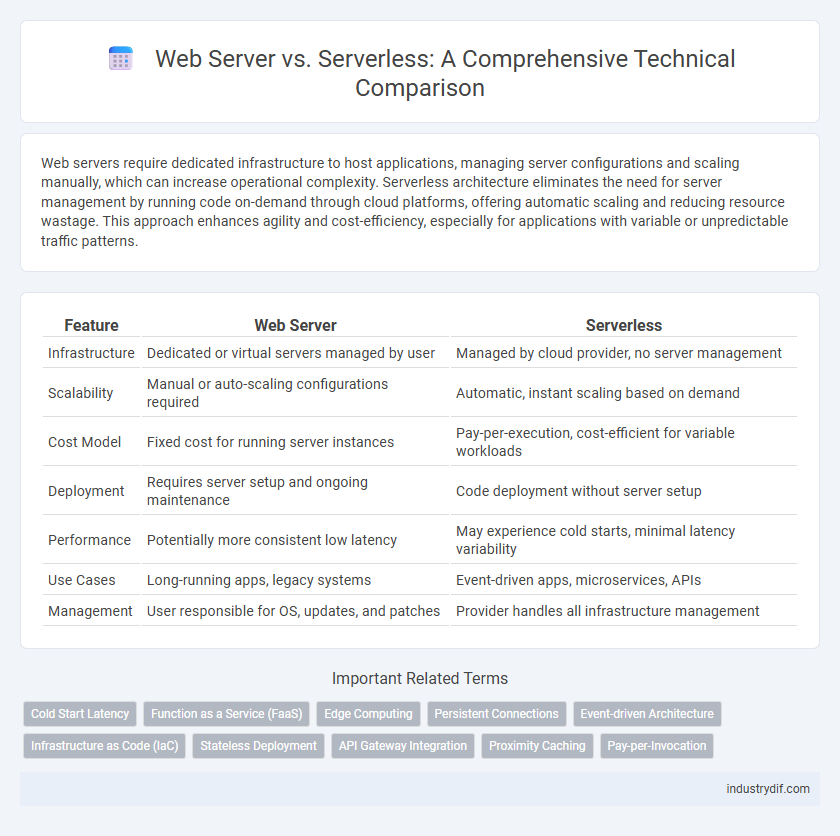

| Feature | Web Server | Serverless |
|---|---|---|
| Infrastructure | Dedicated or virtual servers managed by the user | Managed by the cloud provider, no server management |
| Scalability | Manual scaling or user-configured auto-scaling | Automatic scaling based on demand |
| Cost Model | Fixed cost for running server instances, regardless of traffic | Pay-per-execution, cost-efficient for variable workloads |
| Deployment | Requires server setup and ongoing maintenance | Code deployment without server setup |
| Performance | Consistent low latency from always-running processes | Low latency when warm, but cold starts can add delay after idle periods or during scale-up |
| Use Cases | Long-running apps, legacy systems | Event-driven apps, microservices, APIs |
| Management | User responsible for OS, updates, and patches | Provider handles infrastructure management |

Understanding Traditional Web Servers

Traditional web servers host applications on dedicated hardware or virtual machines, handle HTTP requests, and run as persistent server instances. The operator is responsible for scaling and maintenance, including updates and resource allocation, so that the servers can handle varying traffic loads. Apache, Nginx, and IIS are common examples: they serve static content, handle dynamic requests, and host web applications within infrastructure the operator controls.
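To make the idea of a persistent server instance concrete, here is a minimal sketch using Python's standard `http.server` module. It is not how Apache, Nginx, or IIS are implemented; the `build_body` routing helper, the host, and the port are assumptions chosen for illustration.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def build_body(path: str) -> bytes:
    """Return the response body for a request path (hypothetical routing)."""
    if path == "/":
        return b"Hello from a long-running web server\n"
    return b"Not found\n"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        body = build_body(self.path)
        status = 200 if self.path == "/" else 404
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(host: str = "127.0.0.1", port: int = 8080) -> None:
    # The process stays alive between requests. This is the persistent
    # instance that the operator has to patch, monitor, and scale.
    HTTPServer((host, port), Handler).serve_forever()
```

Calling `serve()` blocks and keeps listening until the process is stopped, which is the key contrast with the on-demand execution model described in the next section.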

What is Serverless Architecture?

Serverless architecture is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources, allowing developers to run code without provisioning or managing servers. It eliminates the need for server infrastructure management by automatically scaling functions in response to demand, enabling efficient resource utilization and cost-effectiveness. Services like AWS Lambda, Azure Functions, and Google Cloud Functions exemplify serverless platforms, facilitating event-driven, scalable, and stateless application development.
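As a rough illustration, a function on one of these platforms is often just a handler that receives an event and returns a response. The sketch below follows the general shape of an AWS Lambda Python handler behind an HTTP proxy integration; the function name `greet_handler` and the `name` query parameter are assumptions made for this example.

```python
import json
from typing import Any


def greet_handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Handle one HTTP-style event and return a proxy-style response."""
    # The platform passes the request as a plain dictionary; there is no
    # socket, port, or server loop for the developer to manage.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}"}),
    }
```

Because the handler is an ordinary function, it can be exercised locally by calling `greet_handler({"queryStringParameters": {"name": "Ada"}}, None)` before it is deployed.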

Core Components: Web Server vs Serverless

Web servers rely on dedicated infrastructure, including hardware, operating systems, and server software like Apache or Nginx, to manage HTTP requests and deliver web content. Serverless computing abstracts server management, leveraging cloud provider-managed functions and event-driven execution without the need for persistent server components. Core components in serverless environments include function runtimes, API gateways, and event triggers that dynamically allocate resources based on demand.

Scalability: Web Server and Serverless Comparison

Web servers scale by adding physical or virtual machines, either manually or through auto-scaling rules that the operator configures. New capacity takes time to come online, so latency often rises during traffic spikes. Serverless architectures scale functions automatically in response to demand and handle concurrent requests without pre-provisioned resources. This automatic, event-driven scalability reduces operational overhead and improves cost efficiency compared to traditional web server setups.
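The capacity planning that a web server operator has to do, and that a serverless platform takes over, can be sketched as a small calculation. The function name, the per-instance capacity, and the target utilization are all hypothetical inputs, not figures from any provider.

```python
import math


def instances_needed(requests_per_second: float,
                     capacity_per_instance: float,
                     target_utilization: float = 0.8) -> int:
    """Estimate how many server instances a given load requires.

    target_utilization leaves spare capacity on each instance so that a
    short burst does not immediately saturate the fleet.
    """
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    usable = capacity_per_instance * target_utilization
    # Always keep at least one instance running, even with no traffic.
    return max(1, math.ceil(requests_per_second / usable))
```

With a server fleet, someone has to rerun this estimate (or encode it in an auto-scaling rule) whenever traffic changes. Note that the result never drops below one instance, which is the idle capacity that serverless billing avoids.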

Deployment Workflow Differences

Web server deployment requires provisioning infrastructure, configuring environments, and managing server maintenance, which can involve manual interventions and longer setup times. Serverless deployment automates scaling and infrastructure management through cloud provider platforms, allowing developers to deploy functions directly without handling underlying servers. This results in faster iteration cycles and simplified operational overhead compared to traditional web server workflows.

Cost Optimization: Server-Based vs Serverless

Server-based architectures incur fixed costs for purchasing, maintaining, and scaling physical or virtual servers, which often leaves resources underutilized during low-traffic periods. Serverless computing uses a pay-as-you-go model that charges only for actual usage, which reduces expenses when traffic fluctuates or the application sits idle. The savings come from automatic scaling and from no longer paying for infrastructure management, although at sustained high volume the per-invocation charges can exceed the cost of a fixed server.
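The trade-off between fixed and usage-based pricing can be worked through with a short calculation. This is a sketch only: the pricing structure (a per-request fee plus a fee per GB-second of compute) is a common serverless billing shape, and every price passed in is a hypothetical placeholder rather than a real provider rate.

```python
def server_monthly_cost(instance_count: int,
                        price_per_instance_month: float) -> float:
    """Fixed cost: paid whether or not the servers receive traffic."""
    return instance_count * price_per_instance_month


def serverless_monthly_cost(invocations: int,
                            avg_duration_s: float,
                            memory_gb: float,
                            price_per_million_requests: float,
                            price_per_gb_second: float) -> float:
    """Usage-based cost: a request fee plus a compute fee per GB-second."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = invocations * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


def break_even_invocations(fixed_monthly_cost: float,
                           avg_duration_s: float,
                           memory_gb: float,
                           price_per_million_requests: float,
                           price_per_gb_second: float) -> float:
    """Monthly invocations at which serverless cost equals the fixed cost."""
    cost_per_invocation = (price_per_million_requests / 1_000_000
                           + avg_duration_s * memory_gb * price_per_gb_second)
    return fixed_monthly_cost / cost_per_invocation
```

Below the break-even volume the usage-based model is cheaper, and above it the fixed server is. Plugging in real prices from a provider's pricing page turns this into a quick sanity check for a specific workload.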

Performance Benchmarks: Latency and Throughput

Web server latency typically ranges from roughly 50 to 200 milliseconds depending on server load and network conditions. Warm serverless invocations can respond in a similar or lower range of roughly 20 to 100 milliseconds, but a cold start adds initialization time on top of that, and latency may also increase under high concurrent invocations.

Throughput in web servers is limited mainly by hardware capacity and thread management; optimized infrastructure can handle thousands of requests per second. Serverless models scale automatically up to the cloud provider's limits and can support tens of thousands of requests per second, with variability caused by cold starts and concurrent execution limits. In short, web servers deliver consistent, predictable latency, while serverless offers dynamic scalability at the cost of occasional latency spikes.
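Because occasional spikes matter more than averages in this comparison, latency is usually summarized with percentiles. The following sketch uses the nearest-rank method; the sample values in the usage note are invented for illustration and are not benchmark results.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Return the pct-th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    # Nearest rank: the smallest value with at least pct% of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]
```

For a made-up series such as `[40, 42, 45, 41, 43, 44, 46, 47, 48, 900]`, where the last value stands in for a cold start, the median stays at 44 while the 99th percentile jumps to 900. That gap is the "occasional latency spike" pattern described above.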

Security Implications in Both Approaches

Web servers require continuous patch management and firewall configurations to mitigate risks like DDoS attacks and unauthorized access, increasing the security management overhead. Serverless architectures offer inherent isolation and automatic scaling, reducing exposure to certain vulnerabilities, but introduce concerns such as function-level access control and potential data leakage through ephemeral storage. Effective security in both models demands robust identity and access management, thorough monitoring, and reliable encryption practices to protect data integrity and privacy.

Use Cases Suitable for Serverless and Web Servers

Serverless architectures excel in event-driven applications, microservices, and APIs requiring rapid scaling with variable workloads, such as real-time data processing and IoT backend services. Traditional web servers are more suitable for monolithic applications, legacy systems, and scenarios demanding full control over the infrastructure, including customized middleware and persistent connections. Choosing the right model depends on workload patterns, deployment complexity, and operational control needs.

Future Trends in Web Hosting Technologies

Web server architecture continues evolving with a strong shift towards serverless computing, driven by scalability, cost efficiency, and reduced operational complexity. Serverless platforms like AWS Lambda and Azure Functions enable event-driven execution, automatically handling resource allocation and scaling, making them ideal for microservices and real-time applications. Emerging trends emphasize edge computing integration and hybrid models that combine traditional web servers with serverless frameworks to optimize latency and resource utilization.

Related Important Terms

Cold Start Latency

Cold start latency in serverless architectures can significantly impact performance due to the time required to initialize functions before handling requests, whereas traditional web servers maintain persistent processes that minimize startup delays. This difference often results in faster initial response times for web servers compared to serverless environments, which may introduce latency spikes during scale-up events.

Function as a Service (FaaS)

Function as a Service (FaaS) enables developers to deploy discrete functions without managing underlying web server infrastructure, allowing automatic scaling and pay-as-you-go billing. Unlike traditional web servers that require provisioning and maintenance, serverless FaaS platforms handle event-driven execution and resource allocation dynamically, optimizing cost-efficiency and scalability for microservices and APIs.

Edge Computing

Edge computing enhances web server efficiency by processing data closer to the user, reducing latency and bandwidth usage, while serverless architectures dynamically allocate resources on demand without managing underlying infrastructure. Combining edge computing with serverless models offers scalable, low-latency solutions optimized for real-time applications and distributed workloads.

Persistent Connections

Web servers maintain persistent connections through protocols like HTTP/1.1 and HTTP/2, enabling efficient reuse of TCP connections and reducing latency for repeated client requests. Serverless architectures typically establish ephemeral connections triggered per function invocation, which limits persistent connection use but scales automatically with event-driven workloads.

Event-driven Architecture

Web servers rely on continuously running processes to handle requests, while serverless architectures utilize event-driven functions that automatically scale based on demand, reducing resource consumption and operational overhead. Event-driven architecture in serverless environments enables precise execution of code in response to triggers such as HTTP requests, database changes, or message queue events, optimizing responsiveness and cost efficiency.
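The trigger-to-function mapping can be sketched as a small dispatcher. On a real platform the provider performs this routing; the event type names (`http.request`, `db.change`, `queue.message`) and the event fields used here are hypothetical.

```python
from typing import Any, Callable

EventHandler = Callable[[dict[str, Any]], str]
_handlers: dict[str, EventHandler] = {}


def on(event_type: str) -> Callable[[EventHandler], EventHandler]:
    """Register a function as the handler for one event type."""
    def register(func: EventHandler) -> EventHandler:
        _handlers[event_type] = func
        return func
    return register


@on("http.request")
def handle_http(event: dict[str, Any]) -> str:
    return f"responded to {event['path']}"


@on("db.change")
def handle_db_change(event: dict[str, Any]) -> str:
    return f"reindexed record {event['record_id']}"


@on("queue.message")
def handle_queue_message(event: dict[str, Any]) -> str:
    return f"processed message {event['message_id']}"


def dispatch(event: dict[str, Any]) -> str:
    """Run only the code registered for this event's trigger type."""
    handler = _handlers.get(event["type"])
    if handler is None:
        raise LookupError(f"no handler for event type {event['type']!r}")
    return handler(event)
```

Each handler runs only when its trigger fires, so code that receives no events consumes no compute. That is the source of the cost and resource savings described above.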

Infrastructure as Code (IaC)

Infrastructure as Code (IaC) enables automated provisioning and management of both web server environments and serverless architectures through declarative templates, reducing manual configuration errors. Serverless platforms abstract underlying infrastructure, allowing IaC tools like Terraform and AWS CloudFormation to focus on deploying functions and event-driven resources instead of traditional server instances.

Stateless Deployment

Serverless functions are stateless by design: each invocation runs independently, and the platform does not guarantee that anything held in memory survives to the next request. State that must persist is kept in an external store. Web servers can hold state in process memory, but doing so complicates scaling and resilience, so session data is usually moved to shared session storage or a database.
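A stateless function keeps nothing between calls and reads or writes whatever it needs through an external store. In this sketch, `InMemoryStore` is a local stand-in for such a store (in practice a database or cache service), and the `session_id` field is an assumed event attribute.

```python
from typing import Any, Optional


class InMemoryStore:
    """Local stand-in for an external store such as a database or cache."""

    def __init__(self) -> None:
        self._data: dict[str, int] = {}

    def get(self, key: str) -> Optional[int]:
        return self._data.get(key)

    def put(self, key: str, value: int) -> None:
        self._data[key] = value


def count_visit(event: dict[str, Any], store: InMemoryStore) -> dict[str, Any]:
    """Increment a per-session visit counter held entirely in the store."""
    session_id = event["session_id"]
    # The function itself remembers nothing; any instance could serve
    # the next request for this session and see the same count.
    visits = (store.get(session_id) or 0) + 1
    store.put(session_id, visits)
    return {"session_id": session_id, "visits": visits}
```

Because all state goes through the store, any number of function instances can run in parallel without coordinating with one another. The same design also lets a fleet of web servers scale horizontally.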

API Gateway Integration

API Gateway integration with a traditional web server requires managing server instances, load balancing, and scaling to handle API requests, whereas serverless architectures allow event-driven execution without provisioning or maintaining servers, automatically scaling based on demand. This eliminates idle resource costs and simplifies deployment by abstracting infrastructure management through native cloud provider services like AWS Lambda or Azure Functions.

Proximity Caching

Proximity caching in web servers reduces latency by storing frequently accessed data closer to the client, improving response times and bandwidth efficiency. Serverless architectures leverage distributed caching layers within edge locations to dynamically scale and deliver content with minimal overhead, optimizing real-time data access.
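The core mechanism of such a cache is a lookup with an expiry time in front of a slower origin fetch. The sketch below is a minimal time-to-live cache, not the implementation used by any CDN or edge platform; `TTLCache`, `fetch`, and the `origin` callback are hypothetical names.

```python
import time
from typing import Callable, Optional


class TTLCache:
    """Cache entries for a fixed number of seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._entries: dict[str, tuple[float, bytes]] = {}

    def get(self, key: str) -> Optional[bytes]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: force a refresh from the origin
            return None
        return value

    def put(self, key: str, value: bytes) -> None:
        self._entries[key] = (self._clock() + self._ttl, value)


def fetch(key: str, cache: TTLCache,
          origin: Callable[[str], bytes]) -> bytes:
    """Serve from the nearby cache when possible, otherwise ask the origin."""
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = origin(key)
    cache.put(key, value)
    return value
```

Repeated requests for the same key within the time-to-live never reach the origin, which is where the latency and bandwidth savings come from. A shorter time-to-live trades some of that saving for fresher data.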

Pay-per-Invocation

Pay-per-invocation pricing in serverless architectures charges only for actual function executions, enhancing cost efficiency compared to traditional web servers that incur fixed resource costs regardless of traffic. This model optimizes operational expenses by aligning expenses directly with workload, reducing overhead from idle server capacity.


industrydif.com
