On-premise computing houses servers at a company's own site, giving the organization full control over data and infrastructure and keeping traffic on the local network. Edge computing processes data close to its source, such as IoT devices, which cuts latency and bandwidth use by running real-time analytics locally. Both approaches keep processing near the organization's own data, but edge computing is best suited to applications that need immediate processing at the point where data is generated.

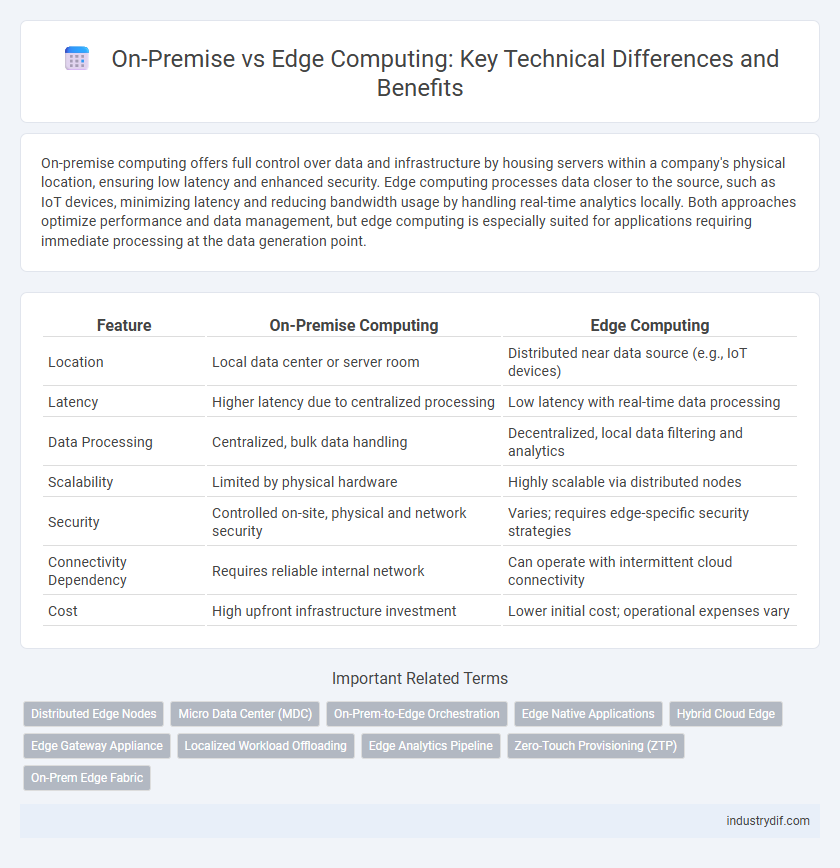

Table of Comparison

| Feature | On-Premise Computing | Edge Computing |
|---|---|---|
| Location | Local data center or server room | Distributed near data source (e.g., IoT devices) |
| Latency | Higher latency due to centralized processing | Low latency with real-time data processing |
| Data Processing | Centralized, bulk data handling | Decentralized, local data filtering and analytics |
| Scalability | Limited by physical hardware | Highly scalable via distributed nodes |
| Security | Controlled on-site, physical and network security | Varies; requires edge-specific security strategies |
| Connectivity Dependency | Requires reliable internal network | Can operate with intermittent cloud connectivity |
| Cost | High upfront infrastructure investment | Lower initial cost; operational expenses vary |

Introduction to On-Premise and Edge Computing

On-premise computing involves hosting data and applications within a company's local servers and infrastructure, providing direct control over security and data management. Edge computing processes data closer to the source or end-user device, reducing latency and bandwidth usage by handling computation near data generation points. Both approaches serve specific technical needs, balancing control, performance, and scalability depending on organizational priorities and application requirements.

Core Architecture: On-Premise vs Edge Computing

On-premise computing architecture centralizes data processing within an organization's local data centers, ensuring full control over infrastructure, security protocols, and compliance requirements. Edge computing distributes processing tasks closer to data sources at the network edge, reducing latency and bandwidth usage while enabling real-time analytics and responsiveness for IoT and AI applications. The core architectural difference lies in centralized resource management versus decentralized, localized data processing, impacting system scalability, maintenance complexity, and operational cost.

Deployment Scenarios in Industrial Settings

On-premise computing in industrial settings involves deploying servers and infrastructure directly within the facility, ensuring complete control over data security and low-latency processing for mission-critical applications. Edge computing enhances this by distributing compute resources closer to data sources such as IoT sensors and machinery, enabling real-time analytics and predictive maintenance with reduced network dependency. Deployment scenarios often combine both approaches to optimize operational efficiency, balancing centralized control with the agility of localized data processing.

Data Processing and Latency Considerations

On-premise computing centralizes data processing within an organization's local infrastructure, ensuring high control and security but often introducing higher latency due to data traversal to core systems. Edge computing processes data closer to the source, significantly reducing latency and enabling real-time analytics, which is critical for applications requiring immediate decision-making, such as IoT and autonomous systems. Latency-sensitive tasks benefit from edge computing's proximity to data generation points, while on-premise solutions suit workloads demanding stringent data governance and integration with existing enterprise systems.
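The latency trade-off can be made concrete with a rough round-trip estimate. The sketch below is illustrative only: the distances, hop counts, per-hop delays, and processing times are hypothetical, and the 200 km/ms figure is the usual rule of thumb for signal speed in optical fiber. It compares an edge node beside a machine with a central on-premise data center that serves a remote plant.

```python
# Rule of thumb: light in optical fiber covers roughly 200 km per millisecond.
SPEED_IN_FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km, hops, per_hop_ms, processing_ms):
    """Estimate request/response latency as propagation + per-hop delay + compute."""
    propagation = 2 * distance_km / SPEED_IN_FIBER_KM_PER_MS
    forwarding = 2 * hops * per_hop_ms
    return propagation + forwarding + processing_ms


def meets_deadline(latency_ms, deadline_ms):
    """Return True when the estimated latency fits inside the control deadline."""
    return latency_ms <= deadline_ms


# Hypothetical figures: an edge node 100 m from the sensor versus a
# central data center 300 km away, reached through 8 network hops.
edge = round_trip_ms(distance_km=0.1, hops=1, per_hop_ms=0.2, processing_ms=4.0)
central = round_trip_ms(distance_km=300.0, hops=8, per_hop_ms=0.5, processing_ms=2.0)

DEADLINE_MS = 10.0  # assumed budget for a real-time control loop
print(f"edge:    {edge:.2f} ms, within deadline: {meets_deadline(edge, DEADLINE_MS)}")
print(f"central: {central:.2f} ms, within deadline: {meets_deadline(central, DEADLINE_MS)}")
```

Under these assumed numbers the central site has the faster processor yet still misses the deadline, because network distance and hop count dominate the total.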

Security and Compliance in Technical Environments

On-premise computing offers direct control over data security and compliance by housing servers within the organization's physical environment, which reduces exposure to external threats and helps meet strict regulatory requirements. Edge computing keeps sensitive data near its source and limits how much of it crosses the network, but every additional node widens the attack surface and makes compliance harder to maintain across decentralized sites. Implementing robust encryption, access controls, and continuous monitoring is critical for both models to ensure adherence to standards like GDPR, HIPAA, and PCI DSS in technical environments.

Scalability and Resource Optimization

On-premise computing offers centralized control but often faces scalability constraints due to fixed hardware resources and physical infrastructure limits. Edge computing enhances scalability by distributing workloads across multiple edge devices, optimizing resource utilization and reducing latency for real-time data processing. Organizations achieve better resource optimization by leveraging edge computing's ability to dynamically allocate compute power closer to data sources, minimizing bandwidth use and improving system responsiveness.

Maintenance and Operational Overhead

On-premise computing requires significant maintenance effort, including hardware upgrades, software patching, and infrastructure monitoring, which leads to higher operational overhead. Edge computing reduces the load on the central data center by moving processing closer to data sources, but it replaces that work with the task of managing many distributed nodes. Automated updates and remote management tools are what keep maintenance complexity and operational costs manageable in edge environments.

Integration with Legacy Systems

On-premise computing integrates readily with legacy systems because the organization directly controls the hardware and network infrastructure, which allows tailored configurations and compatibility with existing enterprise software. Edge computing, while enhancing real-time data processing by deploying resources closer to data sources, may face challenges integrating legacy systems that rely on centralized data centers, often requiring middleware or protocol translation layers. Hybrid architectures combining on-premise stability with edge computing's low latency can optimize legacy system performance while supporting modern IoT and AI workloads.
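A protocol translation layer of the kind mentioned above can be sketched in a few lines. The binary frame layout here is hypothetical (a 16-bit device ID, a signed 16-bit temperature in tenths of a degree, and a 32-bit Unix timestamp, all big-endian); a real legacy device would define its own format. The point is only to show the shape of the adapter: fixed-width binary in, self-describing JSON out.

```python
import json
import struct

# Hypothetical legacy frame: uint16 device id, int16 temperature (tenths of a
# degree Celsius), uint32 Unix timestamp, big-endian with no padding (8 bytes).
LEGACY_FRAME = struct.Struct(">HhI")


def translate_frame(frame: bytes) -> str:
    """Convert one fixed-width legacy frame into a JSON message."""
    # unpack() raises struct.error if the frame is not exactly 8 bytes long.
    device_id, temp_tenths, timestamp = LEGACY_FRAME.unpack(frame)
    message = {
        "device_id": device_id,
        "temperature_c": temp_tenths / 10,
        "timestamp": timestamp,
    }
    return json.dumps(message, sort_keys=True)


# Build a sample frame the way the assumed legacy device would emit it.
sample = LEGACY_FRAME.pack(7, 215, 1700000000)
print(translate_frame(sample))
```

Keeping the layout in a single `struct.Struct` definition means a change in the legacy format touches one line rather than every consumer downstream.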

Cost Analysis: On-Premise vs Edge Computing

On-premise computing involves significant upfront capital expenses for hardware and infrastructure, plus ongoing maintenance, which can result in a higher total cost of ownership than edge computing. Edge computing reduces bandwidth costs by processing data locally, minimizing data transfer to central servers and enabling cost-efficient scalability for IoT and real-time applications. Operational expenses for edge deployments can be lower when local processing cuts data transfer and cloud service fees, though they vary with the number of sites to manage; where that holds, edge computing is the more cost-effective option for distributed data processing.
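A simple cumulative-cost comparison shows how the upfront and recurring components interact. All figures below are made up for illustration and do not describe any real deployment; the only formula assumed is capital expense plus annual operating expense multiplied by the number of years.

```python
def cumulative_cost(capex, annual_opex, years):
    """Total spend after a number of years: upfront cost plus recurring cost."""
    return capex + annual_opex * years


# Hypothetical figures, chosen only to illustrate the calculation.
ON_PREM = {"capex": 500_000, "annual_opex": 60_000}
EDGE = {"capex": 150_000, "annual_opex": 140_000}

for years in (1, 3, 5):
    on_prem_total = cumulative_cost(**ON_PREM, years=years)
    edge_total = cumulative_cost(**EDGE, years=years)
    cheaper = "edge" if edge_total < on_prem_total else "on-premise"
    print(f"year {years}: on-premise {on_prem_total:,} vs edge {edge_total:,} -> {cheaper}")
```

With these assumed inputs the lower upfront cost favors edge in the early years, while the outcome over a longer horizon depends on how the recurring costs compare, so the planning period matters as much as the price tags.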

Future Trends in Industrial Computing

Future trends in industrial computing emphasize the integration of on-premise infrastructure with edge computing to maximize real-time data processing and reduce latency. Industries are increasingly adopting hybrid models that combine centralized on-premise data centers with distributed edge nodes for enhanced operational efficiency and predictive maintenance. Advances in 5G, AI-powered analytics, and IoT sensors will drive the shift toward edge computing, enabling smarter, faster decision-making in manufacturing and automation environments.

Related Important Terms

Distributed Edge Nodes

Distributed edge nodes in edge computing reduce latency by processing data closer to the source, enhancing real-time analytics and operational efficiency compared to centralized on-premise data centers. This decentralized architecture supports scalable IoT deployments and improves fault tolerance by minimizing reliance on a single data center.

Micro Data Center (MDC)

Micro Data Centers (MDCs) enhance edge computing by delivering localized processing power, low-latency data handling, and improved security compared to traditional on-premise data centers. Their compact design supports rapid deployment in remote or constrained environments, optimizing real-time analytics and reducing bandwidth costs.

On-Prem-to-Edge Orchestration

On-Prem-to-Edge orchestration enables seamless management and coordination of workloads between centralized on-premise data centers and distributed edge devices, optimizing latency and bandwidth efficiency. Leveraging containerization and automation tools, this hybrid approach ensures real-time data processing while maintaining data sovereignty and compliance.
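As a minimal sketch of the placement decision an orchestrator makes, the function below routes a workload to the edge only when its latency budget rules out the on-premise data center. The `Workload` type, the `place` function, and the 40 ms on-premise round trip are hypothetical names and values chosen for illustration; production orchestrators weigh many more factors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    max_latency_ms: float  # tightest response time the workload tolerates
    cpu_cores: int         # compute it needs to run


def place(workload, edge_free_cores, on_prem_latency_ms=40.0):
    """Pick a location: edge only when the latency budget demands it."""
    needs_edge = workload.max_latency_ms < on_prem_latency_ms
    if not needs_edge:
        return "on-prem"   # the central site is fast enough and has more capacity
    if workload.cpu_cores <= edge_free_cores:
        return "edge"
    return "rejected"      # too latency-sensitive for on-prem, too big for the edge


jobs = [
    Workload("vision-inspection", max_latency_ms=10.0, cpu_cores=2),
    Workload("monthly-report", max_latency_ms=5000.0, cpu_cores=8),
    Workload("large-model", max_latency_ms=10.0, cpu_cores=16),
]
for job in jobs:
    print(job.name, "->", place(job, edge_free_cores=4))
```

Defaulting to on-premise and treating the edge as the exception reflects the assumption that edge capacity is the scarcer resource.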

Edge Native Applications

Edge native applications leverage localized data processing and low-latency capabilities of edge computing to enhance real-time decision-making and operational efficiency beyond traditional on-premise data centers. These applications are specifically designed to run on distributed edge environments, enabling improved scalability and reduced bandwidth usage compared to centralized on-premise infrastructures.

Hybrid Cloud Edge

Hybrid Cloud Edge combines on-premise infrastructure with edge computing to optimize data processing by reducing latency and improving bandwidth efficiency while maintaining data security. This architecture enables seamless integration between centralized cloud resources and distributed edge devices, enhancing real-time analytics and operational agility.

Edge Gateway Appliance

Edge gateway appliances enhance edge computing by processing data locally, reducing latency and bandwidth usage compared to traditional on-premise servers. These devices enable real-time analytics and secure data transmission at the network perimeter, optimizing performance for IoT and industrial applications.

Localized Workload Offloading

Localized workload offloading in edge computing significantly reduces latency and bandwidth usage by processing data closer to the source, unlike on-premise solutions that centralize computation within a fixed facility. This decentralized approach enhances real-time analytics and improves system resilience by distributing computing resources across multiple edge nodes.

Edge Analytics Pipeline

Edge analytics pipelines process data locally on edge devices, reducing latency and bandwidth usage by filtering and analyzing information in real-time before sending relevant insights to centralized systems. This enables faster decision-making and improves performance for applications requiring immediate data processing compared to traditional on-premise setups that rely heavily on central data centers.
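The filter-then-forward pattern described here might look like the following sketch. The window size, the anomaly threshold, and the function names are assumptions made for the example; the idea is that the edge device reduces each window of raw readings to a small summary and sends only that upstream.

```python
from statistics import mean


def summarize_window(readings, threshold):
    """Reduce one window of raw readings to a compact summary."""
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        # Only readings above the threshold are forwarded individually.
        "anomalies": [r for r in readings if r > threshold],
    }


def edge_pipeline(stream, window_size, threshold):
    """Yield one summary per full window, plus one for any trailing partial window."""
    window = []
    for reading in stream:
        window.append(reading)
        if len(window) == window_size:
            yield summarize_window(window, threshold)
            window = []
    if window:
        yield summarize_window(window, threshold)


# Hypothetical temperature readings from a single sensor.
raw = [20.0, 21.0, 19.0, 95.0, 20.0, 22.0]
summaries = list(edge_pipeline(raw, window_size=3, threshold=80.0))
for summary in summaries:
    print(summary)
```

Six raw readings become two summaries, and the one out-of-range value still reaches the central system in full.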

Zero-Touch Provisioning (ZTP)

Zero-Touch Provisioning (ZTP) streamlines device deployment by automating configuration and onboarding processes, significantly reducing manual intervention in both on-premise and edge computing environments. In edge computing, ZTP enhances scalability and responsiveness by enabling rapid, remote provisioning of distributed devices, whereas on-premise setups benefit from centralized control and security during initial device configuration.
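The core of a zero-touch flow is a lookup: a device identifies itself, and the provisioning service returns a configuration without anyone touching the device. The sketch below models only that lookup with in-memory dictionaries. The serial numbers, site names, settings, and the `provision` function are all hypothetical; a real ZTP service would also authenticate the device and deliver the configuration over the network.

```python
# Hypothetical inventory and settings, kept in memory for illustration.
BASE_CONFIG = {"ntp_server": "ntp.example.internal", "log_level": "info"}
SITE_OVERRIDES = {"plant-a": {"log_level": "debug"}}
INVENTORY = {"SN-0001": "plant-a", "SN-0002": "plant-b"}


def provision(serial):
    """Build the configuration for a device from its serial number alone."""
    site = INVENTORY.get(serial)
    if site is None:
        # Unknown devices get no configuration at all.
        raise LookupError(f"unknown device serial: {serial}")
    # Site-specific settings override the shared defaults.
    return {**BASE_CONFIG, **SITE_OVERRIDES.get(site, {}), "site": site, "serial": serial}


print(provision("SN-0001"))
print(provision("SN-0002"))
```

Layering site overrides on top of shared defaults keeps per-device data down to a single inventory entry, which is what makes provisioning many distributed devices practical.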

On-Prem Edge Fabric

On-Prem Edge Fabric integrates localized computing resources directly within enterprise premises, enabling low-latency data processing and enhanced security compared to traditional cloud setups. By distributing workloads closer to data sources, it optimizes bandwidth usage and supports real-time analytics crucial for industrial IoT and mission-critical applications.

On-Premise vs Edge Computing Infographic

industrydif.com
