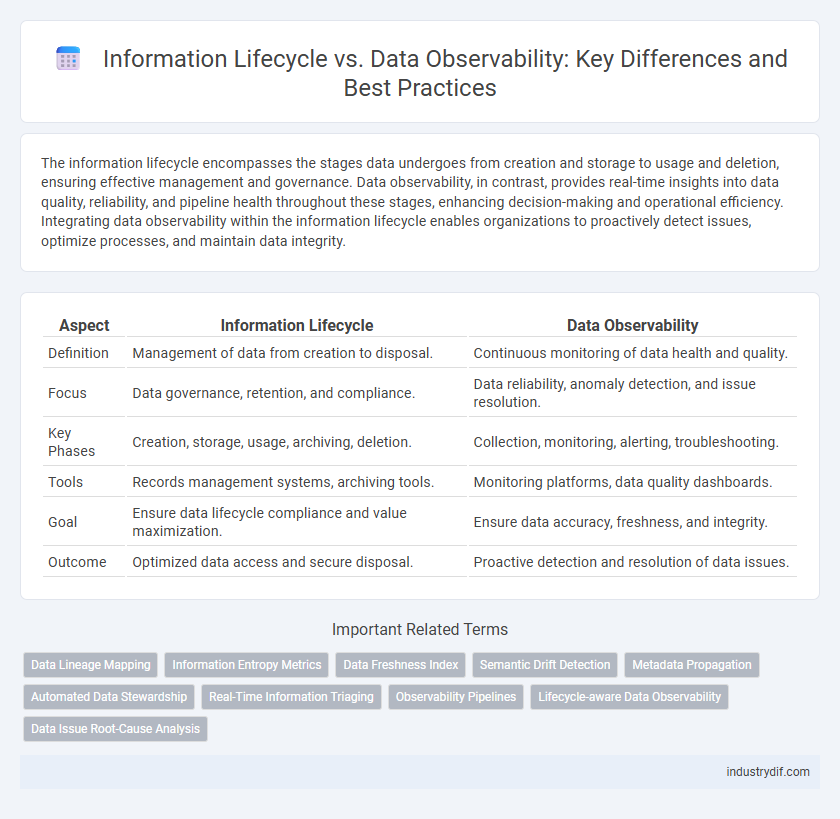

The information lifecycle encompasses the stages data undergoes from creation and storage to usage and deletion, ensuring effective management and governance. Data observability, in contrast, provides real-time insights into data quality, reliability, and pipeline health throughout these stages, enhancing decision-making and operational efficiency. Integrating data observability within the information lifecycle enables organizations to proactively detect issues, optimize processes, and maintain data integrity.

Table of Comparison

| Aspect | Information Lifecycle | Data Observability |
|---|---|---|
| Definition | Management of data from creation to disposal. | Continuous monitoring of data health and quality. |
| Focus | Data governance, retention, and compliance. | Data reliability, anomaly detection, and issue resolution. |
| Key Phases | Creation, storage, usage, archiving, deletion. | Collection, monitoring, alerting, troubleshooting. |
| Tools | Records management systems, archiving tools. | Monitoring platforms, data quality dashboards. |
| Goal | Ensure data lifecycle compliance and value maximization. | Ensure data accuracy, freshness, and integrity. |
| Outcome | Optimized data access and secure disposal. | Proactive detection and resolution of data issues. |

Understanding the Information Lifecycle

The information lifecycle covers how data is managed from its creation and initial storage, through use and maintenance, to eventual archiving or deletion. Data observability complements this lifecycle by continuously monitoring data quality, freshness, and lineage so that the data stays reliable enough to support decisions. Combining information lifecycle management with data observability strengthens data governance, reduces risk, and improves operational efficiency.

Key Stages of the Information Lifecycle

The information lifecycle has five key stages: creation, storage, usage, archiving, and deletion. Each stage hands data on to the next, so a problem introduced early can carry through the rest of the data's lifespan. Observability checks on quality, integrity, and lineage can run at each stage to detect anomalies before they propagate, which improves data reliability, governance, and operational efficiency.
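One way to picture these stages is as a small state machine. The sketch below is illustrative only: the `Stage` enum, the `ALLOWED` map, and the `advance` helper are hypothetical names, and the permitted transitions (for example, that archived data may return to usage) are assumptions rather than rules taken from any standard.

```python
from enum import Enum


class Stage(Enum):
    CREATION = "creation"
    STORAGE = "storage"
    USAGE = "usage"
    ARCHIVING = "archiving"
    DELETION = "deletion"


# Assumed transition rules; adjust them to your own retention policy.
ALLOWED = {
    Stage.CREATION: {Stage.STORAGE},
    Stage.STORAGE: {Stage.USAGE, Stage.ARCHIVING, Stage.DELETION},
    Stage.USAGE: {Stage.STORAGE, Stage.ARCHIVING, Stage.DELETION},
    Stage.ARCHIVING: {Stage.USAGE, Stage.DELETION},
    Stage.DELETION: set(),  # terminal stage
}


def advance(current: Stage, target: Stage) -> Stage:
    """Return the new stage, or raise ValueError if the move is not allowed."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target
```

Encoding the transitions explicitly makes it possible to reject, say, a request to read data that has already been deleted.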

Defining Data Observability in Modern Systems

Data observability in modern systems refers to the comprehensive ability to understand the health and state of data by monitoring its quality, lineage, and infrastructure metrics throughout the entire data lifecycle. It integrates real-time analytics, automated anomaly detection, and metadata management to ensure data reliability and operational efficiency. Emphasizing continuous visibility and proactive issue resolution, data observability supports improved decision-making and compliance in complex, distributed data environments.

Core Pillars of Data Observability

Data observability, as framed here, rests on three core pillars: metrics, metadata, and lineage. Together they form a framework for monitoring data quality throughout the information lifecycle. Metrics track data health in real time and surface anomalies early, while metadata provides context on data origins, transformations, and usage patterns. Lineage maps how data flows across systems, which supports transparency and accountability in data governance and speeds up issue resolution.
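As a rough illustration of the metrics pillar, the sketch below flags a metric value that deviates strongly from its recent history using a z-score. The function name `is_anomalous`, the sample row counts, and the threshold of 3 standard deviations are assumptions chosen for the example; production tools typically use more robust methods.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it is more than `threshold` sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a constant history is notable
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily row counts for one table.
daily_row_counts = [1000, 1020, 980, 1010, 990]
print(is_anomalous(daily_row_counts, 1005))  # close to the usual volume
print(is_anomalous(daily_row_counts, 200))   # sharp drop in volume
```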

Comparisons: Information Lifecycle vs Data Observability

The information lifecycle encompasses the stages of data creation, storage, usage, archival, and deletion, ensuring data is managed efficiently throughout its existence. Data observability focuses on monitoring, health checks, and quality assessment of data in real time, enabling prompt detection of anomalies and ensuring data reliability. While the information lifecycle emphasizes process management and governance over time, data observability centers on continuous insight and operational visibility into data systems.

Benefits of Managing the Information Lifecycle

Effective management of the information lifecycle ensures data accuracy, compliance, and accessibility throughout its existence, reducing risks and operational costs. Integrating data observability enhances visibility into data quality and system health, enabling proactive issue detection and faster resolution. Organizations gain improved decision-making capabilities and increased trust in their data assets by controlling each phase from creation to archival.

Advantages of Implementing Data Observability

Implementing data observability enhances the information lifecycle by providing continuous monitoring, anomaly detection, and faster root cause analysis, ensuring data reliability and quality throughout. This proactive approach reduces downtime and mitigates risks associated with corrupted or missing data, leading to more accurate insights and decision-making. Data observability tools also streamline compliance and governance by maintaining transparent data lineage and audit trails.

Challenges in Information Lifecycle Management

Challenges in Information Lifecycle Management include data silos, inconsistent data quality, and difficulties in tracking data provenance, which hinder effective data governance. Managing data growth and ensuring compliance with evolving regulatory requirements further complicate lifecycle processes. Integrating advanced Data Observability tools helps address these issues by providing real-time monitoring, anomaly detection, and enhanced data lineage visibility.

Integrating Data Observability into the Information Lifecycle

Integrating data observability into the information lifecycle enhances data quality, reliability, and governance by continuously monitoring data health across ingestion, storage, processing, and consumption stages. This integration enables early detection of anomalies, reducing data downtime and ensuring accurate insights for analytics and decision-making. By embedding observability tools within the lifecycle, organizations achieve proactive data management and streamline compliance with regulatory requirements.

Future Trends: Merging Information Lifecycle and Data Observability

Emerging trends in information management point to a convergence of the information lifecycle and data observability to improve data accuracy, governance, and usability. The integration relies on real-time monitoring, automated metadata capture, and adaptive data policies to maintain data quality across lifecycle stages, from creation and storage to usage and archiving. Advances in AI-driven analytics and machine learning are enabling proactive anomaly detection and predictive insights, fostering a more resilient and transparent information ecosystem.

Related Important Terms

Data Lineage Mapping

Data lineage mapping plays a crucial role in the information lifecycle by visually tracing the origin, movement, and transformation of data across systems, ensuring accurate data governance and compliance. Integrating data observability tools enhances lineage mapping by continuously monitoring data quality and detecting anomalies throughout its lifecycle stages, increasing transparency and operational efficiency.
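A lineage map can be represented as a directed graph. The sketch below assumes a hypothetical `LINEAGE` dictionary in which each dataset lists the datasets it is derived from; the dataset names and the `upstream` helper are invented for illustration.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets it is derived from.
LINEAGE = {
    "sales_dashboard": ["sales_agg"],
    "sales_agg": ["orders_clean", "customers_clean"],
    "orders_clean": ["orders_raw"],
    "customers_clean": ["customers_raw"],
    "orders_raw": [],
    "customers_raw": [],
}


def upstream(dataset: str, lineage: dict[str, list[str]]) -> set[str]:
    """Return every dataset that `dataset` depends on, directly or indirectly."""
    seen: set[str] = set()
    queue = deque(lineage.get(dataset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue  # already visited via another path
        seen.add(node)
        queue.extend(lineage.get(node, []))
    return seen


print(sorted(upstream("sales_agg", LINEAGE)))
```

The same traversal run in the opposite direction (children instead of parents) would give the downstream impact of a change.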

Information Entropy Metrics

Information entropy metrics measure the uncertainty and disorder within data throughout the information lifecycle, providing critical insights into data quality and transformation stages. These metrics enhance data observability by quantifying information loss, enabling more precise monitoring, anomaly detection, and efficient data management across collection, processing, storage, and analysis phases.
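A common concrete form of such a metric is Shannon entropy, $H = -\sum_i p_i \log_2 p_i$, where $p_i$ is the observed share of each distinct value in a column. The sketch below computes it for a list of values; the function name `shannon_entropy` and the sample columns are assumptions for illustration. A marked drop in entropy between two pipeline stages may hint that variety was lost in a transformation, though it is not proof on its own.

```python
import math
from collections import Counter


def shannon_entropy(values) -> float:
    """Shannon entropy, in bits, of the empirical distribution of `values`."""
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return 0.0  # an empty column carries no information
    probabilities = (count / total for count in counts.values())
    return sum(-p * math.log2(p) for p in probabilities)


# Hypothetical column before and after a transformation step.
before = ["a", "b", "a", "b"]
after = ["a", "a", "a", "a"]
print(shannon_entropy(before), shannon_entropy(after))
```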

Data Freshness Index

The Information Lifecycle encompasses stages from data creation and storage to archiving and deletion, emphasizing the consistent update and accuracy of data assets. The Data Freshness Index within Data Observability specifically measures the time lag between data generation and its availability for analysis, ensuring timely insights and operational efficiency.
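The time lag described above can be computed directly from two timestamps. The sketch below is a minimal example; the function names, the sample timestamps, and the 30-minute threshold are assumptions, and a real index might aggregate this lag across many records.

```python
from datetime import datetime, timedelta, timezone


def freshness_lag(generated_at: datetime, available_at: datetime) -> timedelta:
    """Time between when data was generated and when it became available for analysis."""
    return available_at - generated_at


def is_stale(lag: timedelta, max_lag: timedelta) -> bool:
    """True when the lag exceeds the agreed freshness threshold."""
    return lag > max_lag


# Hypothetical timestamps for one batch of records.
generated = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
available = datetime(2024, 1, 1, 12, 45, tzinfo=timezone.utc)

lag = freshness_lag(generated, available)
print(lag, is_stale(lag, max_lag=timedelta(minutes=30)))
```

Using timezone-aware timestamps avoids spurious lag when the producing and consuming systems run in different time zones.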

Semantic Drift Detection

Semantic drift detection plays a crucial role in the information lifecycle by identifying changes in data meaning over time, ensuring the accuracy and relevance of analytical insights. Integrating semantic drift detection within data observability frameworks enhances monitoring capabilities, helping organizations maintain data integrity and avoid erroneous conclusions from evolving data semantics.
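Changes in meaning are hard to measure directly, so one simple proxy is to compare how the values of a field are distributed in two time windows. The sketch below uses total variation distance for that comparison. This is a swapped-in technique chosen for illustration, not a method named by the article, and the function name and sample data are hypothetical.

```python
from collections import Counter


def total_variation(old: list[str], new: list[str]) -> float:
    """Total variation distance between two empirical distributions.

    0.0 means the value shares are identical; 1.0 means no overlap at all.
    """
    if not old or not new:
        raise ValueError("both samples must be non-empty")
    p, q = Counter(old), Counter(new)
    keys = set(p) | set(q)
    # Counter returns 0 for values missing from one of the windows.
    return 0.5 * sum(abs(p[k] / len(old) - q[k] / len(new)) for k in keys)


# Hypothetical "status" field sampled in two different months.
last_month = ["active", "active", "closed", "closed"]
this_month = ["active", "paused", "paused", "paused"]
print(total_variation(last_month, this_month))
```

A large distance only signals that the field is being used differently; deciding whether its meaning has actually changed still needs human review.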

Metadata Propagation

Metadata propagation ensures consistent context and traceability throughout the information lifecycle, enabling precise data quality monitoring and root cause analysis in data observability. Effective metadata management bridges gaps between data ingestion, transformation, and consumption, enhancing transparency and reliability across information systems.

Automated Data Stewardship

Automated data stewardship integrates seamlessly with the information lifecycle, enhancing data quality and governance through continuous monitoring, anomaly detection, and real-time metadata updates. This synergy between data observability tools and lifecycle management reduces manual intervention, ensuring compliance and accelerating decision-making processes.

Real-Time Information Triaging

Real-time information triaging is crucial in the information lifecycle, enabling immediate identification, categorization, and prioritization of data anomalies to maintain data quality and operational efficiency. Data observability tools provide continuous monitoring and alerting, ensuring that critical information is accurately processed and swiftly addressed throughout the entire data pipeline.
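The prioritization step can be sketched with a priority queue. In the example below, the severity levels, the `Alert` class, and the `triage` function are hypothetical; real alerting tools define their own severity schemes.

```python
import heapq
from dataclasses import dataclass, field

# Assumed severity scheme: a lower number is handled first.
SEVERITY = {"critical": 0, "warning": 1, "info": 2}


@dataclass(order=True)
class Alert:
    priority: int
    message: str = field(compare=False)  # ordering uses priority only


def triage(raw_alerts: list[tuple[str, str]]) -> list[str]:
    """Return alert messages ordered from most to least severe."""
    heap = [Alert(SEVERITY[level], message) for level, message in raw_alerts]
    heapq.heapify(heap)
    return [heapq.heappop(heap).message for _ in range(len(heap))]


incoming = [
    ("info", "schema comment updated"),
    ("critical", "orders table received zero rows"),
    ("warning", "load finished 20 minutes late"),
]
print(triage(incoming))
```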

Observability Pipelines

Observability pipelines support the information lifecycle by continuously collecting, transforming, and routing data so that teams have real-time visibility into quality across distributed systems. In complex data environments, they improve data reliability and speed up anomaly detection, both of which are essential for maintaining information integrity.
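The collect, transform, and route steps can be sketched as three chained functions. Everything in the example below is hypothetical: the event shape, the rule that a load with zero rows counts as unhealthy, and the two sink names.

```python
from typing import Iterable, Iterator


def collect(events: Iterable[dict]) -> Iterator[dict]:
    """Read raw events; copy each one so later steps do not mutate the source."""
    for event in events:
        yield dict(event)


def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Enrich each event with a simple health flag (assumed rule: rows > 0)."""
    for event in events:
        event["ok"] = event.get("rows", 0) > 0
        yield event


def route(events: Iterable[dict]) -> dict[str, list[dict]]:
    """Send unhealthy events to 'alerts' and healthy ones to 'archive'."""
    sinks: dict[str, list[dict]] = {"alerts": [], "archive": []}
    for event in events:
        sinks["archive" if event["ok"] else "alerts"].append(event)
    return sinks


raw_events = [{"table": "orders", "rows": 10}, {"table": "customers", "rows": 0}]
result = route(transform(collect(raw_events)))
print(result["alerts"])
```

Because the first two steps are generators, events flow through one at a time rather than being buffered between stages.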

Lifecycle-aware Data Observability

Lifecycle-aware data observability integrates monitoring and quality checks throughout every phase of the information lifecycle, from data ingestion and transformation to storage and usage, ensuring continuous accuracy and reliability. This approach enables proactive detection of anomalies and lineage tracking, enhancing data governance and operational efficiency in dynamic data environments.

Data Issue Root-Cause Analysis

Data issue root-cause analysis in the information lifecycle centers on identifying, tracing, and resolving anomalies within data from ingestion to consumption phases. Data observability platforms enhance this process by continuously monitoring metrics, logs, and traces, enabling rapid detection and accurate diagnosis of data quality problems at various lifecycle stages.
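One simple tracing heuristic is to look for failing datasets whose direct parents are all healthy, since the problem most likely started there. The sketch below assumes every dataset in the lineage has its own quality check; the `parents` map, dataset names, and `root_causes` function are hypothetical.

```python
def root_causes(failing: set[str], parents: dict[str, list[str]]) -> set[str]:
    """Return failing datasets that have no failing direct parent.

    Assumes each dataset is checked independently, so a healthy parent
    means the issue was introduced at the dataset itself.
    """
    return {
        dataset
        for dataset in failing
        if not any(parent in failing for parent in parents.get(dataset, []))
    }


# Hypothetical lineage (dataset -> direct parents) and check results.
parents = {
    "dashboard": ["agg"],
    "agg": ["clean"],
    "clean": ["raw"],
    "raw": [],
}
failing = {"dashboard", "agg", "clean"}
print(root_causes(failing, parents))
```

Here the failures in `agg` and `dashboard` are treated as downstream symptoms of the problem in `clean`.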

Information Lifecycle vs Data Observability Infographic

industrydif.com