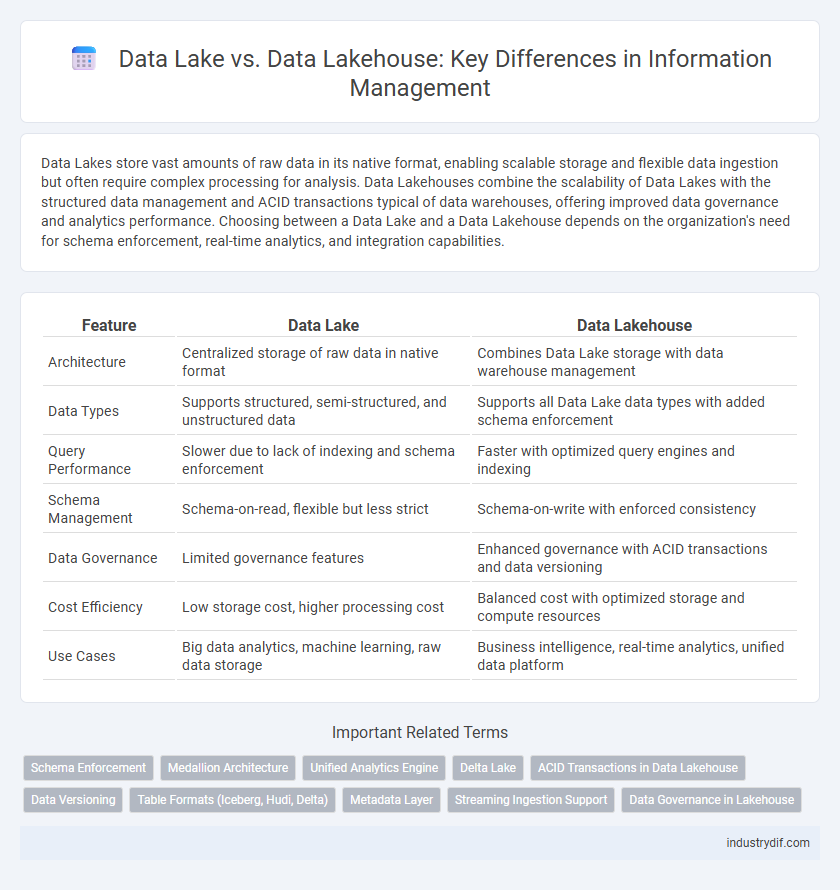

Data Lakes store vast amounts of raw data in its native format, enabling scalable storage and flexible data ingestion but often require complex processing for analysis. Data Lakehouses combine the scalability of Data Lakes with the structured data management and ACID transactions typical of data warehouses, offering improved data governance and analytics performance. Choosing between a Data Lake and a Data Lakehouse depends on the organization's need for schema enforcement, real-time analytics, and integration capabilities.

Table of Comparison

| Feature | Data Lake | Data Lakehouse |

|---|---|---|

| Architecture | Centralized storage of raw data in native format | Combines Data Lake storage with data warehouse management |

| Data Types | Supports structured, semi-structured, and unstructured data | Supports all Data Lake data types with added schema enforcement |

| Query Performance | Slower due to lack of indexing and schema enforcement | Faster with optimized query engines and indexing |

| Schema Management | Schema-on-read, flexible but less strict | Schema-on-write with enforced consistency |

| Data Governance | Limited governance features | Enhanced governance with ACID transactions and data versioning |

| Cost Efficiency | Low storage cost, higher processing cost | Balanced cost with optimized storage and compute resources |

| Use Cases | Big data analytics, machine learning, raw data storage | Business intelligence, real-time analytics, unified data platform |

Understanding Data Lakes: Definition and Key Features

A Data Lake is a centralized repository that stores vast amounts of raw, unstructured, or semi-structured data in its native format, enabling scalability and flexibility for big data analytics. It supports diverse data types including logs, sensor data, and social media feeds without requiring predefined schemas. Key features include schema-on-read, high storage capacity, and cost-effectiveness for handling large-scale data environments.

What is a Data Lakehouse? An Emerging Architecture

A Data Lakehouse is an emerging architecture that combines the scalability and low-cost storage of a data lake with the data management and performance features of a data warehouse. It supports structured, semi-structured, and unstructured data in a single platform, enabling unified analytics and real-time data processing. This architecture enhances data governance, schema enforcement, and ACID transactions, addressing limitations of traditional data lakes.

Core Differences Between Data Lakes and Data Lakehouses

Data lakes primarily store raw, unstructured data in its native format, enabling flexible data ingestion but often requiring complex processing for analysis. Data lakehouses combine the scalability and low-cost storage of data lakes with the data management and transactional capabilities of data warehouses, supporting ACID transactions and schema enforcement. This hybrid approach enhances data reliability, governance, and performance for diverse analytics workloads compared to traditional data lakes.

Data Storage: Schema-on-Read vs Schema-on-Write

Data lakes store raw, unstructured data using a schema-on-read approach, allowing flexibility during data retrieval but requiring complex processing to interpret the data. Data lakehouses implement schema-on-write, imposing structure and organization at the time of data ingestion, which enhances data quality, consistency, and query performance. This fundamental difference impacts how organizations manage large volumes of data while balancing agility and reliability in analytics workflows.

Data Management: Reliability, Security, and Governance

Data Lakehouses combine the scalability of Data Lakes with the structured management features of Data Warehouses, enhancing reliability through ACID transactions and schema enforcement. Security is improved with fine-grained access controls, encryption, and auditing capabilities, aligning with enterprise governance standards. Governance benefits from unified metadata management and compliance tools, enabling better data lineage and quality assurance across varied data environments.

Performance and Query Optimization in Data Lakes vs Lakehouses

Data lakehouses deliver superior performance and query optimization compared to traditional data lakes by integrating data warehouse capabilities directly on top of data lakes, enabling faster and more efficient SQL-based queries. They leverage metadata management, indexing, and transaction support, which enhances data consistency and reduces query latency. In contrast, data lakes often struggle with slower query performance due to their lack of structured schema enforcement and limited optimization features.

Cost Efficiency: Infrastructure and Scalability Considerations

Data lakes offer cost efficiency through scalable storage of raw data on low-cost infrastructure but often incur additional expenses in data processing and management. Data lakehouses combine the storage benefits of data lakes with the performance optimization and data management features of data warehouses, reducing the total cost of ownership by streamlining analytics workflows. Scalability in lakehouses supports flexible resource allocation that enhances infrastructure utilization, leading to better cost control compared to traditional data lakes.

Integration with BI and Machine Learning Workflows

Data Lakehouses combine the scalable storage of Data Lakes with the structured management of Data Warehouses, enabling seamless integration with Business Intelligence (BI) tools and Machine Learning (ML) frameworks. They support direct querying and data governance, streamlining access to clean, organized data for advanced analytics and real-time insights. Unlike traditional Data Lakes, Data Lakehouses provide enhanced schema enforcement and transactional capabilities that improve the reliability and efficiency of ML workflows and BI reporting.

Industry Use Cases: When to Choose Data Lake or Lakehouse

Data lakes excel in industries with vast, unstructured data like media streaming and IoT, enabling flexible storage and schema-on-read capabilities ideal for raw data exploration. Data lakehouses suit sectors requiring both robust data warehousing and advanced analytics, such as finance and healthcare, offering ACID transactions and BI tool integration. Choosing between data lakes and lakehouses hinges on the need for data governance, real-time analytics, and the level of data structure required by the industry use case.

Future Trends: The Evolution of Data Architectures

Data Lakehouses combine the scalability and flexibility of data lakes with the structured data management and performance of data warehouses, shaping the future of data architectures. Emerging trends emphasize unified governance, real-time analytics, and support for machine learning workflows, driving organizations toward integrated data platforms. Advanced metadata management and AI-powered optimization in Data Lakehouses enable more efficient data processing and enhanced decision-making capabilities.

Related Important Terms

Schema Enforcement

Data lakes provide flexible storage of raw data without strict schema enforcement, enabling storage of diverse data types but complicating data governance and quality control. Data lakehouses combine the scalability of data lakes with built-in schema enforcement, improving data consistency and query performance by applying structured data management principles.

Medallion Architecture

Data Lakehouse integrates the schema enforcement and performance optimization of Data Lakes with the ACID transactions and BI support of Data Warehouses, leveraging Medallion Architecture's multilayered approach to organize data into Bronze, Silver, and Gold tables for quality refinement and faster analytics. This architecture improves data governance and scalability by systematically transforming raw data in the Bronze layer into cleansed and enriched data at the Silver layer, culminating in business-ready datasets in the Gold layer suitable for advanced analytics and machine learning.

Unified Analytics Engine

Data Lakehouse integrates the data management capabilities of data lakes with the structured data handling of data warehouses, offering a unified analytics engine that supports both batch and real-time processing. This architecture enhances data governance, reduces latency, and enables advanced analytics by combining schema enforcement, metadata management, and machine learning workflows in a single platform.

Delta Lake

Data Lakehouses, exemplified by Delta Lake, combine the scalable storage of Data Lakes with the transactional capabilities and data management features of data warehouses, enabling ACID transactions and schema enforcement on large-scale data. Delta Lake optimizes data reliability and query performance by providing upserts, versioning, and unified batch and streaming processing, making it a superior solution for modern analytics compared to traditional Data Lakes.

ACID Transactions in Data Lakehouse

Data Lakehouses enhance traditional Data Lakes by supporting ACID transactions, which ensure atomicity, consistency, isolation, and durability for reliable data manipulation and integrity. This transactional capability enables concurrent reads and writes, making Data Lakehouses suitable for complex analytic workloads and real-time data updates.

Data Versioning

Data Lakehouses integrate data versioning capabilities by combining the flexible storage of data lakes with the transactional consistency of data warehouses, enabling efficient tracking of changes and data lineage. This contrasts with traditional data lakes, which often lack built-in version control, making data governance and reproducibility more challenging.

Table Formats (Iceberg, Hudi, Delta)

Data Lakehouse combines the scalability of Data Lakes with structured data management using table formats like Apache Iceberg, Apache Hudi, and Delta Lake, which enable ACID transactions, schema enforcement, and efficient upserts and deletes. These table formats improve data reliability and performance by supporting incremental data processing and optimized query execution over traditional Data Lakes, which often lack native support for such advanced data management features.

Metadata Layer

A Data Lake utilizes a basic metadata layer primarily for data cataloging and indexing, often leading to challenges in data governance and query performance. In contrast, a Data Lakehouse integrates an advanced metadata layer that supports transactional capabilities, schema enforcement, and real-time data indexing, enhancing data reliability and query efficiency across diverse data types.

Streaming Ingestion Support

Data Lakes primarily handle batch ingestion, lacking native support for real-time streaming data, which can limit timely analytics. In contrast, Data Lakehouses integrate streaming ingestion capabilities through architectures like Delta Lake or Apache Hudi, enabling efficient, low-latency processing of continuous data streams.

Data Governance in Lakehouse

Data Lakehouse architecture enhances data governance by integrating robust metadata management, schema enforcement, and fine-grained access controls, ensuring data quality, security, and compliance across diverse data types. This unified governance framework in Lakehouses supports auditable data lineage and real-time policy enforcement, overcoming traditional data lake challenges.

Data Lake vs Data Lakehouse Infographic

industrydif.com

industrydif.com