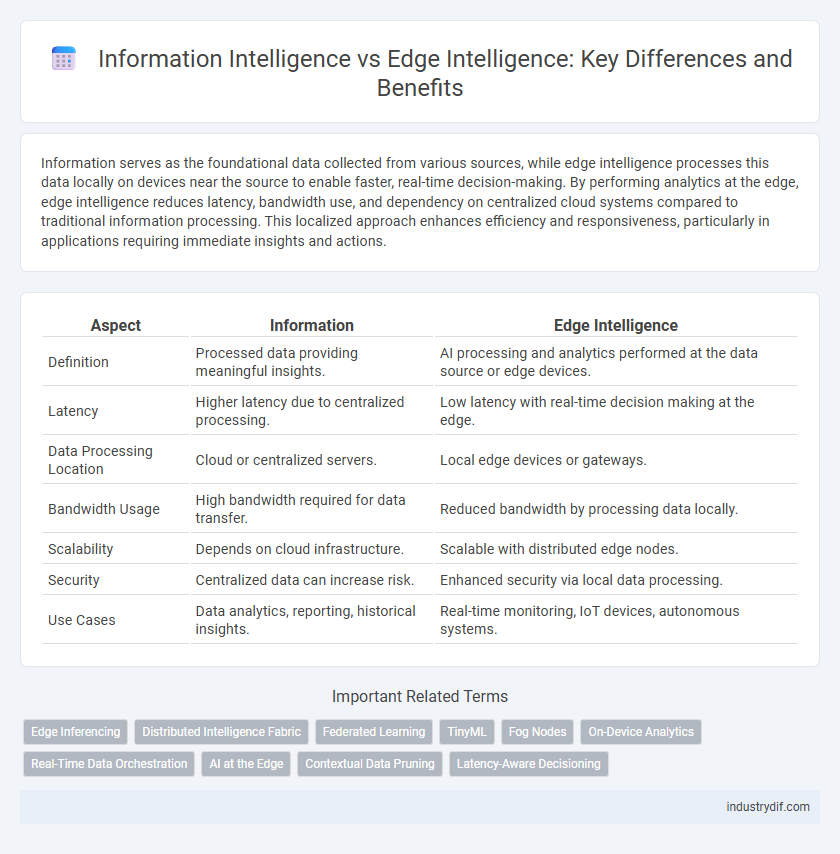

Information serves as the foundational data collected from various sources, while edge intelligence processes this data locally on devices near the source to enable faster, real-time decision-making. By performing analytics at the edge, edge intelligence reduces latency, bandwidth use, and dependency on centralized cloud systems compared to traditional information processing. This localized approach enhances efficiency and responsiveness, particularly in applications requiring immediate insights and actions.

Table of Comparison

| Aspect | Information | Edge Intelligence |
|---|---|---|
| Definition | Processed data providing meaningful insights. | AI processing and analytics performed at the data source or edge devices. |
| Latency | Higher latency due to centralized processing. | Low latency with real-time decision making at the edge. |
| Data Processing Location | Cloud or centralized servers. | Local edge devices or gateways. |
| Bandwidth Usage | High bandwidth required for data transfer. | Reduced bandwidth by processing data locally. |
| Scalability | Depends on cloud infrastructure. | Scalable with distributed edge nodes. |
| Security | Centralized data can increase risk. | Enhanced security via local data processing. |
| Use Cases | Data analytics, reporting, historical insights. | Real-time monitoring, IoT devices, autonomous systems. |

Defining Information Intelligence

Information intelligence involves the systematic collection, processing, and analysis of data to generate actionable insights that improve decision-making and operational efficiency. It relies heavily on centralized data storage and advanced analytics techniques such as machine learning and data mining. In contrast, edge intelligence pushes computation and data processing closer to the data source, enabling real-time analytics and reducing latency in distributed environments.

Understanding Edge Intelligence

Edge Intelligence refers to the integration of artificial intelligence (AI) capabilities directly within edge devices, enabling real-time data processing and analysis without relying on centralized cloud systems. Unlike traditional information systems that transmit data to centralized locations for processing, Edge Intelligence reduces latency and enhances security by localizing computation at the data source. This approach optimizes resource utilization and supports applications requiring immediate insights, such as autonomous vehicles, smart surveillance, and industrial IoT.

Key Differences: Information vs Edge Intelligence

Information refers to data that is collected, processed, and stored for analysis, traditionally managed in centralized data centers or cloud environments. Edge Intelligence integrates data processing and artificial intelligence at the edge of the network, enabling real-time decision-making and reducing latency by analyzing data closer to the source. Key differences include centralized versus decentralized processing, latency levels, and the capability for autonomous, on-site analytics provided by Edge Intelligence.

Data Processing Location: Centralized vs Decentralized

Information processing in centralized systems primarily occurs in data centers or the cloud, where vast amounts of data are aggregated for analysis and decision-making, offering powerful computational resources but suffering from latency and bandwidth limitations. Edge Intelligence decentralizes data processing by performing computations directly on devices or local edge servers near the data source, reducing latency and enhancing real-time responsiveness. This decentralized approach supports applications like IoT, autonomous vehicles, and smart cities by minimizing dependence on central servers and improving privacy and reliability.

Latency and Real-time Decision Making

Centralized information processing typically incurs higher latency because data must travel to cloud servers and back, which can hinder real-time decision making. Edge intelligence reduces latency by processing data locally on edge devices, enabling near-instantaneous insights and faster response times. This localized computation supports real-time analytics, which is essential for applications that must act immediately, such as autonomous vehicles and industrial automation.
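
The latency trade-off can be made concrete with a small back-of-the-envelope sketch. The function name and all numbers below are illustrative assumptions, not measurements: the cloud path is assumed to have fast compute but an 80 ms network round trip, while the edge path has slower compute and no network hop.

```python
def decision_latency_ms(network_rtt_ms: float, compute_ms: float) -> float:
    """Time from sensor reading to decision: network round trip plus compute."""
    return network_rtt_ms + compute_ms


# Assumed, illustrative figures (not measurements).
cloud_ms = decision_latency_ms(network_rtt_ms=80.0, compute_ms=5.0)
edge_ms = decision_latency_ms(network_rtt_ms=0.0, compute_ms=20.0)

deadline_ms = 50.0  # hypothetical real-time budget for the application
cloud_meets_deadline = cloud_ms <= deadline_ms
edge_meets_deadline = edge_ms <= deadline_ms

print(f"cloud: {cloud_ms} ms, meets deadline: {cloud_meets_deadline}")
print(f"edge:  {edge_ms} ms, meets deadline: {edge_meets_deadline}")
```

Under these assumed numbers the edge path fits the budget even though its compute step is four times slower, because the network round trip dominates the cloud path.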

Scalability and Network Efficiency

Edge intelligence enhances scalability by processing data locally on devices or edge nodes, reducing the demand on centralized information systems and minimizing network latency. This decentralized approach improves network efficiency through decreased bandwidth usage and faster real-time decision-making. Centralized information systems, in contrast, often face bottlenecks and higher latency due to the heavy data transfer to and from the cloud or data centers.
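
As a rough illustration of the bandwidth claim, the sketch below compares streaming every raw sample to the cloud with sending one locally computed summary per second. The sample rate, message sizes, and function name are hypothetical values chosen for the arithmetic only.

```python
def bytes_per_hour(messages_per_second: float, bytes_per_message: int) -> float:
    """Upstream traffic generated by one device in an hour."""
    return messages_per_second * bytes_per_message * 3600


# Assumed workload: a sensor sampling at 100 Hz with 16-byte readings.
raw_stream = bytes_per_hour(messages_per_second=100, bytes_per_message=16)

# Assumed edge behavior: aggregate locally, send one 32-byte summary per second.
edge_summaries = bytes_per_hour(messages_per_second=1, bytes_per_message=32)

reduction = 1 - edge_summaries / raw_stream
print(f"raw: {raw_stream:.0f} B/h, summarized: {edge_summaries:.0f} B/h")
print(f"upstream traffic reduced by {reduction:.0%}")
```

The actual saving depends entirely on how much the application can aggregate or discard locally; workloads that need every raw sample upstream would see little benefit.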

Security Implications in Information vs Edge Intelligence

Centrally processed information faces a higher risk of large-scale data breaches because aggregating data in one place makes it a lucrative target for cyber-attacks. Edge intelligence reduces this exposure by processing data locally on devices, limiting the flow of sensitive information to central servers. This decentralized approach enhances data privacy and lowers latency, both crucial for real-time security applications in IoT and critical infrastructure.

Industry Applications: Use Cases Comparison

Information systems primarily process and analyze large volumes of centralized data, enabling industries like manufacturing to optimize supply chains and improve decision-making through comprehensive data analytics. Edge intelligence enhances these capabilities by performing real-time processing and analytics directly at the data source, crucial for applications such as predictive maintenance in smart factories and autonomous vehicle navigation in transportation. Combining cloud-based information systems with edge intelligence supports scalable, low-latency solutions essential for Industry 4.0 innovations and IoT deployment.

Challenges in Implementing Edge Intelligence

Implementing edge intelligence presents significant challenges, including limited computational resources and energy constraints on edge devices, which hinder the processing of complex data algorithms locally. Ensuring data security and privacy at the edge is difficult due to decentralized data storage and transmission vulnerabilities. Furthermore, managing heterogeneous devices and maintaining real-time data synchronization across distributed networks complicate the deployment of scalable and efficient edge intelligence systems.

Future Trends: Integrating Information and Edge Intelligence

Future trends in integrating information and edge intelligence emphasize real-time data processing at the network edge, reducing latency and improving decision-making accuracy. Advances in AI-powered edge devices enable seamless synchronization with centralized data systems, fostering adaptive and context-aware applications across industries. The fusion of cloud-based information management and distributed edge intelligence is driving scalable, secure, and efficient IoT ecosystems for smart cities, autonomous vehicles, and healthcare innovations.

Related Important Terms

Edge Inferencing

Edge Intelligence processes data locally on devices, enabling real-time edge inferencing without relying on centralized cloud servers, which reduces latency and enhances privacy. Unlike traditional information systems that transmit raw data to cloud platforms for analysis, edge inferencing leverages on-device AI models to make immediate decisions at the data source.

Distributed Intelligence Fabric

Edge intelligence leverages a distributed intelligence fabric to process data locally on edge devices, reducing latency and bandwidth usage compared to traditional centralized information processing systems. This decentralized approach enhances real-time decision-making and scalability by integrating distributed computing, data analytics, and AI models across networked nodes.

Federated Learning

Federated learning enables decentralized model training by aggregating model updates from multiple edge devices without transferring raw data, which enhances privacy and reduces the volume of data sent upstream. This contrasts with traditional centralized information systems, where data is collected and processed on a central server, posing higher risks of data breaches and increased communication overhead.
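
The aggregation step can be sketched as a sample-weighted average of client model weights, in the style of federated averaging. This is a minimal illustration under simplifying assumptions (flat weight vectors, a single round, no networking or secure aggregation); the function and variable names are hypothetical.

```python
from typing import List, Tuple


def federated_average(updates: List[Tuple[List[float], int]]) -> List[float]:
    """Combine client weights into global weights, weighted by sample count.

    Each update is (weights, num_samples). Only weights leave the device;
    the raw training data never does.
    """
    total_samples = sum(n for _, n in updates)
    if total_samples == 0:
        raise ValueError("at least one client must contribute samples")

    dim = len(updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total_samples
    return global_weights


# Two hypothetical edge devices with different amounts of local data.
client_updates = [
    ([1.0, 2.0], 10),  # device A trained on 10 samples
    ([3.0, 4.0], 30),  # device B trained on 30 samples
]
global_weights = federated_average(client_updates)
print(global_weights)  # [2.5, 3.5]
```

Weighting by sample count lets devices with more local data influence the global model proportionally; an unweighted mean is a simpler alternative when client datasets are similar in size.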

TinyML

Information processing at the edge leverages TinyML to enable real-time data analysis on low-power devices, reducing latency and bandwidth usage compared to traditional centralized cloud computing. Edge intelligence, powered by TinyML, integrates machine learning capabilities directly into sensors and IoT devices, enhancing efficiency and privacy while supporting autonomous decision-making in resource-constrained environments.
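
One common way to fit models onto resource-constrained devices is to store weights as 8-bit integers instead of 32-bit floats. The text above does not name a specific technique, so the symmetric quantization scheme below is an assumption chosen for illustration, and the helper names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    if not values:
        raise ValueError("values must not be empty")
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


weights = [0.82, -1.0, 0.31, 0.0]  # illustrative model weights
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# Worst-case rounding error is half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q_weights, f"max error: {max_error:.5f}")
```

Each weight then occupies one byte rather than four, at the cost of a small rounding error bounded by half the scale factor.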

Fog Nodes

Fog nodes, integral to edge intelligence, process data locally to reduce latency and bandwidth usage compared to traditional centralized information systems. These nodes enhance real-time analytics by enabling immediate data handling at the network edge, improving response times and scalability in distributed IoT environments.

On-Device Analytics

On-device analytics enables real-time data processing at the source, significantly reducing latency and bandwidth usage compared to traditional centralized information systems. Edge intelligence leverages localized computing power to deliver faster insights and enhanced data privacy while minimizing reliance on cloud infrastructures.

Real-Time Data Orchestration

Information systems prioritize centralized data processing, which often leads to latency issues in real-time applications. Edge intelligence improves real-time data orchestration by processing data locally on edge devices, reducing response times and bandwidth usage. This decentralized approach enables faster decision-making and improved efficiency in IoT and distributed network environments.

AI at the Edge

Edge intelligence leverages AI algorithms directly on edge devices, enabling real-time data processing and decision-making without relying on centralized cloud servers. This approach reduces latency, enhances privacy by limiting data transmission, and optimizes bandwidth usage compared to traditional cloud-based information systems.

Contextual Data Pruning

Contextual data pruning in edge intelligence improves data processing efficiency by filtering out irrelevant information at the source, reducing latency and bandwidth use compared to traditional centralized information systems. This targeted approach enables faster, context-aware decision-making, which is crucial for real-time applications in IoT and smart devices.
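
A simple form of pruning at the source is a deadband filter: a reading is forwarded only when it differs enough from the last forwarded value. This is one possible interpretation of "irrelevant" and is an assumption for illustration; the function name and threshold are hypothetical.

```python
from typing import List, Optional


def prune_readings(readings: List[float], min_change: float) -> List[float]:
    """Keep a reading only if it moved at least min_change since the last kept one."""
    kept: List[float] = []
    last_kept: Optional[float] = None
    for value in readings:
        if last_kept is None or abs(value - last_kept) >= min_change:
            kept.append(value)
            last_kept = value
    return kept


# Illustrative temperature samples; small jitter is dropped at the edge.
samples = [20.0, 20.1, 20.05, 21.0, 21.1, 25.0]
forwarded = prune_readings(samples, min_change=0.5)
print(forwarded)  # [20.0, 21.0, 25.0]
```

Here half of the samples never leave the device. A real deployment would choose the threshold from the application's context, for example tighter during an alarm state and looser during normal operation.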

Latency-Aware Decisioning

Latency-aware decisioning enhances edge intelligence by processing data locally, reducing the delay inherent in transmitting information to centralized systems, thus enabling real-time responses and improved operational efficiency. This approach contrasts with traditional information processing, which relies on centralized data analysis, often leading to higher latency and slower decision-making.
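
One way to sketch latency-aware decisioning is a small routing policy: among the places a task could run, pick the most accurate one whose estimated latency fits the deadline, and fall back to the fastest if none fits. The `Target` type, the policy itself, and all figures are assumptions for illustration rather than a standard algorithm.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Target:
    name: str
    est_latency_ms: float  # estimated end-to-end latency, including network
    accuracy: float        # expected model accuracy at this location


def choose_target(targets: List[Target], deadline_ms: float) -> Target:
    """Pick the most accurate target that meets the deadline, else the fastest."""
    if not targets:
        raise ValueError("at least one target is required")
    feasible = [t for t in targets if t.est_latency_ms <= deadline_ms]
    if not feasible:
        return min(targets, key=lambda t: t.est_latency_ms)
    return max(feasible, key=lambda t: t.accuracy)


# Hypothetical options: a small on-device model and a larger cloud model.
options = [
    Target(name="edge", est_latency_ms=15.0, accuracy=0.90),
    Target(name="cloud", est_latency_ms=120.0, accuracy=0.97),
]

tight = choose_target(options, deadline_ms=50.0)     # only edge fits
relaxed = choose_target(options, deadline_ms=500.0)  # both fit, cloud is more accurate
print(tight.name, relaxed.name)  # edge cloud
```

The policy treats the deadline as a hard constraint and accuracy as the tiebreaker, which suits control loops; workloads that tolerate occasional lateness might instead weigh latency and accuracy in a single score.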

Information vs Edge Intelligence Infographic

industrydif.com