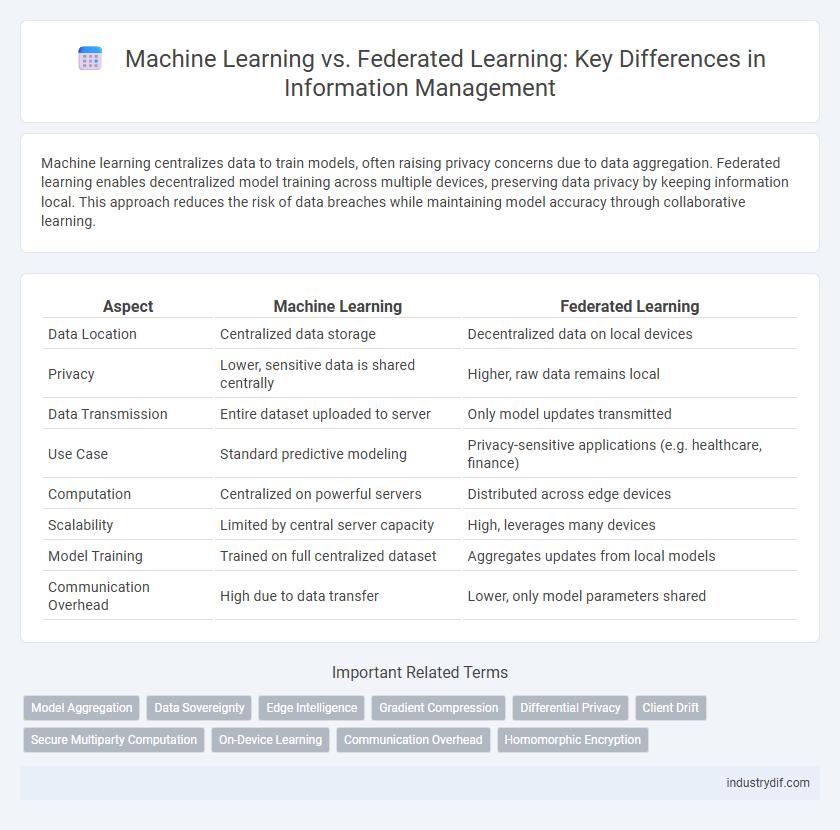

Traditional machine learning usually centralizes data to train models, which raises privacy concerns because sensitive records are aggregated in one place. Federated learning instead trains a shared model across many devices while the raw data stays local, improving data privacy. This approach reduces the risk of large-scale data breaches while aiming to keep model accuracy close to that of centralized training through collaborative learning.

Table of Comparison

| Aspect | Machine Learning (centralized) | Federated Learning |
|---|---|---|
| Data Location | Centralized data storage | Decentralized data on local devices |
| Privacy | Lower; sensitive data is shared centrally | Higher; raw data remains local |
| Data Transmission | Entire dataset uploaded to server | Only model updates transmitted |
| Use Case | Standard predictive modeling | Privacy-sensitive applications (e.g., healthcare, finance) |
| Computation | Centralized on powerful servers | Distributed across edge devices |
| Scalability | Limited by central server capacity | High; leverages many devices |
| Model Training | Trained on the full centralized dataset | Aggregates updates from locally trained models |
| Communication Overhead | High upfront cost to transfer raw data | No raw data transferred, but model parameters are exchanged over many rounds |

Definition of Machine Learning

Machine learning is a subset of artificial intelligence that enables computers to learn from data and improve their performance on tasks without explicit programming. It involves algorithms that identify patterns, make predictions, and automate decision-making based on input data. Common types include supervised, unsupervised, and reinforcement learning, each tailored to different data structures and objectives.
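
To make "learning from data" concrete, here is a minimal sketch of supervised learning in the centralized setting: all training examples sit in one pooled list, and a linear model y = w*x + b is fit by batch gradient descent on mean squared error. The function name `train_centralized`, the learning rate, and the toy data are illustrative assumptions, not something the article specifies.

```python
def train_centralized(data, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b by batch gradient descent on mean squared error.

    `data` is a list of (x, y) pairs held in one central location.
    """
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Toy dataset generated from y = 2x + 1 (no noise), pooled centrally.
    pooled = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
    w, b = train_centralized(pooled)
    print(f"w={w:.3f}, b={b:.3f}")
```

Because every example is available in one place, each gradient step sees the whole dataset; that is exactly the property federated learning gives up in exchange for privacy.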

Definition of Federated Learning

Federated learning is a decentralized machine learning approach in which a model is trained across multiple devices or servers that each hold local data samples, without exchanging those samples. This method enhances data privacy by keeping sensitive information on local devices and aggregating only model updates centrally. Compared to traditional machine learning, federated learning reduces the risks of transferring raw data and can support compliance with data protection regulations.
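
The workflow described above can be sketched as a single-process simulation in the style of Federated Averaging (FedAvg): each client runs a few gradient steps on its own data, and the server only receives model weights, which it averages weighted by each client's sample count. The client data, the function names `local_update` and `fedavg_round`, and the hyperparameters are hypothetical; real deployments add networking, client sampling, and security layers.

```python
from typing import List, Tuple

Model = Tuple[float, float]          # (w, b) for y ~ w*x + b
Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave a client


def local_update(model: Model, data: Dataset, lr: float = 0.05, steps: int = 5) -> Model:
    """Run a few full-batch gradient steps on one client's private data."""
    w, b = model
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fedavg_round(global_model: Model, clients: List[Dataset]) -> Model:
    """One round: clients train locally; the server averages weights by sample count."""
    total = sum(len(d) for d in clients)
    new_w, new_b = 0.0, 0.0
    for data in clients:
        w_k, b_k = local_update(global_model, data)  # only weights are "sent"
        weight = len(data) / total
        new_w += weight * w_k
        new_b += weight * b_k
    return new_w, new_b


if __name__ == "__main__":
    # Three clients, each holding a private slice of data from y = 2x + 1.
    clients = [
        [(0.0, 1.0), (1.0, 3.0)],
        [(2.0, 5.0), (3.0, 7.0)],
        [(4.0, 9.0)],
    ]
    model: Model = (0.0, 0.0)
    for _ in range(500):
        model = fedavg_round(model, clients)
    print(f"w={model[0]:.3f}, b={model[1]:.3f}")
```

The server code never touches an `(x, y)` pair; it only sees the `(w, b)` tuples returned by `local_update`.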

Key Differences Between Machine Learning and Federated Learning

Traditional machine learning centralizes data on a single server to train algorithms, whereas federated learning processes data locally on multiple devices, enhancing privacy and security. Centralized machine learning requires transferring and storing the full dataset, while federated learning shares only model updates, not raw data, which can reduce bandwidth usage when local datasets are large relative to the model. The decentralized nature of federated learning also helps address data governance and compliance challenges that centralized machine learning faces.

Data Privacy and Security Considerations

Centralized machine learning aggregates sensitive information in one place for model training, which raises significant risks of data breaches and privacy violations. Federated learning enhances data privacy by training models directly on users' devices, minimizing the exposure of raw data. However, model updates can still leak information about the underlying data, so security in federated learning also depends on protecting communication channels and on robust aggregation techniques that limit data leakage and resist adversarial (e.g., poisoned) updates.
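
As one example of a robust aggregation technique, the sketch below replaces the plain mean of client updates with a coordinate-wise median, which limits how far a small number of malicious or faulty clients can pull the aggregate. The update vectors are made-up numbers, and coordinate-wise median is only one of several robust rules (a trimmed mean is another common choice); it is not presented as the specific method this article has in mind.

```python
from statistics import median
from typing import List

Vector = List[float]


def mean_aggregate(updates: List[Vector]) -> Vector:
    """Plain averaging: a single extreme update can shift every coordinate."""
    return [sum(coords) / len(coords) for coords in zip(*updates)]


def median_aggregate(updates: List[Vector]) -> Vector:
    """Coordinate-wise median: stays within the range of honest values
    as long as fewer than half of the clients are outliers."""
    return [median(coords) for coords in zip(*updates)]


if __name__ == "__main__":
    honest = [[0.10, -0.20], [0.12, -0.18], [0.09, -0.21], [0.11, -0.19]]
    poisoned = honest + [[50.0, 50.0]]  # one adversarial client
    print("mean:  ", mean_aggregate(poisoned))
    print("median:", median_aggregate(poisoned))
```

With one poisoned client out of five, the mean's first coordinate jumps above 10, while the median stays at an honest value (0.11).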

Training Architecture: Centralized vs Distributed

Traditional machine learning typically relies on a centralized training architecture: data from multiple sources is aggregated on a central server for model training, which enables efficient computation but raises privacy concerns. Federated learning uses a distributed training architecture that processes data locally on edge devices and sends only model updates to a central server, enhancing data privacy and avoiding the transfer of raw data. This decentralized approach is particularly advantageous for applications that must comply with data protection regulations or support real-time learning in heterogeneous environments.

Scalability and Resource Allocation

Traditional machine learning typically requires centralized data processing, which can create scalability bottlenecks and increase the demand for central computational resources. Federated learning distributes model training across many devices, using their local data and computing power and thereby reducing the load on central servers. This decentralized approach spreads resource use across the network and improves privacy by keeping data on edge devices, although it must also cope with devices that differ in compute power, connectivity, and availability.
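
One common way federated systems scale to very large device populations is to involve only a random fraction of clients in each round, similar to the client-fraction parameter used in FedAvg. The sketch below uses a hypothetical `sample_clients` helper that picks max(1, floor(fraction * K)) of K clients per round; the fraction, seed, and client IDs are illustrative assumptions.

```python
import random
from typing import List, Sequence


def sample_clients(client_ids: Sequence[str], fraction: float, rng: random.Random) -> List[str]:
    """Pick a random subset of clients for one training round.

    Only max(1, floor(fraction * K)) of the K registered clients do work this
    round, so server-side aggregation cost grows with the sample size rather
    than with the total device population.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    m = max(1, int(fraction * len(client_ids)))
    return rng.sample(list(client_ids), m)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the example is reproducible
    population = [f"device-{i}" for i in range(10_000)]
    for round_num in range(3):
        chosen = sample_clients(population, fraction=0.001, rng=rng)
        print(round_num, len(chosen), chosen[:3])
```

Sampling also helps with availability in practice, since devices that are offline or low on battery can simply be left out of a given round.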

Communication Overhead and Latency

Centralized machine learning requires transferring raw data to central servers, which can cause high communication overhead and latency when datasets are large or continuously generated. Federated learning reduces this transfer by processing data locally on edge devices and transmitting only model updates rather than raw data. Because those updates are exchanged over many training rounds, communication can still become a bottleneck for large models, but the approach enhances privacy while aiming to maintain model performance across distributed networks.
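
A back-of-the-envelope calculation shows when sending model updates beats uploading raw data, and when it does not. The helpers below compare a one-time raw-data upload with the total bytes of full model updates one client sends over many rounds; every number in the usage example (dataset size, parameter counts, 32-bit floats, 100 rounds) is a hypothetical assumption.

```python
def raw_upload_bytes(num_samples: int, bytes_per_sample: int) -> int:
    """Bytes one client sends to ship its raw dataset to a central server once."""
    return num_samples * bytes_per_sample


def federated_upload_bytes(num_params: int, rounds: int, bytes_per_param: int = 4) -> int:
    """Bytes one client sends if it uploads a full model update every round.

    Assumes uncompressed 32-bit floats (4 bytes per parameter) by default.
    """
    return num_params * bytes_per_param * rounds


if __name__ == "__main__":
    # Hypothetical client: 2,000 photos of about 3 MB each.
    raw = raw_upload_bytes(num_samples=2_000, bytes_per_sample=3_000_000)
    # Hypothetical small on-device model: 1M parameters, 100 rounds.
    small = federated_upload_bytes(num_params=1_000_000, rounds=100)
    # Hypothetical large model: 100M parameters, 100 rounds.
    large = federated_upload_bytes(num_params=100_000_000, rounds=100)
    print(f"raw data:         {raw / 1e9:.1f} GB")
    print(f"FL, 1M params:    {small / 1e9:.1f} GB")
    print(f"FL, 100M params:  {large / 1e9:.1f} GB")
```

With these assumed numbers, the small model needs about 0.4 GB of uploads versus 6.0 GB of raw data, while the 100M-parameter model needs about 40.0 GB, which is why federated systems often pair training with update compression.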

Use Cases and Industry Applications

Machine learning trained on centralized data drives personalized recommendations, fraud detection, and predictive maintenance across finance, healthcare, and retail. Federated learning supports privacy-sensitive applications such as healthcare diagnostics, autonomous vehicles, and edge computing by enabling decentralized model training without sharing raw data. Industries that adopt federated learning can improve data security and regulatory compliance while aiming to maintain model accuracy in distributed environments.

Challenges in Implementation

Machine Learning implementation faces challenges such as data privacy concerns, the need for large centralized datasets, and high computational costs for training complex models. Federated Learning, while addressing data privacy by enabling decentralized training on local devices, presents difficulties in handling heterogeneous data distributions, ensuring communication efficiency between devices, and managing model updates securely. Both approaches require robust strategies for scalability, data security, and real-time processing to achieve effective deployment in real-world applications.
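
To see what "heterogeneous data distributions" means in practice, the sketch below builds a label-skewed (non-IID) split: examples are sorted by label and dealt out in contiguous shards, so each client sees only a couple of classes. This sort-and-shard scheme is a common way to simulate non-IID clients in experiments; the function name `label_skewed_split` and its parameters are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List, Tuple

Example = Tuple[int, int]  # (example_id, label)


def label_skewed_split(examples: List[Example], num_clients: int,
                       shards_per_client: int, seed: int = 0) -> Dict[int, List[Example]]:
    """Sort by label, cut into equal shards, and give each client a few random shards.

    Examples left over after cutting equal-size shards are dropped.
    """
    ordered = sorted(examples, key=lambda e: e[1])
    num_shards = num_clients * shards_per_client
    shard_size = len(ordered) // num_shards
    shards = [ordered[i * shard_size:(i + 1) * shard_size] for i in range(num_shards)]
    random.Random(seed).shuffle(shards)
    return {
        c: [ex for shard in shards[c * shards_per_client:(c + 1) * shards_per_client] for ex in shard]
        for c in range(num_clients)
    }


if __name__ == "__main__":
    # 1,000 examples evenly spread over 10 labels.
    data = [(i, i % 10) for i in range(1_000)]
    split = label_skewed_split(data, num_clients=5, shards_per_client=2)
    for client, items in split.items():
        print(client, dict(Counter(label for _, label in items)))
```

Each client ends up with 200 examples drawn from at most two labels, so a model trained on any single client sees a very different distribution from the global one.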

Future Trends in Machine Learning and Federated Learning

Future trends in machine learning emphasize enhanced model accuracy, scalability, and integration of deep learning with reinforcement learning for complex decision-making tasks. Federated learning is advancing toward privacy-preserving AI, enabling decentralized data processing while maintaining data security across multiple devices and institutions. Innovations in edge computing and 5G connectivity are set to accelerate real-time federated learning applications, particularly in healthcare, finance, and autonomous systems.

Related Important Terms

Model Aggregation

Machine learning relies on centralized data aggregation for model training, whereas federated learning performs model aggregation by combining locally trained models from distributed devices without transferring raw data. This decentralized model aggregation enhances data privacy and reduces communication overhead while maintaining effective collaborative learning.
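
In the common Federated Averaging (FedAvg) formulation, the server combines locally trained models as a weighted average, with each client weighted by its share of the training data. In the notation below, introduced here for illustration, $K$ is the number of participating clients, $n_k$ the number of samples on client $k$, and $w_{t+1}^{k}$ client $k$'s model after local training in round $t$:

$$
w_{t+1} = \sum_{k=1}^{K} \frac{n_k}{n} \, w_{t+1}^{k}, \qquad n = \sum_{k=1}^{K} n_k
$$

Because the weights $n_k / n$ sum to 1, if every client returned the same model, the aggregate would equal that model.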

Data Sovereignty

Machine Learning typically involves centralizing data on a server for model training, which can raise concerns about data sovereignty due to cross-border data transfers. Federated Learning, by enabling decentralized model training on local devices, enhances data sovereignty by keeping personal data within its original jurisdiction and minimizing privacy risks.

Edge Intelligence

Machine learning centralizes data processing in cloud servers, whereas federated learning enables edge devices to collaboratively train models locally, enhancing data privacy and reducing latency. Edge intelligence leverages federated learning to optimize real-time decision-making by utilizing distributed computational resources at the network edge.

Gradient Compression

Gradient compression reduces communication overhead in distributed machine learning by quantizing or sparsifying gradient updates before they are sent. Federated learning relies on the same techniques to shrink the updates exchanged between edge devices and the central server, optimizing bandwidth usage while the raw data stays on the devices.
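
The two compression families mentioned above can be sketched in a few lines: top-k sparsification keeps only the k largest-magnitude entries (sent as index/value pairs), and uniform 8-bit quantization maps each float to one of 256 levels between the vector's minimum and maximum. All function names are hypothetical illustrations; production systems usually add refinements such as error feedback, which accumulates the dropped residual locally for later rounds.

```python
from typing import List, Tuple


def top_k_sparsify(grad: List[float], k: int) -> List[Tuple[int, float]]:
    """Keep the k entries with the largest magnitude as (index, value) pairs."""
    ranked = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)
    return sorted((i, grad[i]) for i in ranked[:k])


def densify(pairs: List[Tuple[int, float]], size: int) -> List[float]:
    """Server side: rebuild a dense vector, with zeros for dropped entries."""
    dense = [0.0] * size
    for i, v in pairs:
        dense[i] = v
    return dense


def quantize_8bit(grad: List[float]) -> Tuple[List[int], float, float]:
    """Map each value to an integer in [0, 255] using min/max scaling."""
    lo, hi = min(grad), max(grad)
    scale = (hi - lo) / 255 or 1.0  # avoid division by zero for constant vectors
    return [round((g - lo) / scale) for g in grad], lo, scale


def dequantize_8bit(codes: List[int], lo: float, scale: float) -> List[float]:
    """Server side: map integer codes back to approximate float values."""
    return [lo + c * scale for c in codes]


if __name__ == "__main__":
    g = [0.02, -0.75, 0.001, 0.4, -0.003, 0.9]
    sparse = top_k_sparsify(g, k=3)
    print("top-3:", sparse)
    print("dense:", densify(sparse, len(g)))
    codes, lo, scale = quantize_8bit(g)
    print("codes:", codes)
    print("max error:", max(abs(a - b) for a, b in zip(g, dequantize_8bit(codes, lo, scale))))
```

Sparsification sends k index/value pairs instead of the full vector, while 8-bit quantization sends one byte per entry instead of four, with a per-entry error of at most half a quantization step.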

Differential Privacy

Machine learning that relies on centralized data processing can expose sensitive information, whereas federated learning decentralizes model training by keeping data on devices, enhancing privacy. In federated learning, differential privacy adds calibrated noise to local computations, such as each client's model update, to provide a mathematical bound on how much any individual's data can influence the result, typically at some cost in model accuracy.
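
A minimal sketch of one common recipe, applied on the client side to match the description above, is to clip each update to a maximum L2 norm (bounding any single client's influence) and then add Gaussian noise scaled to that bound. The `clip_norm` and `noise_multiplier` values are arbitrary assumptions; turning them into a concrete (epsilon, delta) privacy guarantee requires a privacy accountant, which is not shown here.

```python
import math
import random
from typing import List


def clip_update(update: List[float], clip_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * factor for u in update]


def privatize_update(update: List[float], clip_norm: float,
                     noise_multiplier: float, rng: random.Random) -> List[float]:
    """Clip, then add Gaussian noise with std = noise_multiplier * clip_norm."""
    sigma = noise_multiplier * clip_norm
    return [u + rng.gauss(0.0, sigma) for u in clip_update(update, clip_norm)]


if __name__ == "__main__":
    rng = random.Random(42)
    raw = [3.0, 4.0]                          # L2 norm 5.0
    print(clip_update(raw, clip_norm=1.0))    # rescaled to L2 norm 1.0
    print(privatize_update(raw, clip_norm=1.0, noise_multiplier=1.1, rng=rng))
```

A larger `noise_multiplier` strengthens the privacy guarantee but makes each update noisier, which is the accuracy trade-off mentioned above.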

Client Drift

Client drift in federated learning occurs when local models diverge because data is non-IID (not independent and identically distributed) across clients, which slows model convergence and can reduce overall accuracy. In traditional machine learning, client drift does not arise because a single model is trained on the pooled, shuffled dataset; federated learning instead needs robust aggregation or local-training techniques to counteract the divergence caused by client heterogeneity.
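
Besides aggregation-side fixes, one well-known mitigation is FedProx, which modifies the local objective by adding a proximal term (mu/2) * ||w - w_global||^2 so that local models are pulled back toward the current global model. The sketch below applies that term to a one-feature linear model y = w*x + b; the function name, `mu` values, learning rate, and data are assumptions chosen for illustration.

```python
from typing import List, Tuple

Model = Tuple[float, float]  # (w, b) for y ~ w*x + b


def fedprox_local_update(global_model: Model, data: List[Tuple[float, float]],
                         mu: float, lr: float = 0.05, steps: int = 50) -> Model:
    """Local gradient descent on MSE + (mu/2) * ||model - global_model||^2."""
    w, b = global_model
    gw, gb = global_model
    n = len(data)
    for _ in range(steps):
        # MSE gradient plus the proximal term's gradient mu * (model - global_model).
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n + mu * (w - gw)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n + mu * (b - gb)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    global_model = (2.0, 1.0)
    # A client whose local data follows a different relationship (y = -x), i.e. non-IID.
    skewed = [(1.0, -1.0), (2.0, -2.0)]
    for mu in (0.0, 1.0, 10.0):
        w, b = fedprox_local_update(global_model, skewed, mu=mu)
        drift = ((w - 2.0) ** 2 + (b - 1.0) ** 2) ** 0.5
        print(f"mu={mu:<4} local model=({w:.2f}, {b:.2f}) drift={drift:.2f}")
```

Larger `mu` keeps the local model closer to the global one (smaller drift), at the price of fitting the client's own data less closely.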

Secure Multiparty Computation

Secure Multiparty Computation (SMC) enables federated learning to train machine learning models collaboratively without sharing raw data, enhancing privacy and security compared to traditional centralized machine learning. SMC protocols distribute computations across multiple parties, ensuring sensitive data remains confidential while achieving accurate model aggregation and reducing risks of data breaches.
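
A simple building block behind many SMC-based secure aggregation schemes is pairwise additive masking: each pair of clients agrees on a random mask that one adds and the other subtracts, so each masked update looks random to the server while the masks cancel in the sum. The sketch below makes several simplifying assumptions: updates are already quantized to non-negative integers, arithmetic is modulo a fixed `MODULUS`, one in-process random generator stands in for real pairwise key agreement, and dropped clients are not handled.

```python
import random
from itertools import combinations
from typing import Dict, List

MODULUS = 2 ** 32  # all arithmetic is done modulo this value


def masked_updates(updates: Dict[str, List[int]], rng: random.Random) -> Dict[str, List[int]]:
    """Return each client's update plus pairwise masks that cancel in the sum.

    For every pair (a, b) with a < b, a random mask r is added to a's update
    and subtracted from b's update. In a real protocol each pair would derive r
    from a shared secret; here one RNG stands in for that key agreement.
    """
    dim = len(next(iter(updates.values())))
    masked = {cid: list(vec) for cid, vec in updates.items()}
    for a, b in combinations(sorted(updates), 2):
        for j in range(dim):
            r = rng.randrange(MODULUS)
            masked[a][j] = (masked[a][j] + r) % MODULUS
            masked[b][j] = (masked[b][j] - r) % MODULUS
    return masked


def server_sum(masked: Dict[str, List[int]]) -> List[int]:
    """The server only adds masked vectors; the pairwise masks cancel out."""
    dim = len(next(iter(masked.values())))
    return [sum(vec[j] for vec in masked.values()) % MODULUS for j in range(dim)]


if __name__ == "__main__":
    updates = {"alice": [5, 1, 7], "bob": [2, 8, 0], "carol": [4, 4, 4]}
    masked = masked_updates(updates, random.Random(7))
    print("masked alice:", masked["alice"])  # looks random to the server
    print("sum:", server_sum(masked))         # equals the plain element-wise sum
```

The server learns the aggregate [11, 13, 11] but not any individual client's vector, which is the property SMC-based aggregation is meant to provide.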

On-Device Learning

Machine learning typically relies on centralized data processing and server-based models, whereas federated learning enables on-device learning by training algorithms locally on users' devices, preserving data privacy and reducing latency. On-device federated learning leverages decentralized data sources to update global models efficiently without transferring sensitive information to central servers.

Communication Overhead

Traditional machine learning typically requires centralized data processing, which leads to significant communication overhead when raw data is transmitted to a central server. Federated learning avoids sending raw data by training models locally on edge devices and sharing only model updates, which reduces data transmission in many settings and preserves privacy, although the updates themselves must be exchanged repeatedly.

Homomorphic Encryption

Machine learning involves centralized data processing, which increases privacy risks, whereas federated learning enables decentralized model training on local devices, significantly enhancing data privacy. Homomorphic encryption further secures federated learning by allowing computations, such as aggregating model updates, to be performed on encrypted data, so sensitive information remains confidential throughout the analysis.
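
Additively homomorphic schemes such as Paillier are a common choice here, because multiplying two ciphertexts yields an encryption of the sum of the plaintexts, so a server can aggregate encrypted, integer-encoded updates without decrypting any single one. The toy implementation below uses tiny hard-coded primes and is insecure; it only illustrates the additive property, assumes non-negative plaintexts whose sum stays below `n`, and needs Python 3.8+ for `pow(x, -1, n)`. Real systems should use a vetted cryptography library.

```python
import math
import random
from typing import Tuple

# Toy key material: far too small to be secure, chosen only for illustration.
P, Q = 1009, 1013


def keygen(p: int = P, q: int = Q) -> Tuple[int, Tuple[int, int]]:
    """Return public key n and private key (lam, mu), using g = n + 1."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because g = n + 1 gives L(g^lam mod n^2) = lam mod n
    return n, (lam, mu)


def encrypt(n: int, m: int, rng: random.Random) -> int:
    """c = (1 + n)^m * r^n mod n^2 for a random r coprime to n."""
    n2 = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n: int, priv: Tuple[int, int], c: int) -> int:
    """m = L(c^lam mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return (c1 * c2) % (n * n)


if __name__ == "__main__":
    rng = random.Random(1)
    n, priv = keygen()
    # Integer-encoded client updates (e.g., gradients scaled by 1000 and rounded).
    client_updates = [120, 345, 78]
    ciphertexts = [encrypt(n, m, rng) for m in client_updates]
    total = ciphertexts[0]
    for c in ciphertexts[1:]:
        total = add_encrypted(n, total, c)  # server never decrypts individual values
    print(decrypt(n, priv, total))          # 543
```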

Machine Learning vs Federated Learning Infographic

industrydif.com