Data analysis extracts meaningful patterns and insights from complex datasets, such as pet health records, to inform diagnostics and treatment strategies. Explainable AI enhances transparency by providing interpretable models and explanations that clarify how predictions and decisions are made, for example in pet healthcare applications. Combining data analysis with explainable AI fosters trust and accuracy, enabling practitioners such as veterinarians to make informed decisions based on computational reasoning they can understand.

Table of Comparison

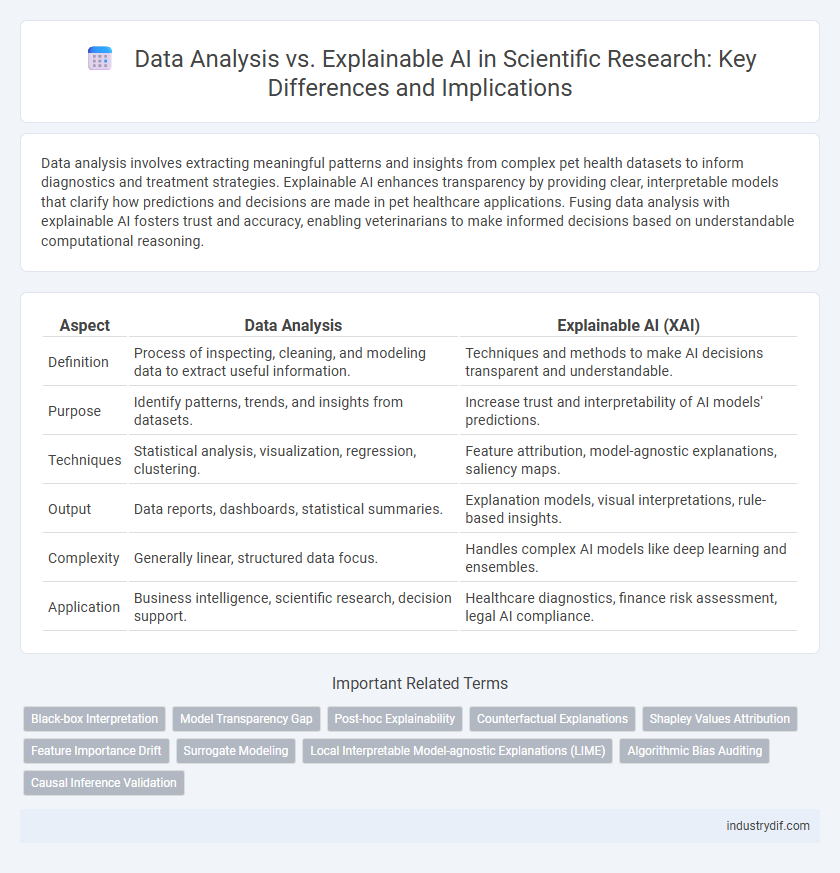

| Aspect | Data Analysis | Explainable AI (XAI) |
|---|---|---|
| Definition | Process of inspecting, cleaning, and modeling data to extract useful information. | Techniques and methods that make AI decisions transparent and understandable. |
| Purpose | Identify patterns, trends, and insights in datasets. | Increase trust in, and interpretability of, AI model predictions. |
| Techniques | Statistical analysis, visualization, regression, clustering. | Feature attribution, model-agnostic explanations, saliency maps. |
| Output | Data reports, dashboards, statistical summaries. | Explanation models, visual interpretations, rule-based insights. |
| Complexity | Often relies on simpler, directly interpretable models (e.g., linear regression) applied to structured data. | Targets complex models such as deep neural networks and ensembles. |
| Application | Business intelligence, scientific research, decision support. | Healthcare diagnostics, financial risk assessment, legal and regulatory compliance of AI systems. |

Introduction to Data Analysis and Explainable AI

Data analysis involves systematically inspecting, cleaning, and modeling data to discover useful information, patterns, and trends that support decision-making. Explainable AI (XAI) enhances traditional data analysis by providing transparency and interpretability in complex machine learning models, making their predictions understandable to humans. Integrating XAI with data analysis improves trust, accountability, and effective application across scientific research and industry sectors.

Defining Data Analysis in Scientific Research

Data analysis in scientific research involves systematically examining raw data using statistical and computational methods to uncover patterns, test hypotheses, and validate experimental results. It transforms complex datasets into meaningful insights that drive evidence-based conclusions and support reproducibility. Unlike explainable AI, which emphasizes interpreting model decisions, data analysis primarily focuses on rigorous data validation and hypothesis testing within empirical frameworks.
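To make hypothesis testing concrete, here is a minimal sketch of a two-sided permutation test, one of many possible tests, comparing the means of two groups. The function name `permutation_test` and the measurements below are hypothetical illustrations, not data from any study; the test estimates how often randomly relabeled data produce a mean difference at least as large as the observed one.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns the observed difference and an estimated p-value: the share
    of random relabelings whose mean difference is at least as extreme.
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # random relabeling under the null hypothesis
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one smoothing keeps the estimate away from an impossible p = 0.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


if __name__ == "__main__":
    # Hypothetical measurements for a treated and a control group.
    treated = [5.1, 5.8, 6.2, 6.0, 5.9, 6.4]
    control = [4.2, 4.8, 4.5, 5.0, 4.4, 4.7]
    diff, p = permutation_test(treated, control)
    print(f"mean difference = {diff:.2f}, p = {p:.4f}")
```

A permutation test makes no normality assumption, which suits small samples; the trade-off is computation, since the p-value is estimated by resampling rather than read from a distribution table.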

What is Explainable AI? Key Concepts and Importance

Explainable AI (XAI) refers to methods and techniques in artificial intelligence that make the decision-making process of AI models transparent and interpretable to humans. Key concepts include model interpretability, transparency, and the ability to provide understandable justifications for AI predictions, which are crucial for trust, accountability, and regulatory compliance. The importance of XAI lies in enhancing user confidence, facilitating ethical AI deployment, and enabling better debugging and improvement of AI systems.

Core Differences Between Data Analysis and Explainable AI

Data analysis involves extracting insights from raw data through statistical techniques and algorithms, emphasizing pattern recognition and predictive modeling. Explainable AI (XAI) focuses on providing transparent, interpretable models that clarify the decision-making processes of artificial intelligence systems. The core difference is that data analysis prioritizes extracting results from data, whereas XAI emphasizes model interpretability and user trust in AI-driven conclusions.

Methodologies: Statistical Techniques vs. AI Models

Data analysis primarily relies on statistical techniques such as regression, hypothesis testing, and clustering to extract meaningful patterns and validate hypotheses from datasets. Explainable AI combines inherently interpretable models, such as decision trees, with post-hoc explanation methods, such as SHAP values and LIME, that shed light on complex neural networks and other machine learning models. Integrating interpretable AI methods enhances the understanding and trustworthiness of automated decision-making systems in scientific research.
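As an illustration of a classical statistical technique whose output is interpretable by construction, the sketch below fits a simple ordinary least squares regression using only the Python standard library. `fit_simple_ols` is a hypothetical helper written for this example; in practice a statistics library would usually be used.

```python
from statistics import mean


def fit_simple_ols(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x.

    The slope is directly interpretable: the expected change in y
    for a one-unit increase in x.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x must vary to estimate a slope")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Coefficient of determination: share of the variance in y explained by x.
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - y_bar) ** 2 for y in ys)
    r_squared = 1 - ss_res / ss_tot if ss_tot else 1.0
    return intercept, slope, r_squared
```

Here the model itself is the explanation: the fitted slope and intercept can be read off directly, which is the kind of transparency XAI methods try to recover for models that lack it.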

Interpretability in Scientific Data Analysis

Interpretability in scientific data analysis enhances the transparency and trustworthiness of complex models by providing clear insights into the decision-making process. Explainable AI (XAI) techniques such as feature importance, rule-based models, and visualization tools enable researchers to understand and validate predictions, which is crucial for hypothesis testing and scientific discovery. Integrating interpretability methods within data analysis pipelines improves model reliability and facilitates the adoption of AI-driven solutions in research environments.
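Feature importance can be computed in a model-agnostic way by permutation: shuffle one feature's values and measure how much the model's error grows. The sketch below assumes a regression model exposed as a plain `predict(row)` function and rows stored as lists; `permutation_importance` here is a hypothetical standalone helper, not the scikit-learn function of the same name.

```python
import random


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, rows, targets, n_repeats=20, seed=0):
    """Model-agnostic permutation importance.

    For each feature, shuffle its column, re-score the model, and record
    the average increase in mean squared error. Larger means more important.
    """
    rng = random.Random(seed)
    baseline = mean_squared_error(targets, [predict(r) for r in rows])
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the target
            permuted = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            score = mean_squared_error(targets, [predict(r) for r in permuted])
            increases.append(score - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


if __name__ == "__main__":
    # Hypothetical "trained" model: strong on x0, weak on x1, ignores x2.
    def model(row):
        return 3.0 * row[0] + 0.5 * row[1]

    data_rng = random.Random(42)
    rows = [[data_rng.random() for _ in range(3)] for _ in range(200)]
    targets = [model(r) for r in rows]
    print(permutation_importance(model, rows, targets))
```

Because the method only calls `predict`, it works the same way for a linear model, an ensemble, or a neural network.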

Transparency and Trust: Explainable AI in Science

Explainable AI enhances transparency in scientific data analysis by providing interpretable models that reveal underlying decision-making processes. This clarity fosters greater trust among researchers, enabling validation, reproducibility, and collaborative advancements. Transparent AI systems bridge the gap between complex algorithms and scientific understanding, which is crucial for reliable and ethical scientific discovery.

Advantages and Limitations of Data Analysis

Data analysis offers robust capabilities for discovering patterns and insights from large datasets through statistical methods and machine learning algorithms, enhancing decision-making accuracy. However, its limitations include potential biases in data, lack of transparency, and difficulty explaining complex model decisions to stakeholders. Unlike Explainable AI, which prioritizes model interpretability, traditional data analysis may struggle to provide clear rationale behind predictive outcomes.

Challenges of Implementing Explainable AI

Implementing Explainable AI (XAI) faces significant challenges, including the complexity of interpreting black-box models such as deep neural networks and the trade-off between model accuracy and transparency. Data analysts must navigate issues related to the lack of standardized metrics for explainability and the difficulty in aligning model explanations with human cognitive processes. Furthermore, ensuring regulatory compliance, maintaining user trust, and integrating domain-specific knowledge complicate the practical deployment of XAI systems in scientific research.

Future Trends: Integrating Data Analysis and Explainable AI

Future trends in integrating data analysis with explainable AI emphasize the development of hybrid models that enhance transparency and interpretability while maintaining predictive accuracy. Advances in algorithms aim to provide detailed insights into complex datasets, facilitating more informed decision-making across scientific disciplines. Emerging standards and frameworks will drive the adoption of explainable methods embedded within data analysis pipelines, promoting trust and accountability in AI-driven research.

Related Important Terms

Black-box Interpretation

Black-box interpretation in Explainable AI (XAI) aims to make complex, opaque models like deep neural networks or ensemble methods more understandable without sacrificing performance, using techniques such as SHAP, LIME, and counterfactual explanations. Data analysis workflows often rely on transparent, statistical models, but integrating black-box interpretation methods enhances trust and insight by revealing feature importance, model behavior, and decision rationale behind predictive outcomes.
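SHAP, LIME, and counterfactuals are not the only black-box interpretation tools. Partial dependence, a further model-agnostic technique not named above, reveals model behavior by fixing one feature at a series of values and averaging the model's output over the data. The sketch assumes a `predict(row)` callable and list-based rows; `partial_dependence` is a hypothetical helper.

```python
def partial_dependence(predict, rows, feature, grid):
    """Average model output as one feature is forced to each grid value.

    Every other feature keeps its observed values, so the curve shows the
    model's average response to `feature`, not a causal effect.
    """
    curve = []
    for value in grid:
        outputs = []
        for row in rows:
            modified = list(row)  # copy so the original data are untouched
            modified[feature] = value
            outputs.append(predict(modified))
        curve.append(sum(outputs) / len(outputs))
    return curve


if __name__ == "__main__":
    # Hypothetical black box with an interaction between x0 and x1.
    def black_box(row):
        return 2 * row[0] + row[0] * row[1]

    print(partial_dependence(black_box, [[0, 1], [0, 3]], feature=0, grid=[0, 1, 2]))
```

Because the curve averages over the observed values of the other features, it can hide interactions that affect subgroups differently.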

Model Transparency Gap

Data analysis often relies on traditional statistical methods that offer clear interpretability, whereas Explainable AI (XAI) aims to bridge the model transparency gap by providing insights into complex machine learning algorithms. This transparency gap highlights the challenge of understanding opaque AI models, necessitating advanced techniques to elucidate decision-making processes and improve trustworthiness in scientific applications.

Post-hoc Explainability

Post-hoc explainability techniques in Explainable AI (XAI) provide interpretability by generating human-understandable explanations after model training, contrasting with traditional data analysis that prioritizes direct statistical inference and model transparency. Methods such as SHAP values, LIME, and counterfactual explanations enable insights into complex black-box models, enhancing trust and decision-making in scientific research.

Counterfactual Explanations

Counterfactual explanations provide interpretable insights by showing how minimal changes to input data would alter an AI model's prediction, bridging the gap between data analysis and explainable AI. This approach enhances model transparency and supports decision-making by revealing which input changes would flip a given decision; note that a counterfactual describes the model's behavior, not necessarily causal relationships in the underlying data.
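A counterfactual search can be sketched as follows, under simplifying assumptions: only one feature may change, each feature moves in analyst-chosen increments (`steps`, hypothetical), and "minimal" means the fewest increments. Real counterfactual methods typically optimize a distance over several features and add plausibility constraints, which this sketch omits; for example, it may propose a negative debt.

```python
def single_feature_counterfactual(predict, instance, steps, max_steps=50):
    """Search for the smallest single-feature change that flips a prediction.

    `steps[j]` is the increment tried for feature j. Returns
    (feature_index, new_value), or None if no flip is found within
    `max_steps` increments in either direction.
    """
    original = predict(instance)
    best = None  # (number of increments, feature index, new value)
    for j, step in enumerate(steps):
        for k in range(1, max_steps + 1):
            hit = None
            for direction in (1, -1):
                candidate = list(instance)
                candidate[j] = instance[j] + direction * k * step
                if predict(candidate) != original:
                    hit = candidate[j]
                    break
            if hit is not None:
                # The first k that flips feature j is minimal for that feature.
                if best is None or k < best[0]:
                    best = (k, j, hit)
                break
    return None if best is None else (best[1], best[2])


if __name__ == "__main__":
    # Hypothetical approval rule: income and debt in thousands.
    def approve(row):
        return int(0.5 * row[0] - 0.3 * row[1] > 10)

    print(single_feature_counterfactual(approve, [18, 2], steps=[1, 1]))
```

For the hypothetical applicant above, the answer reads as an explanation: "raising income from 18 to 22 would change the decision", which is more actionable than a raw model score.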

Shapley Values Attribution

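Shapley values attribution, rooted in cooperative game theory, quantifies the contribution of each feature to an individual prediction of a complex model, enhancing transparency in explainable AI frameworks. Because the attributions satisfy axioms such as efficiency (they sum to the difference between the prediction and a baseline output) and symmetry, they provide a principled feature importance measure that supports model validation and decision-making in scientific research. Exact computation grows exponentially with the number of features, so practical tools usually rely on approximations or model-specific algorithms.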
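For a handful of features, Shapley values can be computed exactly by enumerating every coalition $S$ of the other features and averaging each feature's marginal contribution with weight $\frac{|S|!\,(n-|S|-1)!}{n!}$. The sketch below assumes that "absent" features take their values from a chosen `baseline` row, which is one common convention among several; `exact_shapley` is a hypothetical helper, not a library API.

```python
from itertools import combinations
from math import factorial


def exact_shapley(predict, instance, baseline):
    """Exact Shapley values for one prediction by enumerating all coalitions.

    The coalition value v(S) is the model output when features in S take
    the instance's values and the rest take the baseline's values. Cost
    grows as 2^n, so this only suits a small number of features n.
    """
    n = len(instance)

    def value(coalition):
        row = [instance[j] if j in coalition else baseline[j] for j in range(n)]
        return predict(row)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))  # marginal contribution
        phis.append(phi)
    return phis


if __name__ == "__main__":
    # Hypothetical model with an interaction between x0 and x2.
    def model(row):
        return 2 * row[0] + 3 * row[1] + row[0] * row[2]

    print(exact_shapley(model, instance=[1, 2, 3], baseline=[0, 0, 0]))
```

In this example the interaction term `x0 * x2` contributes 3 and is split equally between features 0 and 2, and the three attributions add up to the model output minus the baseline output, as the efficiency axiom requires.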

Feature Importance Drift

Feature importance drift in data analysis refers to the change in the significance of variables over time, impacting the reliability of predictive models. Explainable AI frameworks address this challenge by providing transparent methods to detect and interpret shifts in feature importance, enhancing model trustworthiness and adaptability.
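One simple way to watch for drift is to compute an importance score per feature in two time windows and flag large changes. The sketch below uses absolute Pearson correlation with the target as a deliberately simple stand-in for importance (an assumption; permutation or Shapley-based importances could be swapped in), and the default `threshold` of 0.2 is an arbitrary illustrative value. Both function names are hypothetical.

```python
from statistics import mean


def abs_correlation(xs, ys):
    """Absolute Pearson correlation, used here as a simple importance proxy."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant column carries no signal
    return abs(sxy) / (sxx * syy) ** 0.5


def importance_drift(window_old, window_new, threshold=0.2):
    """Compare per-feature importance between two time windows.

    Each window is a (rows, targets) pair. Returns {feature_index: (old, new)}
    for features whose importance moved by more than `threshold`.
    """
    def importances(rows, targets):
        return [abs_correlation([r[j] for r in rows], targets) for j in range(len(rows[0]))]

    old = importances(*window_old)
    new = importances(*window_new)
    return {j: (o, n) for j, (o, n) in enumerate(zip(old, new)) if abs(n - o) > threshold}
```

In a monitoring setting the two windows might be last quarter's and this quarter's data; a flagged feature is a prompt to investigate and possibly retrain, not proof that the model is wrong.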

Surrogate Modeling

Surrogate modeling in Explainable AI provides interpretable approximations of complex machine learning models, such as a shallow decision tree or a linear model trained to mimic a black box's predictions, enabling a clearer understanding of those predictions. These models enhance transparency by simplifying high-dimensional data patterns, facilitating trust and actionable insights in scientific research, provided the surrogate's fidelity (its agreement with the original model) is checked.
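A global surrogate can be as small as a single threshold rule. The sketch below fits such a rule to a black-box classifier's own predictions (not the true labels) and reports fidelity, the share of rows on which the two agree. `fit_stump_surrogate` and the `predict(row)` interface returning 0 or 1 are assumptions for this example; practical surrogates are often shallow decision trees.

```python
def fit_stump_surrogate(predict, rows):
    """Fit a one-rule surrogate to a black-box classifier's predictions.

    The rule predicts positive when (row[j] > t) == positive_if_above.
    Returns (feature, threshold, positive_if_above, fidelity), where
    fidelity is the share of rows on which surrogate and black box agree.
    """
    labels = [predict(r) for r in rows]  # mimic the model, not the ground truth
    best = None
    for j in range(len(rows[0])):
        for t in sorted({r[j] for r in rows}):
            for positive_if_above in (True, False):
                agree = sum(
                    ((r[j] > t) == positive_if_above) == bool(y)
                    for r, y in zip(rows, labels)
                )
                fidelity = agree / len(rows)
                if best is None or fidelity > best[3]:
                    best = (j, t, positive_if_above, fidelity)
    return best


if __name__ == "__main__":
    # Hypothetical black box: mostly driven by feature 0.
    def black_box(row):
        return int(row[0] + 0.01 * row[1] > 0.5)

    rows = [[0.1, 0], [0.4, 1], [0.6, 2], [0.9, 3]]
    print(fit_stump_surrogate(black_box, rows))
```

A fidelity well below 1.0 signals that the simple rule does not capture the black box, and its explanation should not be trusted for those rows.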

Local Interpretable Model-agnostic Explanations (LIME)

Local Interpretable Model-agnostic Explanations (LIME) enhances data analysis by providing transparent, interpretable insights into black-box machine learning models, enabling users to understand predictions at a local level without needing access to the model's internals. LIME fits a simple, weighted surrogate model to perturbed samples around a single instance, and this model-agnostic approach facilitates the evaluation of individual data points, improving trust and accountability in AI-driven decision-making processes across diverse scientific applications.
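The core of LIME can be sketched in a few dozen lines: perturb one instance, query the black box, weight samples by proximity, and fit a weighted linear model whose coefficients serve as the local explanation. This is a simplified, LIME-style sketch with hypothetical names (`lime_explain`, `solve_linear_system`) and illustrative defaults; the official `lime` package differs in how it samples, weights, and regularizes.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lime_explain(predict, instance, n_samples=500, scale=0.5, kernel_width=0.75, seed=0):
    """LIME-style local explanation for a numeric black-box model.

    Perturbs `instance` with Gaussian noise, weights each sample by an
    exponential kernel on its distance to the instance, and fits a weighted
    linear model. Returns [intercept, coef_0, coef_1, ...].
    """
    rng = random.Random(seed)
    n = len(instance)
    xtwx = [[0.0] * (n + 1) for _ in range(n + 1)]  # accumulates X^T W X
    xtwy = [0.0] * (n + 1)                          # accumulates X^T W y
    for _ in range(n_samples):
        z = [v + rng.gauss(0.0, scale) for v in instance]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, instance))
        w = math.exp(-dist2 / kernel_width ** 2)  # nearby samples count more
        features = [1.0] + z  # leading 1.0 for the intercept
        y = predict(z)
        for i in range(n + 1):
            xtwy[i] += w * features[i] * y
            for j in range(n + 1):
                xtwx[i][j] += w * features[i] * features[j]
    return solve_linear_system(xtwx, xtwy)


if __name__ == "__main__":
    # Hypothetical nonlinear black box; near x0 = 1 its local slope in x0 is about 2.
    print(lime_explain(lambda z: z[0] ** 2, [1.0, 0.0]))
```

The coefficients describe the model only in the neighborhood set by `scale` and `kernel_width`; changing those values can change the explanation, which is a known sensitivity of LIME-style methods.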

Algorithmic Bias Auditing

Data analysis techniques are crucial for identifying patterns and correlations in large datasets, while Explainable AI provides transparency into algorithmic decision-making processes essential for effective algorithmic bias auditing. Algorithmic bias auditing combines statistical data analysis methods with interpretable AI models to detect, quantify, and mitigate biases, ensuring fairness and accountability in automated systems.
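A basic audit step is to compare positive-prediction rates across groups. The sketch below computes the demographic parity difference and the disparate impact ratio (lowest group rate divided by highest); these are two common fairness metrics among many, and the function names are hypothetical. A ratio below 0.8 is often flagged under the "four-fifths rule" heuristic.

```python
def selection_rates(predictions, groups):
    """Share of positive predictions within each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred else 0)
    return {g: positives[g] / totals[g] for g in totals}


def audit_demographic_parity(predictions, groups):
    """Per-group selection rates, the largest rate gap, and the ratio of
    the lowest to the highest rate (disparate impact ratio)."""
    rates = selection_rates(predictions, groups)
    lo, hi = min(rates.values()), max(rates.values())
    return {
        "rates": rates,
        "parity_difference": hi - lo,
        "disparate_impact_ratio": lo / hi if hi > 0 else 1.0,
    }


if __name__ == "__main__":
    # Hypothetical model decisions for two groups of four applicants.
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A"] * 4 + ["B"] * 4
    print(audit_demographic_parity(preds, groups))
```

These metrics only detect a disparity; explanation methods such as the feature attributions above are then used to trace which inputs drive it.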

Causal Inference Validation

Data analysis methods often struggle to establish robust causal inference without transparent model mechanisms, whereas Explainable AI frameworks can support causal inference validation by providing interpretable insights into model decisions and the data relationships a model has learned. This transparency can help surface candidate confounding variables and inform a more careful assessment of causal pathways in complex scientific datasets, although explanations describe associations learned by the model, so causal conclusions still depend on study design and explicit assumptions.
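Explanations can point to a candidate confounder, but checking it is a separate analysis. The sketch below contrasts a naive risk difference with a stratum-adjusted one (standardization over the strata). The counts are invented to reproduce Simpson's paradox, and the adjusted figure is causal only under the assumption that the stratum variable captures all confounding. `make_records` and `naive_and_adjusted_effect` are hypothetical helpers.

```python
from collections import defaultdict


def make_records(stratum, treated, n, n_recovered):
    """Hypothetical helper: n units, the first n_recovered with outcome 1."""
    return [(stratum, treated, 1 if i < n_recovered else 0) for i in range(n)]


def risk(records):
    """Share of records with outcome 1."""
    return sum(outcome for _, _, outcome in records) / len(records)


def naive_and_adjusted_effect(records):
    """records: (stratum, treated, outcome) tuples with outcome in {0, 1}.

    Returns the naive risk difference and a stratum-adjusted risk difference
    that weights each stratum's effect by the stratum's share of the data.
    """
    treated = [r for r in records if r[1]]
    control = [r for r in records if not r[1]]
    naive = risk(treated) - risk(control)

    by_stratum = defaultdict(list)
    for r in records:
        by_stratum[r[0]].append(r)
    adjusted = 0.0
    for rows in by_stratum.values():
        t = [r for r in rows if r[1]]
        c = [r for r in rows if not r[1]]
        if not t or not c:
            raise ValueError("each stratum needs treated and untreated units")
        adjusted += len(rows) / len(records) * (risk(t) - risk(c))
    return naive, adjusted


if __name__ == "__main__":
    # Invented counts: severe cases are treated more often and recover less.
    records = (
        make_records("mild", True, 10, 9) + make_records("mild", False, 90, 72)
        + make_records("severe", True, 90, 45) + make_records("severe", False, 10, 4)
    )
    naive, adjusted = naive_and_adjusted_effect(records)
    print(f"naive: {naive:+.2f}, adjusted: {adjusted:+.2f}")
```

In this invented data the naive comparison makes treatment look harmful (about -0.22), while adjusting for severity shows a benefit of about 0.1 within each stratum.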

Data Analysis vs Explainable AI Infographic

industrydif.com