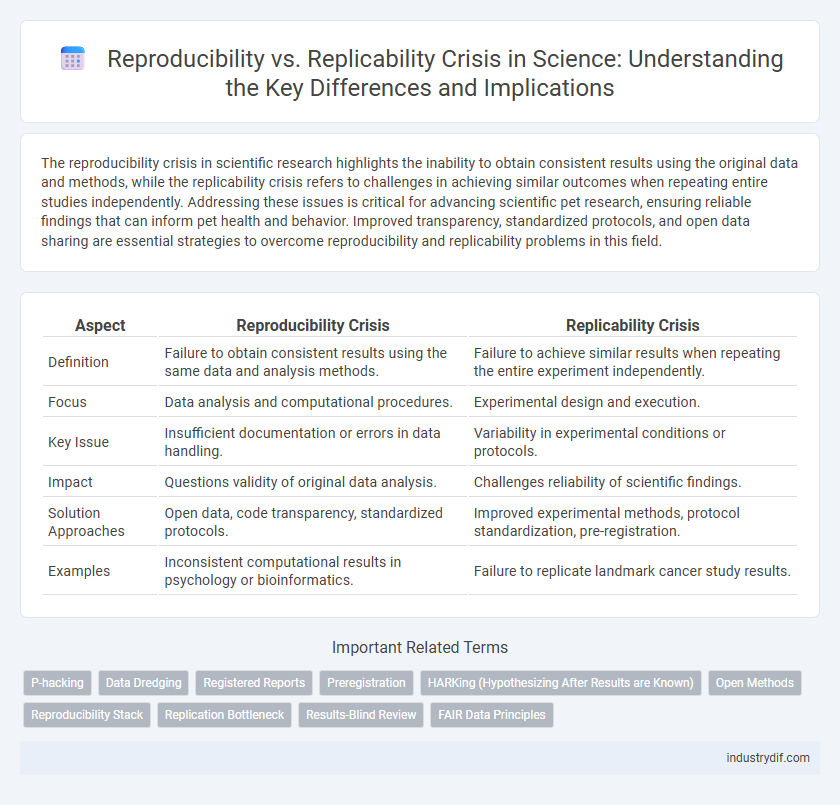

The reproducibility crisis in scientific research refers to the failure to obtain consistent results when the original data are reanalyzed with the original methods, while the replicability crisis refers to the failure to achieve similar outcomes when entire studies are repeated independently. Addressing both is critical for advancing scientific research and ensuring that findings are reliable. Improved transparency, standardized protocols, and open data sharing are essential strategies for overcoming reproducibility and replicability problems.

Table of Comparison

| Aspect | Reproducibility Crisis | Replicability Crisis |
|---|---|---|
| Definition | Failure to obtain consistent results using the same data and analysis methods. | Failure to achieve similar results when repeating the entire experiment independently. |
| Focus | Data analysis and computational procedures. | Experimental design and execution. |
| Key Issue | Insufficient documentation or errors in data handling. | Variability in experimental conditions or protocols. |
| Impact | Questions validity of original data analysis. | Challenges reliability of scientific findings. |
| Solution Approaches | Open data, code transparency, standardized protocols. | Improved experimental methods, protocol standardization, pre-registration. |
| Examples | Inconsistent computational results in psychology or bioinformatics. | Failure to replicate landmark cancer study results. |

Defining Reproducibility and Replicability in Science

Reproducibility in science refers to the ability to obtain consistent results using the same data and analytical methods as the original study, ensuring reliability of findings. Replicability involves conducting new experiments or studies under similar conditions to verify whether the original results can be independently observed. Clear definitions of these concepts are essential for addressing the reproducibility vs replicability crisis that challenges scientific credibility and progress.
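
The distinction can be made concrete with a small simulation. The sketch below is illustrative only: `run_study` and `analyze` are hypothetical names, the data are simulated, and the true effect of 0.5 is an assumption chosen for the example.

```python
import random
import statistics

def run_study(seed, n=200, true_effect=0.5):
    """Simulate one study: draw n noisy measurements around an assumed true effect."""
    rng = random.Random(seed)
    return [rng.gauss(true_effect, 1.0) for _ in range(n)]

def analyze(data):
    """The 'analysis method' of this toy study: the sample mean."""
    return statistics.fmean(data)

original_data = run_study(seed=1)
original_result = analyze(original_data)

# Reproduction: same data, same analysis. The result should match exactly.
reproduced_result = analyze(original_data)

# Replication: independently collected data (a different seed), same analysis.
# The result should be similar, but only within sampling error.
replicated_result = analyze(run_study(seed=2))

print(f"original:   {original_result:.3f}")
print(f"reproduced: {reproduced_result:.3f}")
print(f"replicated: {replicated_result:.3f}")
```

A reproduction that differs at all from the original points to a problem in data handling or code, whereas a replication is expected to differ slightly and is judged on whether the difference is larger than chance would explain.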

Historical Perspective on the Reproducibility Crisis

The reproducibility crisis gained significant attention in the early 2010s when multiple high-profile studies in psychology and biomedical sciences failed replication attempts, exposing systemic issues in research methodology. Historical analyses trace these concerns back to earlier decades, highlighting inadequate reporting standards, publication bias, and statistical misapplications as persistent contributors to reproducibility challenges. Landmark meta-research initiatives, such as the Open Science Collaboration's 2015 replication project, have since provided empirical evidence quantifying the crisis and catalyzed widespread reforms in scientific publishing and experimental protocols.

Causes of Irreproducibility in Scientific Research

Irreproducibility in scientific research often arises from factors such as insufficient methodological transparency, variability in experimental protocols, and selective reporting of results. Inconsistent data collection procedures and lack of standardized materials contribute significantly to challenges in reproducing studies. Furthermore, statistical errors and publication bias exacerbate the reproducibility crisis by obscuring true scientific findings.

Impact of the Crisis on Scientific Credibility

The reproducibility and replicability crisis has significantly undermined scientific credibility by exposing the inability to consistently verify experimental results across independent studies. This crisis erodes trust in published findings, leading to skepticism among researchers, policymakers, and the public, which can delay scientific progress and reduce funding opportunities. Addressing these challenges requires systemic reforms in research methodology, data transparency, and publication standards to restore confidence in scientific outputs.

Statistical Methods and Data Transparency

Statistical methods directly affect reproducibility and replicability because they determine the accuracy of hypothesis testing and effect size estimation. Transparency in data sharing and analysis protocols enhances reproducibility by allowing independent verification of results and by reducing selective reporting bias. Implementing standardized statistical workflows and open data repositories is essential to address methodological inconsistencies and improve scientific integrity.
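
As one illustration of a standardized, shareable analysis step, the following minimal sketch computes a standardized effect size (Cohen's d with a pooled standard deviation). The function name and the sample values are assumptions made for the example and are not taken from any study.

```python
import math
import statistics

def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(group_b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (statistics.fmean(group_a) - statistics.fmean(group_b)) / pooled_sd

# Made-up measurements, used only to exercise the function.
treatment = [2, 4, 6]
control = [1, 3, 5]
effect = cohens_d(treatment, control)
print(f"Cohen's d = {effect:.2f}")
```

Publishing a function like this alongside the data lets a reader confirm exactly which variant of the statistic was reported, which is often left ambiguous in a methods section.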

Role of Open Science Initiatives

Open Science initiatives enhance research transparency by promoting data sharing, preregistration, and open peer review, directly addressing the reproducibility and replicability crisis in science. By enabling broader access to research materials and methodologies, these initiatives facilitate independent verification and replication of findings across disciplines. Such increased openness improves methodological rigor and reduces biases, fostering trust and accelerating scientific progress.

Best Practices for Enhancing Replicability

Implementing rigorous pre-registration of study protocols and hypotheses reduces researcher bias and increases transparency in scientific research. Utilizing standardized data collection and analysis methods ensures consistency across different research teams and experimental setups. Sharing raw data and code publicly fosters collaborative verification and facilitates the identification of errors, thereby enhancing the overall replicability of scientific findings.

Technological Tools for Improved Reproducibility

Technological tools such as version control systems, workflow automation platforms, and containerization technologies significantly enhance reproducibility by enabling precise tracking of data, code, and computational environments. Open-source platforms like GitHub and Jupyter Notebooks facilitate transparent sharing and collaborative verification, while containerization solutions like Docker and Singularity ensure consistent execution across diverse computational settings. Integration of these tools within research workflows systematically addresses variability, thereby mitigating the reproducibility crisis in scientific investigations.
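
A lightweight complement to these tools is to record, next to every result, a checksum of the input data and the software environment that produced it. The sketch below uses only the Python standard library; the function name `provenance_record`, the file name, and the chosen fields are illustrative assumptions rather than an established standard.

```python
import hashlib
import json
import platform
import tempfile
from pathlib import Path

def provenance_record(data_path):
    """Collect a checksum of the input data plus basic environment details."""
    path = Path(data_path)
    return {
        "data_file": path.name,
        "data_sha256": hashlib.sha256(path.read_bytes()).hexdigest(),
        "python_version": platform.python_version(),
        "platform": platform.platform(),
    }

# Demonstration with a throwaway file containing made-up values.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "measurements.csv"
    sample.write_bytes(b"subject,score\n1,0.42\n2,0.57\n")
    record = provenance_record(sample)

print(json.dumps(record, indent=2))
```

If a later rerun yields a different checksum, the input data changed; if the checksum matches but the results differ, the environment or the code is the more likely cause.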

Policy Responses and Funding Agency Requirements

Policy responses to the reproducibility and replicability crisis emphasize stricter guidelines for experimental design, data sharing, and pre-registration of studies to enhance transparency and accountability in scientific research. Funding agencies increasingly mandate open access to raw data and methodological protocols, and encourage replication studies as part of grant requirements, to ensure that findings are robust and reliable. These measures aim to improve research quality by minimizing publication bias and promoting validation efforts across diverse scientific disciplines.

Future Directions in Addressing the Crisis

Future directions in addressing the reproducibility and replicability crisis prioritize the development of standardized protocols, enhanced transparency in data collection, and open sharing of methodologies and code to facilitate verification. Implementing robust pre-registration of studies and promoting interdisciplinary collaboration strengthen methodological rigor and reduce biases. Advances in automated data analysis tools and incentivizing replication studies further support the establishment of reliable scientific findings.

Related Important Terms

P-hacking

P-hacking undermines scientific integrity by manipulating data analysis to achieve statistically significant p-values, contributing significantly to the reproducibility and replicability crisis in research. This practice inflates false positives, eroding trust in published findings and complicating efforts to validate experimental results across independent studies.

Data Dredging

Data dredging, also known as p-hacking, involves extensive searching through data to find statistically significant patterns without pre-specified hypotheses, contributing significantly to the reproducibility and replicability crisis in scientific research. This practice inflates false positives and undermines the validity of findings, making it difficult to replicate studies or reproduce consistent results across independent experiments.
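
The inflation of false positives can be shown with a small simulation. The sketch below is a simplified illustration under stated assumptions: every outcome is pure noise with known unit variance, a z-test stands in for whatever test a real study would use, and the function names are hypothetical. With 20 independent outcomes tested at a 0.05 threshold, the chance of at least one false positive is 1 - 0.95^20, roughly 64%.

```python
import math
import random
from statistics import NormalDist, fmean

def p_value_two_groups(a, b):
    """Two-sided z-test p-value for a difference in means, assuming unit variance."""
    z = (fmean(a) - fmean(b)) / math.sqrt(1 / len(a) + 1 / len(b))
    return 2 * (1 - NormalDist().cdf(abs(z)))

def any_significant(rng, n_outcomes, n=30, alpha=0.05):
    """Simulate one study with no true effects; report whether any outcome has p < alpha."""
    for _ in range(n_outcomes):
        a = [rng.gauss(0, 1) for _ in range(n)]
        b = [rng.gauss(0, 1) for _ in range(n)]  # same distribution: no true effect
        if p_value_two_groups(a, b) < alpha:
            return True
    return False

rng = random.Random(0)  # fixed seed so the simulation itself is reproducible
n_studies = 1000
one_test = sum(any_significant(rng, 1) for _ in range(n_studies)) / n_studies
twenty_tests = sum(any_significant(rng, 20) for _ in range(n_studies)) / n_studies
expected = 1 - 0.95 ** 20  # analytic rate for 20 independent tests

print(f"false-positive rate, 1 outcome tested:   {one_test:.2f}")
print(f"false-positive rate, 20 outcomes tested: {twenty_tests:.2f}")
print(f"analytic expectation for 20 outcomes:    {expected:.2f}")
```

A study that tests one pre-specified outcome stays near the nominal 5% error rate, while a study that reports whichever of 20 outcomes happens to cross the threshold is wrong far more often than its p-value suggests.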

Registered Reports

Registered Reports are a publication format in which the study protocol is peer reviewed before data are collected, reducing biases that contribute to the reproducibility and replicability crisis. This approach increases transparency and reliability because the journal commits to publishing the results regardless of outcome, thereby addressing publication bias and improving the overall trustworthiness of research findings.

Preregistration

Preregistration enhances scientific rigor by requiring researchers to specify hypotheses, methods, and analysis plans before data collection, thereby reducing biases associated with data dredging and selective reporting. This practice addresses the reproducibility and replicability crisis by increasing transparency and enabling independent verification of results.

HARKing (Hypothesizing After Results are Known)

HARKing (Hypothesizing After Results are Known) undermines scientific integrity by generating hypotheses based on data already observed, leading to inflated false-positive rates and biased findings. This practice significantly contributes to the reproducibility and replicability crisis by distorting the distinction between exploratory and confirmatory research phases.

Open Methods

Open methods enhance scientific transparency and data accessibility, directly addressing the reproducibility crisis by allowing independent verification of experimental procedures and results. Sharing detailed protocols and raw data fosters replicability, enabling researchers to accurately duplicate studies and validate findings across diverse settings.

Reproducibility Stack

The Reproducibility Stack encompasses the integrated tools, protocols, and data management systems essential for verifying scientific results by enabling other researchers to precisely reproduce computational analyses and experimental conditions. Enhancing this stack strengthens transparency, data integrity, and methodological consistency, addressing critical issues in the reproducibility crisis within scientific research.

Replication Bottleneck

The replication bottleneck hampers scientific progress by limiting how many findings can be verified across laboratories; it stems from resource constraints, methodological variability, and inadequate data sharing practices. Enhancing standardized protocols, incentivizing replication studies, and improving open-access data repositories are essential to overcoming this bottleneck and fostering robust scientific validation.

Results-Blind Review

Results-blind review enhances scientific rigor by evaluating research based solely on methodology and study design, reducing publication bias and addressing issues in the reproducibility and replicability crisis. This approach promotes transparent reporting standards and encourages the verification of findings through replication efforts.

FAIR Data Principles

The reproducibility and replicability crisis highlights the need for transparent research data management. The FAIR Data Principles (Findability, Accessibility, Interoperability, and Reusability) serve as guidelines for making datasets easier to reproduce from and to validate. Implementing FAIR standards ensures that scientific datasets are systematically organized and easily shareable, reducing barriers to replication and fostering greater trust in empirical findings across disciplines.
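
In practice, a dataset's metadata can be checked automatically before it is shared. The sketch below is a deliberately minimal illustration: the field names, the one-field-per-principle mapping, and the placeholder identifier and URL are all assumptions made for the example, and a real FAIR assessment covers considerably more than field presence.

```python
# Hypothetical field names: one illustrative metadata field per FAIR principle.
REQUIRED_FIELDS = {
    "identifier": "Findable: a persistent, unique identifier",
    "access_url": "Accessible: where and how the data can be retrieved",
    "format": "Interoperable: an open, documented file format",
    "license": "Reusable: explicit terms of reuse",
}

def missing_fair_fields(metadata):
    """Return the required fields that are absent or empty in a metadata record."""
    return [field for field in REQUIRED_FIELDS if not metadata.get(field)]

record = {
    "identifier": "doi:10.0000/placeholder",  # placeholder, not a real DOI
    "access_url": "https://example.org/datasets/placeholder",  # placeholder URL
    "format": "text/csv",
    "license": "",  # left empty on purpose to show a failed check
}

missing = missing_fair_fields(record)
for field in missing:
    print(f"missing {field!r} -> {REQUIRED_FIELDS[field]}")
```

Running a check like this as part of a submission or deposit workflow catches the most common omission, an unstated license, before the dataset is published.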

Reproducibility vs Replicability Crisis Infographic

industrydif.com
