Traditional statistics relies on fixed parameters and frequency-based inference, focusing on hypothesis testing and p-values to draw conclusions from data. Bayesian statistics incorporates prior knowledge through probability distributions, updating beliefs as new data becomes available, offering a more flexible and intuitive framework for scientific analysis. This approach enables researchers to quantify uncertainty and make probabilistic predictions, enhancing decision-making processes in scientific studies.

Table of Comparison

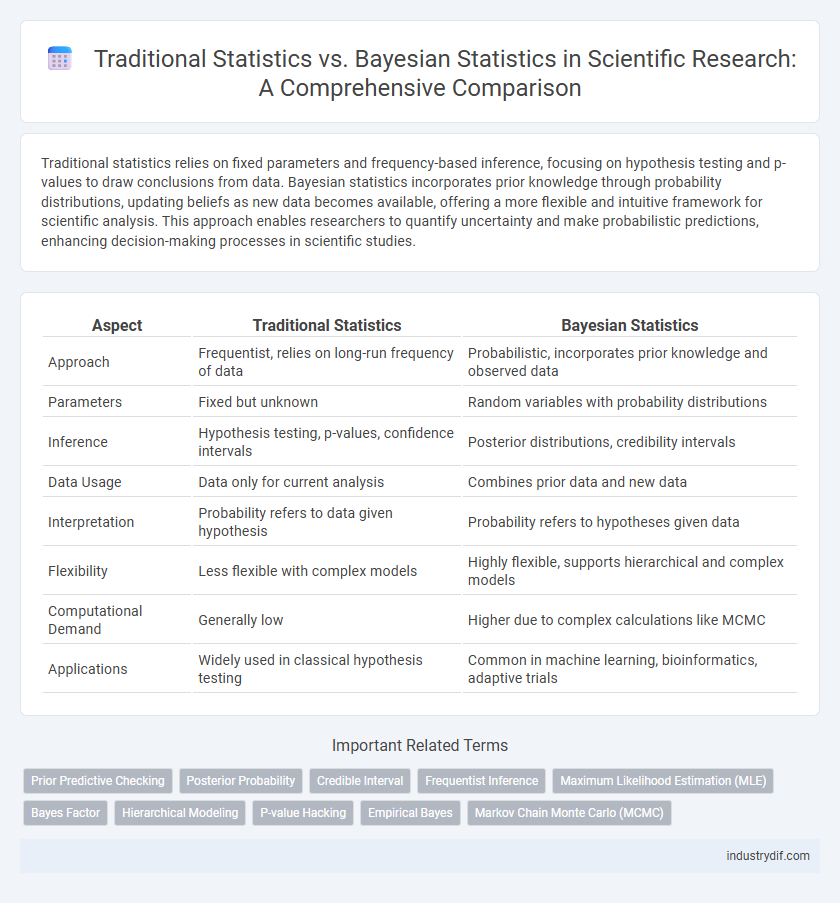

| Aspect | Traditional Statistics | Bayesian Statistics |

|---|---|---|

| Approach | Frequentist, relies on long-run frequency of data | Probabilistic, incorporates prior knowledge and observed data |

| Parameters | Fixed but unknown | Random variables with probability distributions |

| Inference | Hypothesis testing, p-values, confidence intervals | Posterior distributions, credibility intervals |

| Data Usage | Data only for current analysis | Combines prior data and new data |

| Interpretation | Probability refers to data given hypothesis | Probability refers to hypotheses given data |

| Flexibility | Less flexible with complex models | Highly flexible, supports hierarchical and complex models |

| Computational Demand | Generally low | Higher due to complex calculations like MCMC |

| Applications | Widely used in classical hypothesis testing | Common in machine learning, bioinformatics, adaptive trials |

Introduction to Traditional and Bayesian Statistics

Traditional statistics relies on frequentist methods using fixed parameters and hypothesis testing based on long-run frequencies, whereas Bayesian statistics incorporates prior knowledge through probability distributions, updating beliefs with new data. Bayesian methods provide a probabilistic framework, allowing for direct probability statements about parameters and models, contrasting with the non-probabilistic interpretation in traditional approaches. The choice between traditional and Bayesian statistics impacts data analysis, inference, and decision-making strategies in scientific research.

Fundamental Principles of Traditional Statistics

Traditional statistics is grounded in the frequentist paradigm, which relies on long-run frequency properties of estimators and hypothesis tests. It emphasizes point estimates, confidence intervals, and p-values to make inferences based on sample data without incorporating prior information. The approach assumes fixed parameters and analyzes the probability of observing data given these unknown parameters, focusing on objective and repeatable experimental outcomes.

Core Concepts in Bayesian Statistics

Bayesian statistics centers on the use of prior distributions combined with observed data to update the probability of a hypothesis through Bayes' theorem. The concept of posterior distribution is fundamental, representing the revised belief about parameters after incorporating evidence, contrasting with traditional frequentist confidence intervals. Bayesian methods provide a flexible framework for modeling uncertainty, inherently allowing for the incorporation of new data and expert knowledge in statistical inference.

Key Differences Between Frequentist and Bayesian Approaches

Frequentist statistics interprets probability as long-run frequency and relies on fixed parameters, using p-values and confidence intervals to make inferences, whereas Bayesian statistics treats parameters as random variables with probability distributions, updating prior beliefs through observed data to generate posterior distributions. In Frequentist methods, hypothesis tests focus on rejecting null hypotheses without incorporating prior knowledge, while Bayesian approaches explicitly incorporate prior information and provide direct probabilistic interpretations of parameters and hypotheses. This fundamental difference results in Bayesian statistics offering more flexible inference, especially in complex models or limited data scenarios, where Frequentist methods might struggle with interpretability and incorporating external evidence.

Role of Probability in Traditional and Bayesian Frameworks

Traditional statistics interprets probability as the long-run frequency of events, emphasizing fixed parameters and objective inference based on sample data. Bayesian statistics treats probability as a subjective degree of belief, allowing for the incorporation of prior knowledge and updating of parameter estimates via Bayes' theorem. This fundamental distinction shapes hypothesis testing, confidence intervals in traditional methods, and posterior distributions in Bayesian analysis.

Data Interpretation: Confidence Intervals vs. Credible Intervals

Confidence intervals in traditional statistics estimate a range within which the true parameter value is expected to fall with a certain frequency over repeated sampling. Credible intervals in Bayesian statistics provide a direct probabilistic statement about the parameter given the observed data and prior beliefs. This fundamental difference leads to distinct interpretations: confidence intervals relate to long-run frequency properties, while credible intervals represent updated belief distributions conditioned on evidence.

Hypothesis Testing: p-values vs. Posterior Probabilities

Traditional statistics relies on p-values to test hypotheses, measuring the probability of observing data as extreme as the sample under the null hypothesis. Bayesian statistics evaluates hypotheses using posterior probabilities, updating prior beliefs with observed data to directly quantify uncertainty about parameters. Posterior probabilities provide a more intuitive interpretation by expressing the probability of a hypothesis given the data, unlike p-values which only indicate data extremity under the null.

Computational Methods and Algorithmic Requirements

Traditional statistics often relies on analytical solutions and asymptotic approximations, making computational methods straightforward but potentially limited in flexibility for complex models. Bayesian statistics requires intensive computational algorithms like Markov Chain Monte Carlo (MCMC) and variational inference to estimate posterior distributions, demanding significant processing power and sophisticated programming. Advances in high-performance computing and efficient sampling algorithms have made Bayesian methods increasingly accessible for large-scale scientific data analysis.

Applications in Scientific Research and Industry

Traditional statistics relies heavily on fixed parameter estimation and hypothesis testing, commonly used in clinical trials and quality control processes, providing clear decision thresholds. Bayesian statistics incorporates prior knowledge and updates probabilities with new data, making it ideal for adaptive trial designs and real-time risk assessment in industries like finance and manufacturing. The choice between these methods depends on the specific research context, data availability, and the need for flexibility in inference and decision-making.

Current Trends and Future Directions in Statistical Methodology

Current trends in statistical methodology reveal a growing integration of Bayesian statistics within traditional frameworks, emphasizing probabilistic modeling and uncertainty quantification. Advances in computational algorithms like Markov Chain Monte Carlo and variational inference facilitate scalable Bayesian analyses across diverse scientific domains. Future directions prioritize hybrid approaches combining frequentist robustness with Bayesian flexibility to enhance reproducibility and decision-making in complex data environments.

Related Important Terms

Prior Predictive Checking

Prior predictive checking in Bayesian statistics involves generating data from the prior distribution before observing actual data to evaluate model assumptions, contrasting with traditional statistics where model validation primarily relies on frequentist hypothesis tests and confidence intervals. This Bayesian approach enhances model robustness by incorporating prior knowledge, allowing for a more comprehensive assessment of model fit and predictive performance.

Posterior Probability

Traditional statistics relies on fixed parameters and frequency-based interpretations, while Bayesian statistics employs posterior probability to update the likelihood of hypotheses based on observed data and prior beliefs. Posterior probability integrates prior distributions with the likelihood function to provide a probabilistic measure of uncertainty that evolves as new evidence is incorporated.

Credible Interval

Traditional statistics uses confidence intervals to estimate parameter ranges with a specified frequency under repeated sampling, whereas Bayesian statistics employs credible intervals that directly represent the probability of a parameter lying within a given range based on prior information and observed data. Credible intervals provide a more intuitive interpretation in Bayesian inference by quantifying uncertainty in terms of posterior probability distributions.

Frequentist Inference

Frequentist inference in traditional statistics relies on the long-run frequency properties of estimators and hypothesis tests, emphasizing p-values and confidence intervals without incorporating prior knowledge. This approach contrasts with Bayesian statistics, which updates probability distributions based on prior beliefs combined with observed data.

Maximum Likelihood Estimation (MLE)

Maximum Likelihood Estimation (MLE) in traditional statistics aims to find parameter values that maximize the likelihood of observed data under a fixed model, relying solely on the data without incorporating prior knowledge. Bayesian statistics extends MLE by integrating prior distributions with the likelihood, producing posterior distributions that reflect updated beliefs and quantify uncertainty in parameter estimation.

Bayes Factor

Bayes Factor quantifies the strength of evidence for one statistical model over another by comparing their posterior odds, providing a direct measure of support absent in traditional p-value based statistics. Unlike traditional frequentist methods that rely on long-run frequency properties and null hypothesis significance testing, Bayesian analysis incorporates prior knowledge and updates beliefs with observed data, enhancing interpretability and decision-making in scientific research.

Hierarchical Modeling

Hierarchical modeling in Bayesian statistics allows for the incorporation of multiple levels of variability and prior information, enhancing parameter estimation in complex data structures. Traditional statistics often rely on fixed effects and separate estimates, limiting flexibility in capturing nested or grouped data patterns.

P-value Hacking

P-value hacking in traditional statistics involves manipulating data or testing multiple hypotheses to achieve statistically significant P-values, undermining the reliability of results. Bayesian statistics mitigates this issue by incorporating prior distributions and updating beliefs with observed data, providing more robust probabilistic interpretations less prone to false positives.

Empirical Bayes

Empirical Bayes methods combine traditional frequentist approaches with Bayesian inference by estimating prior distributions directly from observed data, improving parameter estimation in hierarchical models. This approach leverages large datasets to refine prior assumptions, enhancing model accuracy compared to classical statistics and fully Bayesian techniques that require predefined priors.

Markov Chain Monte Carlo (MCMC)

Markov Chain Monte Carlo (MCMC) methods revolutionize Bayesian statistics by enabling the approximation of complex posterior distributions through random sampling, contrasting with traditional statistics that often rely on closed-form solutions or asymptotic approximations. The iterative nature of MCMC algorithms allows for flexible modeling of high-dimensional parameter spaces, facilitating robust inference and uncertainty quantification beyond the capabilities of classical frequentist approaches.

Traditional Statistics vs Bayesian Statistics Infographic

industrydif.com

industrydif.com