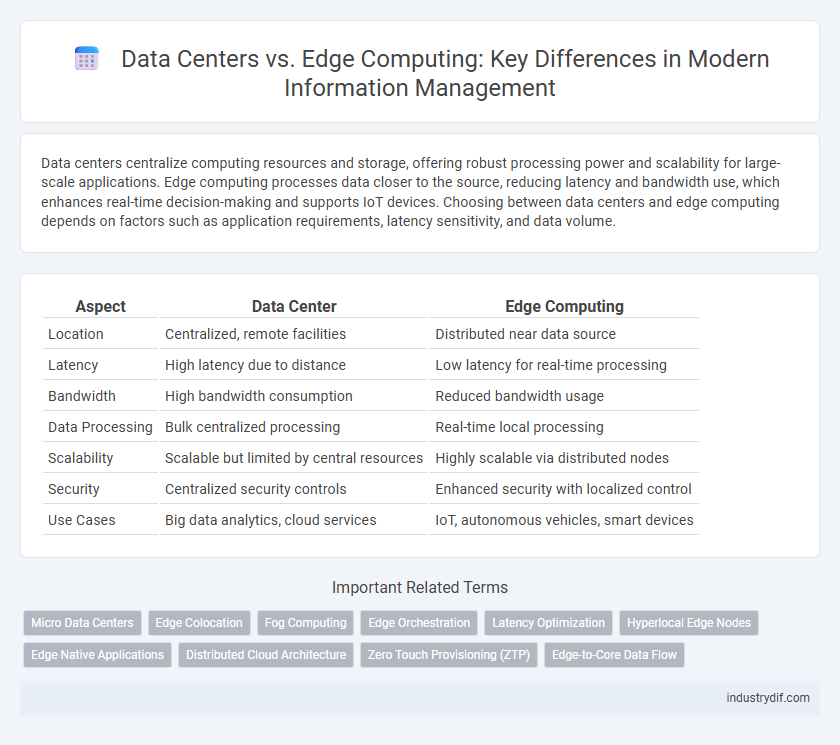

Data centers centralize computing resources and storage, offering robust processing power and scalability for large-scale applications. Edge computing processes data closer to the source, reducing latency and bandwidth use, which enhances real-time decision-making and supports IoT devices. Choosing between data centers and edge computing depends on factors such as application requirements, latency sensitivity, and data volume.

Table of Comparison

| Aspect | Data Center | Edge Computing |
|---|---|---|
| Location | Centralized, often remote facilities | Distributed near the data source |
| Latency | Higher, because data travels farther | Low, suited to real-time processing |
| Bandwidth | High, since raw data is sent to the facility | Lower, since data is filtered locally |
| Data Processing | Bulk, centralized processing | Real-time, local processing |
| Scalability | Scalable, but bounded by central facility capacity | Scales out by adding distributed nodes |
| Security | Centralized, uniform security controls | Data can stay local, but many sites widen the attack surface |
| Use Cases | Big data analytics, cloud services | IoT, autonomous vehicles, smart devices |

Understanding Data Centers: Core Concepts

Data centers are centralized facilities housing servers, storage systems, and networking equipment designed for large-scale data processing and storage. They provide critical infrastructure for cloud services, enterprise applications, and high-performance computing by ensuring redundancy, scalability, and robust security measures. To handle massive workloads reliably, they depend on optimized cooling, power management, and physical security, which together maintain uptime and data integrity.

What is Edge Computing? Key Definitions

Edge computing refers to a distributed computing paradigm that processes data near the source of data generation rather than relying exclusively on centralized data centers. It minimizes latency, reduces bandwidth usage, and enhances real-time data analysis by bringing computation and storage closer to IoT devices, sensors, and end users. Key definitions within edge computing include edge nodes, which perform localized processing, and edge devices, which generate and sometimes pre-process data before sending relevant information to core data centers.

Data Center vs Edge Computing: Fundamental Differences

Data centers centralize massive computational power and storage in secure, large-scale facilities, optimizing efficiency and resource management for extensive data processing tasks. Edge computing distributes processing closer to data sources, reducing latency and bandwidth use by handling real-time data on local devices or edge servers. The fundamental difference lies in their architecture: centralized infrastructure in data centers versus decentralized nodes in edge computing, shaping their applications and performance dynamics.

Performance Comparison: Latency and Speed

Edge computing significantly reduces latency by processing data closer to the source, enabling real-time analytics and faster response times critical for applications like autonomous vehicles and IoT devices. In contrast, traditional data centers, while offering substantial computing power, often experience higher latency due to data transmission over longer distances to centralized servers. The speed advantage of edge computing is evident in scenarios demanding immediate data processing, whereas data centers excel in handling large-scale, compute-intensive tasks with robust infrastructure.
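The distance effect can be put into rough numbers. The sketch below is a back-of-the-envelope calculation, assuming signals in optical fiber travel at about two-thirds of the speed of light in vacuum (roughly 5 microseconds per kilometer, one way). It ignores routing, queuing, and processing delays, so real round trips are longer, and the distances in the example are hypothetical.

```python
# Speed of light in vacuum, in kilometers per second.
SPEED_OF_LIGHT_KM_S = 299_792.458
# Assumption: light in optical fiber travels at about 2/3 of c
# (a refractive index of roughly 1.5).
FIBER_VELOCITY_FACTOR = 2 / 3


def propagation_rtt_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay over fiber, in milliseconds.

    Only covers the time light spends in the cable; routing, queuing,
    and processing delays are ignored, so measured RTTs will be higher.
    """
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_s * 1000


# Hypothetical distances from a device to where its data is processed.
for label, km in [("edge node", 10), ("regional DC", 500), ("distant DC", 3000)]:
    print(f"{label:>12}: {propagation_rtt_ms(km):6.2f} ms")
```

Even before any processing happens, a data center 3,000 km away adds about 30 ms per round trip under these assumptions, while an edge node 10 km away adds about 0.1 ms.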

Scalability and Flexibility Considerations

Data centers scale centrally, drawing on vast pooled resources and robust infrastructure to absorb growing workloads, while edge computing adds flexibility by placing processing near the data source. Scalability in data centers relies on expanding physical hardware and network capacity, whereas edge computing scales horizontally by adding distributed devices tailored to specific geographical locations. Combining both approaches lets organizations optimize performance and adaptability across diverse operational environments.

Security Challenges in Data Centers and Edge Computing

Data centers face significant security challenges: concentrating many systems in one facility makes it a high-value target where a single breach or outage can affect many services, its extensive networked footprint presents a large attack surface, and it requires robust physical and network security measures. Edge computing introduces unique security concerns, including data privacy issues, distributed attack vectors, and difficulties in maintaining consistent security policies across diverse and remote devices. Effective cybersecurity strategies must address the complexity of securing both centralized data centers and decentralized edge nodes to protect sensitive information and ensure system integrity.
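Keeping security policy consistent across many remote nodes is partly a bookkeeping problem: each node's settings have to be compared against an organization-wide baseline. The following is a minimal drift-check sketch. The `NodeConfig` type, the setting names, and the baseline values are hypothetical, and a real deployment would collect these snapshots through its own management tooling.

```python
from dataclasses import dataclass, field


@dataclass
class NodeConfig:
    """Hypothetical snapshot of one edge node's security-relevant settings."""
    node_id: str
    settings: dict[str, object] = field(default_factory=dict)


# Hypothetical organization-wide baseline; keys and values are illustrative.
BASELINE = {
    "disk_encryption": True,
    "tls_min_version": "1.2",
    "ssh_password_auth": False,
}


def find_drift(nodes: list[NodeConfig], baseline: dict[str, object]) -> dict[str, list[str]]:
    """Map each non-compliant node ID to the baseline keys it misses or violates."""
    drift: dict[str, list[str]] = {}
    for node in nodes:
        # A missing key reads as None, so it is reported as drift too.
        mismatched = [
            key for key, expected in baseline.items()
            if node.settings.get(key) != expected
        ]
        if mismatched:
            drift[node.node_id] = mismatched
    return drift


nodes = [
    NodeConfig("store-01", dict(BASELINE)),
    NodeConfig("store-02", {**BASELINE, "ssh_password_auth": True}),
    NodeConfig("tower-07", {"disk_encryption": True}),
]
print(find_drift(nodes, BASELINE))
# {'store-02': ['ssh_password_auth'], 'tower-07': ['tls_min_version', 'ssh_password_auth']}
```

A centralized facility can often enforce such a baseline in one place; with hundreds of edge sites, a periodic comparison like this is what reveals which nodes have fallen out of line.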

Cost Efficiency: Centralized vs Distributed Models

Data centers leverage centralized infrastructure to reduce operational costs through economies of scale and optimized resource allocation. Edge computing distributes processing closer to data sources, cutting latency and bandwidth expenses but often increasing hardware and maintenance costs across numerous locations. Balancing centralized data center efficiencies with the localized benefits of edge computing requires evaluating specific workload demands and cost trade-offs.
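Whether the trade-off favors the edge depends on the numbers. The sketch below uses a deliberately simple, hypothetical cost model that captures only the two effects named above: centralized sites pay to transfer all raw data, while edge sites transfer only a fraction of it but carry a fixed monthly hardware and maintenance cost each. All figures in the example are placeholders, not market prices.

```python
def monthly_costs(
    raw_gb_per_site: float,
    sites: int,
    transfer_cost_per_gb: float,
    edge_reduction: float,
    edge_cost_per_site: float,
) -> tuple[float, float]:
    """Return (centralized, edge) monthly cost under a hypothetical model.

    edge_reduction is the fraction of raw data the edge node filters out
    locally (0.9 means only 10% is forwarded to the data center).
    """
    centralized = sites * raw_gb_per_site * transfer_cost_per_gb
    forwarded_gb = raw_gb_per_site * (1 - edge_reduction)
    edge = sites * (forwarded_gb * transfer_cost_per_gb + edge_cost_per_site)
    return centralized, edge


def break_even_site_cost(
    raw_gb_per_site: float, transfer_cost_per_gb: float, edge_reduction: float
) -> float:
    """Per-site edge cost at which both models cost the same each month."""
    return raw_gb_per_site * edge_reduction * transfer_cost_per_gb


# Placeholder inputs: 50 sites, 2,000 GB of raw data each per month.
central, edge = monthly_costs(2000, 50, 0.05, 0.9, 300)
print(f"centralized: {central:.0f}, edge: {edge:.0f}")
print(f"edge pays off below {break_even_site_cost(2000, 0.05, 0.9):.0f} per site")
```

With these placeholder inputs the edge model costs more, because each site's fixed cost (300) exceeds the transfer cost it saves (90). Cheaper edge hardware or larger raw data volumes would flip the result, which is why the evaluation has to be done per workload.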

Use Cases for Data Center and Edge Architectures

Data centers excel in handling large-scale data processing, centralized storage, and complex analytics, making them ideal for cloud computing, big data analysis, and enterprise resource management. Edge computing architectures are optimized for low-latency applications such as IoT device management, real-time video processing, and autonomous vehicle navigation, where immediate data processing near the source is critical. Combining both architectures supports hybrid models that leverage centralized power with localized responsiveness to enhance overall system efficiency and scalability.

Integration Strategies for Hybrid Environments

Hybrid environments integrate data centers with edge computing by reserving centralized data centers for heavy processing while using edge nodes for low-latency, real-time analytics close to data sources. Orchestration tools and well-defined APIs keep data synchronized and distribute workloads across core and edge infrastructure. Prioritizing security protocols and scalable network architectures makes hybrid deployments efficient, improving performance and resource utilization.
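The division of labor in a hybrid environment can be expressed as a placement rule. The sketch below is one hypothetical policy, not a feature of any particular orchestration tool: a workload runs in the central data center by default and moves to the edge only when its latency budget is tighter than the round trip to the core. The `Workload` fields, the 40 ms core round trip, and the 8-core edge limit are assumed values.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    EDGE = "edge"
    CORE = "core"


@dataclass
class Workload:
    name: str
    max_latency_ms: float  # latency budget the workload must meet
    cpu_cores: int         # compute the workload needs


# Hypothetical environment figures; real values would come from measurement.
CORE_RTT_MS = 40.0   # round trip from devices to the central data center
EDGE_MAX_CORES = 8   # largest workload a single edge node can host


def place(workload: Workload) -> Tier:
    """Run in the core unless only the edge can meet the latency budget."""
    if workload.max_latency_ms < CORE_RTT_MS:
        if workload.cpu_cores > EDGE_MAX_CORES:
            raise ValueError(f"{workload.name}: needs edge latency but exceeds edge capacity")
        return Tier.EDGE
    return Tier.CORE


for w in [Workload("defect-detection", 20, 4), Workload("monthly-reporting", 60_000, 64)]:
    print(w.name, "->", place(w).value)
```

Defaulting to the core reflects the economies of scale of centralized infrastructure; the edge is reserved for work that cannot tolerate the extra round trip.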

Future Trends in Data Center and Edge Computing

Future trends in data center and edge computing emphasize hybrid architectures combining centralized cloud resources with distributed edge nodes to optimize latency and bandwidth. Advances in AI-driven management and automation enhance efficiency, while increased adoption of 5G and IoT devices drives demand for edge computing capacity. Security innovations like zero-trust models and enhanced encryption protocols become critical as data processing shifts closer to end users.

Related Important Terms

Micro Data Centers

Micro data centers offer localized computing power and storage, reducing latency and bandwidth usage compared to traditional centralized data centers. These compact units enable efficient edge computing by processing data closer to source devices, enhancing real-time analytics and IoT applications.

Edge Colocation

Edge colocation optimizes data processing by situating servers closer to end-users, reducing latency compared to traditional centralized data centers. This approach enhances real-time data analytics, supports IoT applications, and improves network efficiency through localized computing resources.

Fog Computing

Fog computing extends cloud capabilities toward the network edge by processing data in an intermediate layer of nodes, such as gateways and local servers, that sits between end devices and centralized data centers, reducing latency and bandwidth use. It enhances real-time analytics and supports distributed IoT applications by bridging the gap between data center cloud infrastructure and edge devices.

Edge Orchestration

Edge orchestration optimizes data processing by managing workloads across distributed edge nodes, reducing latency and bandwidth use compared to centralized data centers. This approach enables real-time analytics and seamless application deployment closer to data sources, enhancing operational efficiency and scalability.
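A very small version of this workload management is a scheduler that spreads workloads over edge nodes according to free capacity. The greedy sketch below is an illustrative assumption; production orchestrators weigh many more factors when choosing a node. It places the largest workloads first, each on the node with the most free capacity, and fails loudly when nothing fits. Node names, capacities, and demand units are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EdgeNode:
    """Hypothetical edge node with capacity in abstract compute units."""
    name: str
    capacity: int
    used: int = 0

    @property
    def free(self) -> int:
        return self.capacity - self.used


def schedule(demands: dict[str, int], nodes: list[EdgeNode]) -> dict[str, str]:
    """Greedily assign each workload to the edge node with the most free capacity.

    Workloads are sorted largest first so big ones are not stranded after
    small ones fragment the free space. Raises RuntimeError if one cannot fit.
    """
    placement: dict[str, str] = {}
    for workload, demand in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        target = max(nodes, key=lambda n: n.free)
        # If the node with the most free capacity cannot fit it, no node can.
        if target.free < demand:
            raise RuntimeError(f"no edge node can fit {workload} ({demand} units)")
        target.used += demand
        placement[workload] = target.name
    return placement


nodes = [EdgeNode("edge-a", capacity=10), EdgeNode("edge-b", capacity=6)]
print(schedule({"cam-1": 4, "cam-2": 3, "sensor-agg": 5}, nodes))
# {'sensor-agg': 'edge-a', 'cam-1': 'edge-b', 'cam-2': 'edge-a'}
```

Picking the least-loaded node keeps headroom spread across sites, which matters at the edge because a single node usually cannot borrow capacity from a neighbor.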

Latency Optimization

Data centers offer centralized processing with higher latency due to physical distance, while edge computing minimizes latency by processing data closer to the source, enabling real-time analytics and faster response times. Optimizing latency through edge computing is critical for applications like autonomous vehicles, IoT devices, and augmented reality that demand immediate data processing.

Hyperlocal Edge Nodes

Hyperlocal edge nodes process data close to the source, reducing latency and bandwidth usage compared to traditional data centers that centralize computing resources. This localized approach enhances real-time analytics and supports applications like IoT and autonomous systems by delivering faster, context-aware processing.

Edge Native Applications

Edge native applications leverage localized data processing capabilities to reduce latency and enhance real-time analytics, enabling efficient handling of IoT devices and sensor data. Unlike traditional data centers, edge computing distributes computing power closer to data sources, optimizing bandwidth use and improving response times for critical applications.

Distributed Cloud Architecture

Distributed cloud architecture extends traditional data center capabilities by decentralizing computing resources closer to edge locations, reducing latency and improving real-time data processing. This model enhances scalability and resilience by integrating edge computing nodes with centralized data centers, optimizing workload distribution across a global network.

Zero Touch Provisioning (ZTP)

Zero Touch Provisioning (ZTP) streamlines device onboarding and configuration in both data center and edge computing environments by automating setup, reducing manual intervention and deployment time. Edge computing benefits especially from ZTP, because it enables rapid, remote provisioning of devices at geographically dispersed locations, improving scalability and operational efficiency.
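The core idea of ZTP is that a new device only has to identify itself; everything else comes from a central provisioning service. The sketch below models that lookup step under stated assumptions: the inventory, serial numbers, role templates, and configuration syntax are all hypothetical, and the network bootstrap that real ZTP implementations handle (for example, how the device learns where the provisioning service is) is omitted.

```python
from string import Template


class UnregisteredDeviceError(Exception):
    """Raised when a device that was never registered asks for a configuration."""


# Hypothetical inventory, filled in before devices ship to their sites.
INVENTORY = {
    "SN-1001": {"role": "gateway", "site": "store-17"},
    "SN-1002": {"role": "camera-hub", "site": "store-17"},
}

# Hypothetical per-role templates; the syntax is illustrative, not a vendor's.
TEMPLATES = {
    "gateway": Template("hostname $site-gw\nuplink vpn-to-core\n"),
    "camera-hub": Template("hostname $site-cam\nforward-to $site-gw\n"),
}


def provision(serial: str) -> str:
    """Render the configuration a freshly booted device should apply."""
    record = INVENTORY.get(serial)
    if record is None:
        # Unknown hardware is refused instead of receiving a default config.
        raise UnregisteredDeviceError(serial)
    return TEMPLATES[record["role"]].substitute(site=record["site"])


print(provision("SN-1002"))
```

Because the same lookup serves every site, adding a location means adding inventory records rather than dispatching a technician, which is where the scalability gain for edge deployments comes from.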

Edge-to-Core Data Flow

Edge computing processes data locally near the source, reducing latency and bandwidth usage by filtering and analyzing information before sending critical insights to centralized data centers. This edge-to-core data flow optimizes real-time decision-making and enhances overall system efficiency by minimizing the volume of raw data transmitted for further processing.
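The filtering step at the edge can be as simple as reducing a window of raw readings to a few aggregates plus any out-of-range values. The sketch below assumes numeric sensor readings, a non-empty window, and a hypothetical alert threshold; which aggregates to keep is an application decision.

```python
import json
from statistics import mean


def summarize_window(readings: list[float], threshold: float) -> dict:
    """Condense one non-empty window of raw readings into what the core needs.

    Raw values stay on the edge node; only aggregates and readings above
    the (hypothetical) alert threshold are forwarded.
    """
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        "anomalies": [r for r in readings if r > threshold],
    }


# Hypothetical window: 600 temperature readings with one spike.
window = [20.0 + (i % 5) * 0.5 for i in range(600)]
window[300] = 41.5
summary = summarize_window(window, threshold=30.0)
raw_bytes = len(json.dumps(window))
summary_bytes = len(json.dumps(summary))
print(f"raw payload: {raw_bytes} bytes, forwarded summary: {summary_bytes} bytes")
```

If many readings cross the threshold, the anomaly list grows with them, so a real design might cap or sample it to keep the forwarded payload small.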

Data Center vs Edge Computing Infographic

industrydif.com
