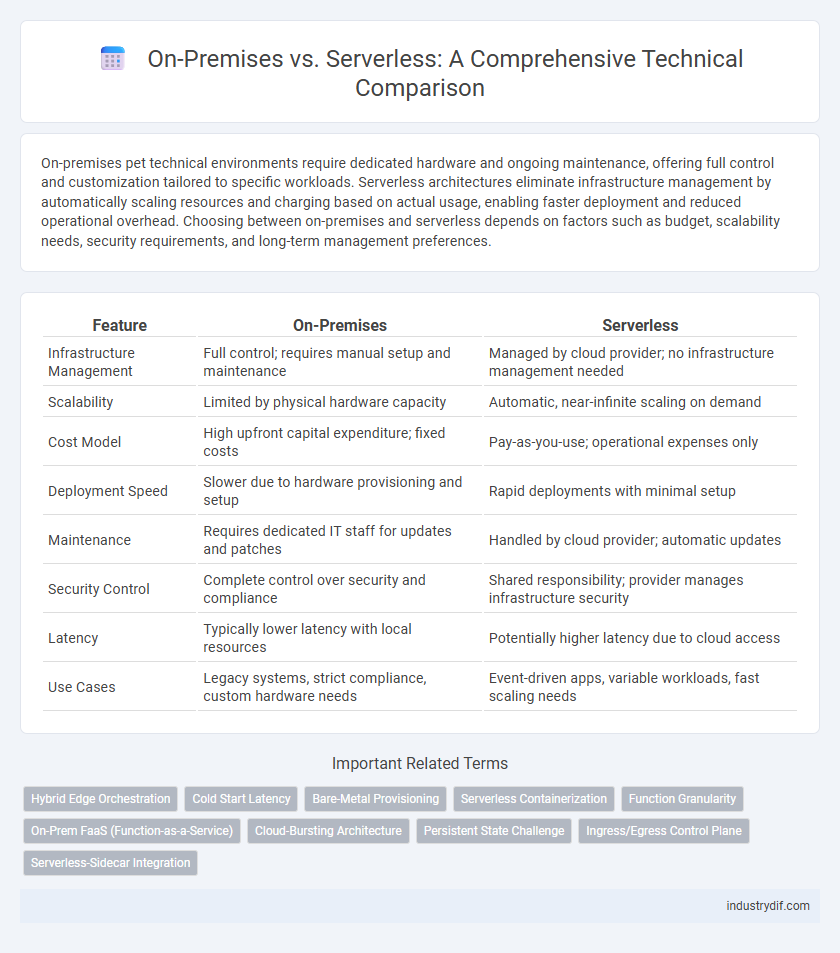

On-premises technical environments require dedicated hardware and ongoing maintenance, but offer full control and customization tailored to specific workloads. Serverless architectures eliminate infrastructure management by scaling resources automatically and charging for actual usage, enabling faster deployment and lower operational overhead. Choosing between on-premises and serverless depends on factors such as budget, scalability needs, security requirements, and long-term management preferences.

Table of Comparison

| Feature | On-Premises | Serverless |
|---|---|---|
| Infrastructure Management | Full control; requires manual setup and maintenance | Managed by the cloud provider; no server management needed |
| Scalability | Limited by physical hardware capacity | Automatic scaling on demand, up to provider concurrency limits |
| Cost Model | High upfront capital expenditure; largely fixed costs | Pay-per-use; operational expenses only |
| Deployment Speed | Slower due to hardware provisioning and setup | Rapid deployment with minimal setup |
| Maintenance | Requires dedicated IT staff for updates and patches | Handled by the cloud provider, with automatic platform updates |
| Security Control | Complete control over security and compliance | Shared responsibility; the provider secures the underlying infrastructure |
| Latency | Typically lower with local resources | Potentially higher due to network distance and cold starts |
| Use Cases | Legacy systems, strict compliance, custom hardware needs | Event-driven apps, variable workloads, fast scaling needs |

Defining On-Premises Architecture

On-premises architecture refers to hosting IT infrastructure and applications within a company's own data centers, ensuring full control over hardware and security. This model requires significant upfront investment in physical servers, networking equipment, and maintenance personnel. On-premises deployments enable customization and compliance alignment but may limit scalability and increase operational complexity compared to cloud-based solutions.

Understanding Serverless Computing

Serverless computing removes the need to manage servers by delegating infrastructure tasks to cloud providers, allowing developers to focus on application code. It scales automatically with demand, which improves resource utilization and can reduce operational costs compared to traditional on-premises setups. Services such as AWS Lambda, Azure Functions, and Google Cloud Functions exemplify this model, running event-driven, stateless functions without dedicated server maintenance.
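To make the event-driven, stateless model concrete, here is a minimal sketch of a function written in the AWS Lambda Python handler style, which receives an `event` and a `context` object and returns an HTTP-style response dictionary. The event shape (`order_id` plus `items` with `price` and `qty`) is a hypothetical example, not a format any provider defines.

```python
import json


def lambda_handler(event, context):
    """Handle one event in the AWS Lambda Python handler style (event, context).

    Nothing is kept between calls: everything the function needs arrives in
    the event. The event shape (order_id, items with price and qty) is a
    hypothetical example.
    """
    items = event.get("items", [])
    # Derive the result purely from the incoming event.
    total = sum(item["price"] * item["qty"] for item in items)
    return {
        "statusCode": 200,
        "body": json.dumps({"order_id": event.get("order_id"), "total": total}),
    }


if __name__ == "__main__":
    # Local call for illustration; in production the platform supplies
    # the event and the context object.
    sample = {"order_id": "A-1", "items": [{"price": 3, "qty": 4}]}
    print(lambda_handler(sample, None))
```

Because the handler depends only on its input, the platform is free to run any number of copies in parallel or to discard an instance between calls.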

Infrastructure Management: Control vs Automation

On-premises infrastructure demands direct management of hardware, network configuration, and maintenance, offering granular control but requiring significant IT resources and expertise. Serverless computing automates infrastructure management, enabling developers to focus on code without handling server provisioning, scaling, or patching. This shift from control to automation reduces operational overhead and accelerates deployment cycles, improving agility and cost efficiency.

Deployment Complexity and Flexibility

On-premises deployment demands extensive hardware setup and ongoing maintenance, increasing complexity and limiting agility. Serverless architecture abstracts infrastructure management, enabling rapid scaling and deployment with minimal operational overhead. This flexibility allows developers to focus on code and innovation rather than environment configuration.

Scalability and Performance Considerations

On-premises infrastructure often requires significant upfront investment to scale hardware capacity, which limits how quickly it can respond to fluctuating workloads, whereas serverless architectures scale automatically with demand, without manual intervention. On-premises performance can be optimized with dedicated resources, but capacity may sit underutilized during quiet periods or become a bottleneck during peak loads. Serverless platforms draw on provider-managed resources that scale elastically, improving responsiveness for variable traffic patterns, although cold starts can add latency to requests served by newly provisioned instances.
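One rough way to compare the two scaling models is Little's law: the number of requests in flight equals the arrival rate multiplied by the average request duration. The sketch below applies it to a fixed on-premises pool and to an elastic pool capped by a concurrency quota. The slot counts and quota are hypothetical inputs, not figures from any provider.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_ms: float) -> int:
    """Little's law: requests in flight = arrival rate x average time in system."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


def compare(requests_per_second: float, avg_duration_ms: float,
            onprem_slots: int, serverless_limit: int) -> dict:
    """Compare a fixed on-premises pool with an elastic pool capped by a quota.

    onprem_slots and serverless_limit are hypothetical inputs: how many
    requests the owned servers can process at once, and the concurrency
    quota granted by a provider (real quotas vary by provider and account).
    """
    needed = required_concurrency(requests_per_second, avg_duration_ms)
    return {
        "needed": needed,
        # Work beyond owned capacity must queue or be rejected.
        "onprem_shortfall": max(0, needed - onprem_slots),
        # Owned capacity that sits idle but is still paid for.
        "onprem_idle": max(0, onprem_slots - needed),
        # Elastic capacity only falls short once the quota is reached.
        "serverless_shortfall": max(0, needed - serverless_limit),
    }


# Baseline and peak traffic, both at 200 ms per request.
print(compare(100, 200, onprem_slots=50, serverless_limit=1000))
print(compare(2000, 200, onprem_slots=50, serverless_limit=1000))
```

At baseline the owned pool is mostly idle; at peak it falls short while the elastic pool absorbs the load. The model deliberately ignores cold starts and how fast a platform can add instances, both of which matter in practice.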

Security and Compliance Implications

On-premises environments offer direct control over physical security and data residency, enabling organizations to meet strict regulatory requirements such as GDPR and HIPAA through tailored infrastructure policies. Serverless architectures introduce abstraction layers that can complicate compliance efforts, requiring reliance on cloud provider certifications such as SOC 2 and ISO 27001 for data protection and regulatory adherence. Robust identity and access management (IAM) and encryption are critical in both models to mitigate the risk of unauthorized access and data breaches.
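In serverless deployments, much of the security posture lives in IAM policies attached to functions. The sketch below is a hypothetical least-privilege lint that scans an AWS-style JSON policy (as a Python dict) for `Allow` statements with wildcard actions or resources. It is an illustration only, not an AWS tool, and it ignores `NotAction`, `NotResource`, and `Condition` elements.

```python
def _as_list(value):
    """IAM accepts a single string or a list for Action and Resource."""
    return [value] if isinstance(value, str) else list(value)


def find_broad_grants(policy: dict) -> list:
    """Flag Allow statements with wildcard actions ("*" or "service:*") or all resources.

    A simplified, hypothetical least-privilege check: it ignores NotAction,
    NotResource, and Condition elements, which a real review must consider.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be written as an object
        statements = [statements]
    findings = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: broad action {action!r}")
        for resource in _as_list(stmt.get("Resource", [])):
            if resource == "*":
                findings.append(f"statement {i}: applies to all resources")
    return findings


# Hypothetical function policy; the bucket name is a placeholder.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Effect": "Allow", "Action": ["dynamodb:*"], "Resource": "*"},
    ],
}
for finding in find_broad_grants(policy):
    print(finding)
```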

Cost Structure Comparison

On-premises solutions require significant upfront capital investment in hardware, software licenses, and ongoing maintenance costs, leading to predictable but often higher fixed expenses. Serverless computing operates on a pay-as-you-go model, where costs scale directly with usage, eliminating expenses related to idle resources and infrastructure management. The variable cost structure of serverless can lead to optimized budgeting and operational flexibility, particularly beneficial for workloads with fluctuating demand.
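The trade-off between fixed and variable costs can be made concrete with a break-even calculation. The sketch assumes a common pay-per-use shape (a per-request fee plus compute billed in GB-seconds) and compares it with a flat monthly on-premises cost. Every price and cost figure below is a hypothetical placeholder, not any provider's price list, and the model ignores free tiers, data transfer, and staffing differences.

```python
def serverless_monthly_cost(requests: int, avg_duration_s: float, memory_gb: float,
                            price_per_million_requests: float,
                            price_per_gb_second: float) -> float:
    """Pay-per-use cost: a per-request fee plus compute billed in GB-seconds."""
    gb_seconds = requests * avg_duration_s * memory_gb
    return (requests / 1_000_000) * price_per_million_requests + gb_seconds * price_per_gb_second


def break_even_requests(onprem_monthly_cost: float, avg_duration_s: float,
                        memory_gb: float, price_per_million_requests: float,
                        price_per_gb_second: float) -> float:
    """Monthly request volume at which pay-per-use matches a fixed on-prem cost."""
    cost_per_request = (price_per_million_requests / 1_000_000
                        + avg_duration_s * memory_gb * price_per_gb_second)
    return onprem_monthly_cost / cost_per_request


# All figures are hypothetical placeholders, not any provider's price list.
PRICES = dict(price_per_million_requests=0.25, price_per_gb_second=0.00002)
ONPREM_MONTHLY = 3000.0  # amortized hardware, power, and staff (assumed)

for monthly_requests in (10_000_000, 2_000_000_000):
    cost = serverless_monthly_cost(monthly_requests, 0.2, 0.5, **PRICES)
    print(f"{monthly_requests:>13,} requests: serverless ${cost:,.2f} vs on-prem ${ONPREM_MONTHLY:,.2f}")

print(f"break-even ~ {break_even_requests(ONPREM_MONTHLY, 0.2, 0.5, **PRICES):,.0f} requests/month")
```

Under these assumed numbers, pay-per-use is far cheaper at low volume, while sustained high volume eventually exceeds the fixed cost, which is why the benefit is greatest for fluctuating demand.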

Maintenance and Operational Overheads

On-premises infrastructure demands continuous hardware upkeep, software updates, and dedicated IT staff to manage operational complexities, leading to higher maintenance costs. Serverless architectures shift maintenance responsibilities to cloud providers, significantly reducing operational overhead and minimizing downtime risks. This model enables teams to focus on application development rather than infrastructure management, optimizing resource allocation and efficiency.

Use Cases: Best Fit Scenarios

On-premises solutions excel in scenarios requiring stringent data control, low latency, and compliance with regulatory standards, making them ideal for industries like finance and healthcare. Serverless architectures are best suited for event-driven applications, rapid scaling needs, and unpredictable workloads, often employed in startups and SaaS products. Hybrid models combine on-premises and serverless benefits, supporting legacy system integration alongside cloud-native innovation.

Future Trends in Enterprise Infrastructure

Enterprise infrastructure is rapidly shifting towards hybrid models that integrate on-premises systems with serverless computing to enhance scalability and reduce operational costs. Advances in edge computing and AI-driven automation are expected to drive serverless adoption while maintaining on-premises solutions for critical data sovereignty and latency requirements. Emerging trends include multi-cloud serverless architectures combined with container orchestration frameworks, enabling enterprises to optimize workload distribution and improve resilience.

Related Important Terms

Hybrid Edge Orchestration

Hybrid edge orchestration leverages both on-premises infrastructure and serverless computing to optimize workload distribution, reduce latency, and enhance data processing at the network edge. This approach enables seamless integration of local resources with cloud services, improving scalability and maintaining compliance with data residency requirements.
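A placement decision of this kind can be sketched as a small policy function. The version below assumes each workload declares a latency budget and whether its data must stay on local infrastructure, and each site reports an expected latency. The site names, numbers, and the preference for serverless capacity are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    on_premises: bool           # local edge or data-center capacity
    expected_latency_ms: float  # measured or estimated round trip


@dataclass(frozen=True)
class Workload:
    name: str
    latency_budget_ms: float
    residency_required: bool    # data must not leave local infrastructure


def place(workload: Workload, sites: list) -> Site:
    """Choose a site for a workload under a hypothetical hybrid policy."""
    eligible = [
        s for s in sites
        if s.expected_latency_ms <= workload.latency_budget_ms
        and (s.on_premises or not workload.residency_required)
    ]
    if not eligible:
        raise ValueError(f"no site satisfies the constraints of {workload.name!r}")
    # Assumed tie-break: prefer serverless (off-premises) capacity to keep local
    # resources free, then the lowest expected latency.
    return min(eligible, key=lambda s: (s.on_premises, s.expected_latency_ms))


SITES = [Site("edge-rack", True, 5), Site("cloud-functions", False, 40)]
for w in (Workload("sensor-alerts", 10, False),
          Workload("patient-records", 200, True),
          Workload("report-export", 500, False)):
    print(f"{w.name} -> {place(w, SITES).name}")
```

Tight latency budgets and residency rules pin work to local infrastructure; everything else can flow to serverless capacity.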

Cold Start Latency

On-premises architectures typically experience little or no cold start latency because resources are allocated persistently, enabling immediate response times. Serverless platforms often incur higher cold start latency caused by on-demand resource provisioning and container initialization, which can hurt performance in latency-sensitive applications.
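A common way to limit the cost of cold starts is to do expensive setup once per instance, in module-level state, so that warm invocations reuse it. The sketch below simulates this locally; `_expensive_init` and its 50 ms delay are hypothetical stand-ins, and on a real platform the cached state survives only while the provider keeps that instance warm.

```python
import time

_client = None     # survives between calls only while this instance stays warm
INIT_COUNT = 0     # how many times the expensive setup has run


def _expensive_init():
    """Stand-in for loading configuration, opening connections, or large imports."""
    global INIT_COUNT
    INIT_COUNT += 1
    time.sleep(0.05)  # simulated setup cost paid on a cold start
    return {"ready": True}


def handler(event, context=None):
    global _client
    cold = _client is None
    if cold:
        _client = _expensive_init()  # paid once per instance, not per request
    return {"cold_start": cold}


for label in ("first", "second"):
    start = time.perf_counter()
    result = handler({})
    elapsed_ms = (time.perf_counter() - start) * 1000
    print(f"{label} call: {result}, {elapsed_ms:.1f} ms")
```

The first call pays the setup cost; the second reuses the cached state and returns almost immediately.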

Bare-Metal Provisioning

Bare-metal provisioning in on-premises environments offers direct hardware control, allowing for customized configurations and optimized performance critical for latency-sensitive applications. Serverless architectures abstract hardware management entirely, eliminating the need for bare-metal provisioning but potentially introducing overhead that can impact fine-tuned resource allocation and predictable performance.

Serverless Containerization

Serverless containerization allows developers to deploy and manage containerized applications without provisioning or managing underlying infrastructure, enhancing scalability and reducing operational overhead. Popular platforms like AWS Fargate and Google Cloud Run provide automatic scaling, seamless integration with CI/CD pipelines, and efficient resource utilization for serverless container workloads.
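Serverless container platforms generally expect the container to run an HTTP server on a port the platform chooses; Cloud Run, for example, passes it in the `PORT` environment variable. The sketch below is a minimal standard-library server following that contract. The response text is a placeholder, and the container's start command is assumed to call `main()`.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = b"hello from a serverless container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int) -> HTTPServer:
    # Listen on all interfaces so the platform's front end can reach the container.
    return HTTPServer(("0.0.0.0", port), Handler)


def main():
    # Cloud Run passes the port to listen on in the PORT environment variable;
    # 8080 is used here as a local fallback.
    port = int(os.environ.get("PORT", "8080"))
    make_server(port).serve_forever()
```

Keeping the server stateless and port-agnostic is what lets the platform start, stop, and replicate the container freely.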

Function Granularity

On-premises environments often favor larger, monolithic functions because of fixed infrastructure and resource constraints, whereas serverless architectures enable fine-grained, event-driven functions that scale independently. This granular design can improve resource utilization and deployment agility compared to traditional on-premises solutions, although chaining many small functions adds network hops that can increase end-to-end latency.
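Fine-grained design means each event type is handled by one small, single-purpose function. The sketch below simulates that wiring in a single process: a dictionary stands in for the platform's trigger configuration, and on a real platform each handler would be deployed and scaled separately. The event types and handlers are hypothetical.

```python
from typing import Callable, Dict

# Stand-in for the platform's trigger configuration: each event type is wired
# to one small function that, on a real platform, is deployed and scaled separately.
HANDLERS: Dict[str, Callable[[dict], dict]] = {}


def subscribe(event_type: str):
    """Register a single-purpose function for one (hypothetical) event type."""
    def register(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        HANDLERS[event_type] = fn
        return fn
    return register


@subscribe("image.uploaded")
def make_thumbnail(event: dict) -> dict:
    return {"thumbnail_for": event["key"]}


@subscribe("order.placed")
def send_receipt(event: dict) -> dict:
    return {"receipt_to": event["email"]}


def dispatch(event: dict) -> dict:
    """Route an event to the one function subscribed to its type."""
    fn = HANDLERS.get(event.get("type"))
    if fn is None:
        raise ValueError(f"no function subscribed to {event.get('type')!r}")
    return fn(event)


print(dispatch({"type": "image.uploaded", "key": "cat.png"}))
print(dispatch({"type": "order.placed", "email": "a@example.com"}))
```

A monolith would place both branches behind one entry point; splitting them lets a burst of uploads scale thumbnail work without scaling receipt handling.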

On-Prem FaaS (Function-as-a-Service)

On-premises Function-as-a-Service (FaaS) solutions enable enterprises to run serverless applications within their own data centers, offering enhanced control over data security, compliance, and latency compared to public cloud alternatives. These systems leverage local infrastructure to execute event-driven functions, reducing dependency on external cloud services while maintaining scalability and integration with existing on-prem resources.

Cloud-Bursting Architecture

Cloud-bursting architecture uses on-premises infrastructure for baseline workloads and dynamically offloads excess demand to serverless cloud environments, optimizing scalability and cost efficiency. This hybrid approach provides resource elasticity by integrating private data centers with public cloud serverless functions, maintaining availability during peak usage while baseline traffic stays on local infrastructure.
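The core bursting rule (fill owned capacity first, send only the overflow to serverless) fits in a few lines. This sketch assumes demand is expressed in abstract units per interval and that the serverless pool can absorb any overflow; the hourly figures are hypothetical.

```python
def burst_plan(demand: list, onprem_capacity: int) -> list:
    """Split each interval's demand between on-prem and serverless.

    demand: units of work per interval (hypothetical units such as concurrent
    jobs). On-prem handles up to onprem_capacity; any excess bursts to a
    serverless pool, assumed here to absorb it fully.
    """
    if onprem_capacity < 0:
        raise ValueError("capacity must be non-negative")
    plan = []
    for d in demand:
        local = min(d, onprem_capacity)  # baseline stays on owned hardware
        plan.append((local, d - local))  # overflow bursts to serverless
    return plan


hourly = [40, 55, 120, 300, 80]
for hour, (local, burst) in enumerate(burst_plan(hourly, onprem_capacity=100)):
    print(f"hour {hour}: on-prem {local}, serverless {burst}")
```

Owned capacity is used fully before any pay-per-use capacity is consumed, so serverless charges accrue only during peaks.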

Persistent State Challenge

On-premises infrastructures offer direct control over persistent data storage, but require significant management overhead to maintain state consistency and durability. Serverless architectures simplify deployment and scaling by abstracting infrastructure management, yet they pose challenges in ensuring persistent state due to stateless function execution and reliance on external stateful services.
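The persistent-state problem shows up as soon as more than one function instance serves the same user. In this local simulation, each `FunctionInstance` keeps a private in-memory counter, while `KeyValueStore` is a hypothetical stand-in for an external managed database or cache shared by all instances.

```python
class KeyValueStore:
    """Hypothetical stand-in for an external store (managed database or cache).
    Here it is a plain dict shared by every function instance."""

    def __init__(self):
        self._data = {}

    def increment(self, key: str) -> int:
        self._data[key] = self._data.get(key, 0) + 1
        return self._data[key]


class FunctionInstance:
    """One short-lived execution environment. Its local memory is not shared
    with other instances and disappears when the instance is recycled."""

    def __init__(self, store: KeyValueStore):
        self.local_count = 0
        self.store = store

    def handle(self, user: str) -> tuple:
        self.local_count += 1                # visible only to this instance
        shared = self.store.increment(user)  # visible to every instance
        return self.local_count, shared


store = KeyValueStore()
a, b = FunctionInstance(store), FunctionInstance(store)
print(a.handle("alice"))
print(b.handle("alice"))
print(a.handle("alice"))
```

The in-memory count diverges across instances while the external store stays consistent. A real external store would also need an atomic increment or a transaction, since concurrent instances can race on read-modify-write.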

Ingress/Egress Control Plane

On-premises environments offer granular ingress and egress control plane configurations through physical network appliances and custom firewall rules, enabling precise traffic management and compliance enforcement. Serverless architectures rely on cloud provider-managed ingress and egress control planes, leveraging native security groups and API gateways to handle dynamic traffic routing with built-in scalability and reduced operational overhead.
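The ordered rule evaluation typical of on-premises firewalls can be sketched with Python's `ipaddress` module: rules are checked in order, the first match decides, and unmatched traffic falls through to a default deny. The rule set is hypothetical. Cloud controls often behave differently (for example, AWS security groups support only allow rules), so treat this as a model of on-premises firewall logic rather than of any provider's control plane.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str           # "allow" or "deny"
    direction: str        # "ingress" or "egress"
    cidr: str             # remote network the rule applies to
    port: Optional[int]   # None matches any port


def evaluate(rules, direction: str, remote_ip: str, port: int, default: str = "deny") -> str:
    """Return the action of the first matching rule, or the default if none match."""
    addr = ipaddress.ip_address(remote_ip)
    for rule in rules:
        if rule.direction != direction:
            continue
        if addr not in ipaddress.ip_network(rule.cidr):
            continue
        if rule.port is not None and rule.port != port:
            continue
        return rule.action
    return default


# Hypothetical policy; 203.0.113.0/24 and 198.51.100.0/24 are documentation ranges.
RULES = [
    Rule("allow", "ingress", "10.0.0.0/8", 443),     # internal clients over HTTPS
    Rule("deny", "egress", "203.0.113.0/24", None),  # block one external network
    Rule("allow", "egress", "0.0.0.0/0", 443),       # otherwise allow outbound HTTPS
]

print(evaluate(RULES, "ingress", "10.1.2.3", 443))
print(evaluate(RULES, "egress", "203.0.113.9", 443))
```

Rule order matters here: moving the broad egress allow above the deny would let traffic to the blocked network through.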

Serverless-Sidecar Integration

Serverless-sidecar integration enhances microservices architecture by offloading concerns such as security, logging, and monitoring to sidecar containers, keeping that logic out of the function code. This approach improves scalability and operational efficiency by decoupling infrastructure concerns from core application logic in cloud-native environments.

On-Premises vs Serverless Infographic

industrydif.com
